Introduction to Weekly Offenders

A bit of a light week…

Peter gives us a heavy dose of the REST API in PBCS after Kscope17.

Sibin has several posts this week. He starts off with a post about digging into the XML of an LCM export. He also tells us that UAC is very bad for a Hyperion install. Continuing the theme of his previous installation post, he also tells us about EPMA's dependency on IIS.

Rodrigo shows us how to install the ODI 12c Standalone Agent.

The Proactive Support Blog has a set of OBIEE patches this week:

Opal has a quick post on how to access the OAC log files.

Be On the Lookout!

That’s it for this week! Be on the lookout for more great EPM Blog content next week!

Brian Marshall

July 24, 2017

Introduction to Weekly Offenders

This week was a bit busier than last week. We even had a few more posts about Kscope17 this week. What's new this week?

Gary has a pair of posts this week. First, he started his own Oracle user group for the Detroit area (DOUG). He also dives head-first into the Essbase behind Oracle’s cloud ERP.

I continued my series focusing on Essbase Performance. This week we started talking about physical vs. virtual Essbase performance.

Sibin started off the week with a post about keeping your downstream systems in mind when making changes. He then has a post re-capping his series on Universal Data Access in FDMEE.

John Goodwin has an excellent post showing off the new clearcube functionality in EPM Automate and the REST API for PBCS. Finally…ASO clears can be executed via batch!

The Proactive Support Blog brings us news of just one on-prem patch this week. Oracle Hyperion Tax Provision 11.1.2.4.202 has been released. Perhaps this is exciting to someone?


Celvin had a busy week, starting off with a recap of Kscope17. He then takes a detour away from Oracle and focuses on Mondrian, an open-source ROLAP tool. Pretty neat. Finally, he also has a post about new PBCS features. The post focuses on the ability to actually get audit information out of PBCS. I recently mentioned in a demo that we had been waiting for this…now I can demo it!

Sarah has a pair of posts this week, starting with a post on understanding candlestick graphs. She continues on this theme and also tells us how to read and understand box plots.

Tim Tow’s Hyperion Blog

Tim tells us how to get TLS 1.2 working on Java 1.6 (the version that ships with Oracle’s EPM stack). Pretty interesting. For those of you wondering what TLS 1.2 is…it’s essentially the successor to SSL.

Opal is back this week with a pair of posts. First she has a quick tip on restarting your Essbase Cloud instance. She finishes out our week in review with her re-cap of Kscope17.

Be On the Lookout!

That’s it for this week! Be on the lookout for more great EPM Blog content next week!

Brian Marshall

July 17, 2017

Welcome to the seventh part in an on-going blog series about Essbase performance. Today we will focus on Essbase Physical vs. Virtual Performance, but first here’s a summary of the series so far:

I started sharing actual Essbase benchmark results in part six of this series, focusing on Hyper-Threading. Today I’ll shift gears away from physical Essbase testing and focus on virtualized Essbase.

Testing Configuration

All testing is performed on a dedicated benchmarking server. This server changes software configurations between Windows and ESXi, but the hardware configuration is static. Here is the physical configuration of the server:

I partitioned each of the storage devices into equal halves: one half for the Windows installation on the physical hardware and one half for the VMware ESXi installation. Here is the virtual configuration of the server:

| Component | Virtual Configuration |
| --- | --- |
| Processors | 32 vCPU (Intel Xeon E5-2670) |
| Memory | 96GB RAM (DDR3 1600) |
| Solid State Storage | (4) Samsung 850 EVO 250 GB on LSI SAS in RAID 0 (Data store) |
| Solid State Storage | (1) Samsung 850 EVO 250 GB on LSI SAS (Data store) |
| NVMe Storage | Intel P3605 1.6TB AIC (Data store) |
| Hard Drive Storage | (12) Fujitsu MBA3300RC 300GB 15000 RPM on LSI SAS in RAID 10 (Data store) |
| Network Adapter | Intel X520-DA2 Dual Port 10 Gbps Network Adapter |

Network Storage

Before we get too far into the benchmarks, I should probably also talk about iSCSI and network storage. iSCSI is one of many options for providing network-based storage to servers, and it is used by many enterprise storage area networks (SANs). I built my own network attached storage (NAS) device so that I could test this type of storage. Network storage is even more common today because most virtual clusters require some sort of shared storage to provide fault tolerance. Essentially, one storage array is shared by multiple hosts. This simplifies backups and maintains high availability and performance.

There is, however, a problem. Essbase is heavily dependent on disk performance. Latency of any kind will harm performance, because as latency increases, random I/O performance decreases. So why does network storage have higher latency? Let’s take a look at our options.

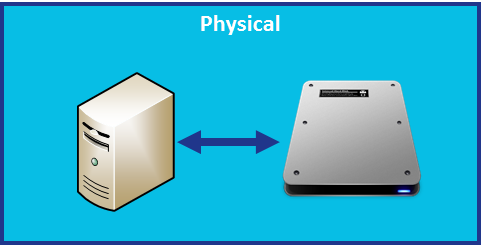

Physical Server with Local Storage

In this configuration, the disk is attached to the physical server and the latency is contained inside the system. Think of it like a train: the longer the track, the longer it takes to reach our destination and return home.

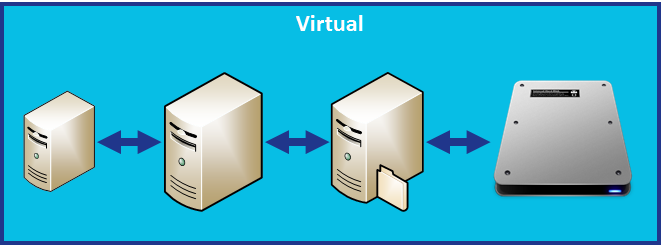

Virtual Server with Local Storage

With virtual storage, the guest server sends its I/O requests to the host server, which has the disk attached. Now our train is traveling on a little more track, so latency increases and performance decreases.

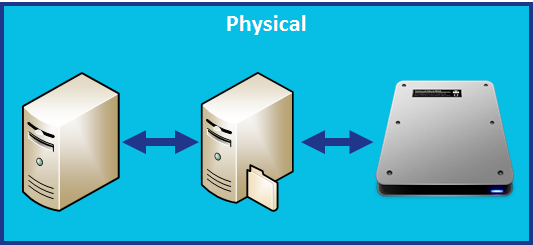

Physical Server with Network Storage

Our train track seems to be getting longer by the minute. With network storage on a physical server, the physical server now communicates with a file server that has the disk attached. This adds another stop.

Virtual Server with Network Storage

This doesn’t look promising at all. Our train now makes a pretty long round trip to reach its destination, so this option may not perform well for Essbase. That’s unfortunate, as this is a very common configuration. Let’s see what happens.
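The train analogy can be made concrete with a tiny model. This is a minimal Python sketch built on two assumptions. First, each stop on the I/O path adds a fixed delay. Second, random reads are issued one at a time (queue depth 1), so throughput is simply the inverse of latency. The per-hop latencies are hypothetical placeholders chosen for illustration. They are not measurements from this server.

```python
# Illustrative model only: the per-hop latencies are hypothetical placeholders.
HOP_LATENCY_US = {
    "disk": 100.0,        # the storage device itself
    "hypervisor": 30.0,   # guest -> host I/O path
    "network": 150.0,     # iSCSI round trip to the file server
}

# Each topology lists the stops a single I/O request must pass through.
TOPOLOGIES = {
    "physical + local": ["disk"],
    "virtual + local": ["hypervisor", "disk"],
    "physical + network": ["network", "disk"],
    "virtual + network": ["hypervisor", "network", "disk"],
}


def round_trip_latency_us(hops):
    """Total latency of one request: every stop on the track adds its delay."""
    return sum(HOP_LATENCY_US[hop] for hop in hops)


def qd1_iops(latency_us):
    """At queue depth 1 each request must finish before the next starts,
    so I/Os per second is simply 1 / latency."""
    return 1_000_000.0 / latency_us


for name, hops in TOPOLOGIES.items():
    latency = round_trip_latency_us(hops)
    print(f"{name:20s} {latency:6.0f} us {qd1_iops(latency):8.0f} IOPS")
```

Real storage stacks keep many requests in flight, which hides some latency. Treat this as an illustration of the worst case rather than a prediction.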

EssBench Application

The application used for all testing is EssBench. This application has the following characteristics:

- Dimensions
  - Account (1025 members, 838 stored)
  - Period (19 members, 14 stored)
  - Years (6 members)
  - Scenario (3 members)
  - Version (4 members)
  - Currency (3 members)
  - Entity (8767 members, 8709 stored)
  - Product (8639 members, 8639 stored)
- Data
  - 8 Text files in native Essbase load format
  - 1 Text file in comma separated format
- PowerShell Scripts
  - Creates Log File
  - Executes MaxL Commands
- MaxL Scripts
  - Resets the cube
  - Loads data (several rules)
  - Aggs the cube
  - Executes allocation
  - Aggs the allocated data
  - Executes currency conversion
  - Restructures the database
  - Executes the MaxL script three times
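EssBench itself is driven by PowerShell and MaxL, and those scripts are not reproduced here. Here is a minimal Python sketch of the pattern described above, which is not EssBench's code:

- Run every step.
- Repeat the whole sequence three times.
- Average the results per step.

The step names and callables are hypothetical stand-ins. In a real harness each one would invoke the MaxL shell.

```python
import statistics
import time
from typing import Callable, Dict, List


def run_benchmark(steps: Dict[str, Callable[[], None]], runs: int = 3) -> Dict[str, float]:
    """Run every step in order, repeat the whole sequence `runs` times,
    and return the average elapsed seconds for each step."""
    timings: Dict[str, List[float]] = {name: [] for name in steps}
    for _ in range(runs):
        for name, step in steps.items():
            start = time.perf_counter()
            step()
            timings[name].append(time.perf_counter() - start)
    return {name: statistics.mean(values) for name, values in timings.items()}


if __name__ == "__main__":
    # Hypothetical placeholder steps that just sleep briefly.
    demo_steps = {
        "Reset Cube": lambda: time.sleep(0.01),
        "Parallel Native Load": lambda: time.sleep(0.02),
        "Aggregation": lambda: time.sleep(0.03),
    }
    for name, seconds in run_benchmark(demo_steps).items():
        print(f"{name}: {seconds:.3f} s")
```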

Testing Methodology

For these benchmarks to be meaningful, we need to be consistent in the way that they are executed. The tests in both the physical and virtual environments were kept exactly the same at the Essbase level. Today I will be focusing on native Essbase loads. The process takes eight (8) native Essbase files produced from a parallel export and loads them into Essbase using a parallel import. Because this is a test of loading data, CALCPARALLEL and RESTRUCTURETHREADS have no impact. Let’s take a look at the steps used to perform these tests:

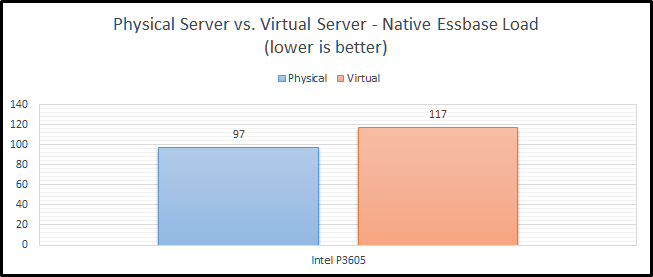

- Physical Test – Intel P3605
  - A new instance of EssBench was configured using the application name EssBch06.
  - The data storage was changed to the Intel P3605 drive.
  - The application was restarted.
  - The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

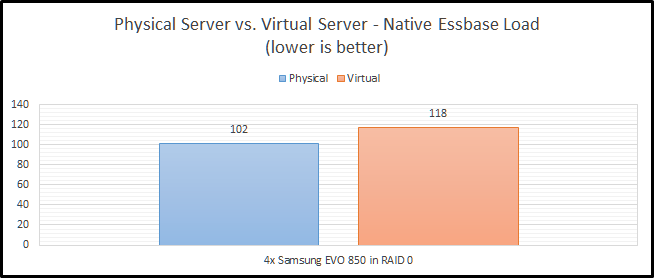

- Physical Test – SSD RAID
  - A new instance of EssBench was configured using the application name EssBch08.
  - The data storage was changed to the SSD RAID drive.
  - The application was restarted.
  - The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

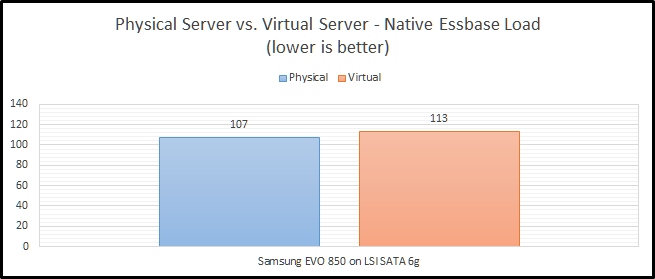

- Physical Test – Single SSD
  - A new instance of EssBench was configured using the application name EssBch09.
  - The data storage was changed to the single SSD drive.
  - The application was restarted.
  - The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

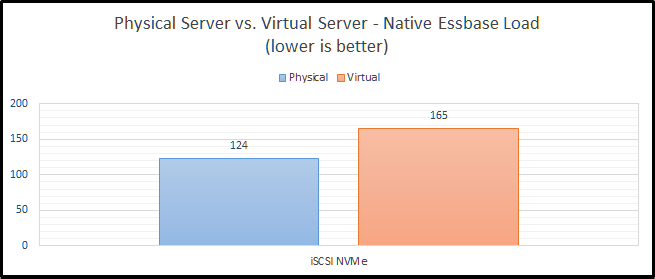

- Physical Test – iSCSI NVMe
  - A new instance of EssBench was configured using the application name EssBch10.
  - The data storage was changed to the iSCSI NVMe drive.
  - The application was restarted.
  - The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

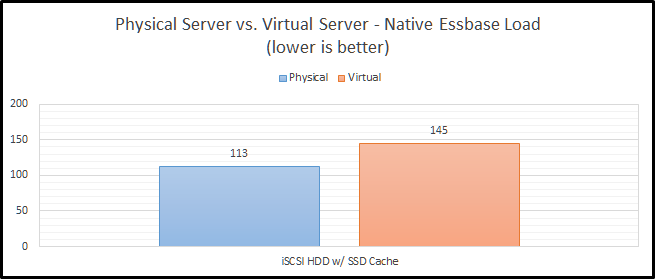

- Physical Test – iSCSI HDD w/ SSD Cache
  - A new instance of EssBench was configured using the application name EssBch11.
  - The data storage was changed to the iSCSI HDD drive.
  - The application was restarted.
  - The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

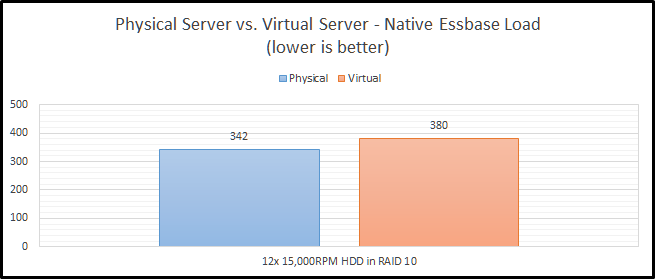

- Physical Test – HDD RAID
  - A new instance of EssBench was configured using the application name EssBch07.
  - The data storage was changed to the HDD RAID drive.
  - The application was restarted.
  - The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

- Virtual Test – Intel P3605
  - A new instance of EssBench was configured using the application name EssBch15.
  - The data storage was changed to the Intel P3605 drive.
  - The application was restarted.
  - The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

- Virtual Test – SSD RAID
  - A new instance of EssBench was configured using the application name EssBch16.
  - The data storage was changed to the SSD RAID drive.
  - The application was restarted.
  - The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

- Virtual Test – Single SSD
  - A new instance of EssBench was configured using the application name EssBch17.
  - The data storage was changed to the single SSD drive.
  - The application was restarted.
  - The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

- Virtual Test – iSCSI NVMe
  - A new instance of EssBench was configured using the application name EssBch18.
  - The data storage was changed to the iSCSI NVMe drive.
  - The application was restarted.
  - The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

- Virtual Test – iSCSI HDD w/ SSD Cache
  - A new instance of EssBench was configured using the application name EssBch19.
  - The data storage was changed to the iSCSI HDD drive.
  - The application was restarted.
  - The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

- Virtual Test – HDD RAID
  - A new instance of EssBench was configured using the application name EssBch14.
  - The data storage was changed to the HDD RAID drive.
  - The application was restarted.
  - The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

So…that was a lot of testing. Luckily, that set of steps will apply to the next several blog posts.

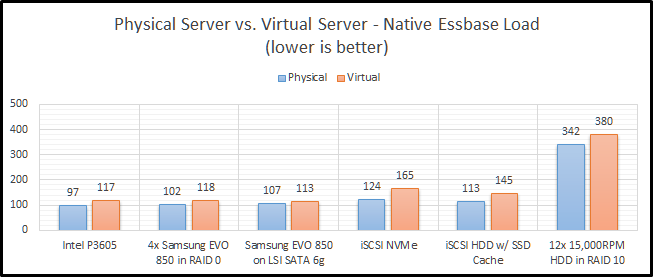

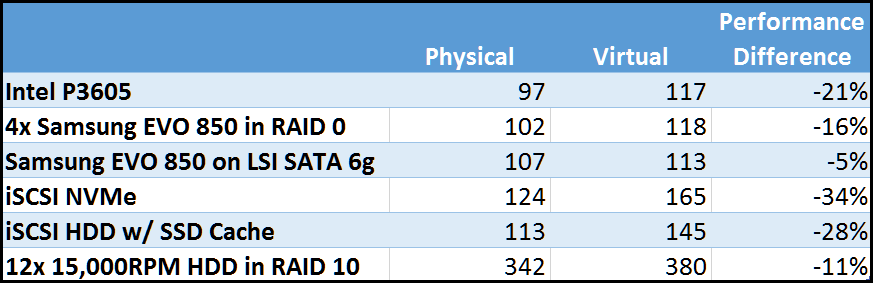

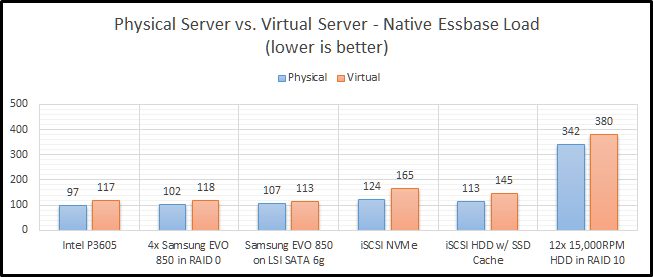

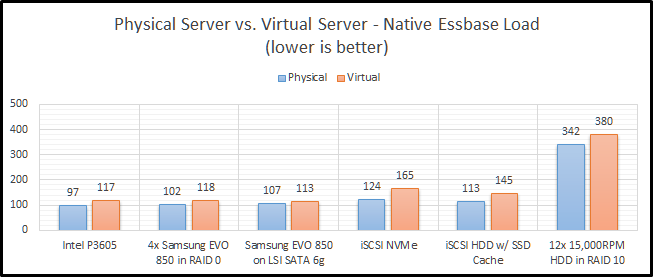

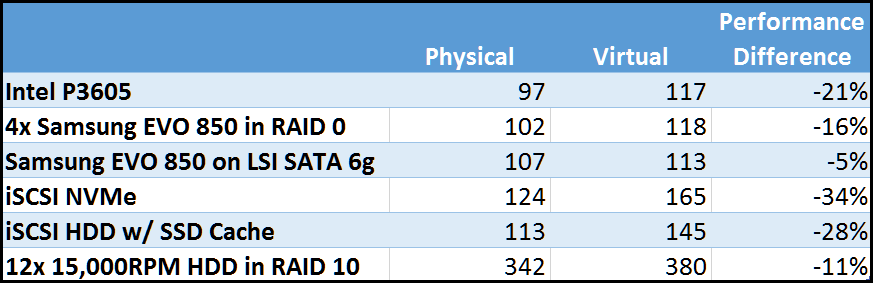

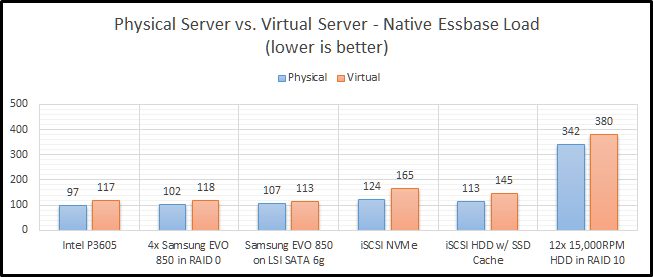

Physical vs. Virtual Test Results

This is a very simple test. It really just needs a disk drive that can provide adequate sequential writes, and things should be pretty fast. So how did things end up? Let’s break it down by storage type.

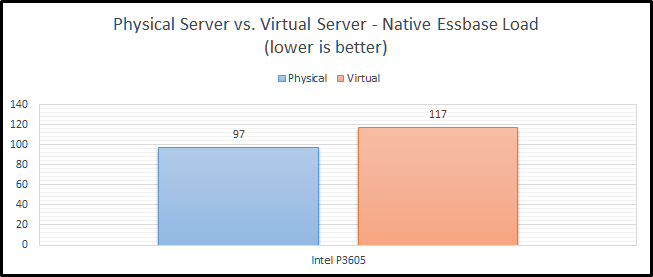

Intel P3605

The Intel P3605 is an enterprise-class NVMe PCIe SSD. Beyond all of the acronyms, it’s basically a super-fast SSD. Comparing the two configurations, the physical server is clearly faster, by 21%.
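The post does not spell out which run is the baseline for percentages like this one, and the choice changes the number. Here is a minimal Python sketch of the two common conventions. It assumes the run times have already been averaged over the three passes. The timings in the usage lines are hypothetical.

```python
def pct_slower(physical_s: float, virtual_s: float) -> float:
    """Extra time the virtual run needed, as a percentage of the physical run."""
    return (virtual_s - physical_s) / physical_s * 100.0


def pct_faster(physical_s: float, virtual_s: float) -> float:
    """Time the physical run saved, as a percentage of the virtual run."""
    return (virtual_s - physical_s) / virtual_s * 100.0


# Hypothetical averaged timings in seconds, not EssBench results.
physical, virtual = 100.0, 125.0
print(f"virtual is {pct_slower(physical, virtual):.1f}% slower")   # 25.0%
print(f"physical is {pct_faster(physical, virtual):.1f}% faster")  # 20.0%
```

The same 25-second gap reads as either 25% or 20% depending on the baseline. Keep that in mind when comparing percentages across posts.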

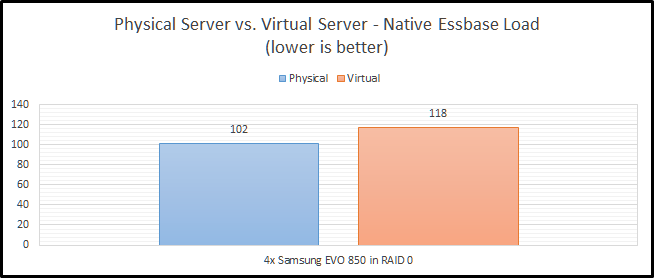

SSD RAID

I’ve chosen to implement SSD RAID using a physical LSI 9265-8i SAS controller with 1GB of RAM. The drives are configured in RAID 0 to get the most performance possible. RAID 1+0 is preferred, but then I would need eight drives to get the same performance level. The finance committee (my wife) would frown on another four drives. As we would expect, our SSD RAID option is still faster on a physical server. The difference, however, is smaller at 16%. It is also worth noting that in the virtual configuration it is basically the same speed as our NVMe option. I believe this is likely because the NVMe SSD’s drivers are better on Windows than they are on ESXi.
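The drive-count trade-off comes down to simple arithmetic. This Python sketch assumes sequential throughput scales roughly with the number of drives holding distinct data in the stripe. It ignores controller cache and the fact that RAID 10 reads can be served from either side of a mirror. It is a simplification, not a model of the LSI controller.

```python
from typing import Tuple


def raid_layout(level: str, drives: int, drive_gb: int) -> Tuple[int, int]:
    """Return (data drives in the stripe, usable GB) for RAID 0 or RAID 10."""
    if level == "0":
        # Every drive holds unique data; there is no redundancy.
        return drives, drives * drive_gb
    if level == "10":
        if drives < 4 or drives % 2:
            raise ValueError("RAID 10 needs an even number of drives, at least 4")
        pairs = drives // 2  # each mirrored pair stores one drive's worth of data
        return pairs, pairs * drive_gb
    raise ValueError(f"unsupported RAID level: {level}")


print(raid_layout("0", 4, 250))    # (4, 1000): the four-SSD RAID 0 array
print(raid_layout("10", 8, 250))   # (4, 1000): RAID 10 needs twice the drives
print(raid_layout("10", 12, 300))  # (6, 1800): the 12-drive HDD RAID 10 array
```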

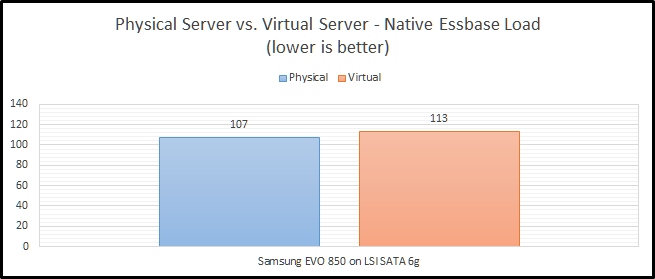

Single SSD

How did our single SSD fare? Pretty well, actually, with only a 5% decrease in performance going to a virtual environment. In our virtual environment, it is actually faster than both our NVMe and SSD RAID options. But…they are pretty close. We’ll wait to see how everything performs in later blog posts before we draw any large conclusions from this test.

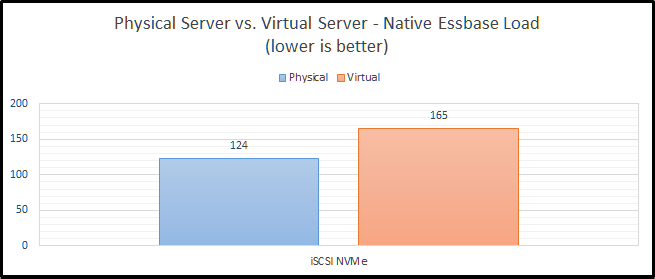

iSCSI NVMe

Now we can move on to a more interesting set of benchmarks. Network storage never seems to work out at my clients, and it especially seems to struggle as they go virtual. Here, we see that NVMe over iSCSI performs “okay” on a physical system, but not so well on a virtual platform. The difference is a staggering 34%.

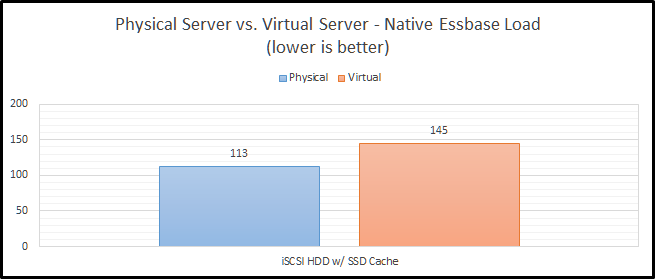

iSCSI HDD w/ SSD Cache

Staying on the topic of network storage, I also tested out a configuration with hard drives and an SSD cache. The problem with this test is that I’m the only user on the system and the cache is a combination of 200GB of SSD and a ton of RAM. Between those two things, I don’t think it ever leaves the cache. The difference between physical and virtual is still pretty bad at 28%. I still can’t explain why this configuration is faster than the NVMe configuration, but my current guess is that it is related to drivers and/or firmware in FreeBSD.
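The suspicion that this workload never leaves the cache can be expressed as a weighted average. This is a minimal Python sketch of a simple two-tier model: each read is either a cache hit or goes to the spinning disks. The latencies are hypothetical round numbers, not measurements of this NAS.

```python
def effective_latency_us(hit_rate: float, cache_us: float, backend_us: float) -> float:
    """Expected latency per read when `hit_rate` of reads are served from cache."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * cache_us + (1.0 - hit_rate) * backend_us


# Hypothetical tiers: ~100 us for the SSD/RAM cache, ~8,000 us for a hard drive.
for hit_rate in (1.0, 0.99, 0.9):
    latency = effective_latency_us(hit_rate, 100.0, 8000.0)
    print(f"hit rate {hit_rate:.0%}: {latency:,.0f} us")
```

Even a 1% miss rate nearly doubles the average latency in this example. That is why a single-user test that fits entirely in cache can flatter a hard-drive array.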

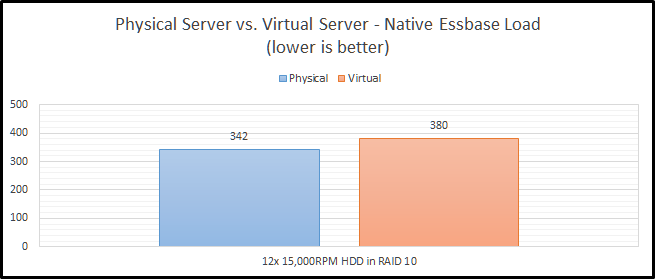

HDD RAID

Finally, we come to our slowest option…old-school hard drives. It isn’t just a little slower…it is a LOT slower. This configuration uses the same RAID card as the SSD RAID configuration, but these drives are configured in RAID 1+0. With the SSDs, I was trying to see how fast I could make it. With the HDD configuration, I was really trying to test a real-world configuration: RAID 0 has no redundancy, so it is very uncommon with hard drives. Here the hard drive configuration is only 11% slower in a virtual configuration than in a physical one. We’ll call that the silver lining.

Summarized Essbase Physical vs. Virtual Performance Results

Let’s look at all of the results in a grid:

And as a graph:

CONCLUSION

In my last post, I talked about preconceived notions. In this post, I get to confirm one of them: physical hardware is faster than virtual hardware. This shouldn’t be shocking to anyone. But I like having a percentage and seeing the different configurations side by side. For instance, in this test network storage is an upgrade compared to local hard drives. But if you are going from local SSD storage to network storage in a virtual environment, you will end up slower on two fronts. First, you obviously lose speed going to a virtual environment. Next, you also lose speed going to the network.
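The "two fronts" point can be sketched by compounding the two penalties. This minimal Python sketch assumes the virtualization penalty and the network penalty are independent and multiply together. The results above suggest they interact in practice, since the virtual penalty varied by storage type. The inputs below are hypothetical.

```python
def combined_slowdown_pct(*slowdowns_pct: float) -> float:
    """Compound independent slowdowns, each given as % extra run time."""
    factor = 1.0
    for pct in slowdowns_pct:
        factor *= 1.0 + pct / 100.0
    return (factor - 1.0) * 100.0


# Hypothetical: 10% for going virtual, 20% for going to network storage.
print(f"{combined_slowdown_pct(10.0, 20.0):.1f}% slower overall")  # 32.0%
```

Because the penalties compound, the combined slowdown in this example is larger than the simple sum of 30%.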

Brian Marshall

July 12, 2017

Introduction to Weekly Offenders

Last week I started up my weekly review of EPM blogging again. There was quite a bit of content, with Kscope17 having just finished up and everyone excited to contribute to the community. Between the holidays and everyone recovering from Kscope…last week wasn’t nearly as productive. However, it was an odd week with a holiday in the middle of the week. And luckily, Oracle released a few on-prem patches to keep things interesting. Here we go!

Gary has a PSA for us regarding Smart View in Office 2016. Microsoft broke something…

Cameron wrapped up his coverage of Kscope17. He takes a lot more pictures than I do…I should work on that.

The EPM Lab is a new addition to my EPM Blog List. Jun has a post this week showing off the new Strategic Modeling portion of EPBCS.

I had a pair of posts this week. First I wished everyone in America a Happy Independence Day. Next, I posted my first set of actual Essbase benchmarks since releasing EssBench.

Sibin has a pair of posts again this week. He first discusses configuring FDMEE to use an Oracle DB table as a source. Next, he continues down the Oracle DB and FDMEE path by showing us how to create an import format and actually load some data into the workbench.

The Proactive Support Blog brings us news of a few on-prem patches this week. First, we have an update to Hyperion Financial Reports. The 11.1.2.4.706 patch appears to solve a number of defects, but I don’t see any new functionality. As with all future FR patches, Oracle reminds us that the desktop Financial Reporting Studio is dead.

The blog also provides us with news of an HFM patch (11.1.2.4.205). While a variety of new features are listed, they are generic to the .2xx series of HFM patches. This appears to be mostly bug fixes.

Celvin takes an in-depth look at some of the Simplified User Interface (SUI) features in PBCS. He touches on the new dimension editor and composite forms in navigation flows.

Be On the Lookout!

That’s it for this week! Be on the lookout for more great EPM Blog content next week!

Brian Marshall

July 9, 2017

Essbase Performance Series

Welcome to the sixth part in an on-going blog series about Essbase performance. Here’s a summary of the series so far:

Now that EssBench is official, I’m ready to start sharing Essbase benchmarks along with some explanation. Much of this was covered in my Kscope17 presentation, but the downloadable PowerPoint lacks some context. My goal is to provide that context via my blog. We’ll start off with actual Essbase benchmarks around Hyper-Threading. For years I’ve heard that Hyper-Threading was something you should always turn off. This normally led to an argument with IT about how you even go about turning it off and whether they would support turning it off. But before we go too far, let’s talk about what Hyper-Threading is.

What is Hyper-Threading

Hyper-Threading is Intel’s implementation of a very old technology known as Simultaneous Multi-Threading (SMT). Essentially, it allows each core of a processor to handle two threads at once. The theory is that you can make use of otherwise idle CPU time. The problem is that when it was released in 2002 with the Pentium 4 line, operating system support for the technology was not great. Intel claimed that it would increase performance, but results were very hit and miss depending on your application. At the time, it was considered a huge miss for Essbase. Eventually Intel dropped Hyper-Threading for the next generation of processors.

The Return of Hyper-Threading

With the release of the Xeon X5500 series processors, Intel re-introduced the technology. This is where it gets interesting. The technology this time around wasn’t nearly as bad. With the emergence of hypervisors and a massive shift in that direction, Hyper-Threading can actually provide a large benefit. In a system with 16 cores, now guests can address 32 threads. In fact, if you look at the Oracle documentation for their relational technology, they recommend leaving it on starting with the X5500 series of processors. With that knowledge, I decided to see how it would perform in Essbase.
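The thread arithmetic is simple, but it matters for the CALCPARALLEL settings tested below. This small Python sketch assumes a two-socket server with 8 cores per socket, which is consistent with the 16 cores described here. `os.cpu_count()` reports logical CPUs, so on a Hyper-Threaded host it returns the doubled figure.

```python
import os


def logical_threads(sockets: int, cores_per_socket: int, threads_per_core: int) -> int:
    """Logical CPUs the operating system (or hypervisor) can schedule on."""
    return sockets * cores_per_socket * threads_per_core


print(logical_threads(2, 8, 2))  # 32 with Hyper-Threading enabled
print(logical_threads(2, 8, 1))  # 16 with Hyper-Threading disabled
print(os.cpu_count())            # logical CPUs on the machine running this script
```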

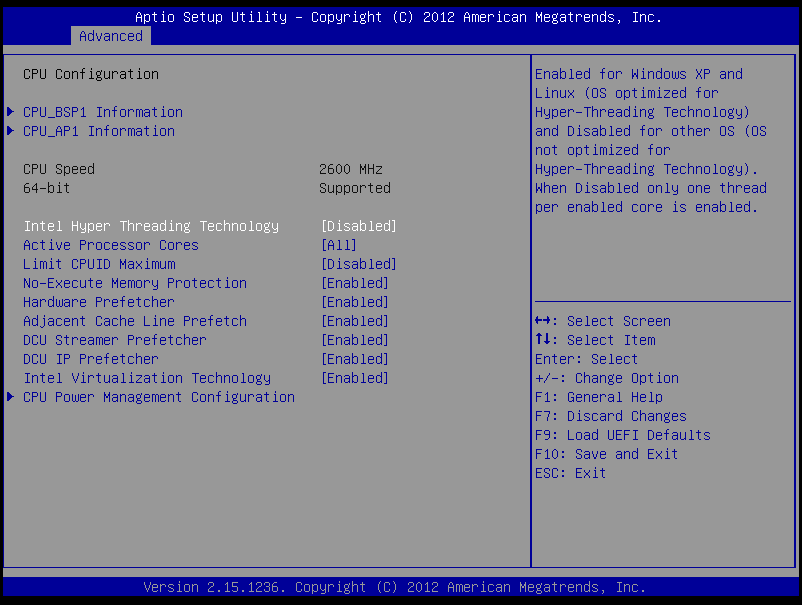

Testing Configuration

All testing is performed on a dedicated benchmarking server. This server changes software configurations between Windows and ESXi, but the hardware configuration is static:

One important note on hardware configuration. All of the benchmarks that have been run are using the same NVMe storage. This is our fastest storage option available, so this should ensure that we don’t skew the results with hardware limitations.

EssBench Application

The application used for all testing is EssBench. This application has the following characteristics:

- Dimensions
  - Account (1025 members, 838 stored)
  - Period (19 members, 14 stored)
  - Years (6 members)
  - Scenario (3 members)
  - Version (4 members)
  - Currency (3 members)
  - Entity (8767 members, 8709 stored)
  - Product (8639 members, 8639 stored)
- Data
  - 8 Text files in native Essbase load format
  - 1 Text file in comma separated format
- PowerShell Scripts
  - Creates Log File
  - Executes MaxL Commands
- MaxL Scripts
  - Resets the cube
  - Loads data (several rules)
  - Aggs the cube
  - Executes allocation
  - Aggs the allocated data
  - Executes currency conversion
  - Restructures the database
  - Executes the MaxL script three times

Testing Methodology

For these benchmarks to be meaningful, we need to be consistent in the way that they are executed. This particular set of benchmarks requires a configuration change at the system level, so it will be a little less straightforward than the future methodology. Here are the steps performed:

- With Hyper-Threading enabled, CALCPARALLEL and RESTRUCTURETHREADS were both set to 32. A new instance of EssBench was configured using the application name EssBch01. The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

- With Hyper-Threading enabled, CALCPARALLEL and RESTRUCTURETHREADS were both set to 16. Another new instance of EssBench was configured using the application name EssBch06. The EssBench process was run and the results collected. The three sets of results were averaged and put into the chart and graph below.

- With Hyper-Threading enabled, CALCPARALLEL and RESTRUCTURETHREADS were both set to 8. Another new instance of EssBench was configured using the application name EssBch05. The EssBench process was run and the results collected. The three sets of results were averaged and put into the chart and graph below.

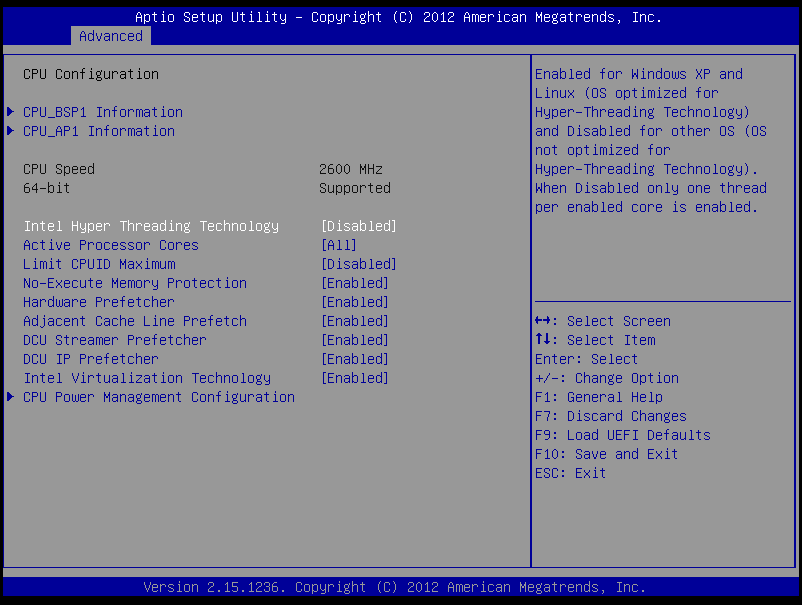

- Now we disable Hyper-Threading:
  - With Hyper-Threading disabled, CALCPARALLEL and RESTRUCTURETHREADS were both set to 16. Another new instance of EssBench was configured using the application name EssBch02. The EssBench process was run and the results collected. The three sets of results were averaged and put into the chart and graph below.
  - With Hyper-Threading disabled, CALCPARALLEL and RESTRUCTURETHREADS were both set to 8. Another new instance of EssBench was configured using the application name EssBch04. The EssBench process was run and the results collected. The three sets of results were averaged and put into the chart and graph below.
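The five runs above appear to follow a simple rule: a thread setting is tested only when it fits within the available logical CPUs. That would presumably explain why there is no run with Hyper-Threading disabled at 32. This Python sketch rebuilds that matrix from the 16 physical cores in the text. The application names come from the list above. The post does not say how the settings were applied, so the sketch assumes application-level essbase.cfg entries and prints them for each run.

```python
from typing import Dict, Iterator, List, Tuple

PHYSICAL_CORES = 16

# (Hyper-Threading enabled, thread setting) -> application name, from the list above.
APP_NAMES: Dict[Tuple[bool, int], str] = {
    (True, 32): "EssBch01",
    (True, 16): "EssBch06",
    (True, 8): "EssBch05",
    (False, 16): "EssBch02",
    (False, 8): "EssBch04",
}


def build_matrix(candidates=(32, 16, 8)) -> Iterator[Tuple[bool, int]]:
    """Yield (hyper_threading, threads) pairs where threads fit the logical CPUs."""
    for hyper_threading in (True, False):
        logical_cpus = PHYSICAL_CORES * (2 if hyper_threading else 1)
        for threads in candidates:
            if threads <= logical_cpus:
                yield hyper_threading, threads


def essbase_cfg_lines(app: str, threads: int) -> List[str]:
    """Application-level essbase.cfg entries (no database name, so all databases)."""
    return [f"CALCPARALLEL {app} {threads}", f"RESTRUCTURETHREADS {app} {threads}"]


for ht, threads in build_matrix():
    app = APP_NAMES[(ht, threads)]
    print(f"HT {'on' if ht else 'off'}: " + "; ".join(essbase_cfg_lines(app, threads)))
```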

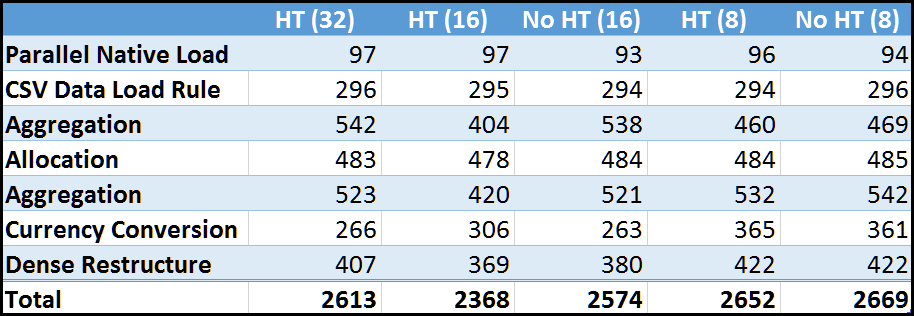

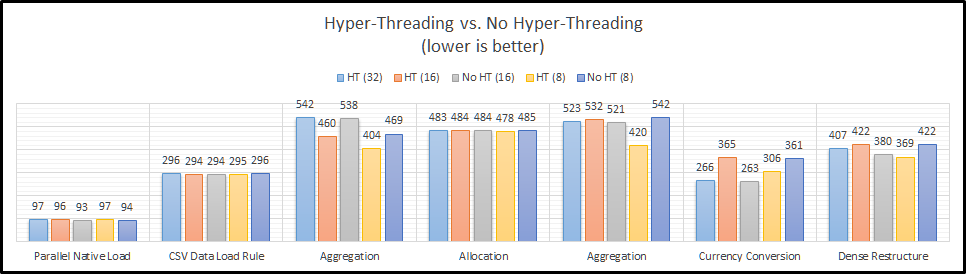

Essbase and Hyper-Threading

Let’s start by taking a look at each step of EssBench individually. I have some theories on each of these…but they could also just be the ramblings of a nerd recovering from Kscope17.

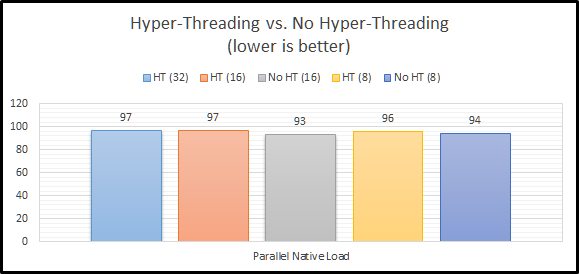

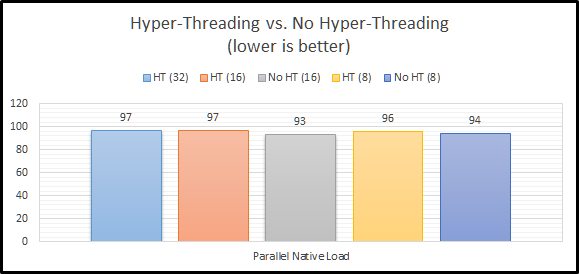

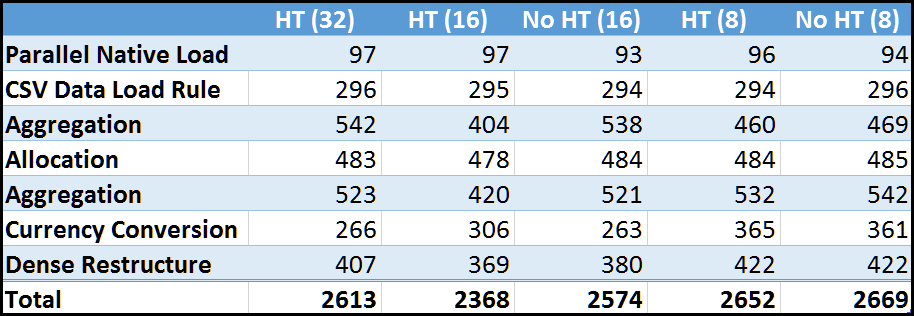

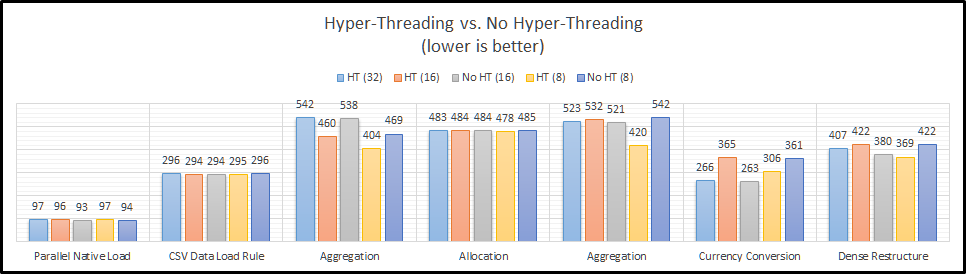

Parallel Native Load

The parallel native load takes eight (8) text files and loads them into the cube in parallel. This means that our CALCPARALLEL and RESTRUCTURETHREADS settings should have no bearing on the results. The most interesting part about this benchmark is that all of the results are within 5% of each other. In other words, enabling or disabling Hyper-Threading makes no major difference here. Let’s move on to a single-threaded load.
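"Within 5% of each other" can be measured more than one way, and the post does not say which. This Python sketch shows one reasonable reading: the gap between the slowest and fastest result, as a percentage of the fastest. The timings are hypothetical.

```python
def spread_pct(times_s):
    """Gap between the slowest and fastest run, as a percentage of the fastest."""
    fastest, slowest = min(times_s), max(times_s)
    return (slowest - fastest) / fastest * 100.0


# Hypothetical averaged timings (seconds) for five configurations.
print(f"{spread_pct([200.0, 204.0, 206.0, 209.0, 210.0]):.1f}%")  # 5.0%
```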

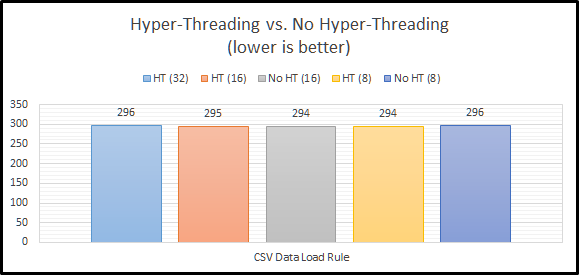

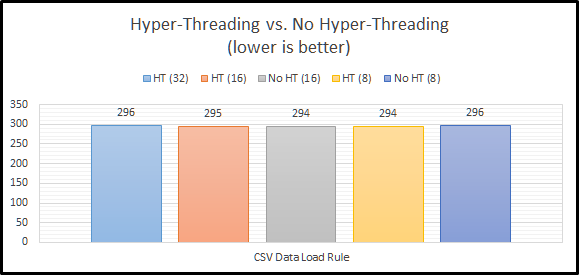

CSV Data Load Rule

The next step in the benchmark simply loads a CSV file using an Essbase load rule. This is a single-threaded operation, so again our settings outside of Hyper-Threading should have no bearing. And again, the most interesting thing about these results…they are within 1% of each other. So far…Hyper-Threading doesn’t seem to make a difference either way. What about a command that can use all of our threads?

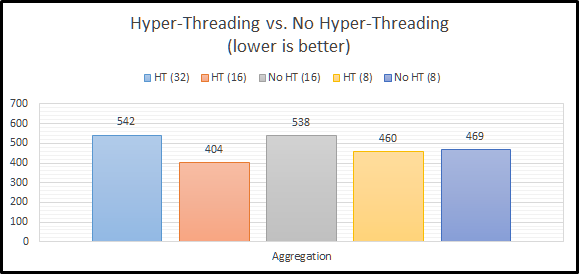

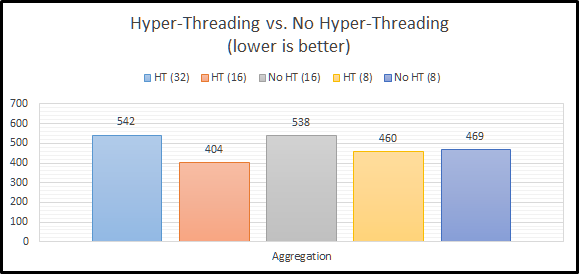

Aggregation

Now we have something to look at! We can clearly see that Hyper-Threading appears to massively improve performance for aggregations. This actually makes sense. With Hyper-Threading enabled, we have 32 logical cores for Essbase to use. With it disabled, we have 16. If we max out the settings for both, it would seem that we don’t have enough I/O performance to keep up. We can somewhat confirm this theory by looking at the results for CALCPARALLEL 8. When we attempt to only use half of our available CPU resources, there’s basically no difference in performance.

So is Hyper-Threading better, or worse for aggregation performance? The answer actually appears to be…indifferent. If we compare our two CALCPARALLEL 16 results, you might think that Hyper-Threading is what seems to be making things faster. But if we look at our setting of 8, we know that doesn’t make sense. Instead, I think what we are witnessing is the ability of Hyper-Threading to allow our system to really use cycles that otherwise go to waste. Our two settings that push our system to the max have the worst results. Essbase is basically taking all of the threads and keeping them to itself.

However, using 16 threads with Hyper-Threading turned on seems to let the operating system put the additional 16 threads to good use for any overhead going on in the background. Basically, the unused CPU time can be used if Hyper-Threading is turned on. When Essbase takes every thread, the system seems to get overwhelmed and things slow down. So far, our results suggest we should definitely use Hyper-Threading. They also indicate that Essbase doesn’t really do well when it takes the entire server! If we consult the Essbase documentation, it actually suggests that CALCPARALLEL works best at 8.

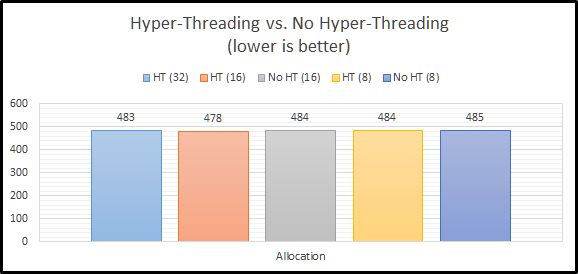

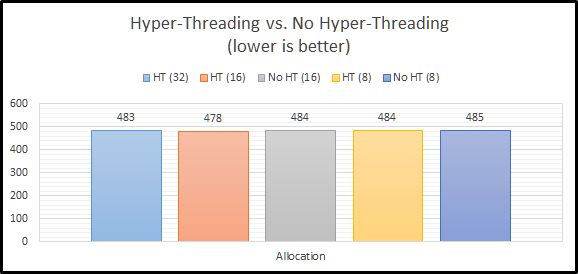

Allocation

The allocation script actually ignores our setting for CALCPARALLEL altogether. How? It uses FIXPARALLEL. On a non-Exalytics system, FIXPARALLEL has a maximum setting of 8. We again see an instance of Hyper-Threading having no impact on performance. I believe that there are two reasons for this. First, we have plenty of I/O performance to keep up with 8 threads. Second, there is no need to use the un-used CPU time. There are plenty of CPU resources to go around. The good news here is that performance doesn’t go down at all. This again seems to support leaving Hyper-Threading enabled.
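The reasoning can be captured in a small Python model. It assumes only what the paragraph states: inside a FIXPARALLEL block the CALCPARALLEL setting is ignored, and on non-Exalytics hardware the thread count is capped at 8. The Exalytics limit is not modeled. `fixparallel_threads` is a hypothetical helper, not an Essbase API.

```python
NON_EXALYTICS_FIXPARALLEL_MAX = 8  # cap described in the text


def fixparallel_threads(requested: int, exalytics: bool = False) -> int:
    """Threads a FIXPARALLEL block gets; CALCPARALLEL plays no part."""
    if exalytics:
        return requested  # Exalytics limit deliberately not modeled here
    return min(requested, NON_EXALYTICS_FIXPARALLEL_MAX)


# With 16 or 32 logical CPUs, 8 allocation threads leave spare CPU either way.
for logical_cpus in (16, 32):
    used = fixparallel_threads(8)
    print(f"{logical_cpus} logical CPUs: {used} busy, {logical_cpus - used} spare")
```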

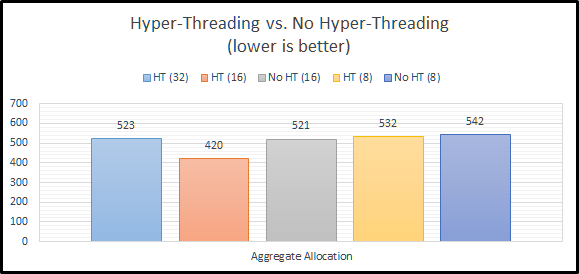

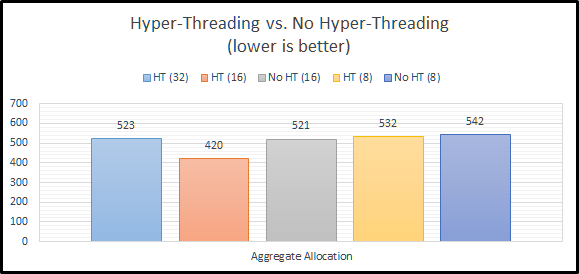

Aggregate Allocation

With our allocation calculation complete, the next step in EssBench is to aggregate that newly created data. These results should mirror the results from our initial allocation. And…they do for the most part. The results with CALCPARALLEL 8 are slower here. The results for CALCPARALLEL 16 are also slower. This is likely due to the additional random read I/O required to find all newly stored allocated data. The important thing to note here…CALCPARALLEL seems to love Hyper-Threading…as long as you have enough I/O performance, and you don’t eat the entire server.

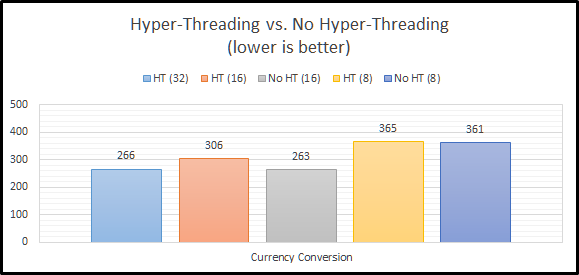

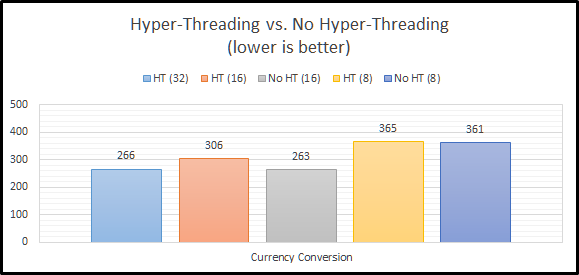

Currency Conversion

Our next step in the EssBench process…currency conversion. In this instance, it seems that the efficiency that Hyper-Threading gives us doesn’t actually help. Why? I’m guessing here, but I believe Essbase is getting more out of each core and I/O is not limiting performance here. If we look at CALCPARALLEL 8, the results are essentially the same. It seems that Essbase can actually use more of each processing core with the available I/O resources. So…does this mean that Hyper-Threading is actually slower here? On a per core basis…yes. But, we can achieve the same results with Hyper-Threading enabled, we just need to use more logical cores to do so.

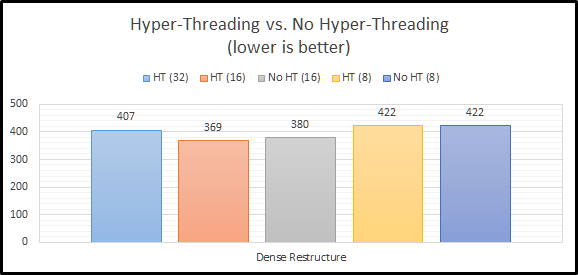

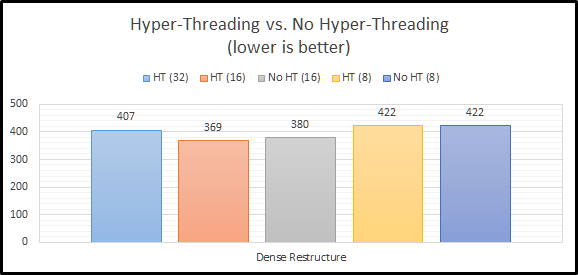

Dense Restructure

The last step in the EssBench benchmark is to force a dense restructure. This seems to bring us back to Essbase being somewhat Hyper-Threading indifferent. The Hyper-Threaded testing shows a very small gain, but nothing meaningful.

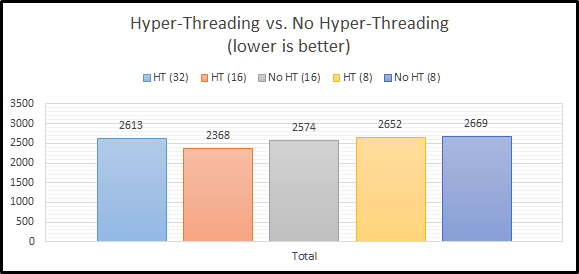

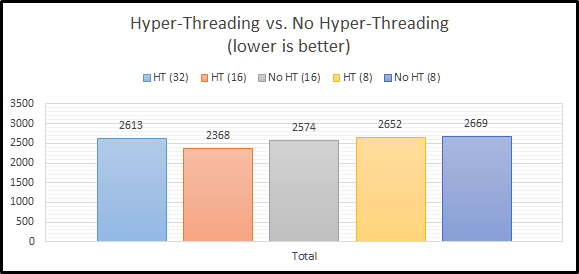

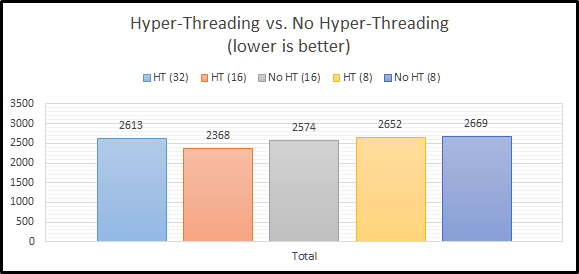

Total Benchmark Time

When we look at the final total results, we can see that Hyper-Threading overall performs better than or basically the same as no Hyper-Threading. This definitely goes against the long-standing recommendation to turn off Hyper-Threading.

Summarized Results

Let’s look at all of the results in a grid:

And as a graph:

Conclusion

When I started down the path of EssBench and my Essbase Performance Series, I had a set of preconceived notions. Essbase admins should disable Hyper-Threading. Right? Testing on the other hand seems to completely disagree. The evidence is pretty clear that for this application, Hyper-Threading is at worst…a non-factor and at best…a benefit. This means that we no longer have to tell IT to disable Hyper-Threading. More importantly, this means that we don’t have to do something different and give IT a reason to question us.

The other important thing to take away from this…test your application. Try different settings on calculations to see what setting works the best for that calculation. Clearly we shouldn’t just rely on a single setting for CALCPARALLEL. If we wanted the absolute fastest performance, we would leave Hyper-Threading enabled and then use CALCPARALLEL set to 16 for everything but our currency conversion. For currency conversion we can save ourselves 40 seconds (over 10%) of our processing time by setting CALCPARALLEL to 32.
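Picking the best setting for each calculation is just a minimum per step. This Python sketch assumes the timings have already been averaged per step and per CALCPARALLEL value. The numbers are hypothetical placeholders, not EssBench results.

```python
from typing import Dict

Timings = Dict[str, Dict[int, float]]  # step -> {CALCPARALLEL value: seconds}


def best_setting_per_step(timings: Timings) -> Dict[str, int]:
    """Return the CALCPARALLEL value with the lowest time for each step."""
    return {step: min(by_setting, key=by_setting.get) for step, by_setting in timings.items()}


def total_seconds(timings: Timings, choice: Dict[str, int]) -> float:
    """Total run time if each step uses the chosen setting."""
    return sum(timings[step][choice[step]] for step in timings)


# Hypothetical averaged timings in seconds.
timings = {
    "Aggregation": {8: 120.0, 16: 100.0, 32: 130.0},
    "Currency Conversion": {8: 150.0, 16: 140.0, 32: 100.0},
}
best = best_setting_per_step(timings)
print(best)  # {'Aggregation': 16, 'Currency Conversion': 32}
print(total_seconds(timings, best), "vs", total_seconds(timings, {s: 16 for s in timings}))
```

In practice, a per-calculation override would likely go in each calc script, for example with `SET CALCPARALLEL`. Verify that against the documentation for your Essbase version.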

Brian Marshall

July 5, 2017

I hope everyone enjoys their day off! Adobe gave me some great free stock art for the 4th…so I had to use it! The History Channel has a nice page dedicated to the History of Independence Day.

</shortest blog post ever>

Brian Marshall

July 4, 2017

Introduction to Weekly Offenders

I had a lot of luck doing a weekly update about the blogging community in the Oracle EPM space. You may have noticed that I had a lot less luck doing a monthly update. I found that everyone posts too much content to keep up with doing an update just once a month. So…back to weekly! Now that Kscope17 is in the rear-view mirror, I can finally get back to blogging more regularly. As part of that, the week in review is back! To keep with the corny theme of my newly branded website, welcome to the first in a weekly series I’m naming Weekly Offenders. For the first post back, we’ll put particular emphasis on Kscope17-related blog posts. Let’s get started!

Cameron has a LOT of posts regarding Kscope17. Thanks for the coverage!

Keith also has a lot of posts about Kscope17. He also sneaks in a post about splitting multi-period load files into single-period load files:

I had a pair of posts this week. First a quick wrap-up of Kscope17…and then the introduction of my EssBench project.

Eric was at Kscope17…but instead he posted about FCCS! Specifically, he shows us how to replace your logo. At least he used a Kscope17 logo?

Sibin has a pair of non-Kscope17 posts. First he covers connecting to Oracle databases using FDMEE. While on the topic of FDMEE, he also goes into importing adapters.

Glen discusses Kscope17 while blogging about Smart View and issues related to Office 2016. He gives us a nice round-up of Oracle articles about the topic.

Ludovic has a pair of posts about Kscope17 as well:

Eric has a post on two divergent topics. He covers the future of On-Prem and then…talks about SaaS products coming from Oracle in the Data and Meta-Data Management space. On a more Kscope17-related note, be sure to congratulate Eric on winning a Best Speaker award for Business Content. Congrats Eric! I guess you didn’t need more gong after all. 😉

John Goodwin doesn’t let Kscope17 taking place get in the way of more great content. He starts off with a continuation of his EPM Cloud series on Managing Application with Smart View. He also gives us a public service announcement about the new 11.1.2.4.018 patch and new behavior that has been included.

Dayalan has a post about using substitution variables in a direct page link to a form or dashboard. I love stuff like this…

Last…but not least, Vijay has a piece on using ODI to import data from Essbase produced using a calculation script. I feel like I’ve noticed this before…but I was never smart enough to write it down. Thanks for the reminder that hopefully Google will find for me next time!

Be On the Lookout!

That’s it for this week! Be on the lookout for more great EPM Blog content next week!

Brian Marshall

July 3, 2017