My posts seem to be getting a little further apart each week… This week, we’ll continue our dashboard series by adding in some pretty graphs for FreeNAS. Before we dive in, as always, we’ll look at the series so far:

- An Introduction

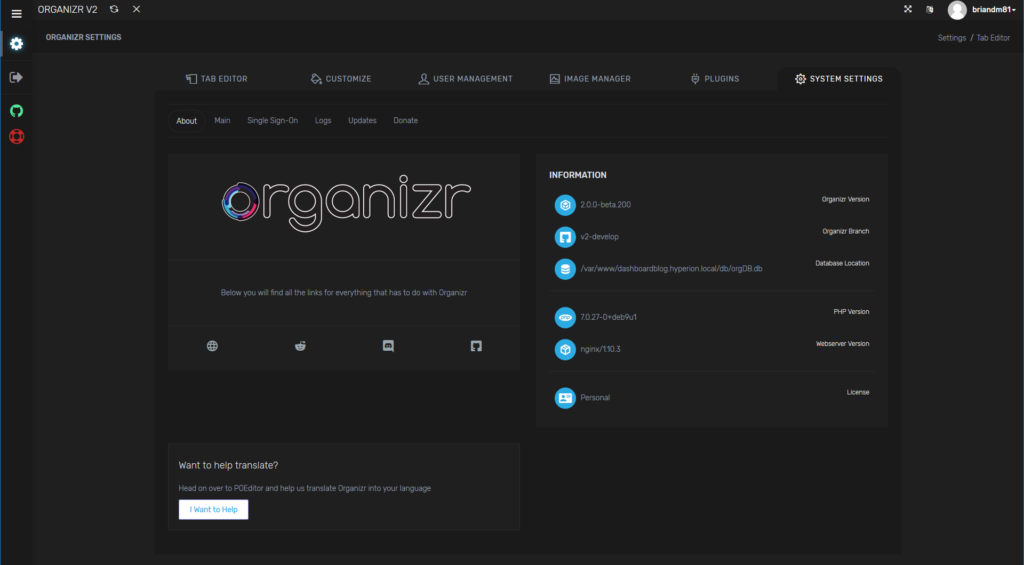

- Organizr

- Organizr Continued

- InfluxDB

- Telegraf Introduction

- Grafana Introduction

- pfSense

- FreeNAS

FreeNAS and InfluxDB

FreeNAS, as many of you know, is one of the most popular storage operating systems in the homelab community. It provides ZFS and a lot more. If you were so inclined, you could install Telegraf on FreeNAS. There is a version available for FreeBSD, and I’ve found a variety of sample configuration files and steps. But…I could never really get them working properly. Luckily, we don’t actually need to install anything on FreeNAS to get things working. Why? Because FreeNAS already has something built in: collectd. collectd can send metrics directly to a Graphite server for analysis. But wait…we haven’t touched Graphite at all in this series, have we? No…but we don’t need to, thanks to InfluxDB’s built-in support for the Graphite protocol.

Graphite and InfluxDB

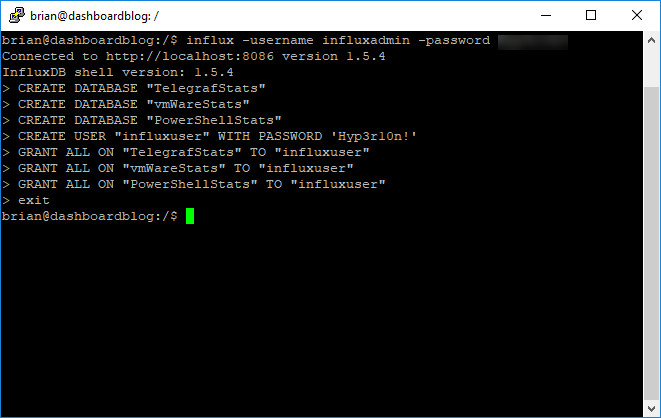

To enable support for Graphite, we have to modify the InfluxDB configuration file. But, before we get to that, we need to go ahead and create our new InfluxDB database and give our user access to it. Part 4 of this series covers this in more depth, so we’ll be quick about it now. We’ll start by connecting to our server via SSH and logging into InfluxDB:

influx -username influxadmin -password YourPassword

Now we will create the new database for our Graphite statistics and grant access to that database for our influx user:

CREATE DATABASE "GraphiteStats"

GRANT ALL ON "GraphiteStats" TO "influxuser"

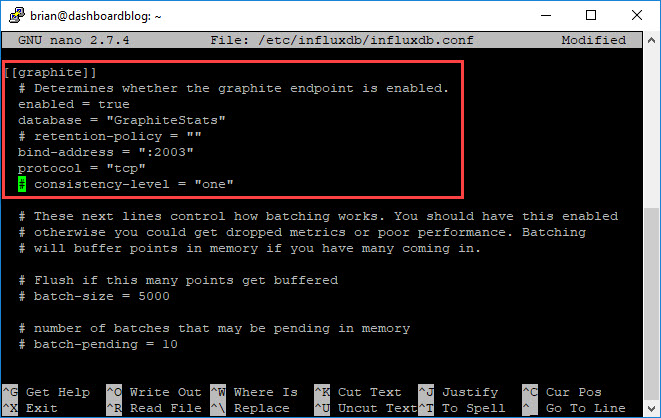

And now we can modify our InfluxDB configuration:

sudo nano /etc/influxdb/influxdb.conf

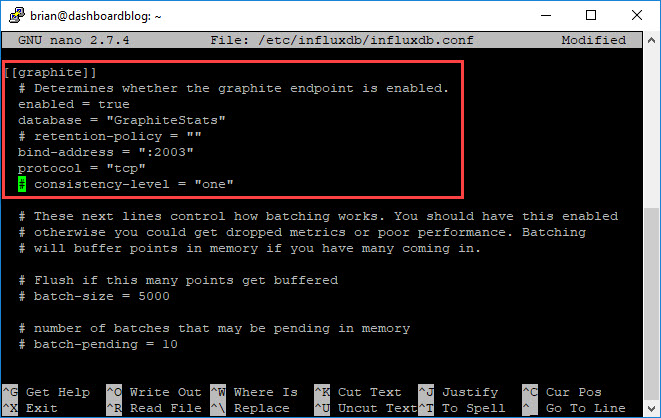

Our modifications should look like this:

And here’s the code for those who like to copy and paste:

```toml
[[graphite]]
  # Determines whether the graphite endpoint is enabled.
  enabled = true
  database = "GraphiteStats"
  # retention-policy = ""
  bind-address = ":2003"
  protocol = "tcp"
  # consistency-level = "one"
```
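For a sense of what FreeNAS will be sending to this endpoint: the Graphite plaintext protocol is one line per metric, `<metric path> <value> <Unix timestamp>`, sent over TCP to port 2003 as configured above. Here’s a minimal Python sketch that formats such a line and could send one to test the listener. The metric path, value, and hostname are hypothetical; the metric names FreeNAS’s collectd actually produces will look different.

```python
import socket
import time
from typing import Optional


def graphite_line(path: str, value: float, timestamp: Optional[int] = None) -> str:
    """Format one metric in the Graphite plaintext protocol: '<path> <value> <timestamp>' plus newline."""
    if " " in path:
        raise ValueError("Graphite metric paths cannot contain spaces")
    if timestamp is None:
        timestamp = int(time.time())  # Unix epoch seconds
    return f"{path} {value} {timestamp}\n"


def send_graphite(host: str, line: str, port: int = 2003) -> None:
    """Send a formatted line over TCP to a Graphite listener such as the InfluxDB endpoint above."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(line.encode("ascii"))


# Hypothetical metric path and value, only to show the wire format
print(graphite_line("freenas.cpu.percent-user", 12.5, 1535328000), end="")
# freenas.cpu.percent-user 12.5 1535328000
```

To poke the listener once InfluxDB is restarted, something like `send_graphite("influxdb.local", graphite_line("test.metric", 1))` would do it, with `influxdb.local` swapped for your own (hypothetical here) hostname.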

Next we need to restart InfluxDB:

sudo systemctl restart influxdb

InfluxDB should be ready to receive data now.

Enabling FreeNAS Remote Monitoring

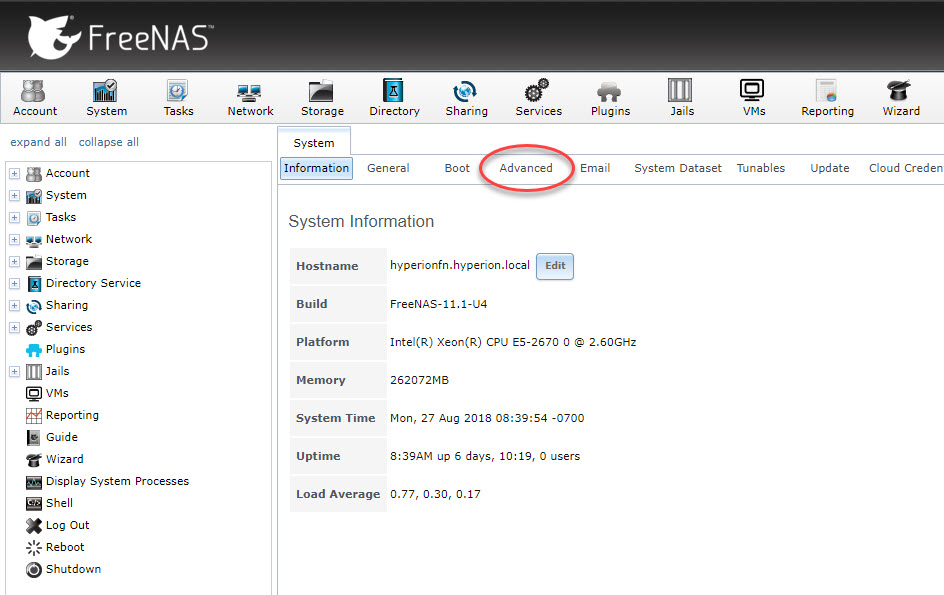

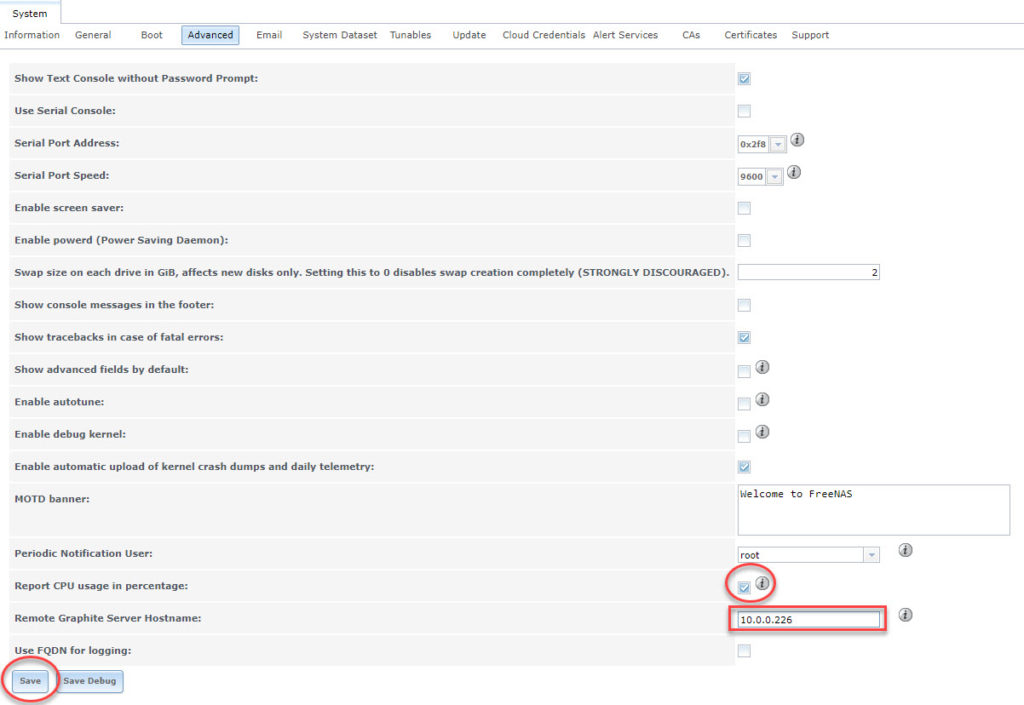

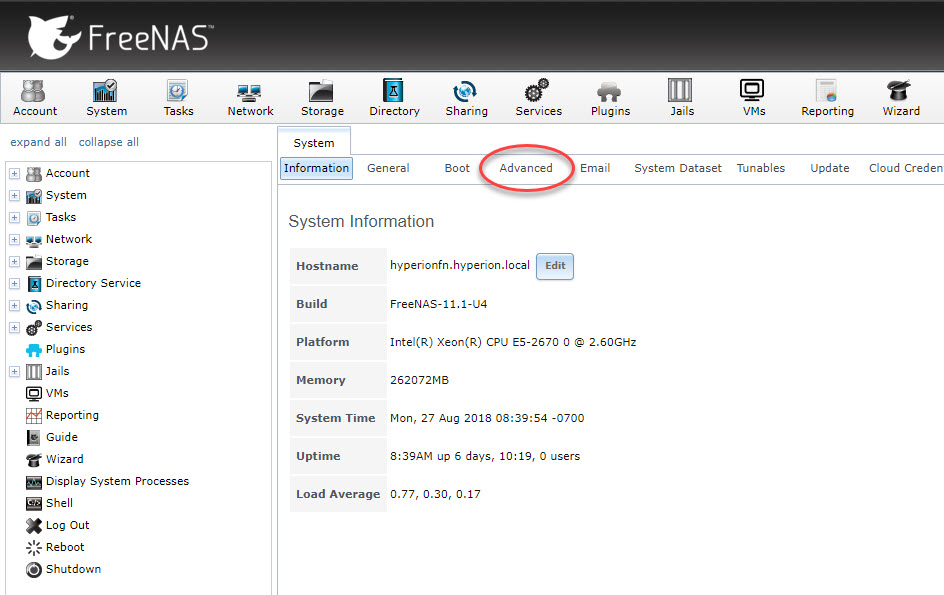

Log into the FreeNAS web interface and click on the Advanced tab:

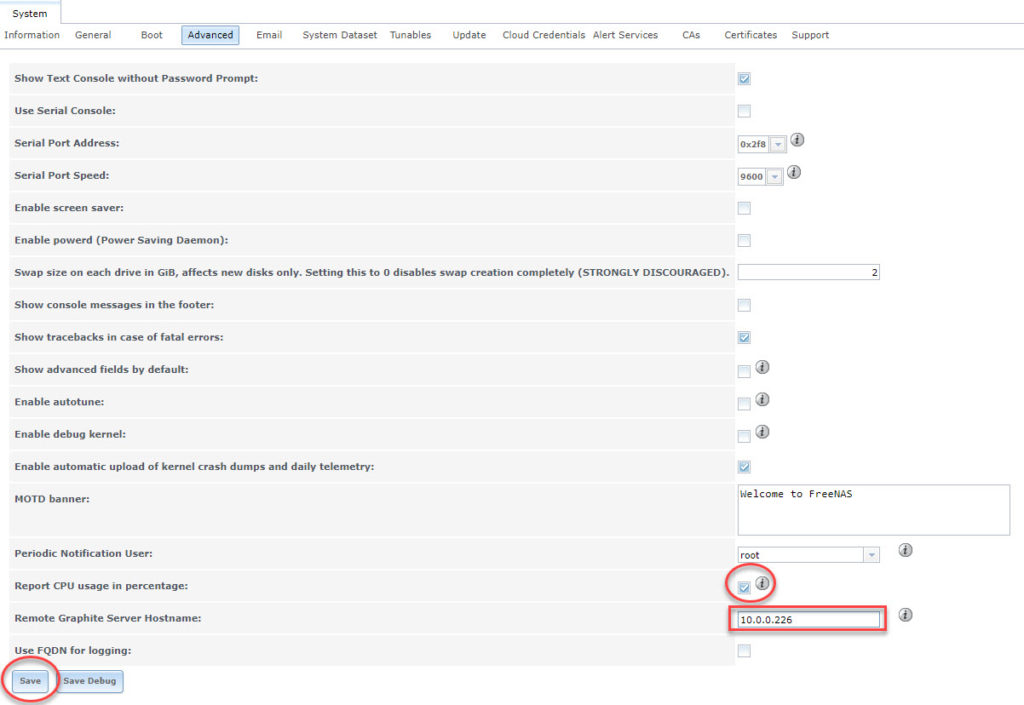

Now we simply check the box that reports CPU utilization as a percent and enter either the FQDN or IP address of our InfluxDB server and click Save:

Once the save has completed, FreeNAS should start logging to your InfluxDB database. Now we can start visualizing things with Grafana!

FreeNAS and Grafana

Adding the Data Source

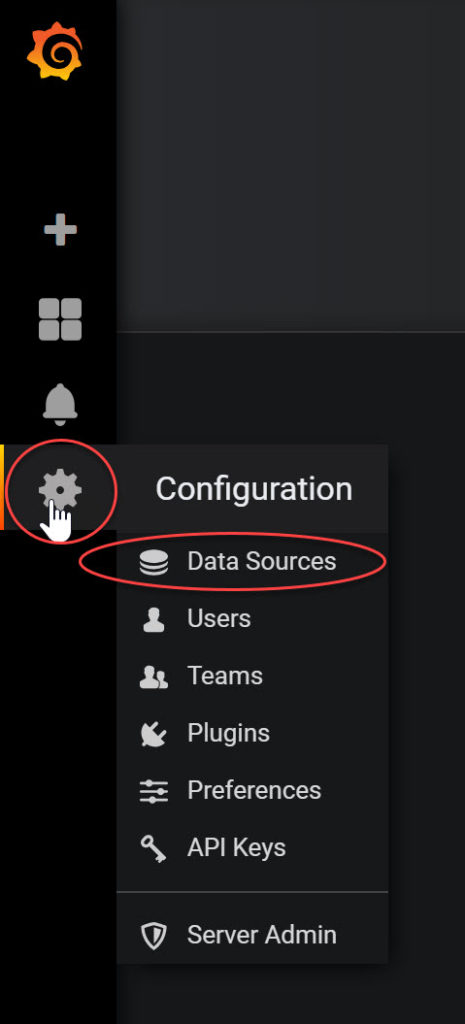

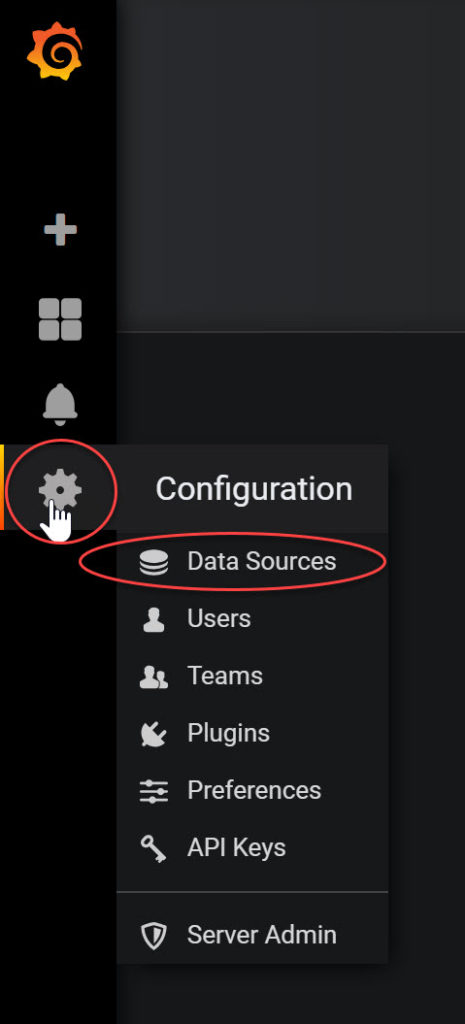

Before we can start to look at all of our statistics, we need to set up our new data source in Grafana. In Grafana, hover over the settings icon on the left menu and click on data sources:

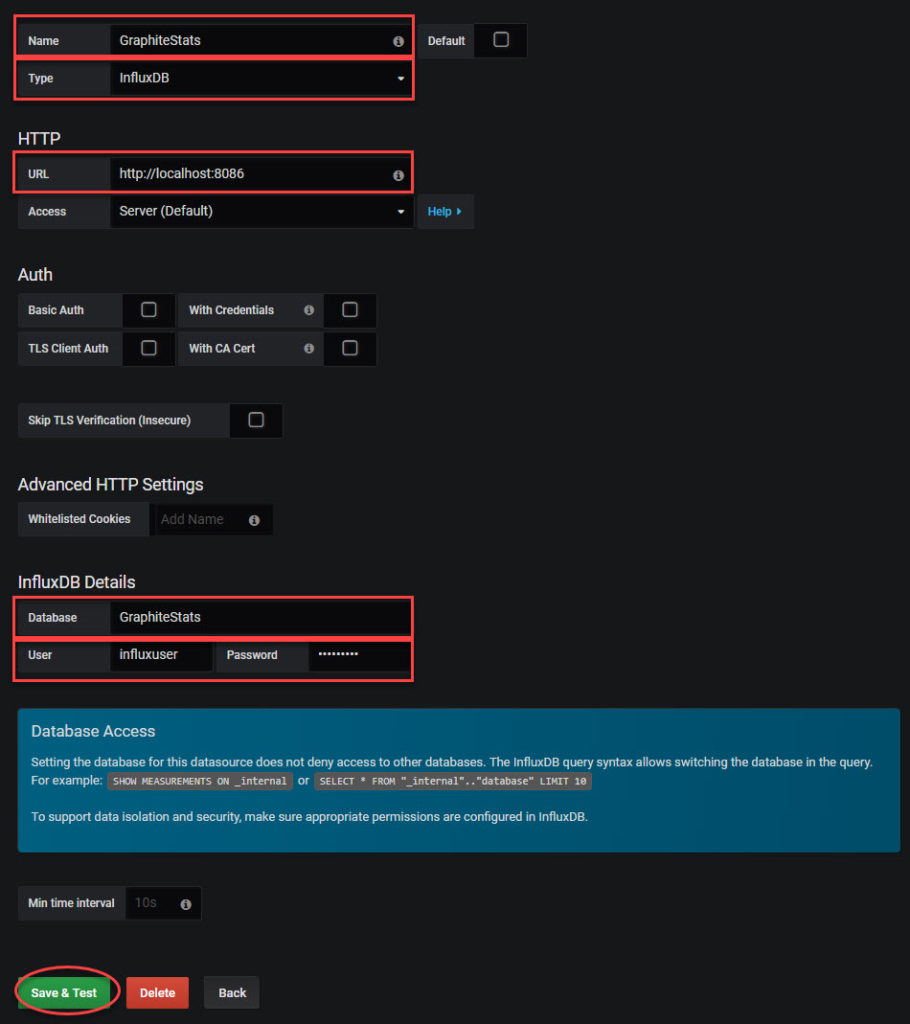

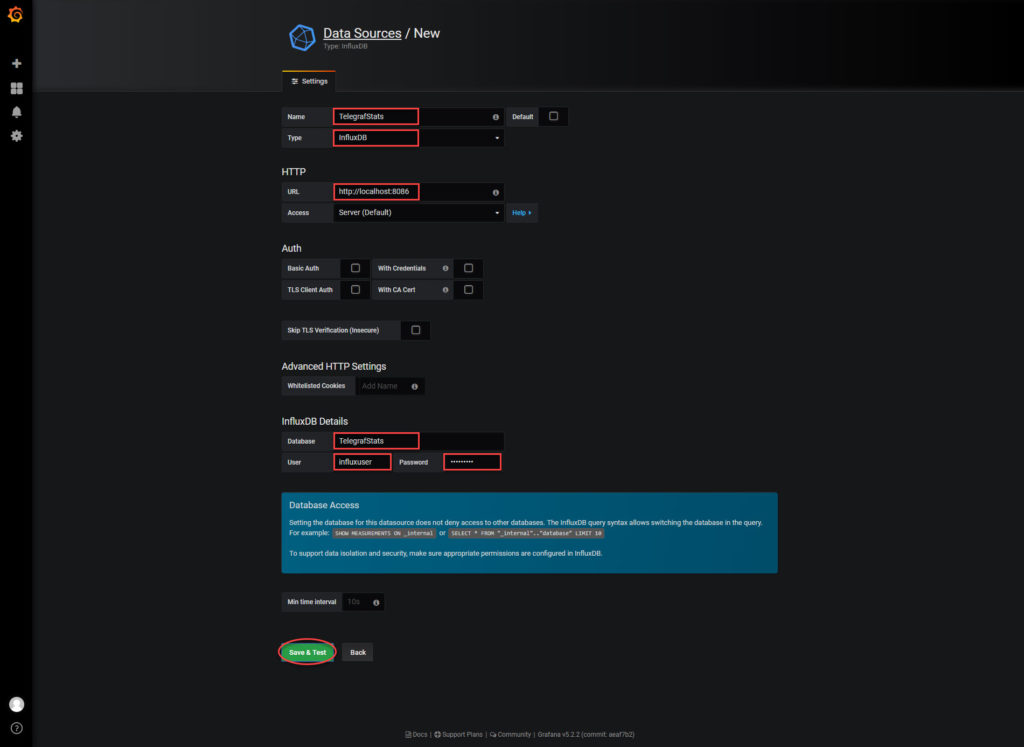

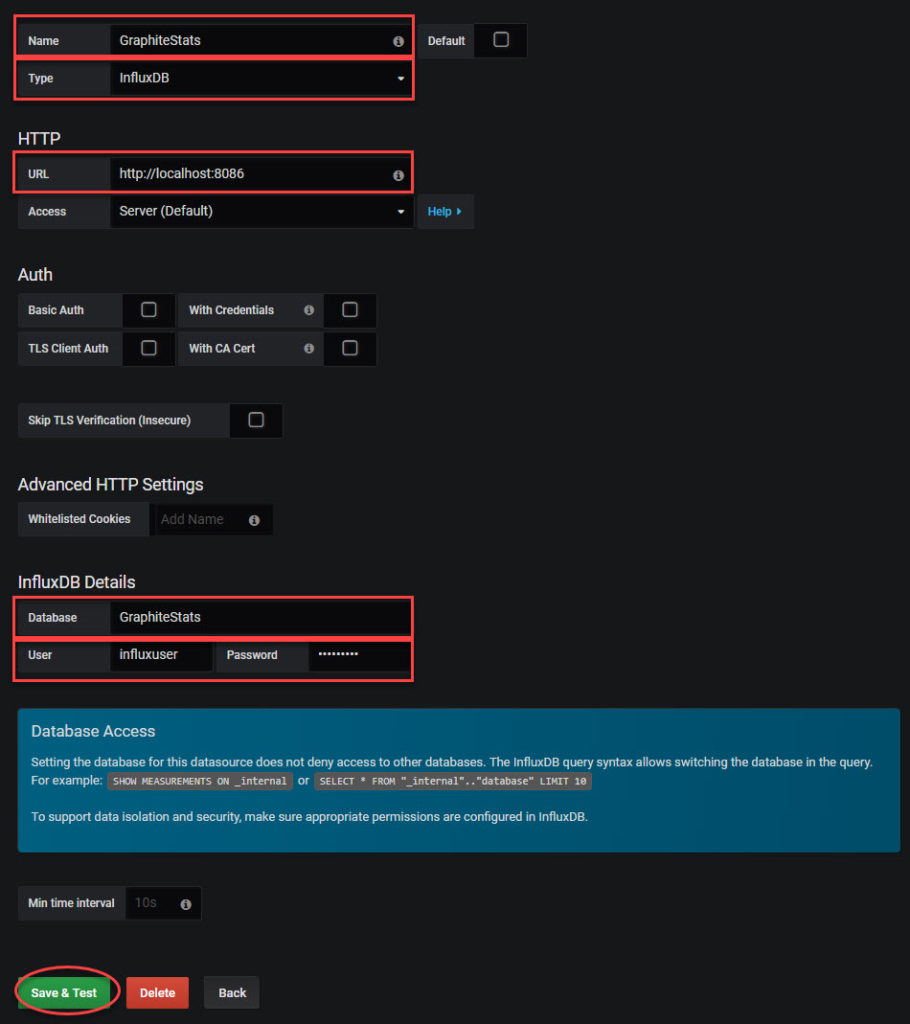

Next click the Add Data Source button and enter the name, database type, URL, database name, username, and password and click Save & Test:

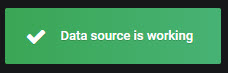

Assuming everything went well, you should see this:

Finally…we can start putting together some graphs.

CPU Usage

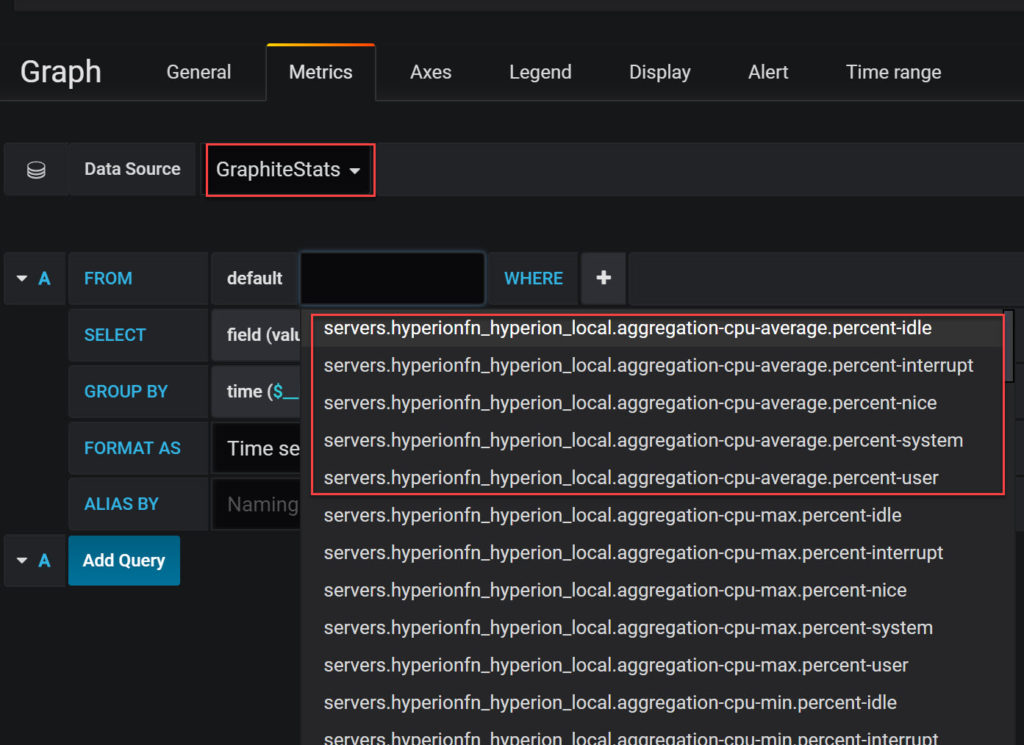

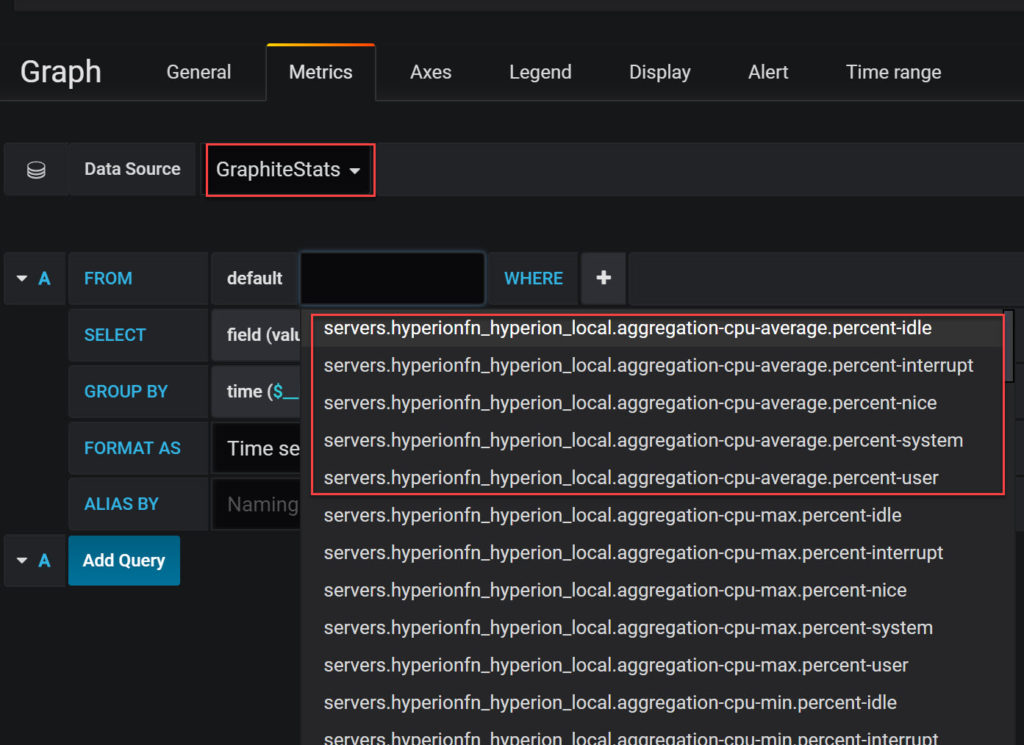

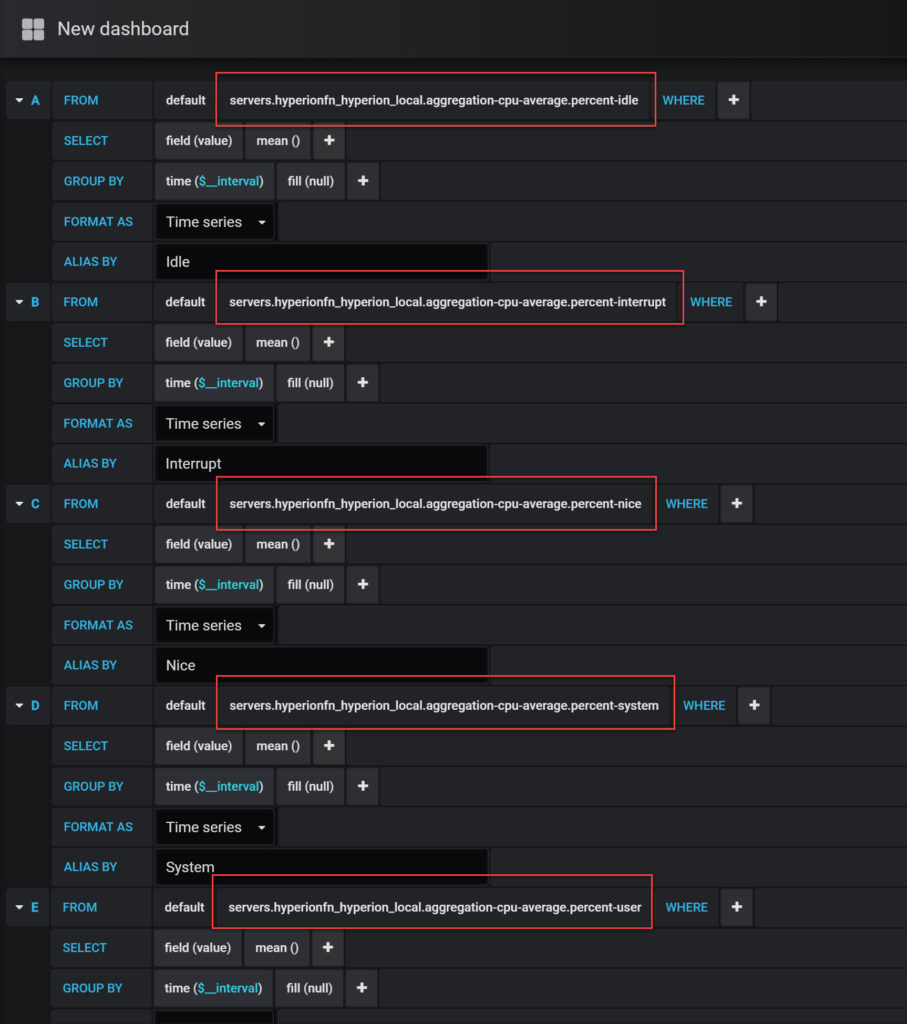

We’ll start with something basic, like CPU usage. Because we checked the percentage box while configuring FreeNAS, this should be pretty straightforward. We’ll create a new dashboard and graph and start off by selecting our new data source and then clicking Select Measurement:

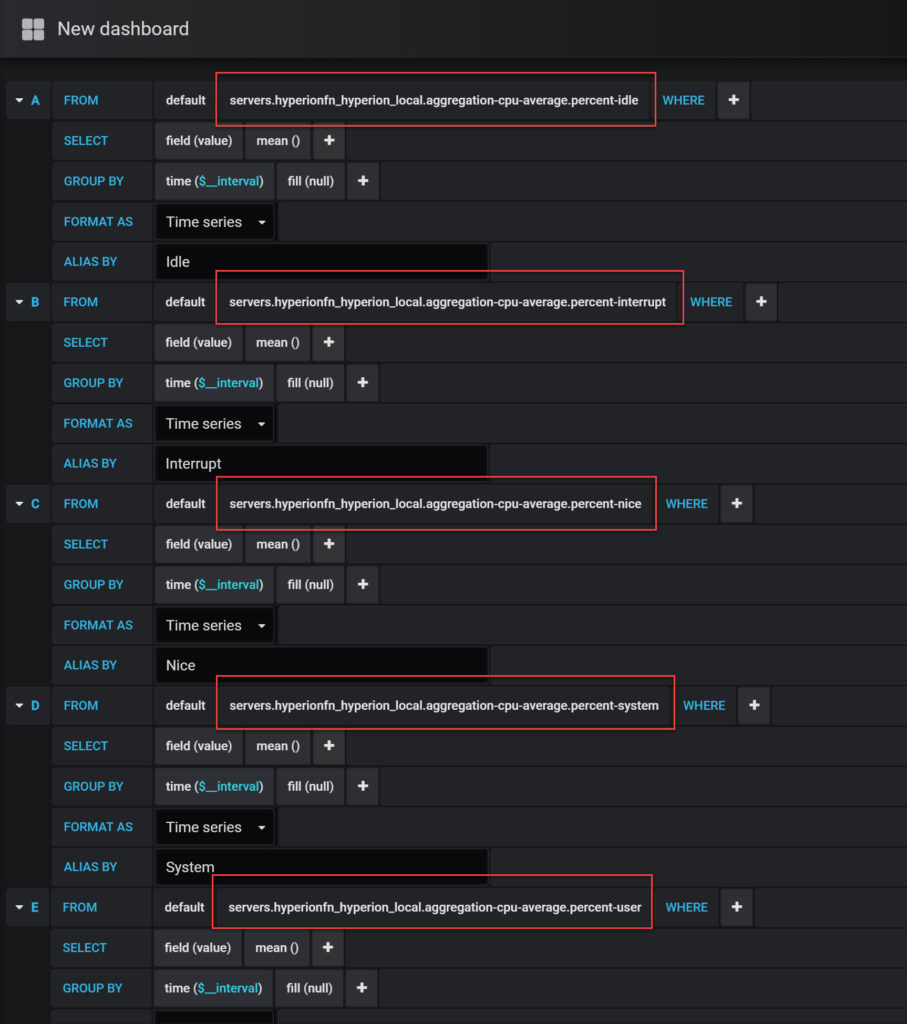

The good news is that we are starting with our aggregate CPU usage. The bad news is that this list is HUGE. So huge, in fact, that it doesn’t even fit in the box. This means that as we look for things beyond our initial CPU metrics, we have to search to find them. Fun… But let’s get started by adding all five of our CPU average metrics to our graph:

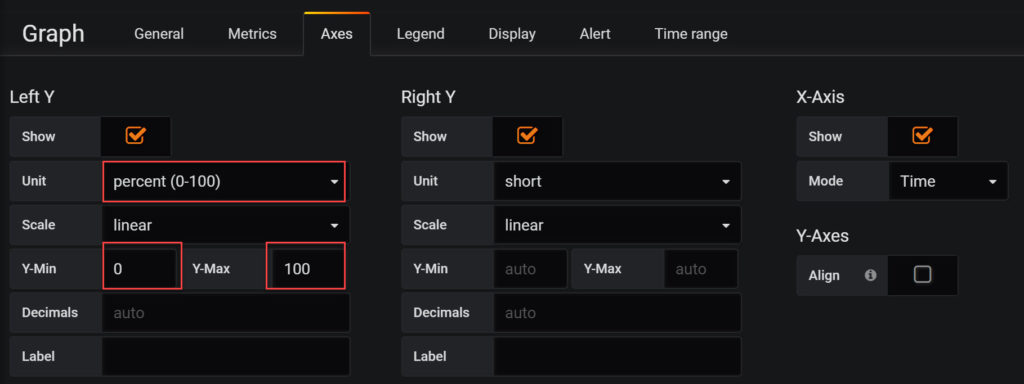

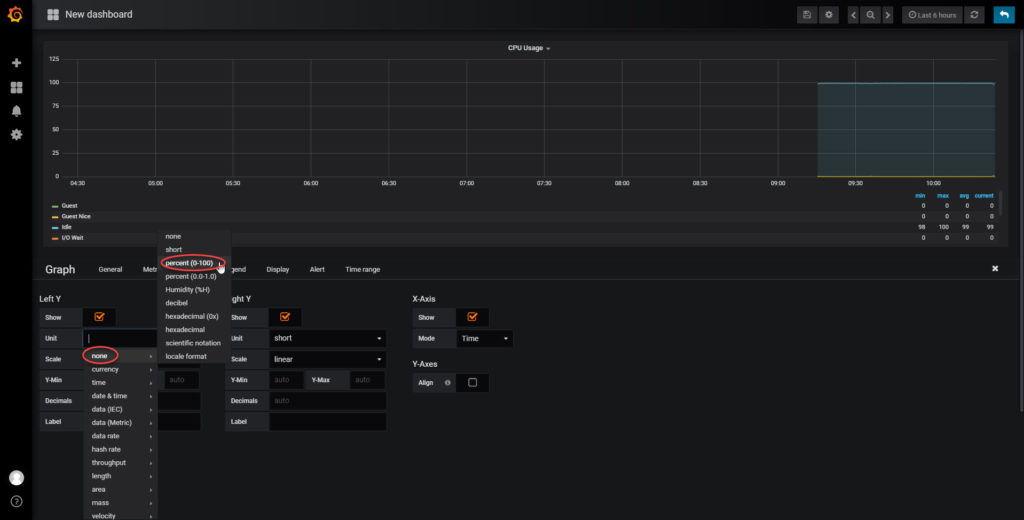

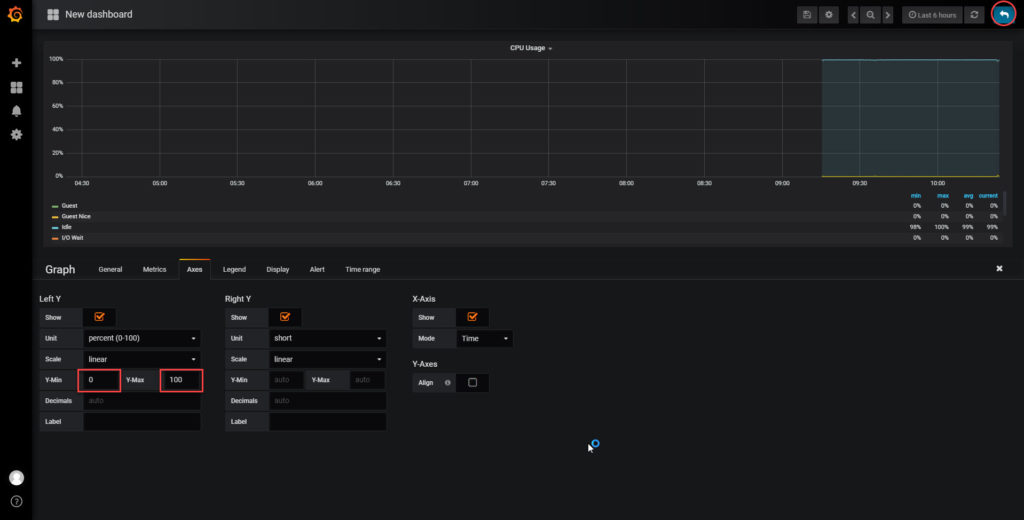

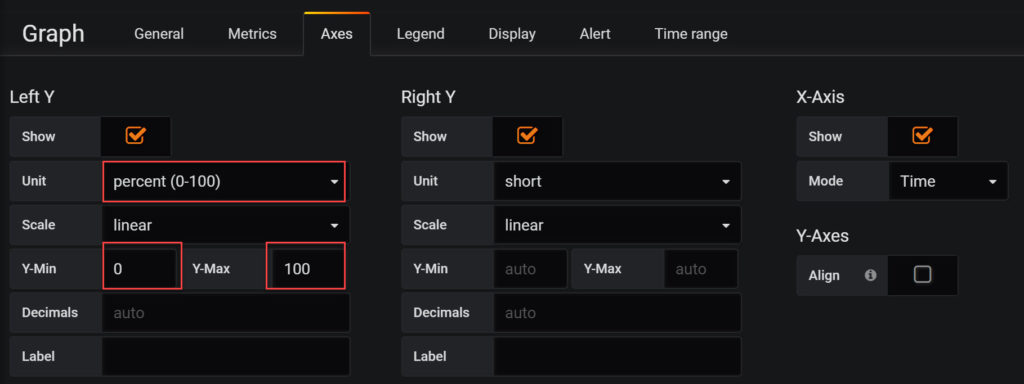

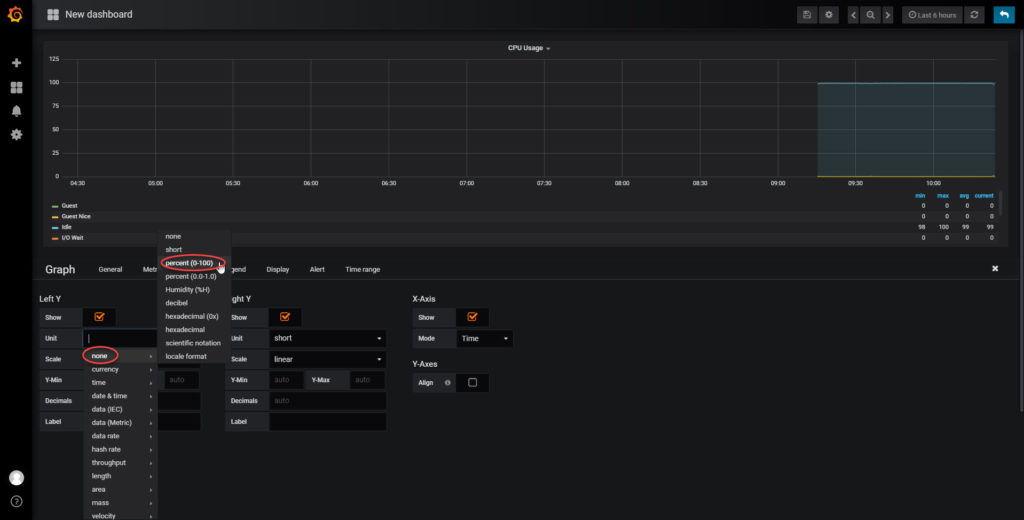

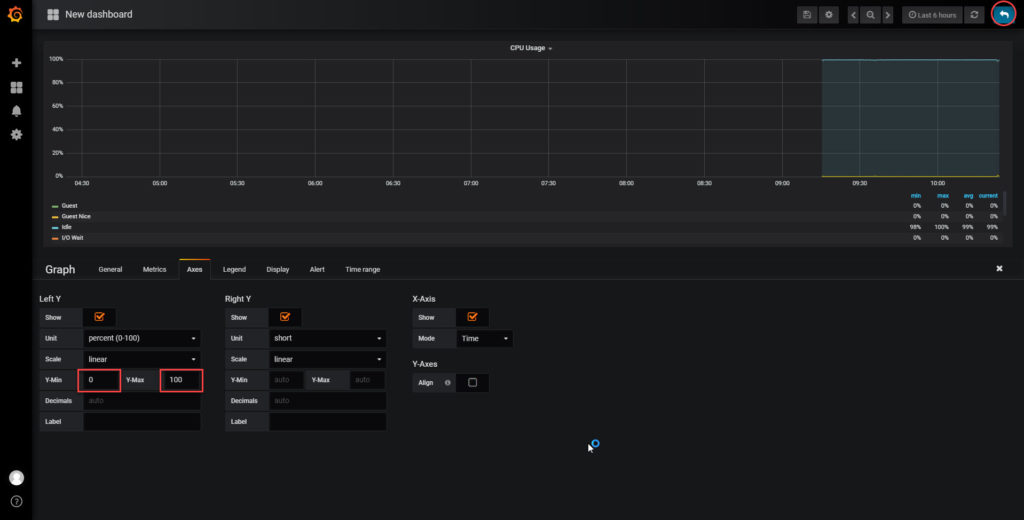

We also need to adjust our Axis settings to match up with our data:

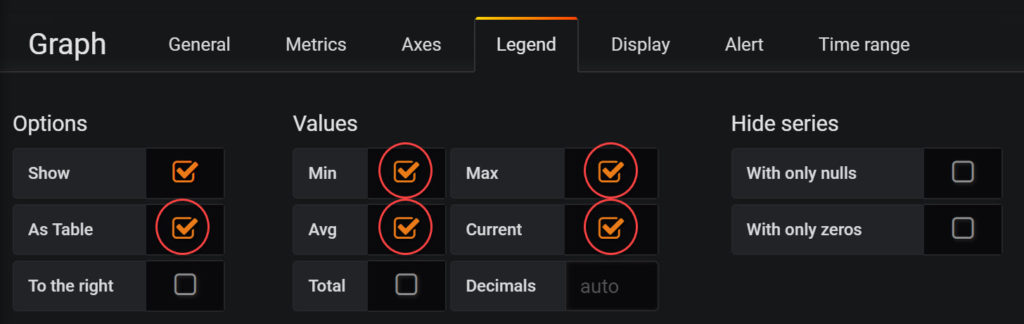

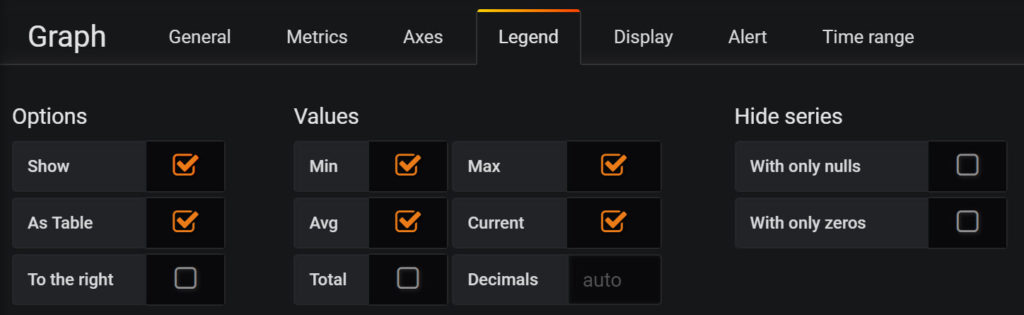

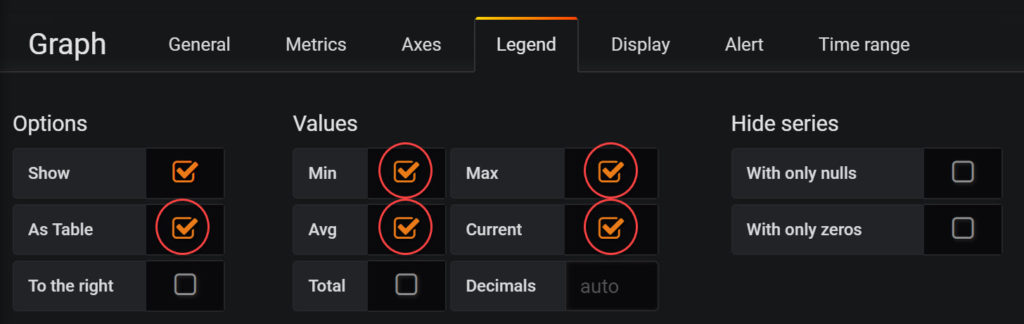

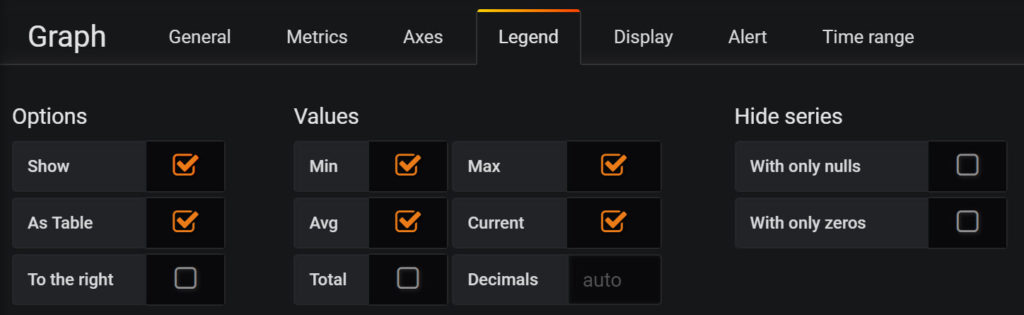

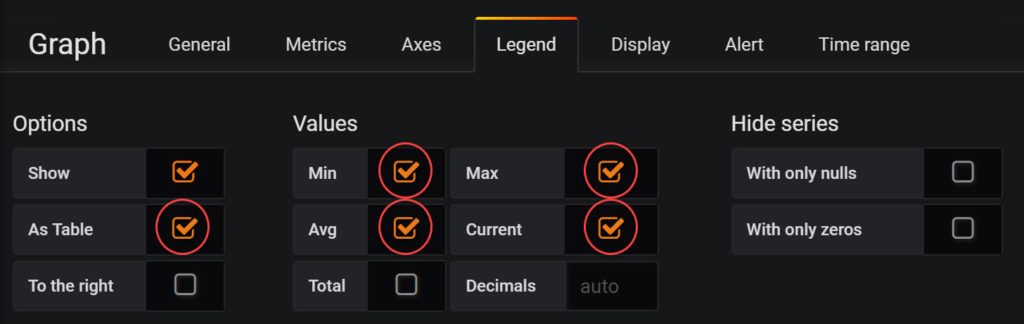

Now we just need to set up our legend. This is optional, but I really like the table look:

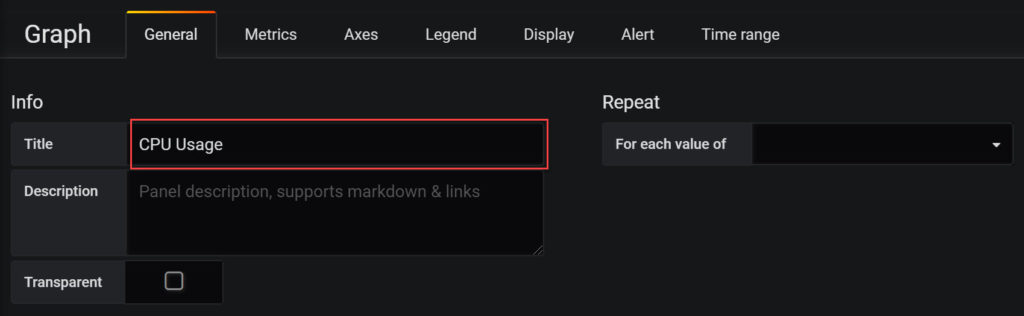

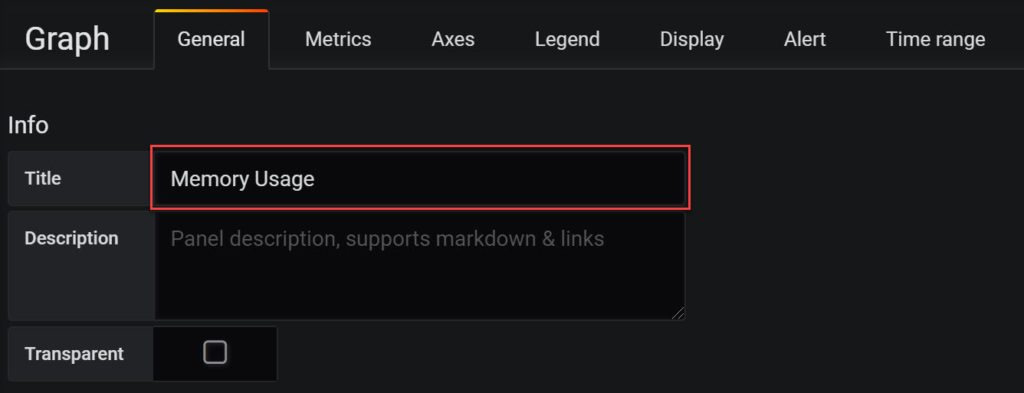

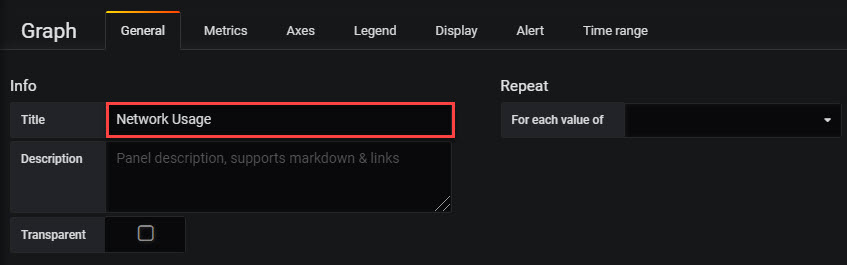

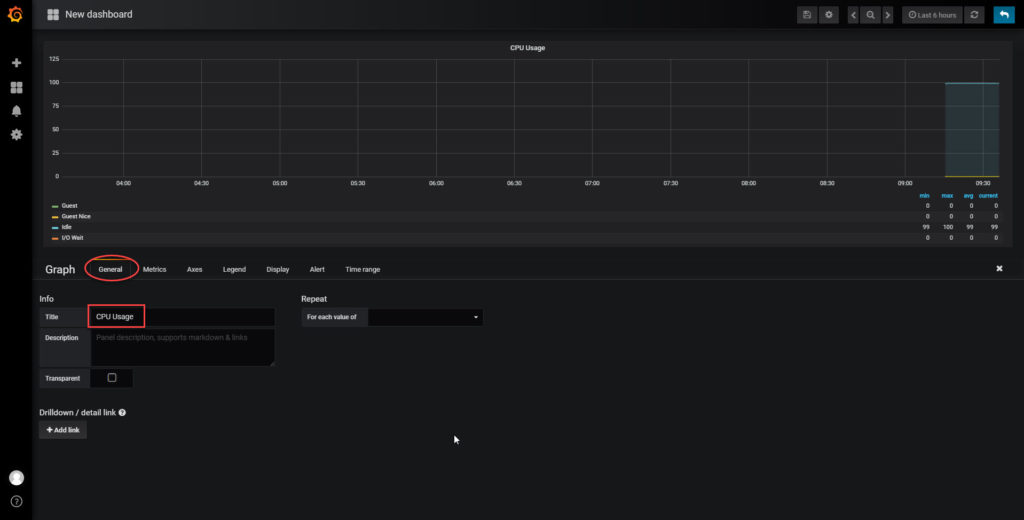

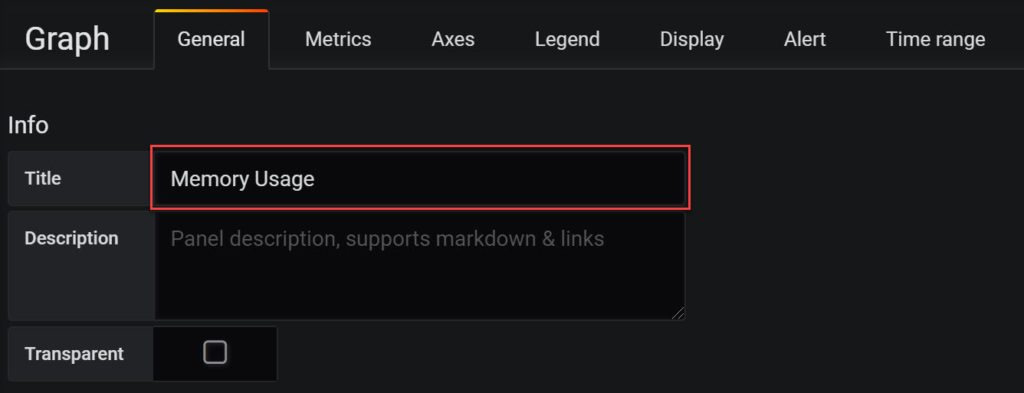

Finally, we’ll make sure that we have a nice name for our graph:

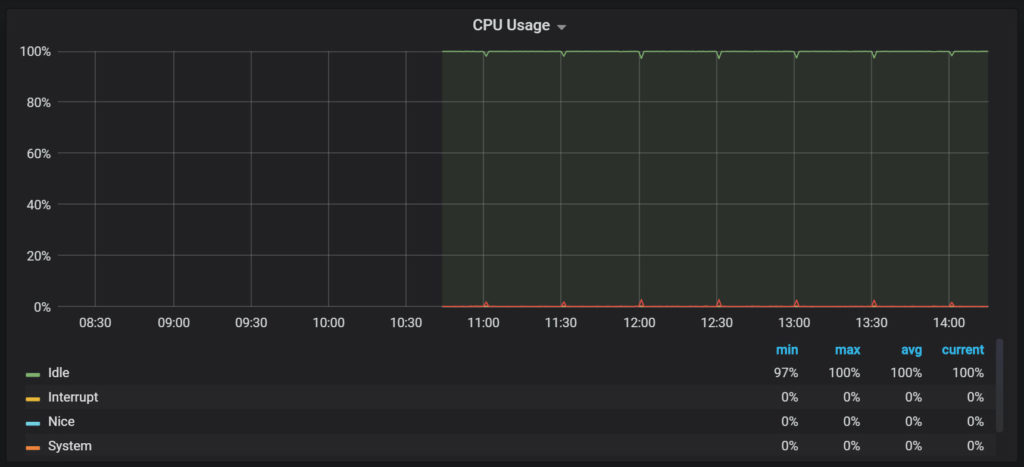

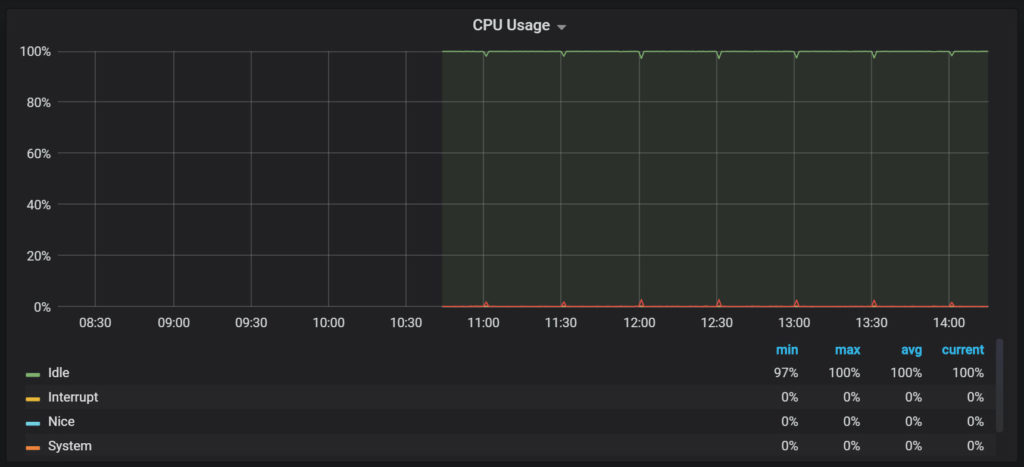

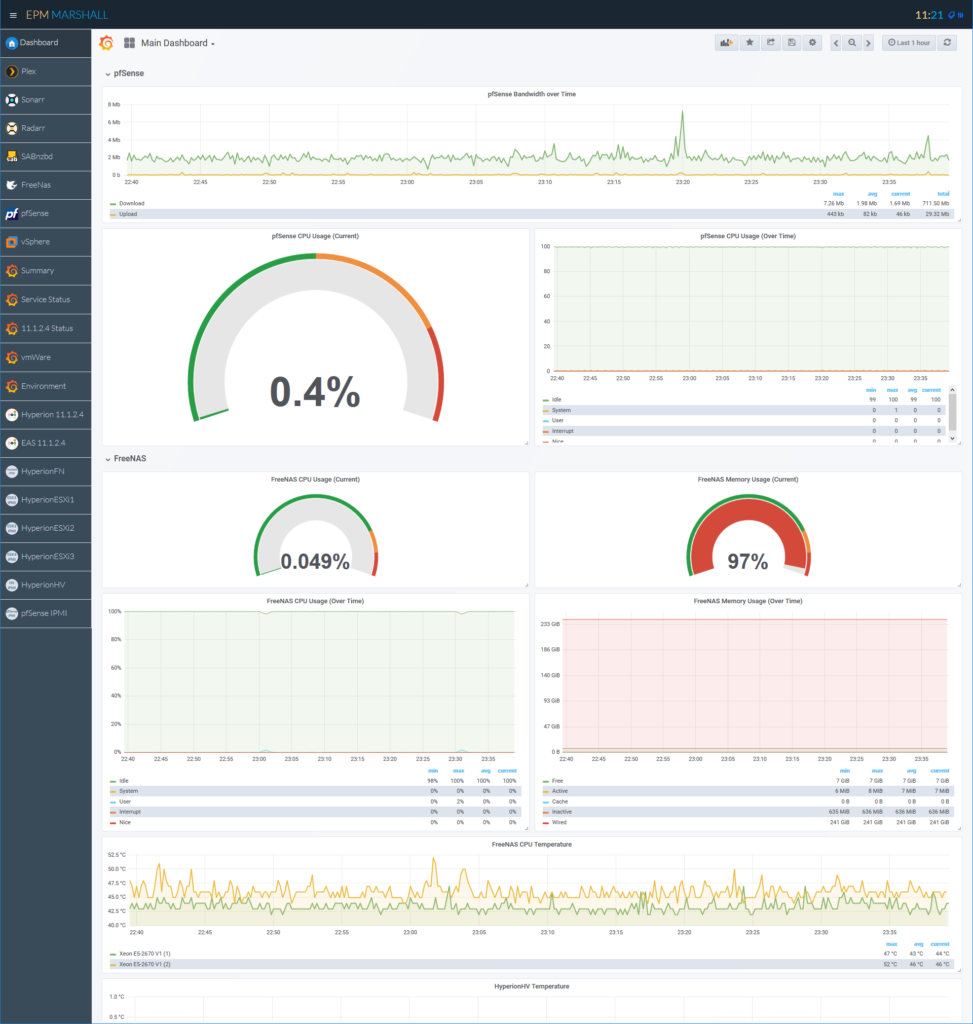

This should leave us with a nice looking CPU graph like this:

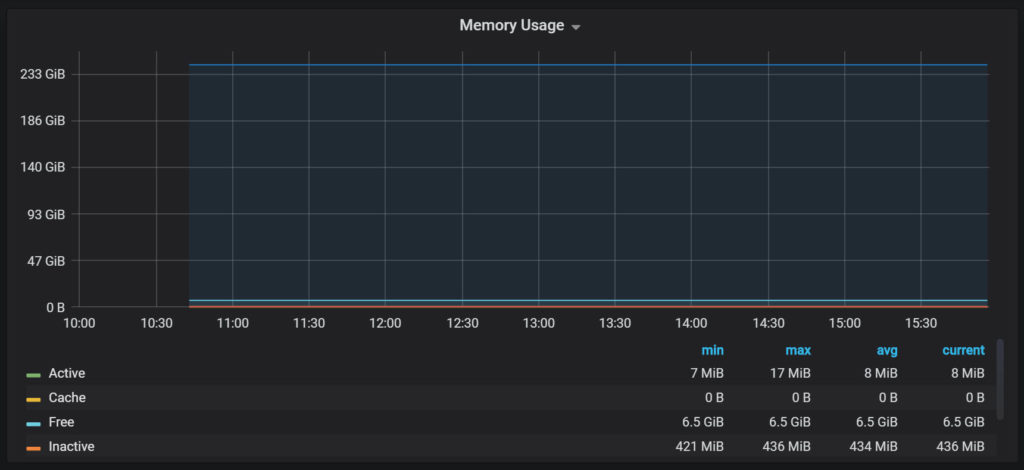

Memory Usage

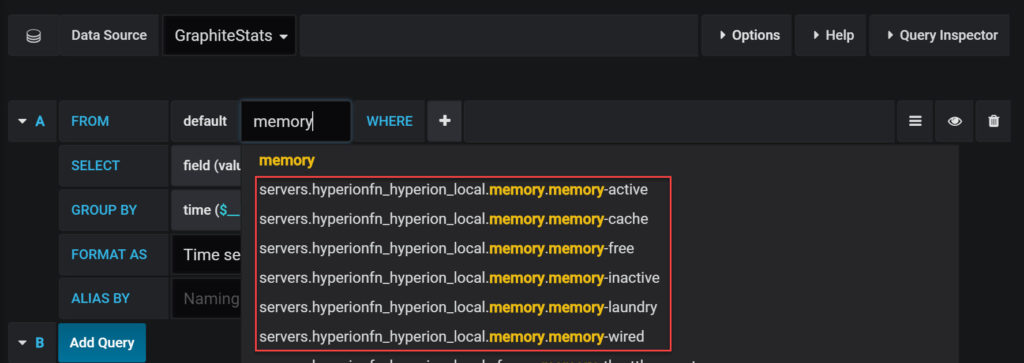

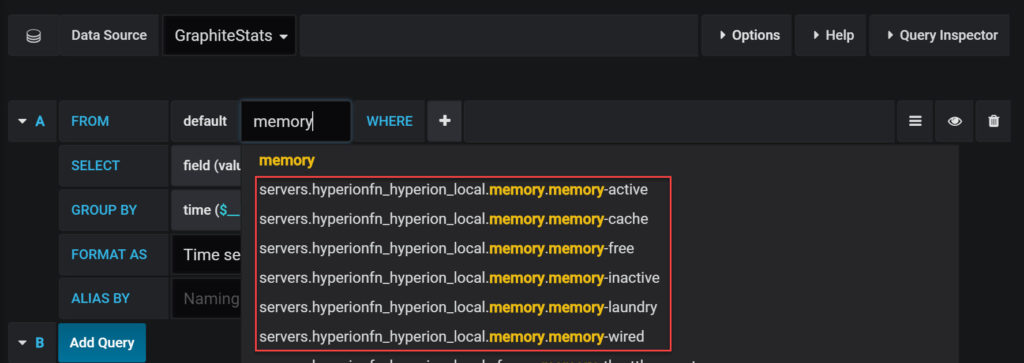

Next up, we have memory usage. This time we have to search for our metric, because as I mentioned, the list is too long to fit:

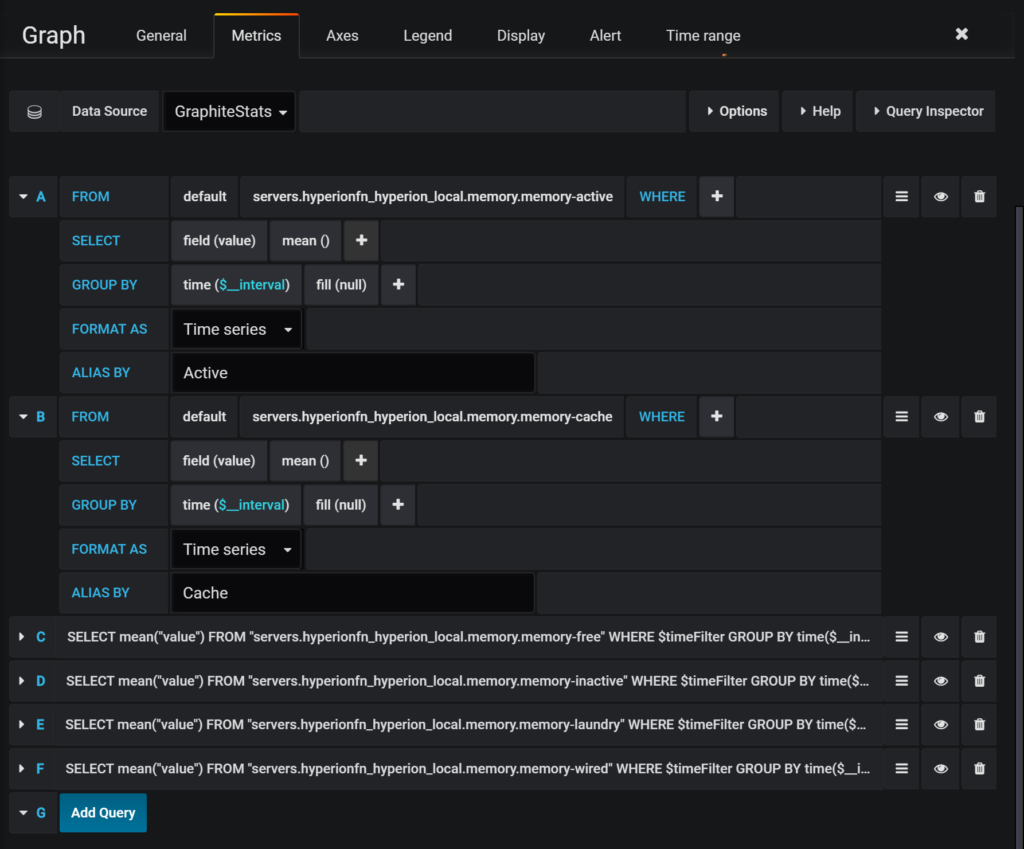

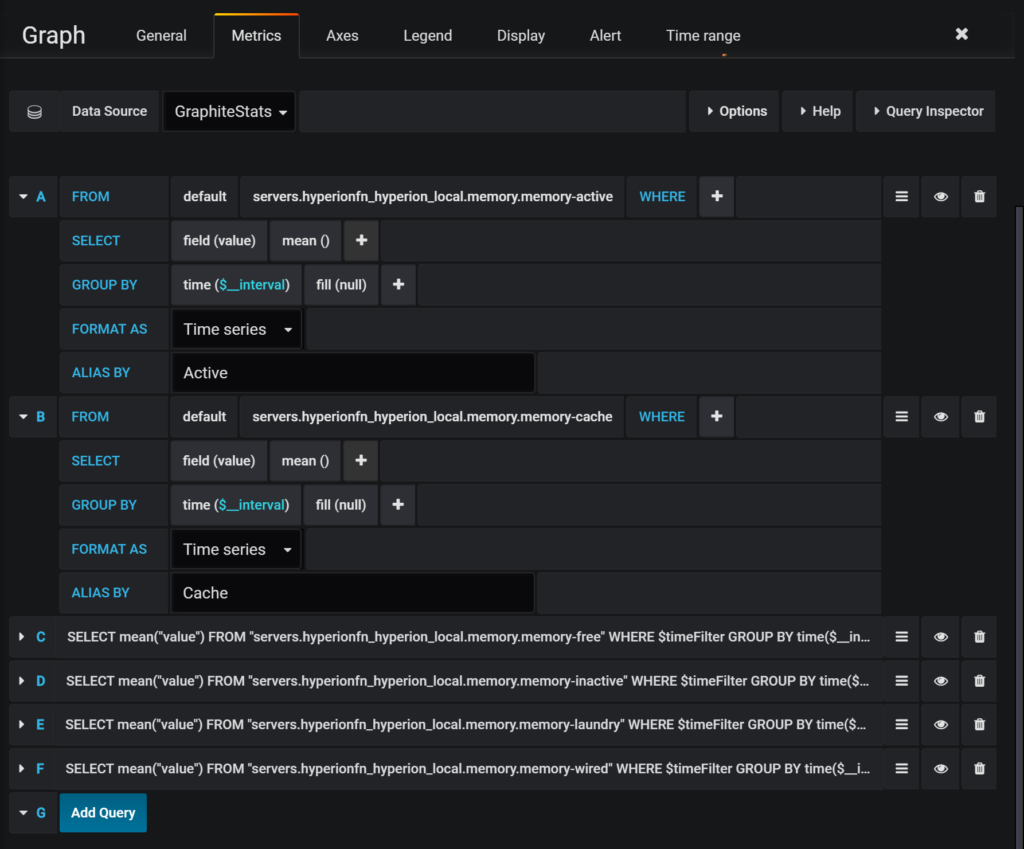

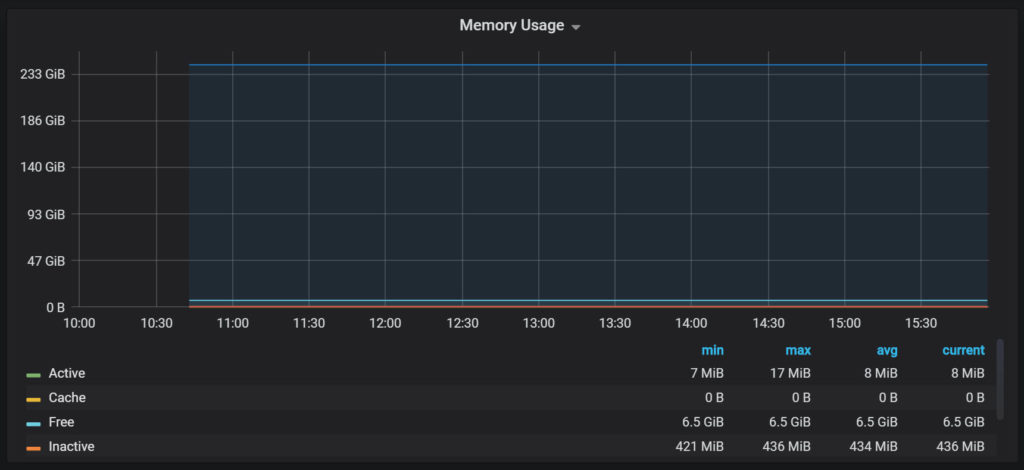

We’ll add all of the memory metrics until it looks something like this:

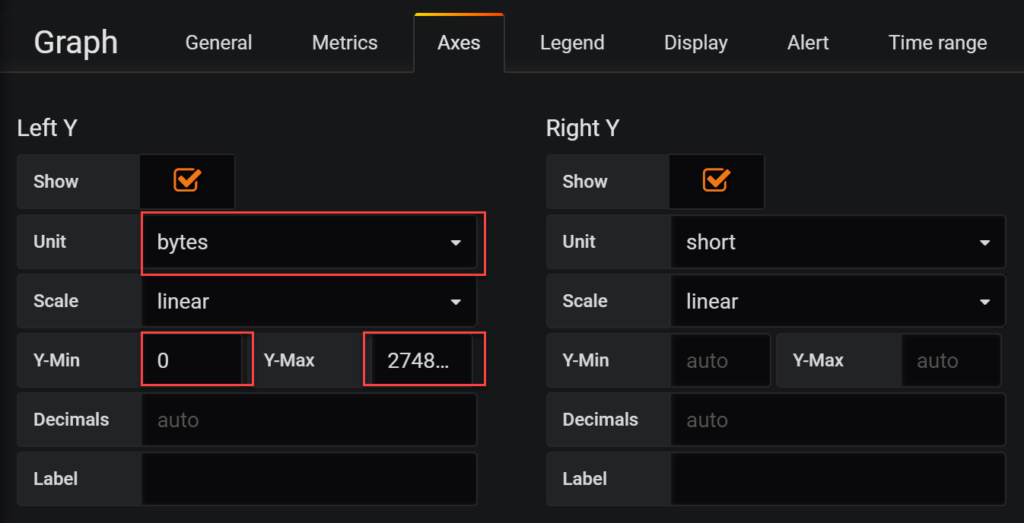

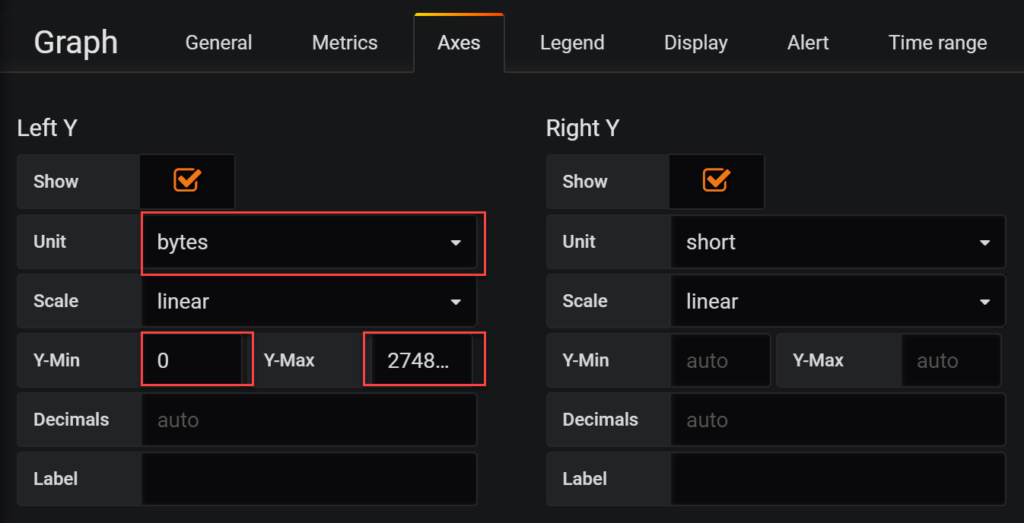

As with our CPU usage, we’ll adjust our Axis settings. This time we need to change the Unit to bytes from the IEC menu and enter a range. Our range will not be a simple 0 to 100 this time. Instead, we set the range from 0 to the amount of RAM in your system in bytes. So…if you have 256GB of RAM, it’s 256*1024*1024*1024 (274877906944):
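If you’d rather not do that multiplication by hand, this tiny Python sketch does the same conversion. It assumes your RAM is quoted in binary gigabytes (GiB), which matches the IEC bytes unit we selected.

```python
def gib_to_bytes(gib: int) -> int:
    """Convert binary gigabytes (GiB) to bytes: 1 GiB = 1024 * 1024 * 1024 bytes."""
    return gib * 1024 ** 3


print(gib_to_bytes(256))  # 274877906944
```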

And our legend:

Finally a name:

And here’s what we get at the end:

Network Utilization

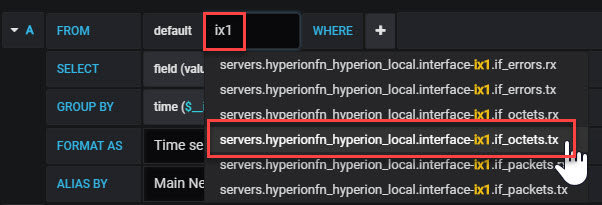

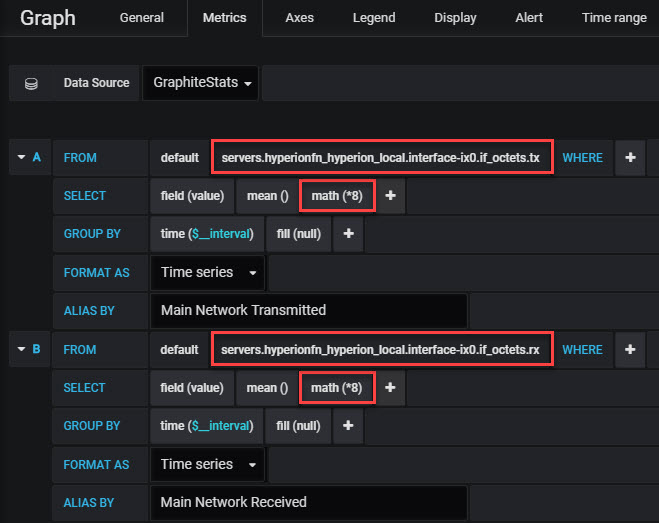

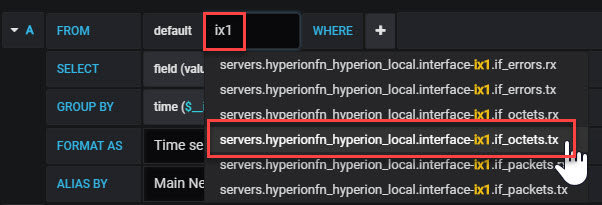

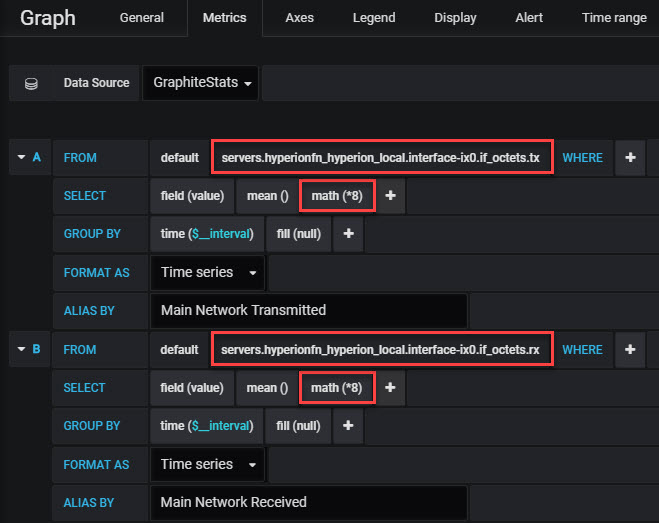

Now that we have covered CPU and memory, we can move on to network! Network is slightly more complex, so we get to use the math function! Let’s start with our new graph and search for our network interface. In my case this is ix1, my main 10Gb interface:

Once we add that, we’ll notice that the numbers aren’t quite right. This is because FreeNAS reports the value in octets. An octet is 8 bits, which is a byte, while the FreeNAS network reports display bits. So, we need to multiply the number by 8 to arrive at an accurate number. We use the math function with *8 as our value. We can also add our rx value while we are at it:
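Here’s the same conversion as a short Python sketch. It assumes the raw if_octets values count bytes (one octet is 8 bits), so multiplying by 8 yields bits; the sample reading is hypothetical.

```python
def octets_to_bits(octets_per_second: float) -> float:
    """Convert octets (bytes) per second to bits per second; one octet is 8 bits."""
    return octets_per_second * 8


# Hypothetical reading of 125,000,000 octets/s on ix1, i.e. roughly 1 Gb/s
print(octets_to_bits(125_000_000))  # 1000000000
```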

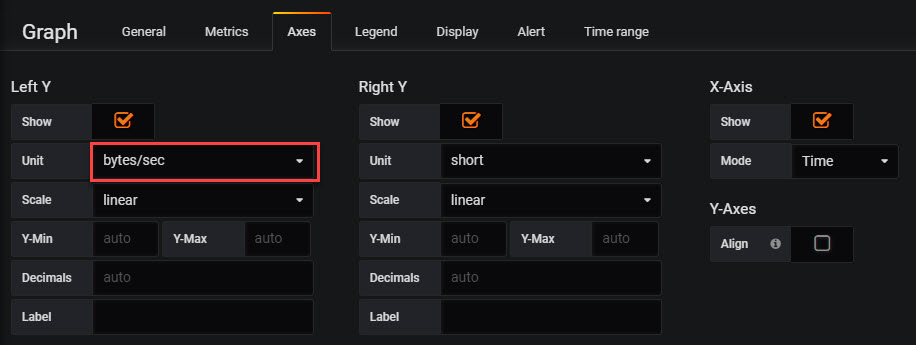

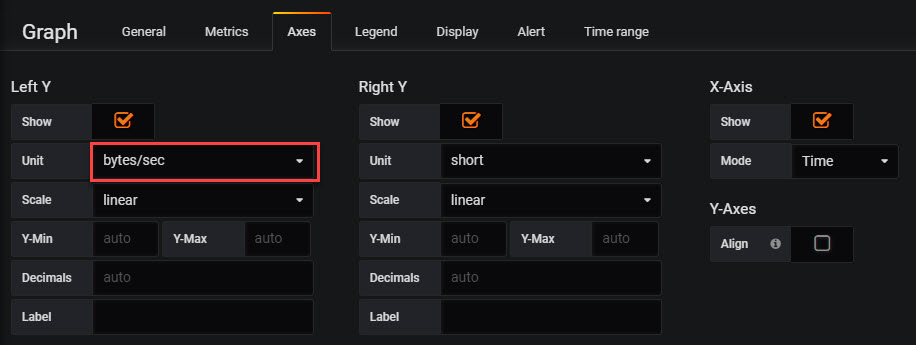

Now our math should look good, and the numbers should match the FreeNAS networking reports. Since we multiplied by 8, we need to change our Axis settings to bits per second:

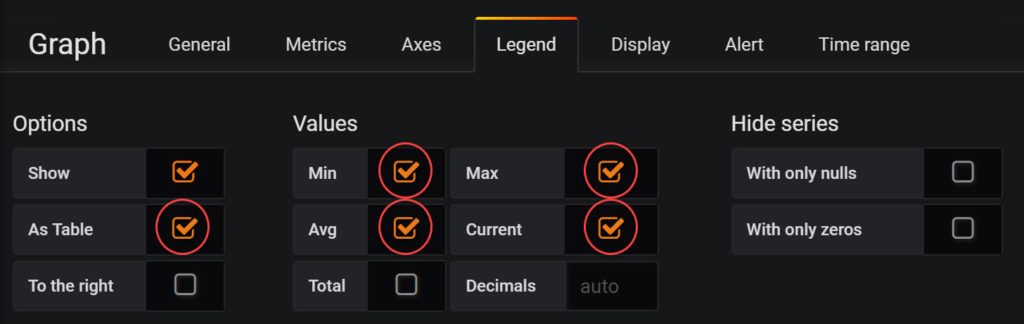

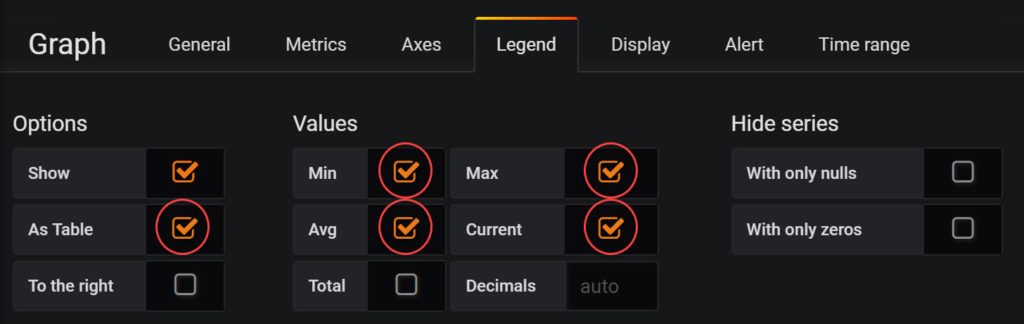

And we need our table (again optional if you aren’t interested):

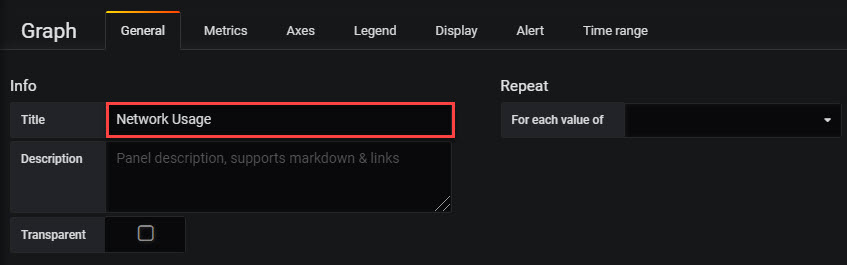

And finally a nice name for our network graph:

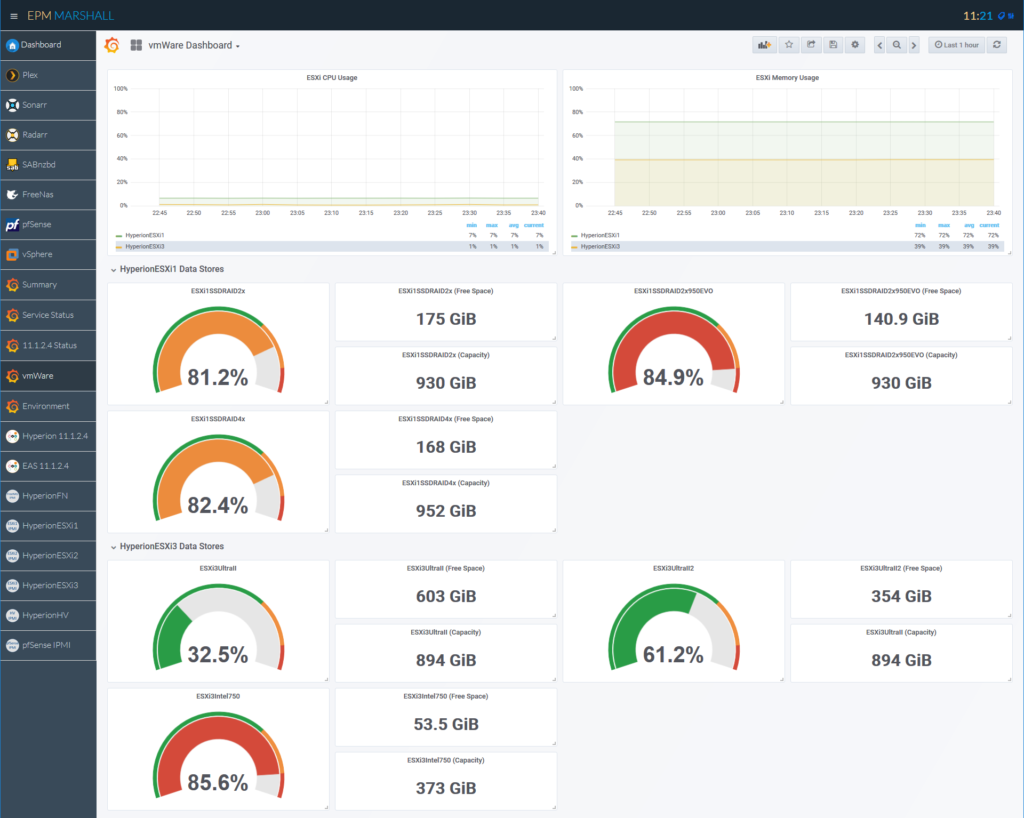

Disk Usage

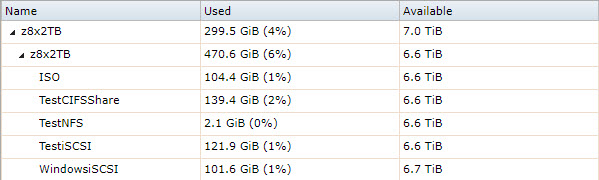

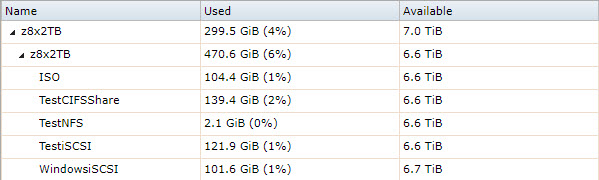

Disk usage is a bit tricky in FreeNAS. Why? A few reasons, actually. One issue is the way that FreeNAS reports usage. For instance, if I have a volume that has a dataset, and that dataset has multiple shares, free disk space is reported the same for each share. Or, even worse, if I have a volume with multiple datasets and volumes, the free space may be reported correctly for some, but not for others. Here’s my storage configuration for one of my volumes:

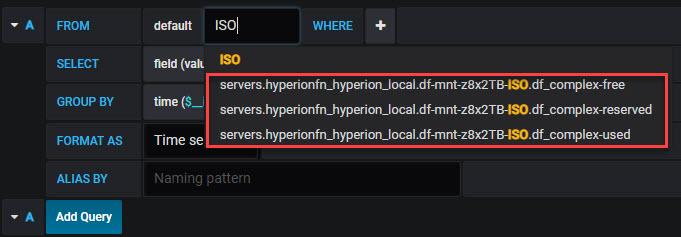

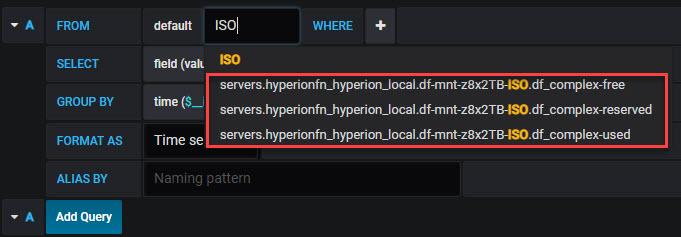

Let’s start by looking at each of these in Grafana so that we can see what the numbers tell us. For ISO, we see the following options:

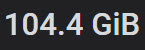

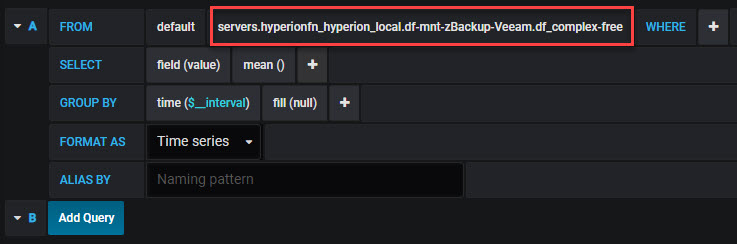

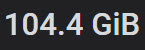

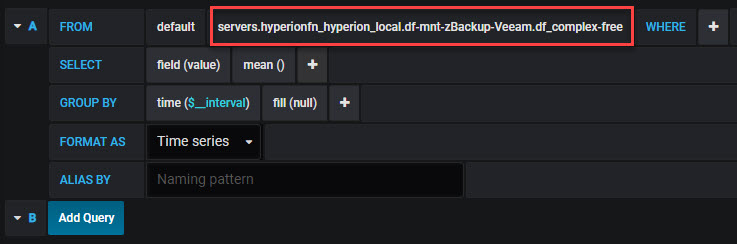

So far, this looks great: my ISO dataset has free, reserved, and used metrics. Let’s look at the numbers and compare them to the above. We’ll start by looking at df_complex-free using bytes (from the IEC menu) for our units:

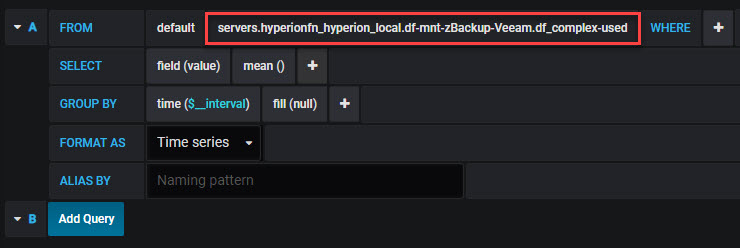

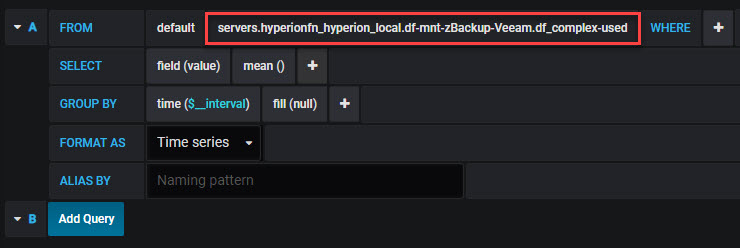

Perfect! This matches our available number from FreeNAS. Now let’s check out df_complex-used:

Again, perfect! This matches our used numbers exactly. So far, we are in good shape. This is true for ISO, TestCIFSShare, and TestNFS, which are all datasets. The problem is that TestiSCSI and WindowsiSCSI don’t show up at all. These two are zvols. So, from what I can tell, zvols are not reported by FreeNAS for remote monitoring. I’m hoping I’m just doing something wrong, but I’ve looked everywhere and I can’t find any stats for a zvol.

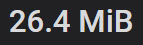

Let’s assume for a moment that we just wanted to see the aggregate of all of our datasets on a given volume. Well…that doesn’t work either. Why? Two reasons. First, in Grafana (and InfluxDB), I can’t add metrics together. That’s a bit of a pain, but surely there’s an aggregate value. Second, that aggregate value doesn’t add up. When I looked at the value for df_complex-used for my z8x2TB dataset, I got this:

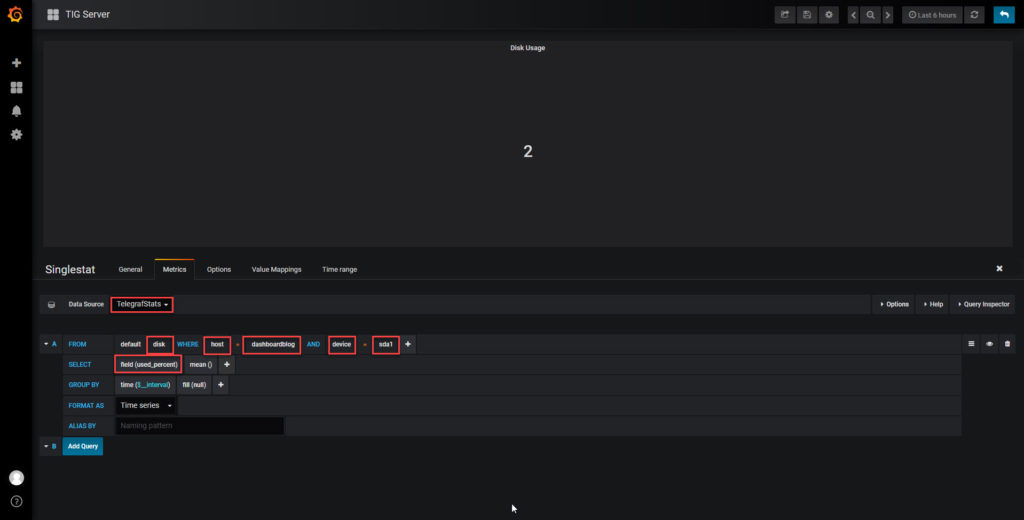

Clearly 26.4 MB does not equal 470.6GB. So now what? Great question…if anyone has any ideas, let me know, as I’d happily update this post with better information and give credit to anyone who can provide it! In the meantime, we’ll use a different share that only has a single dataset, so that we can avoid these annoying math and reporting issues. My Veeam backup share is a volume with a single dataset. Let’s start by creating a singlestat and pulling in this metric:

This should give us the amount of free storage available in bytes. This is likely a giant number. Copy and paste that number somewhere (I chose Excel). My number is 4651271147041. Now we can switch to our used number:

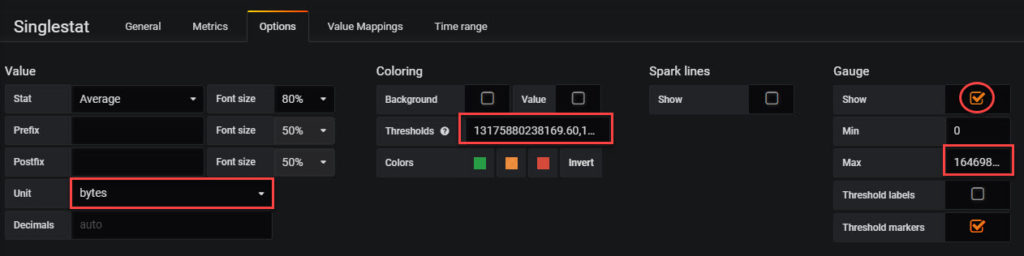

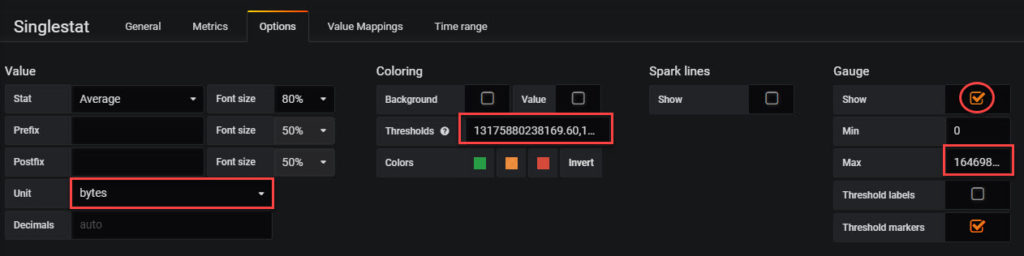

For me, this is an even bigger number: 11818579150671, which I will also copy and paste into Excel. Now I will do some simple math to add the two together, which gives a total of 16469850297712. So why did we go through that exercise in basic math? Because Grafana and InfluxDB won’t do it for us…that’s why. Now we can turn our singlestat into a gauge. We’ll start with our used storage number from above. Now we need to change our options:

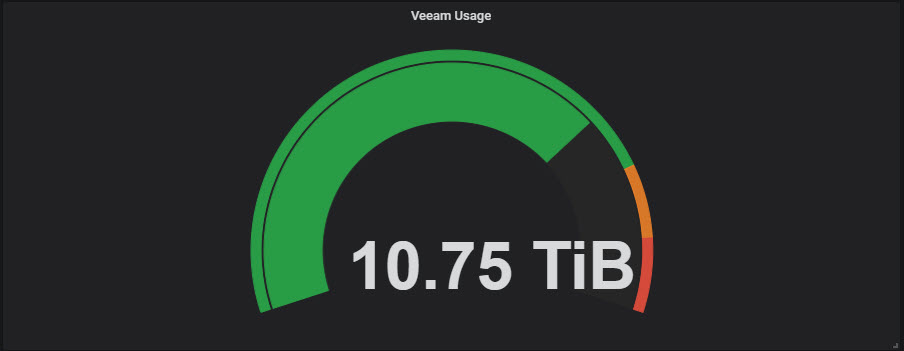

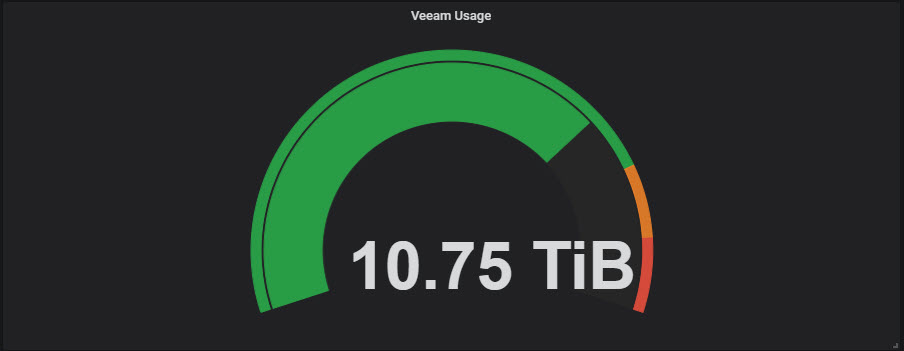

We start by checking the Show Gauge box, leave the min set to 0, and change our max to the value we calculated as our total space, which in my case is 16469850297712. We can also set thresholds. I set my thresholds to 80% and 90%. To do this, I took my 16469850297712 and multiplied it by .8 and .9. I put these two numbers together, separated by a comma, and entered them for thresholds: 13175880238169.60,14822865267940.80. Finally, I changed the unit to bytes from the IEC menu. The final result should look like this:
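Since Grafana won’t do this math for us, here’s a short Python sketch that reproduces the Excel step: it adds the free and used numbers from my Veeam dataset and derives the 80% and 90% thresholds in the comma-separated form Grafana expects. Decimal is used so the thresholds come out exactly rather than picking up floating-point noise; swap in your own numbers.

```python
from decimal import Decimal

free_bytes = Decimal("4651271147041")    # df_complex-free for the Veeam dataset
used_bytes = Decimal("11818579150671")   # df_complex-used for the same dataset

total_bytes = free_bytes + used_bytes    # the gauge max
warn, crit = (total_bytes * Decimal(pct) for pct in ("0.8", "0.9"))

print(total_bytes)                # 16469850297712
print(f"{warn:.2f},{crit:.2f}")   # 13175880238169.60,14822865267940.80
```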

Now we can see how close we are to our max along with thresholds on a nice gauge.

CPU Temperature

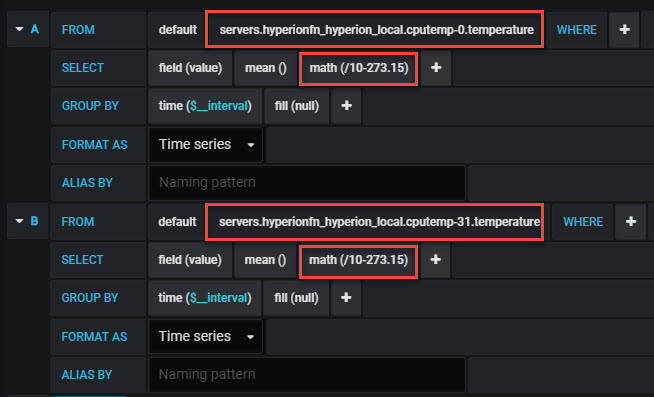

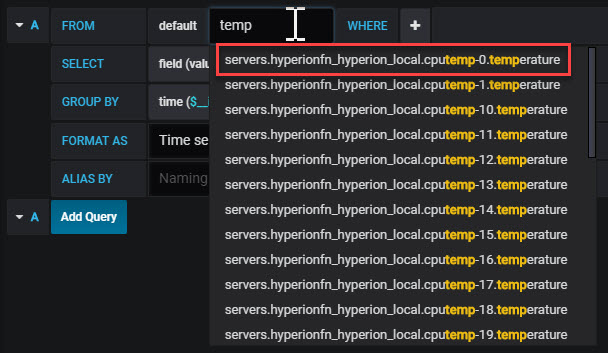

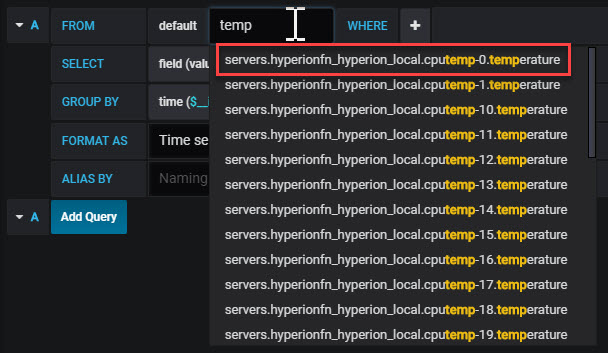

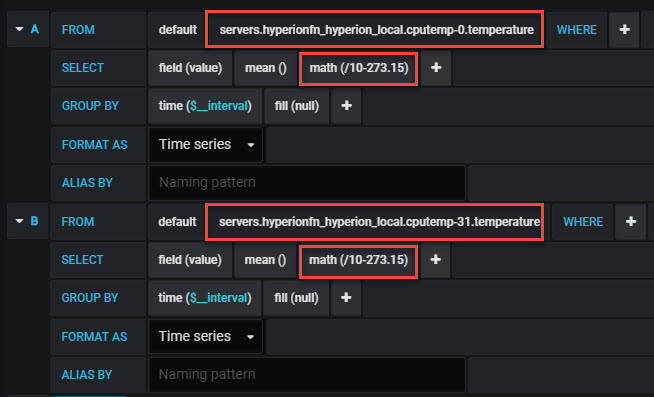

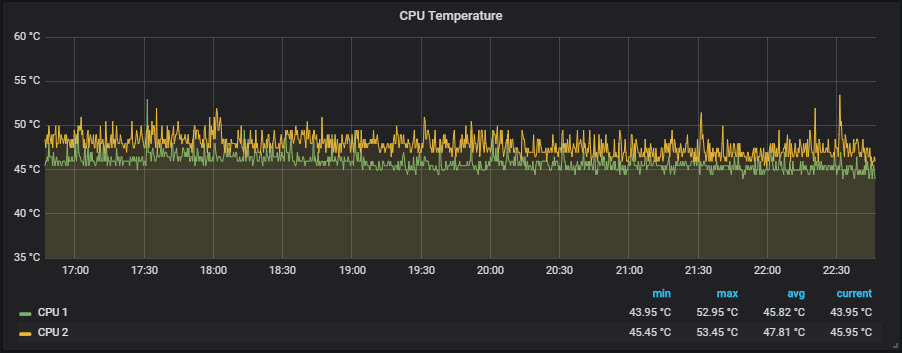

Now that we have the basics covered (CPU, RAM, network, and storage), we can move on to CPU temperatures. While we will cover temps later in an IPMI post, not everyone running FreeNAS will have the luxury of IPMI. So, we’ll take what FreeNAS gives us. If we search our metrics for temp, we’ll find that every thread of every core has its own metric. I really don’t have a desire to see every single one, so I chose to pick the first and last core (0 and 31 for me):

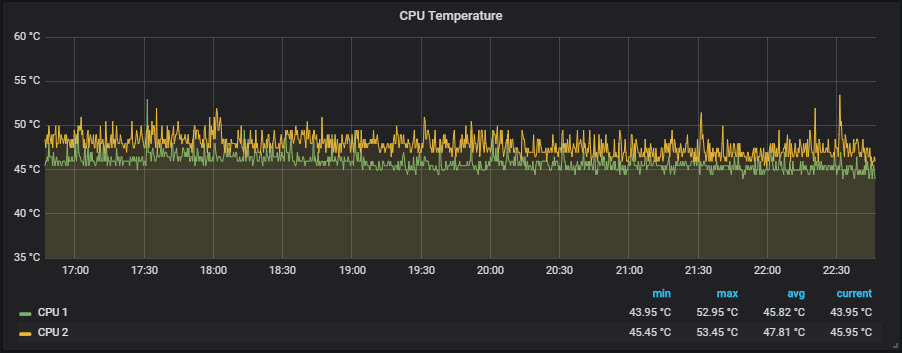

The numbers will come back pretty high, as they are in kelvin and multiplied by 10. So, we’ll use our handy math function again (/10-273.15) and we should get something like this:
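A quick Python sketch of that conversion, assuming the raw value is tenths of a kelvin as described; the sample reading is hypothetical.

```python
def decikelvin_to_celsius(raw: float) -> float:
    """Convert tenths of a kelvin to Celsius, the same as Grafana's '/10-273.15' math."""
    return raw / 10 - 273.15


# Hypothetical raw reading for core 0
print(round(decikelvin_to_celsius(3231), 2))  # 49.95
```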

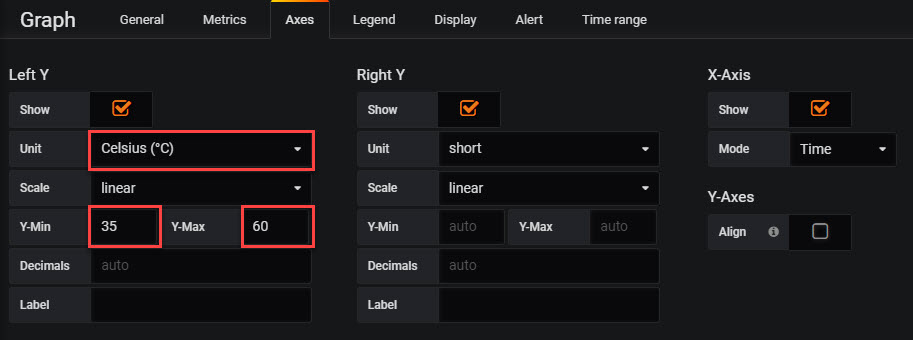

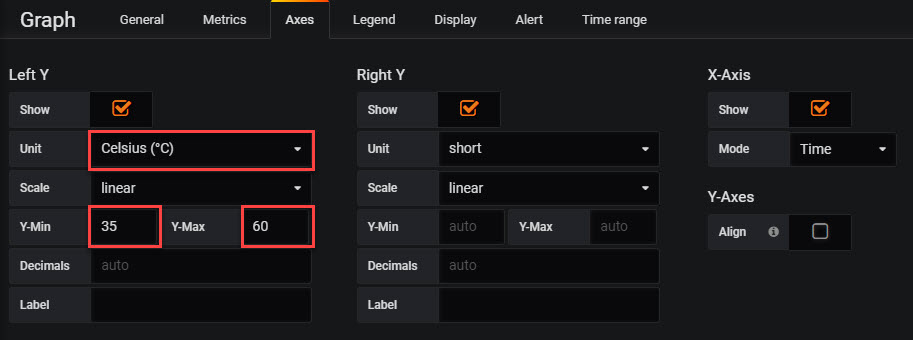

Next we’ll adjust our Axis to use Celsius for our unit and adjust the min and max to go from 35 to 60:

And because I like my table:

At the end, we should get something like this:

Conclusion

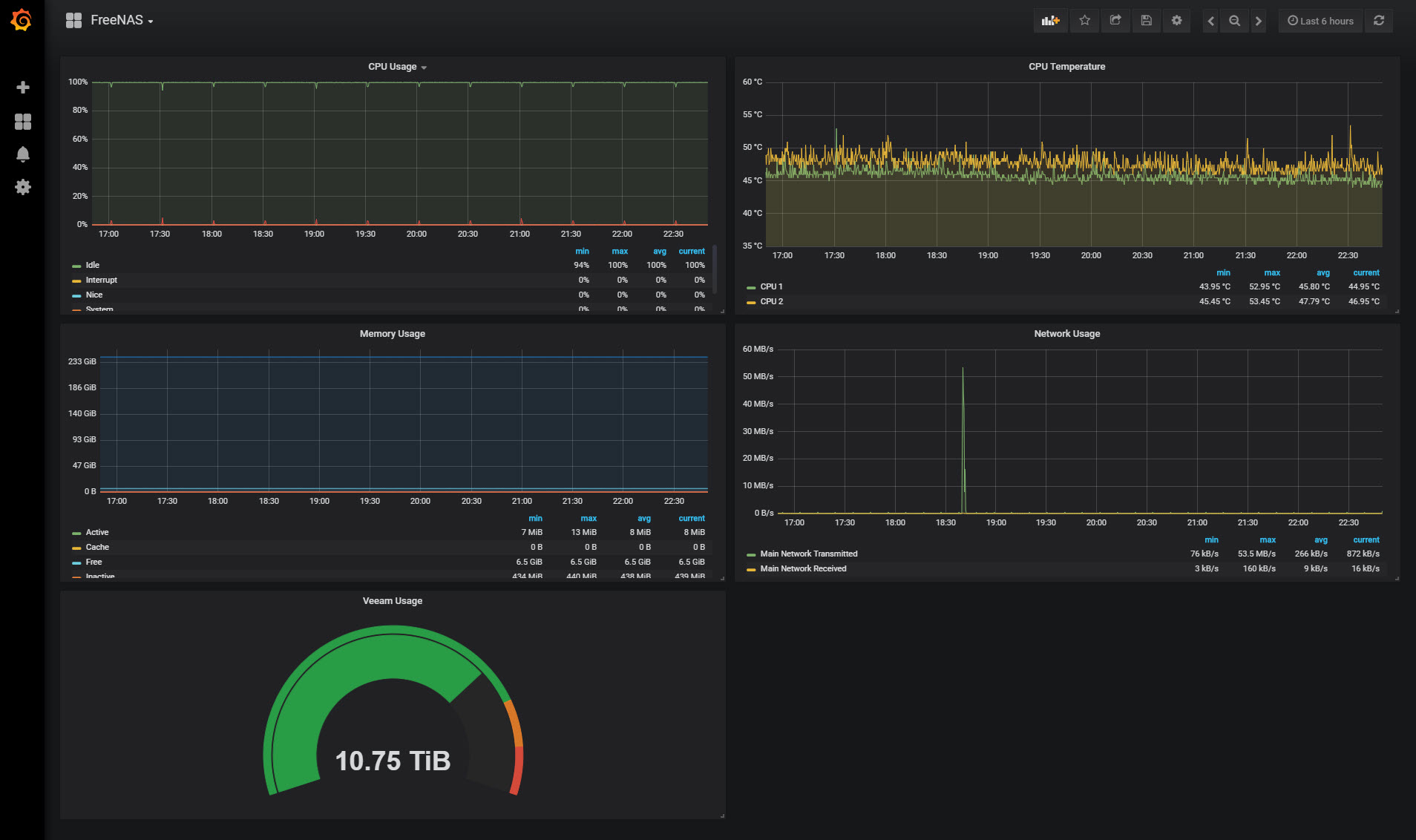

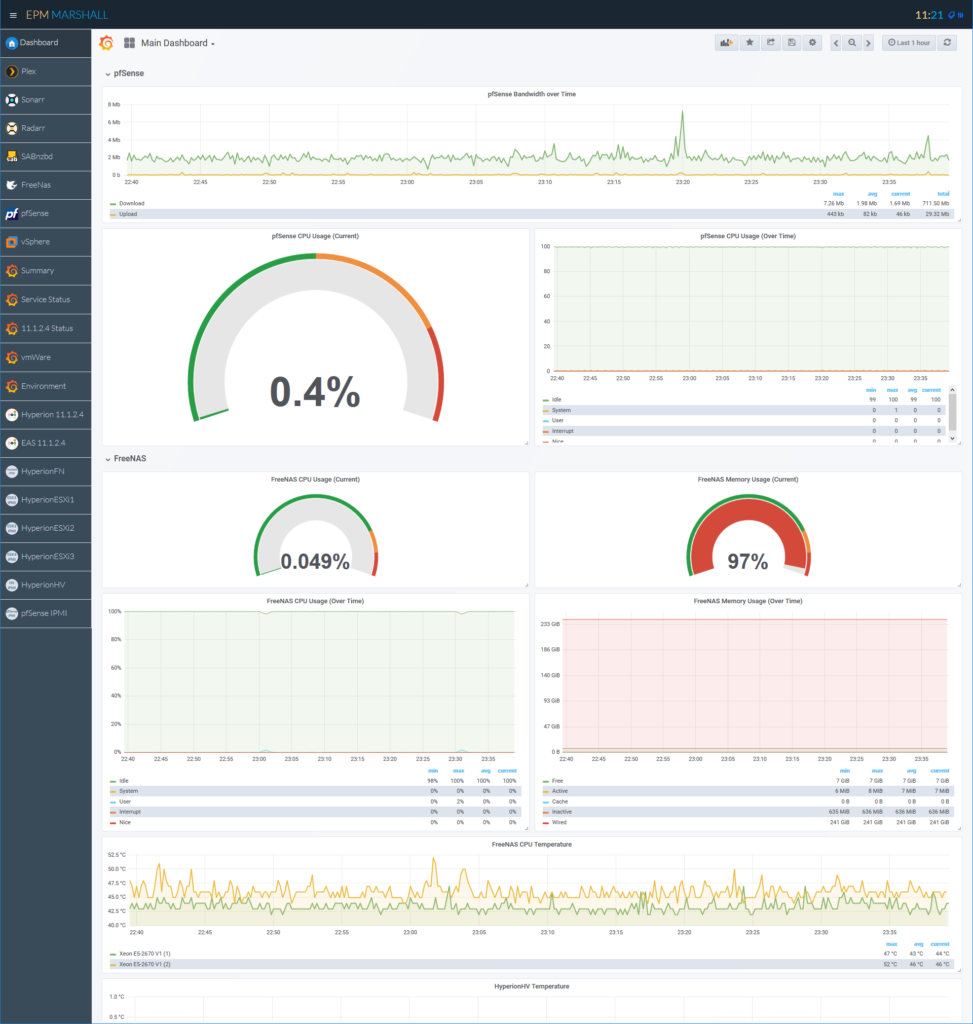

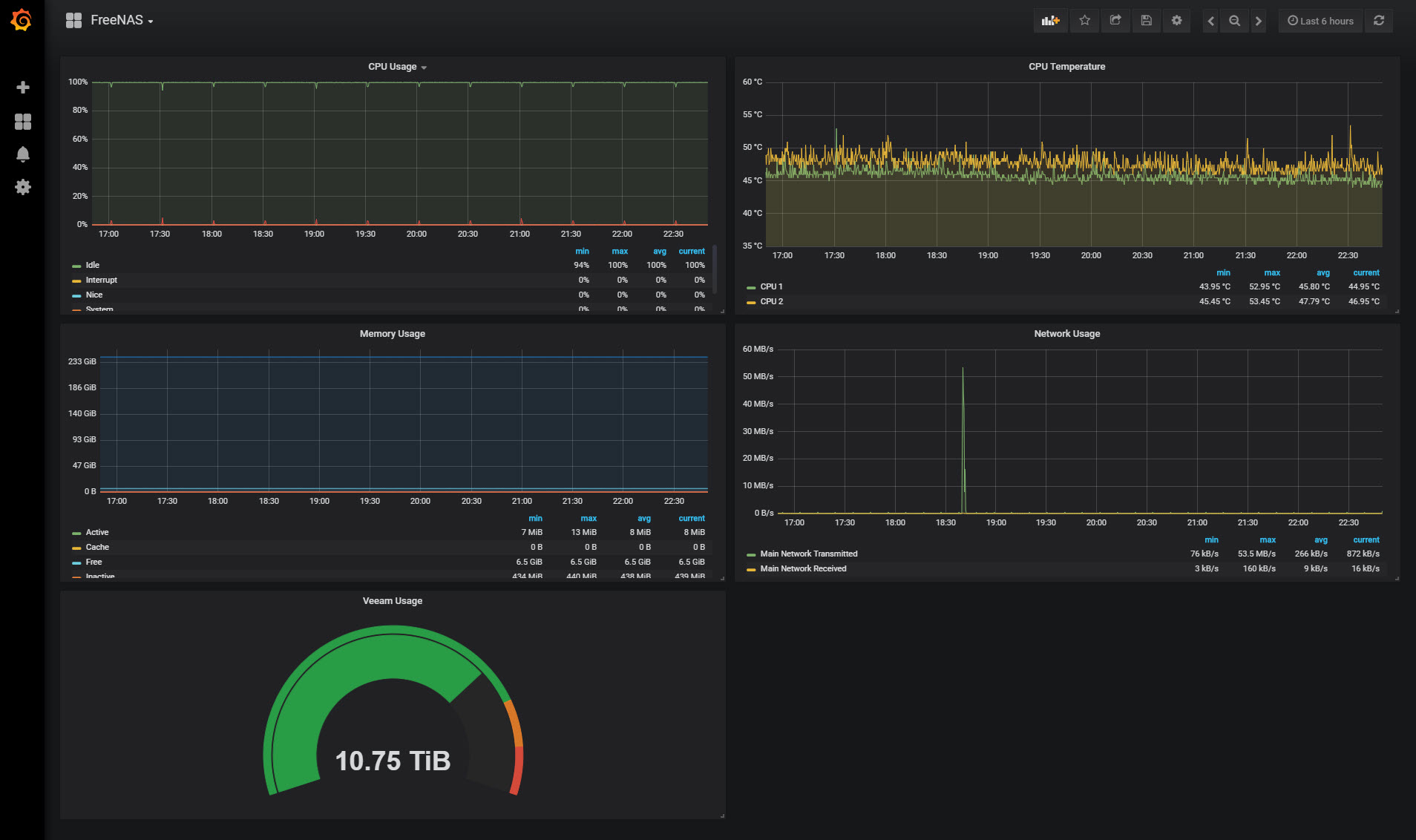

In the end, my dashboard looks like this:

This post took quite a bit more time than any of my previous posts in the series. I had built my FreeNAS dashboard previously, so I wasn’t expecting it to be a long, drawn-out post. But as I was going through it, I felt more explanation was warranted, and as a result I ended up with a pretty long post. I welcome any feedback for making this post better, as I’m sure I’m not doing it the best way…just my way. Until next time…

Brian Marshall

August 27, 2018

After a small break, I’m ready to continue the homelab dashboard series! This week we’ll be looking at pfSense statistics and how we add those to our homelab dashboard. Before we dive in, as always, we’ll look at the series so far:

- An Introduction

- Organizr

- Organizr Continued

- InfluxDB

- Telegraf Introduction

- Grafana Introduction

- pfSense

pfSense and Telegraf

If you are reading this blog post, I’m going to assume you have at least a basic knowledge of pfSense. In short, pfSense is a firewall/router used by many of us in our homelabs. It is based on FreeBSD (Unix) and has many available built-in packages. One of those packages just happens to be Telegraf. Sadly, it also happens to be a really old version of Telegraf, but more on that later. Having this built in makes it very easy to configure and get up and running. Once we have Telegraf running, we’ll dive into how we visualize the statistics in Grafana, which isn’t quite as straightforward.

Installing Telegraf on pfSense

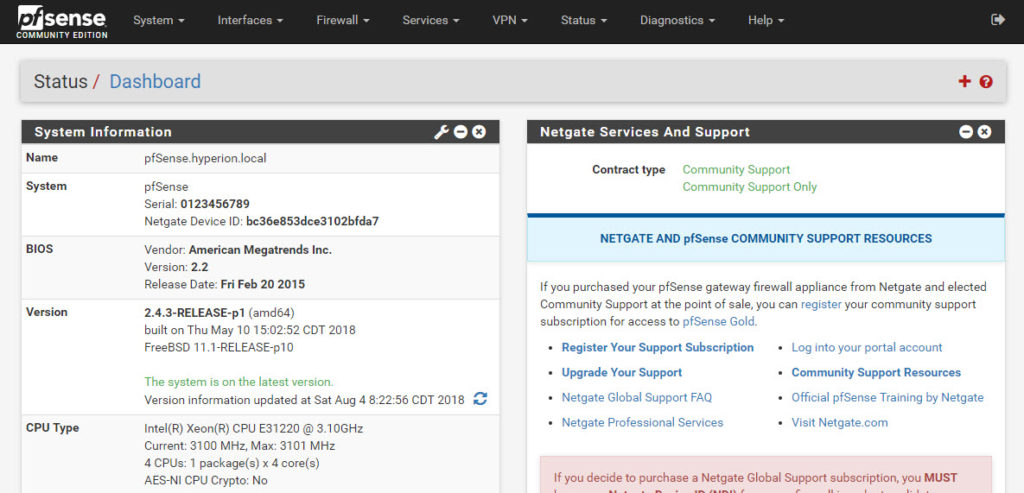

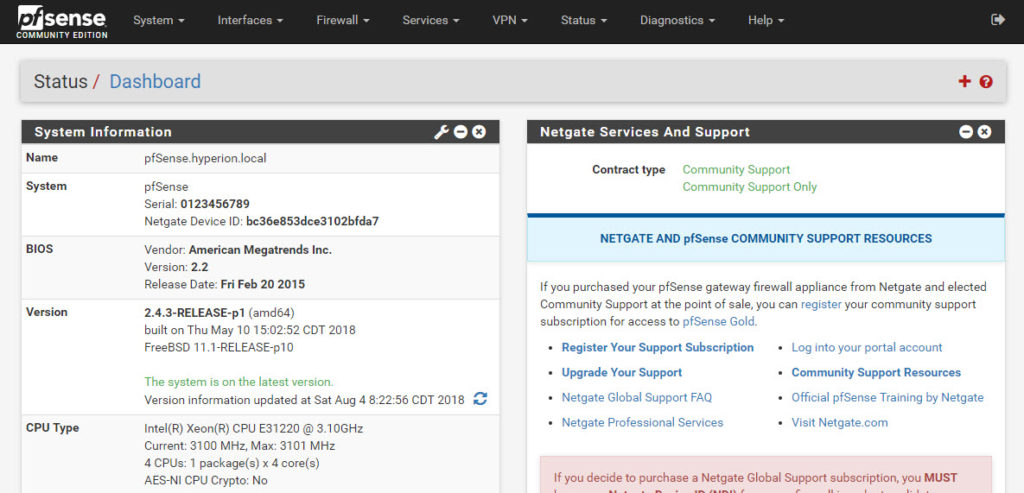

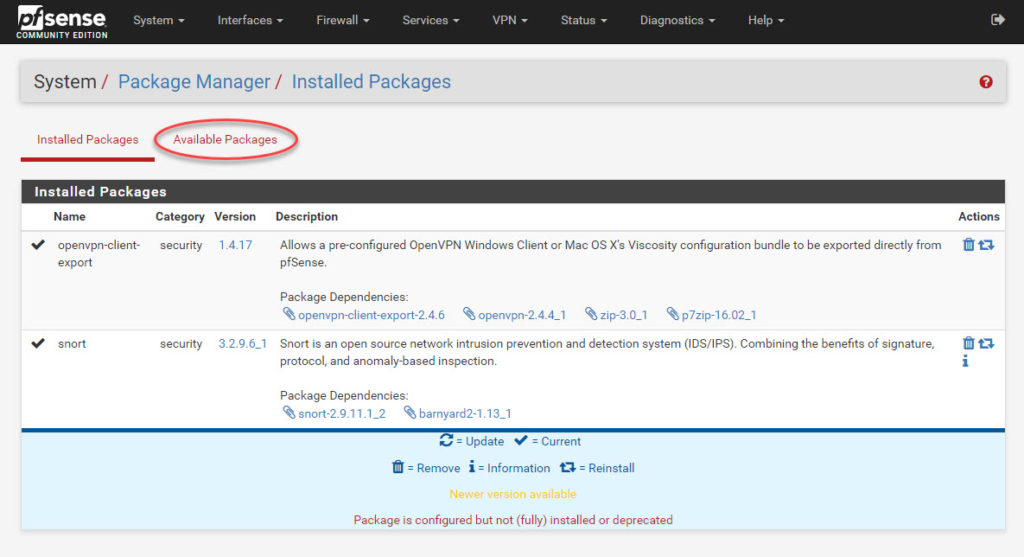

Installation of Telegraf is pretty easy. As I mentioned earlier, this is one of the many packages that we can easily install in pfSense. We’ll start by opening the pfSense management interface:

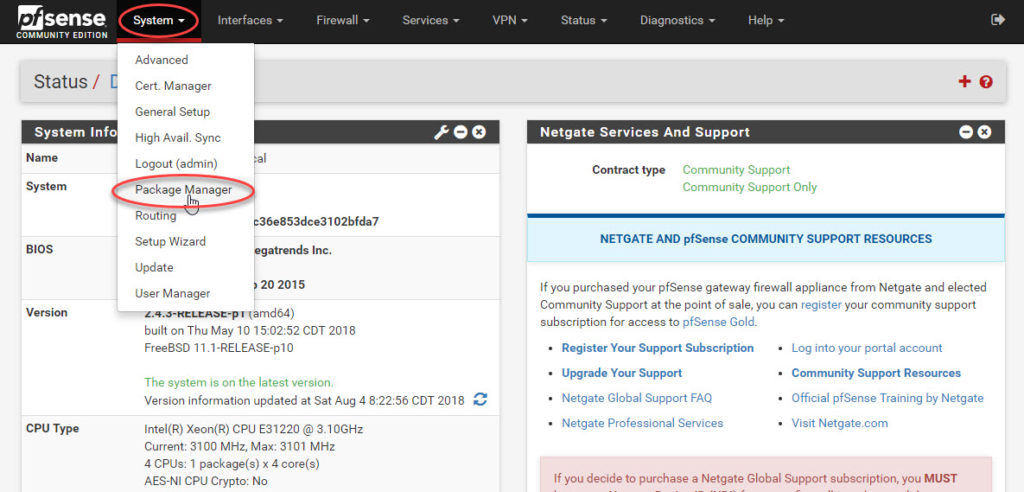

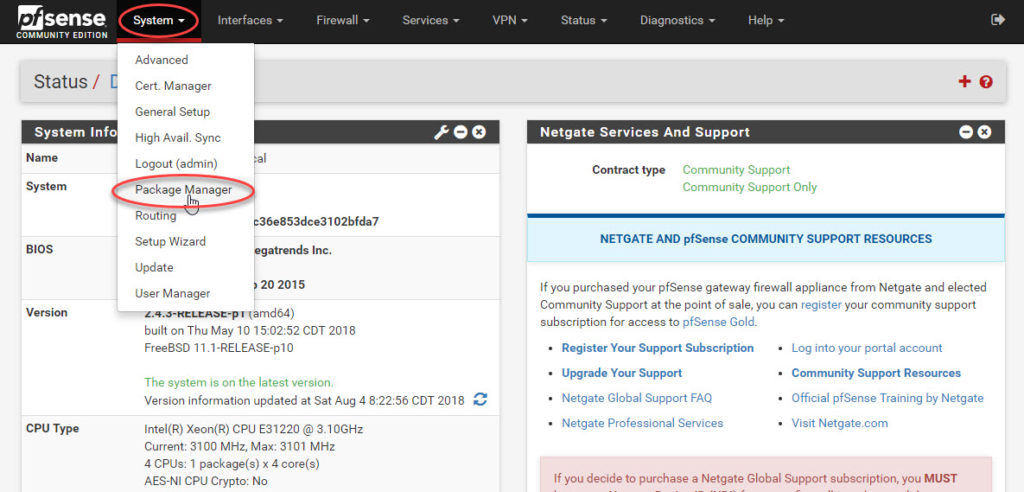

For most of us, pfSense is our primary means of accessing the internet, so I would recommend verifying that you are on the latest version before proceeding. Once you have completed that task, you can move on to clicking on System and then Package Manager:

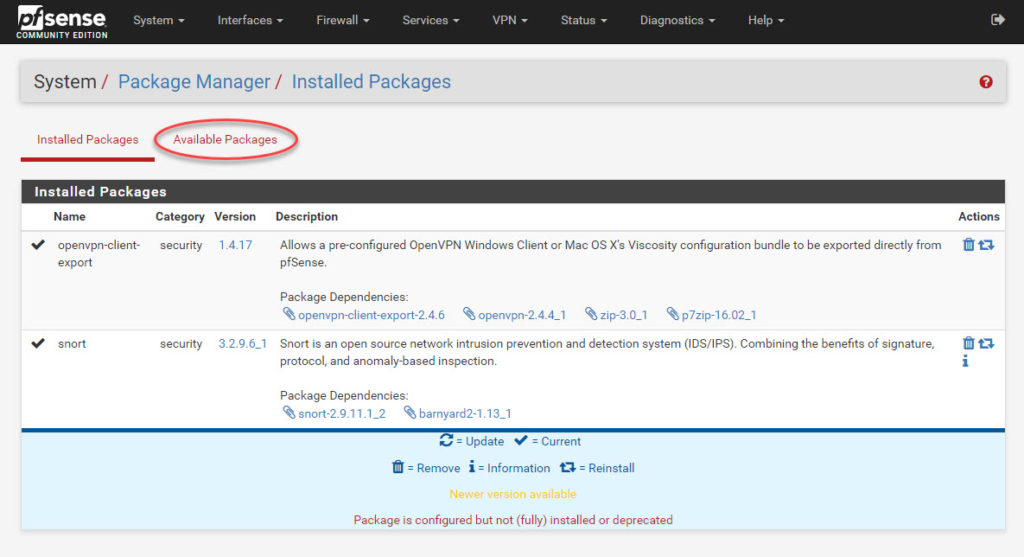

Here we can see all of our installed packages. Next we’ll click on Available Packages:

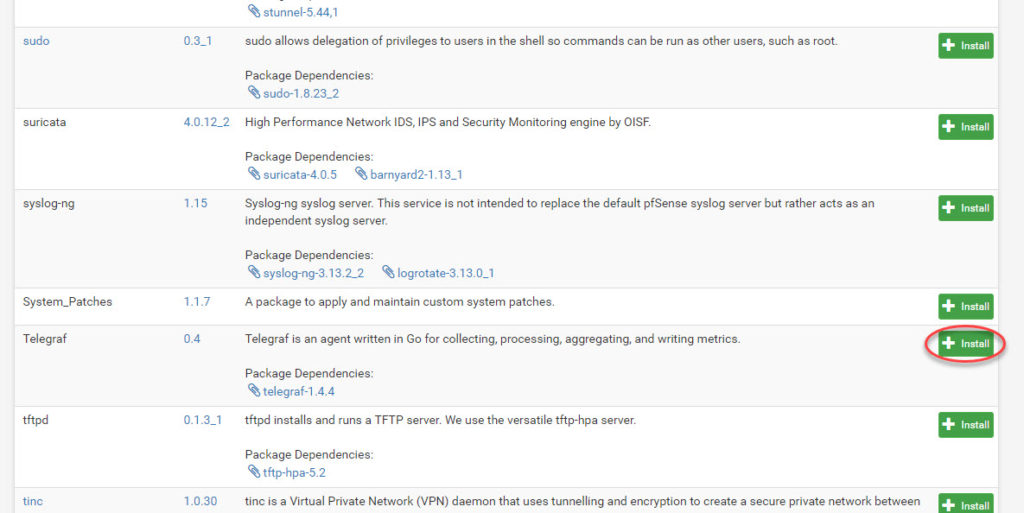

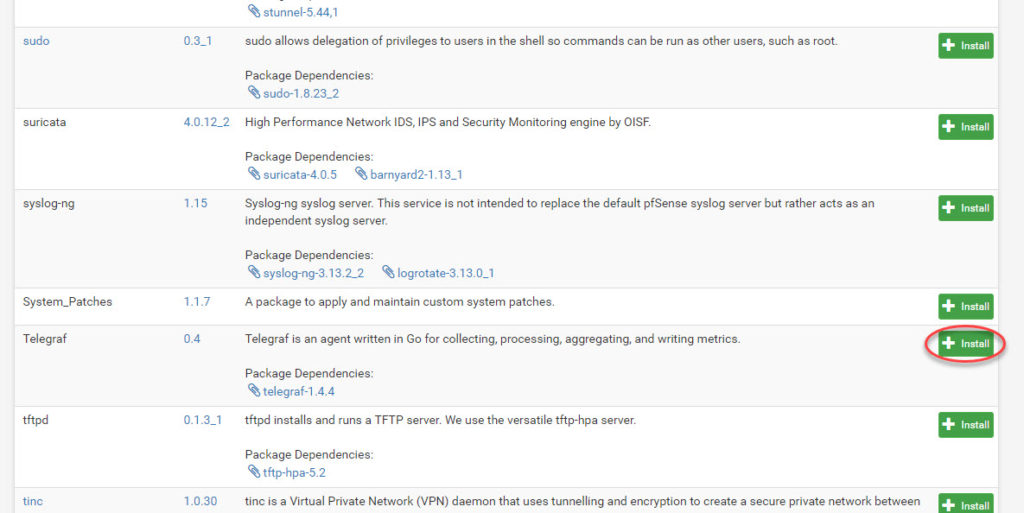

If we scroll way down the alphabetical list, we’ll find Telegraf and click the associated install button:

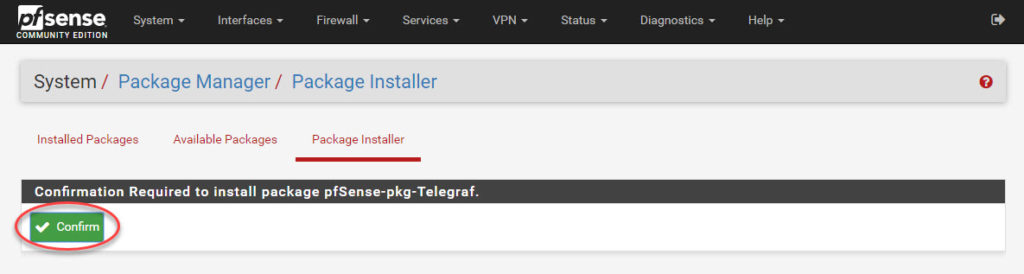

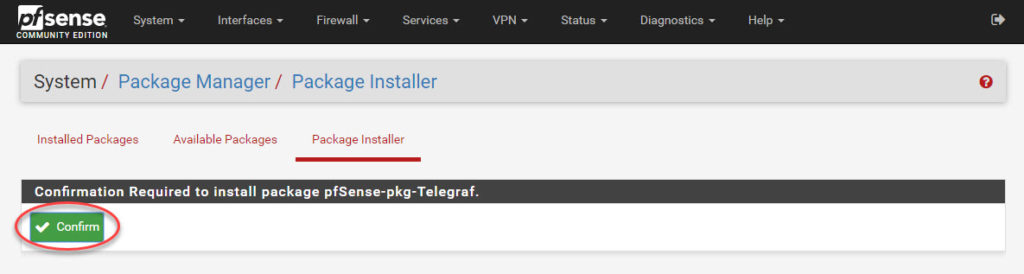

Finally, click the Confirm button and watch the installer go:

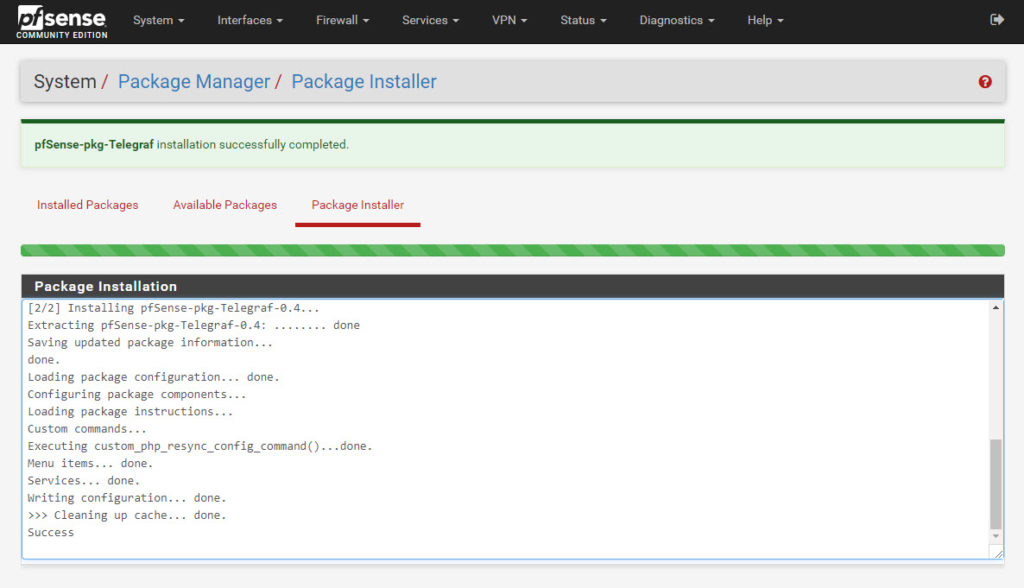

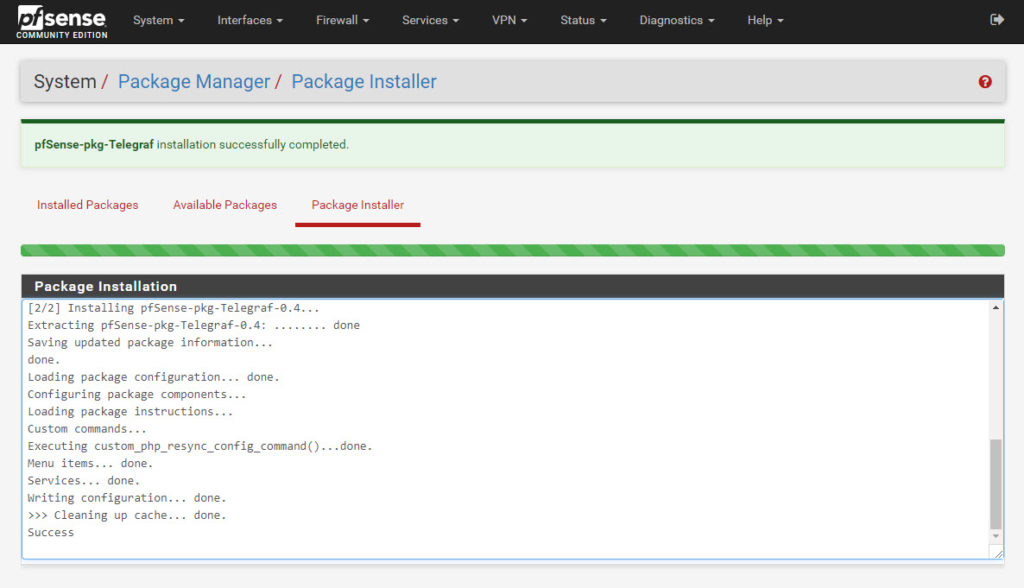

We should see a success message:

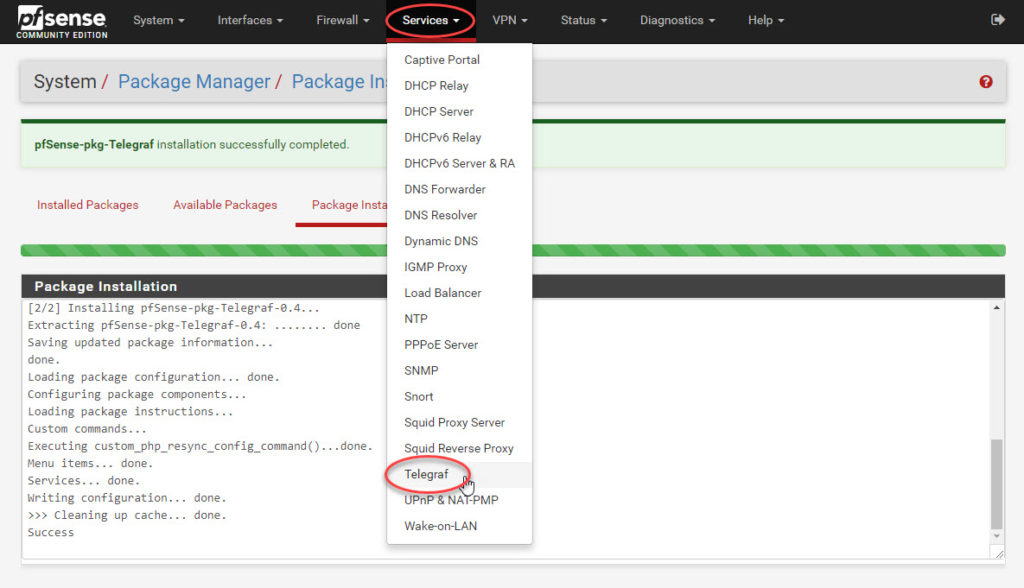

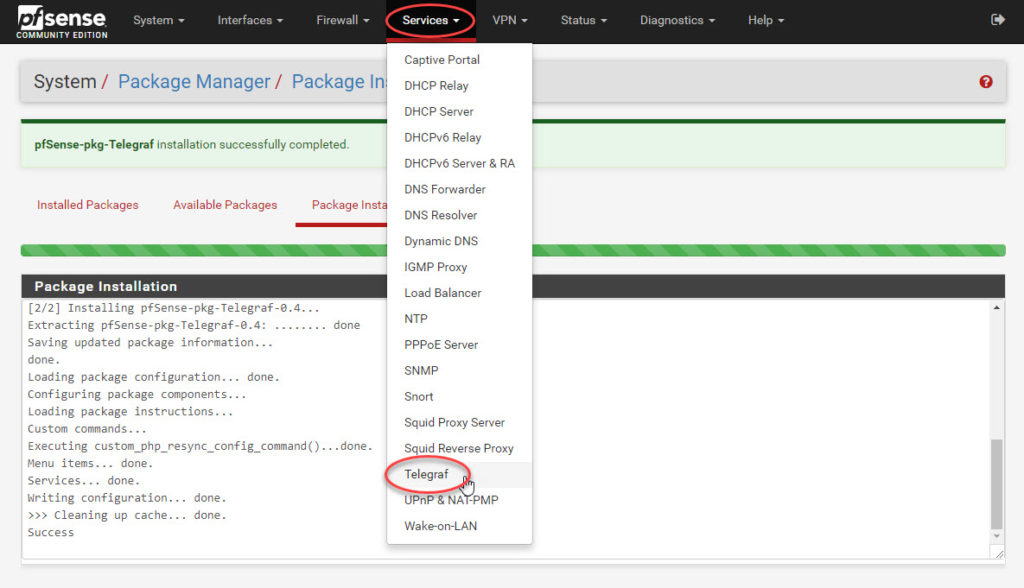

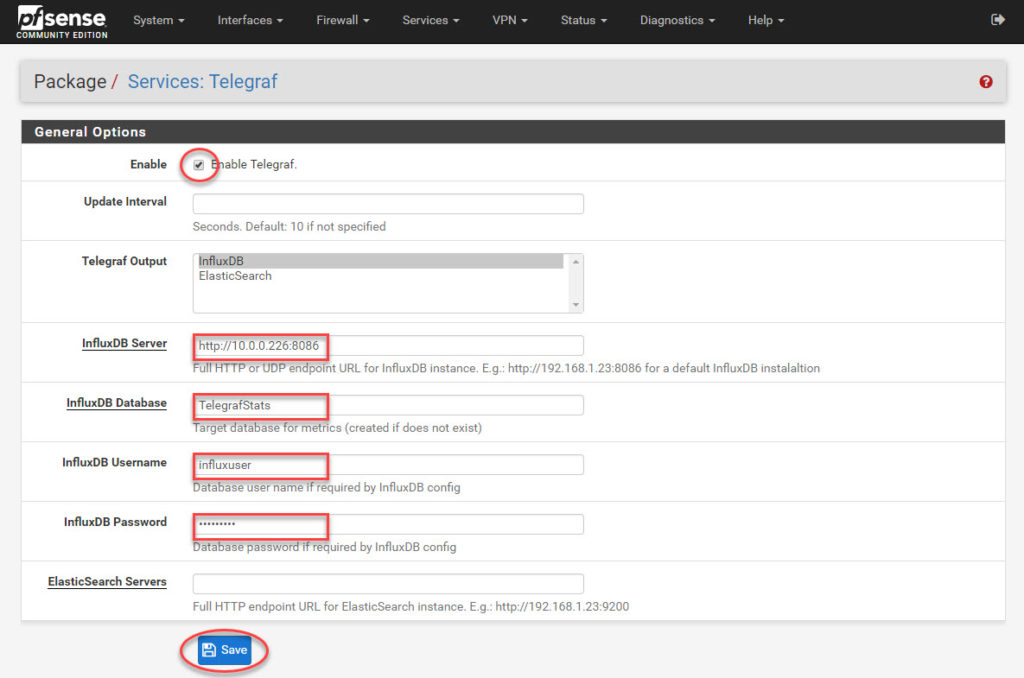

Now we are ready to configure Telegraf. Click on the Services tab and then click on Telegraf:

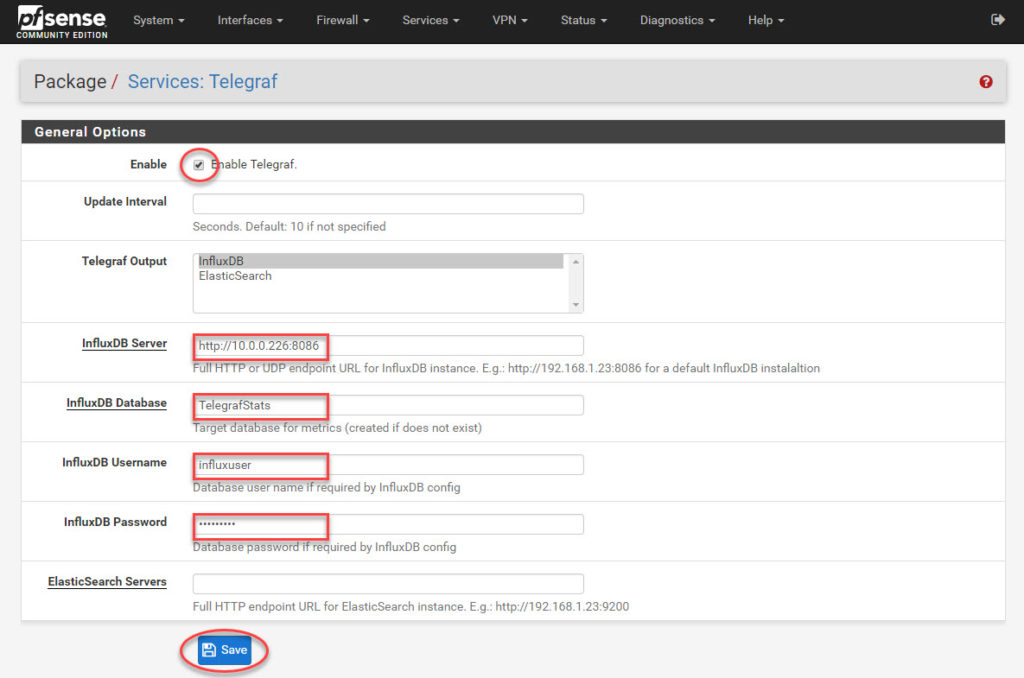

Ensure that Telegraf is enabled and add in your server name, database name, username, and password. Once you click save, it should start sending statistics over to InfluxDB:

pfSense and Grafana

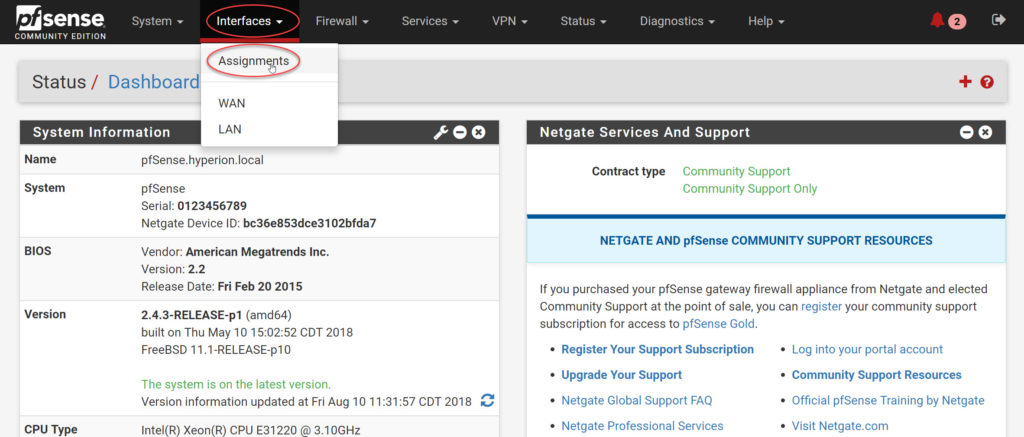

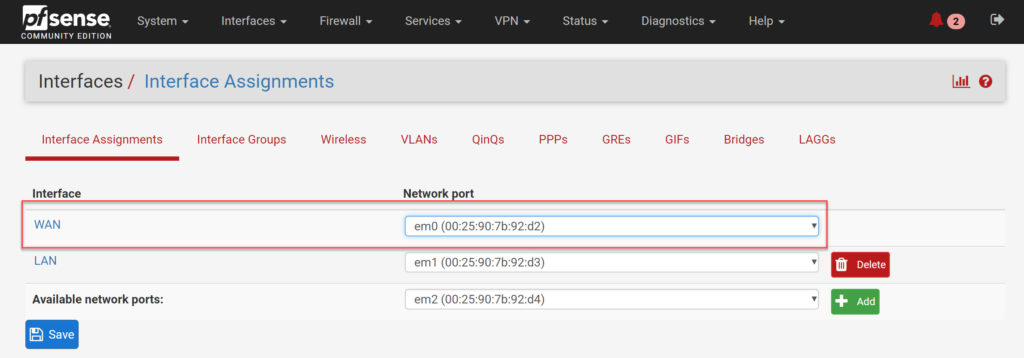

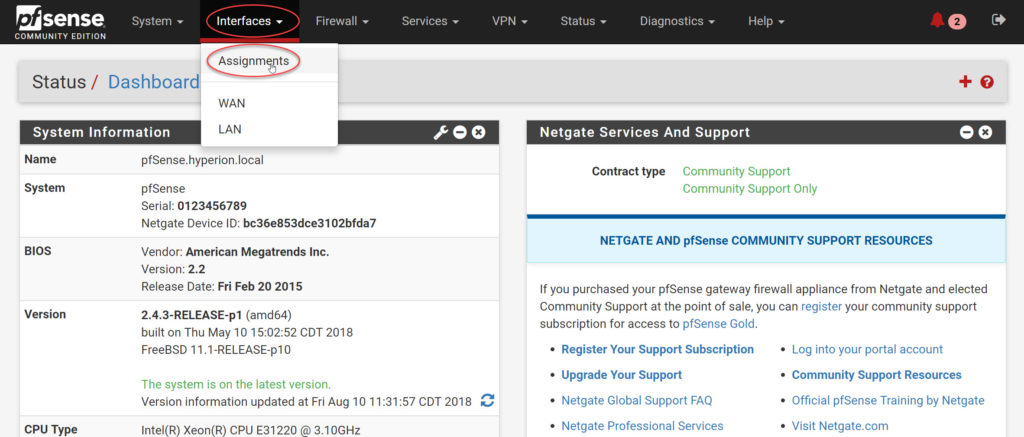

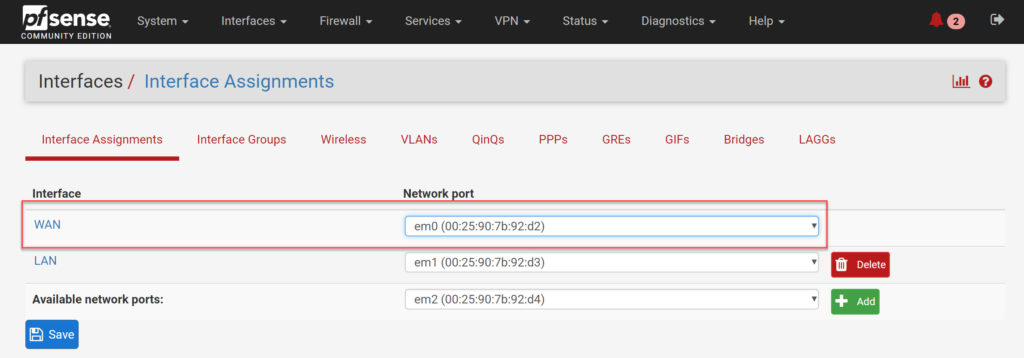

Now that we have Telegraf sending over our statistics, we should be ready to make it pretty with Grafana! But, before we head into Grafana, let’s make sure we understand which interface is which. At the very least, pfSense should have a WAN and a LAN interface. To see which interface is which (if you don’t know offhand), you can click on Interfaces and then Assignments:

Once we get to our assignments screen, we can make note of our WAN interface (which is what I care about monitoring). In my case, it’s em0:

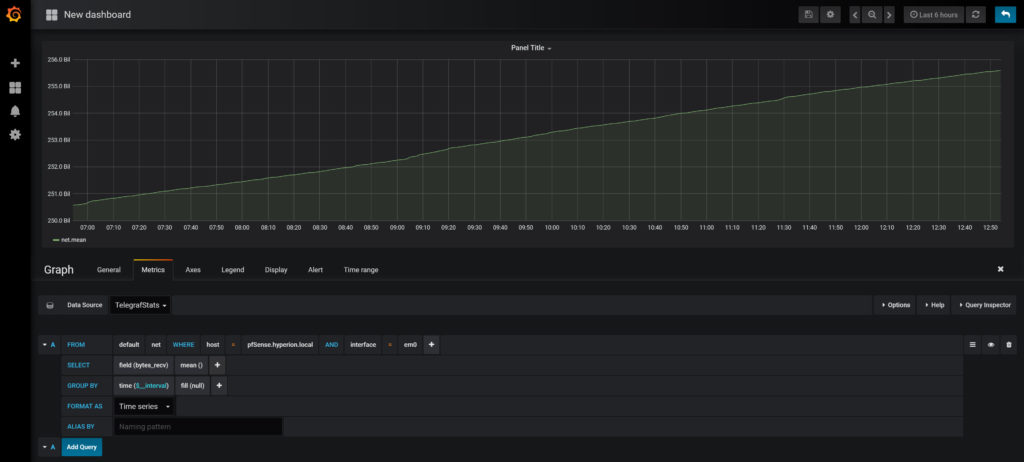

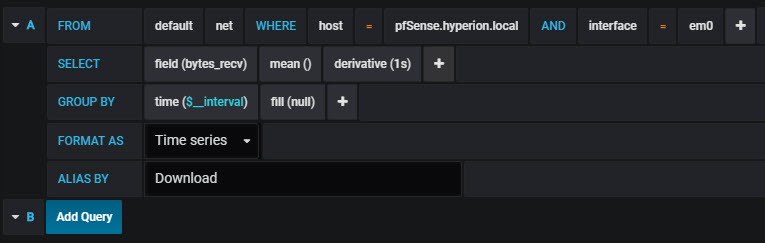

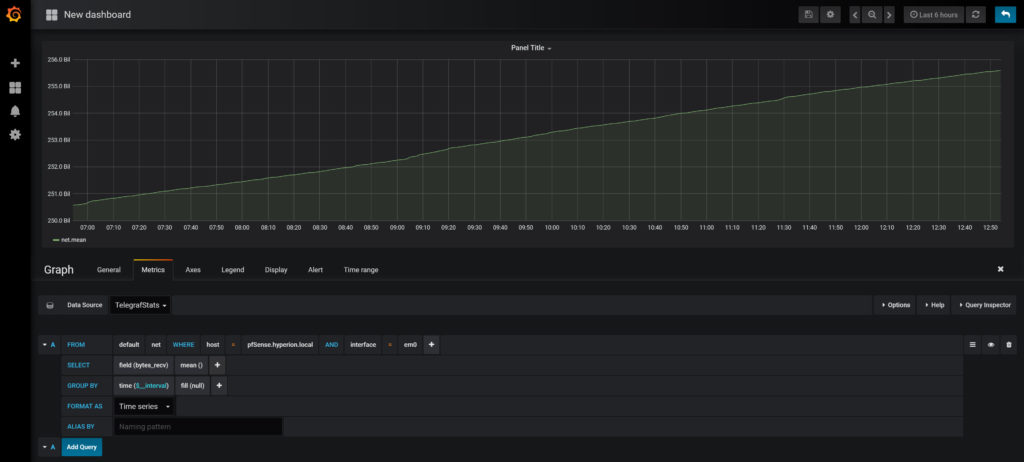

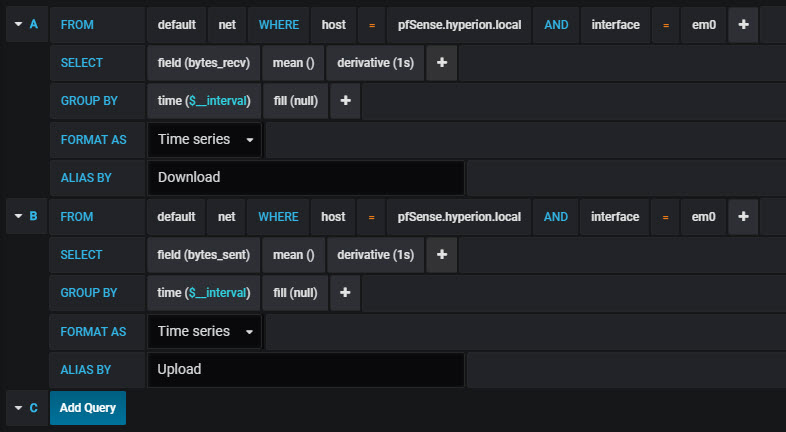

Now that we have our interface name, we can head over to Grafana and put together a new dashboard. I’ve started with a graph and selected my TelegrafStats data source, my table of net, and filtered by my host of pfSense.Hyperion.local and my interface of em0. Then I selected bytes_recv for my field:

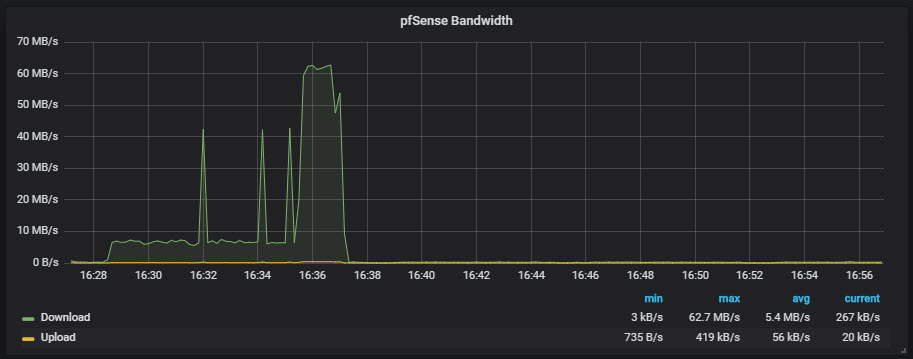

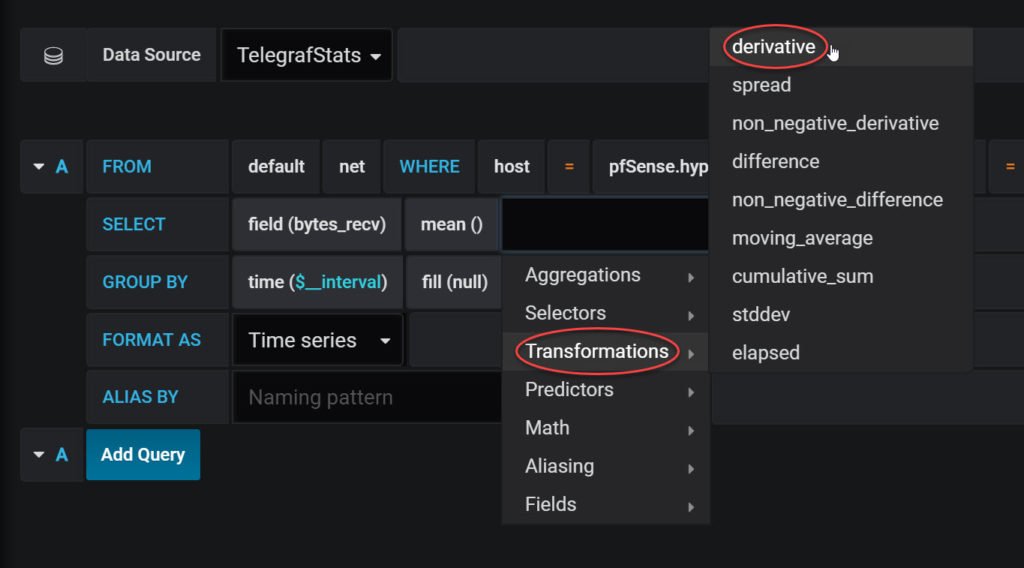

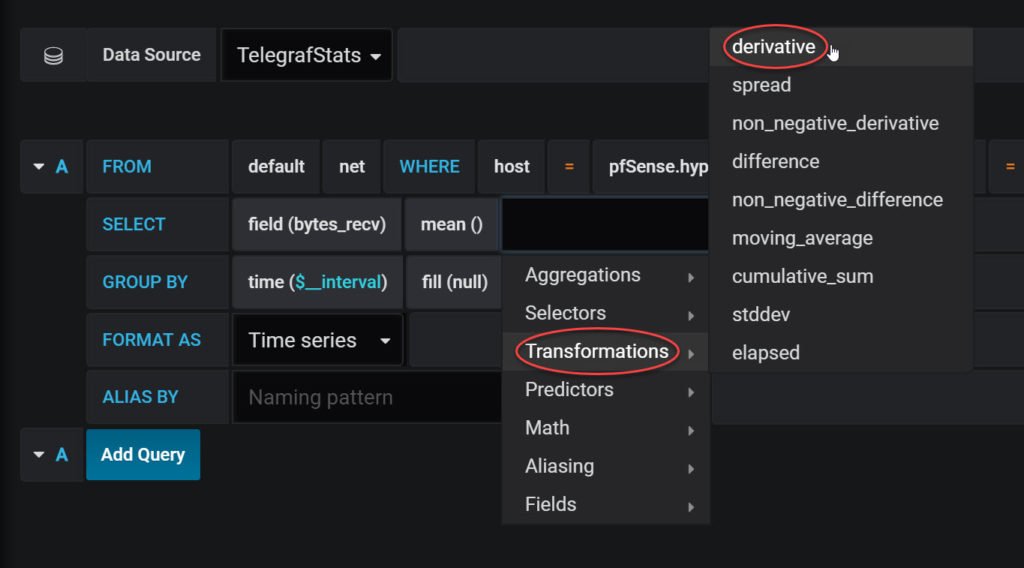

If you’re like me, you might think that you are done with your query. But, if you take a look at the graph, you will notice that you are in fact…not done. We have to use some more advanced features of our query language to figure out what this should really look like. We’ll start with the derivative function. So why do we need this? If we look at the graph, we’ll see that it just continues to grow and grow, because bytes_recv is a running counter. So instead of seeing the number itself, we need to see the change in the number over time. This gives us our actual rate, which is exactly what the derivative function does: it takes the difference between the current value and the previous value and divides it by the time between them (per second, by default). Once we add that, we should start to see a more reasonable graph:
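To make the derivative idea concrete, here’s a minimal Python sketch of the same calculation applied to a counter like bytes_recv: for each pair of consecutive samples, subtract the older value from the newer one and divide by the seconds between them. The sample values are hypothetical. InfluxQL’s derivative() also accepts an optional unit argument (1s by default), and there is a non_negative_derivative() variant that drops negative results, which can be handy if the counter resets when pfSense reboots.

```python
from typing import List, Tuple


def derivative(points: List[Tuple[float, float]], unit: float = 1.0) -> List[Tuple[float, float]]:
    """Rate of change between consecutive (timestamp_seconds, value) points, per `unit` seconds.

    Mirrors the idea behind InfluxQL's derivative(): (v2 - v1) / (t2 - t1) * unit.
    """
    rates = []
    for (t1, v1), (t2, v2) in zip(points, points[1:]):
        rates.append((t2, (v2 - v1) / (t2 - t1) * unit))
    return rates


# Hypothetical bytes_recv counter samples taken 10 seconds apart
samples = [(0, 1_000_000), (10, 1_625_000), (20, 2_250_000)]
print(derivative(samples))  # [(10, 62500.0), (20, 62500.0)]
```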

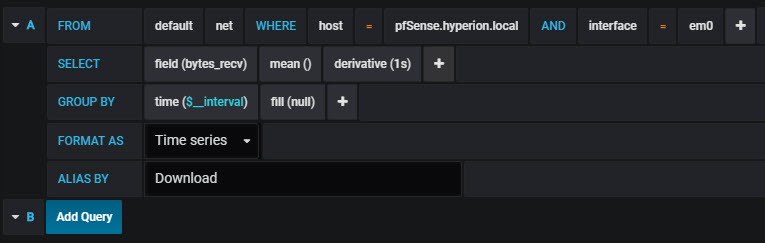

Our final query should look like this:

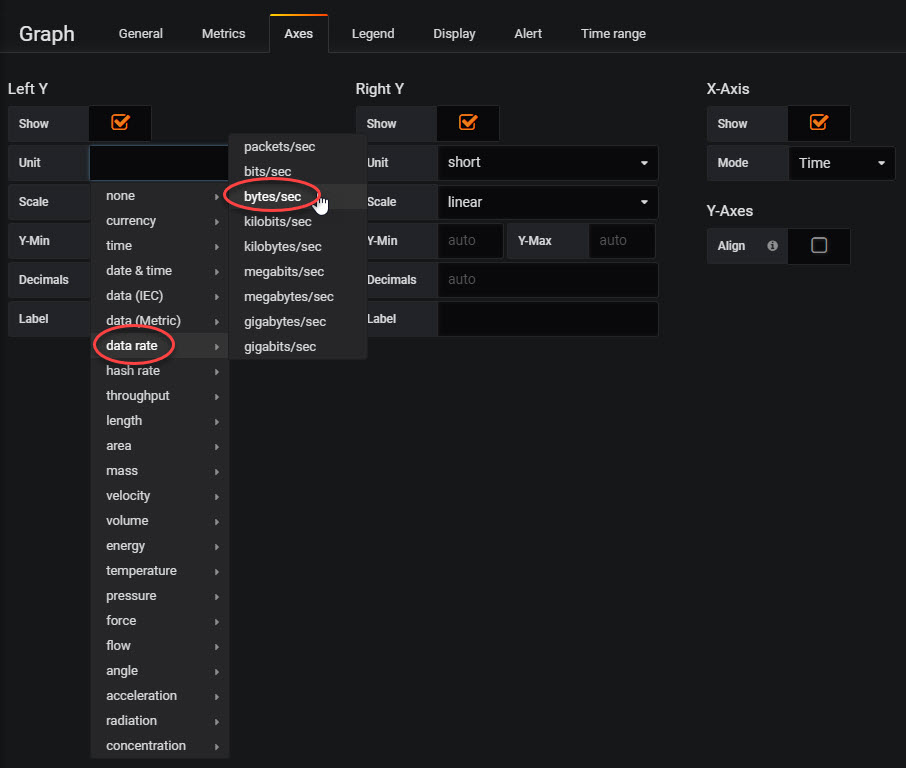

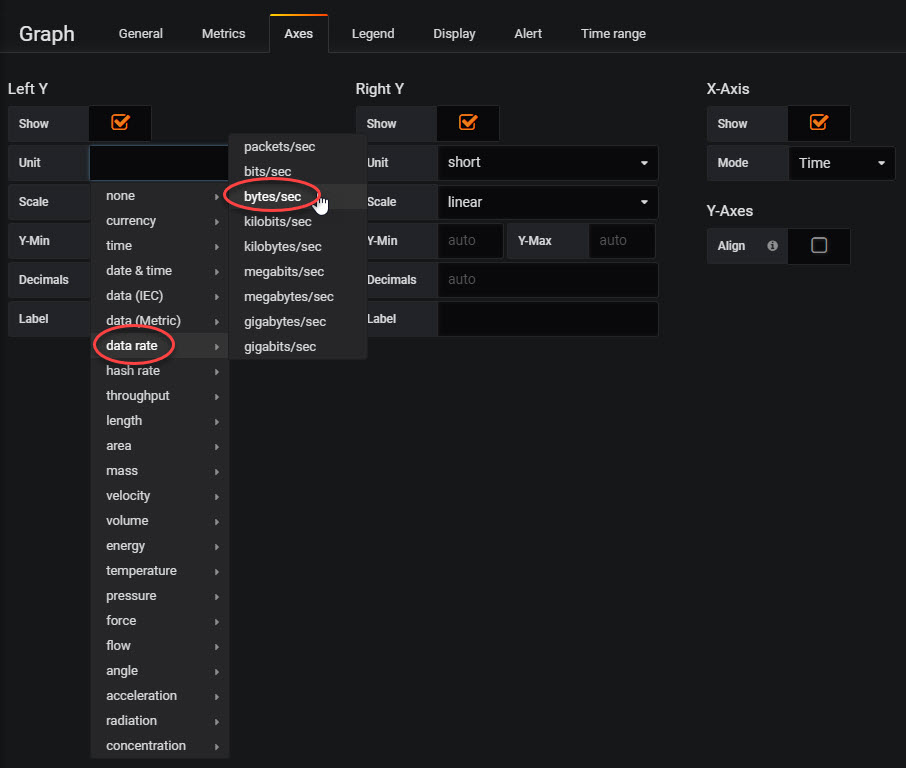

Next we can go to our axes settings and set it to bytes/sec:

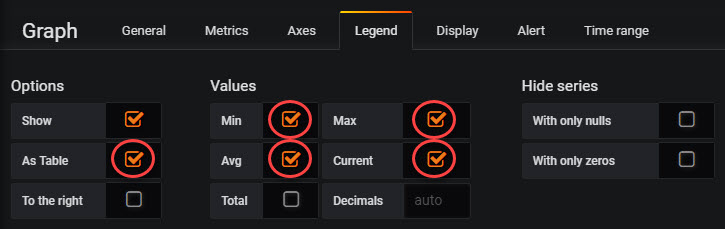

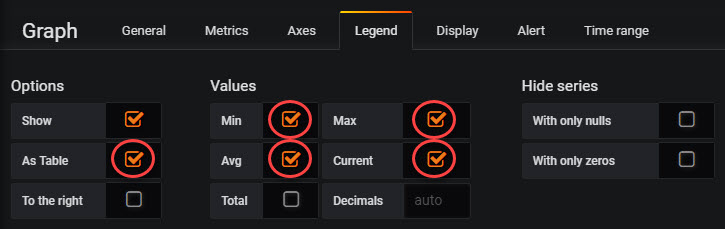

Finally, I like to set up my table-based legend:

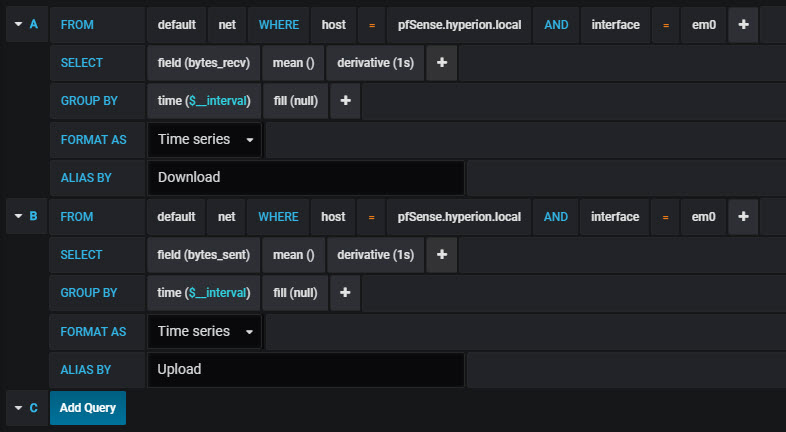

Now let’s layer in bytes_sent by duplicating our first query:

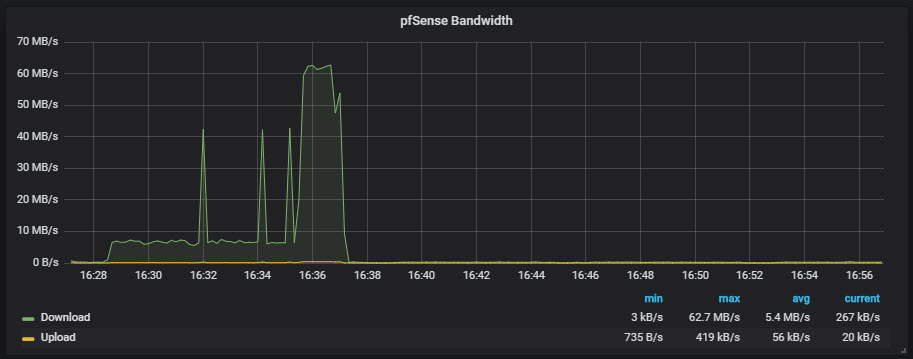

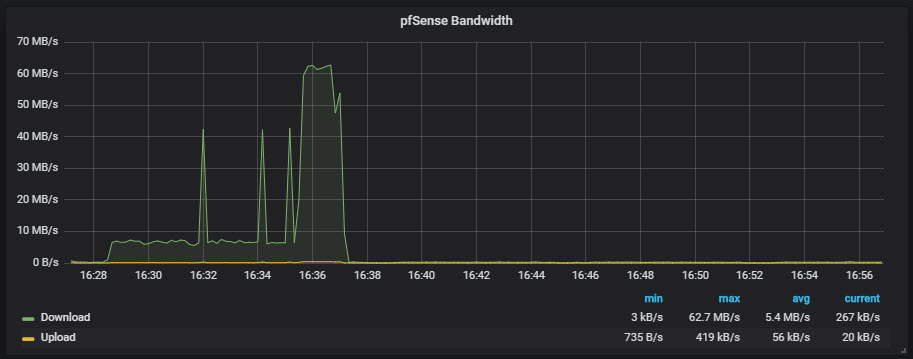

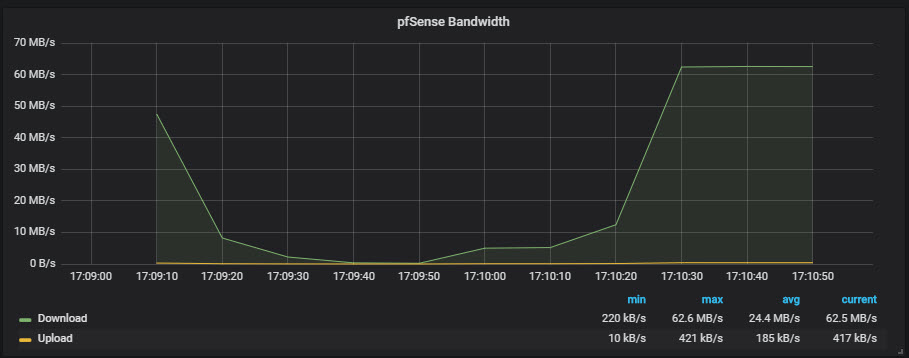

And our final bandwidth graph should look like this:

Confirming Our Math

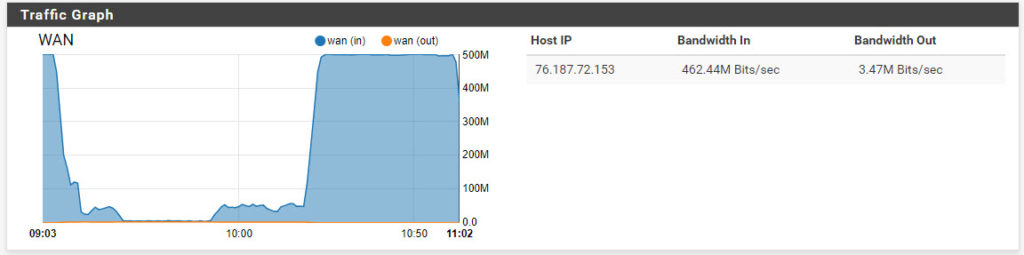

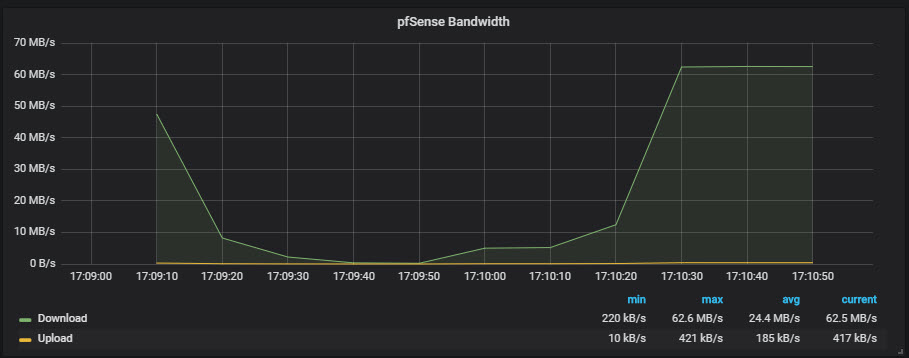

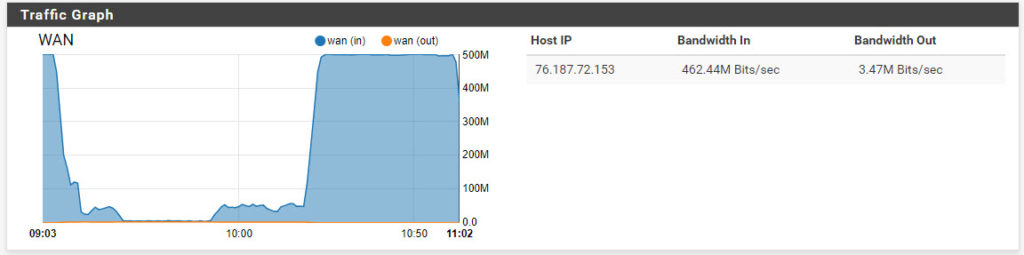

I spent a lot of time making sure I got both the query and the math right, but just to check, here’s a current graph from pfSense:

This maxes out at 500 megabits per second. Now let’s check the same time period in Grafana:

If we convert 500 megabits to megabytes by dividing by 8, we get 62.5. So…success! The math checks out! This also tells me that my cable provider upgraded me from a 400 megabit package to a 500 megabit package.
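The same sanity check in Python, for anyone who wants to plug in their own plan speed (the 400 line just shows my old package):

```python
def megabits_to_megabytes(megabits: float) -> float:
    """Convert megabits to megabytes: 8 bits per byte."""
    return megabits / 8


print(megabits_to_megabytes(500))  # 62.5
print(megabits_to_megabytes(400))  # 50.0
```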

Conclusion

You should be able to follow my previous guide for CPU statistics. One thing you may notice is that there are no memory statistics for your pfSense host. This is a bug that should be fixed at some point, but I’m on the latest version and it still hasn’t been fixed. I’ve yet to find a decent set of steps to fix it, but if I do, or it becomes fixed with a patch, I’ll update this post! Until next time…when we check out FreeNAS.

Brian Marshall

August 10, 2018

Another week, another part of the homelab dashboard series! This week we will finally bring all of our work into Grafana so that we can see some pretty pictures. Before we dive in, let’s take a look at the series so far:

- An Introduction

- Organizr

- Organizr Continued

- InfluxDB

- Telegraf Introduction

- Grafana Introduction

What is Grafana?

Grafana is the final piece of our TIG stack. This is the part you’ve been waiting for, as it provides the actual results of our labors in the form of beautiful dashboards. Like Telegraf and InfluxDB, Grafana is also open source, which makes it even more awesome. Grafana really does two things. First, it builds (or allows you to build) a query against a data source. In our case, this means building an InfluxQL query. Once the query has been prepared, Grafana then gives you the ability to make it look nice…very nice. Let’s get started!

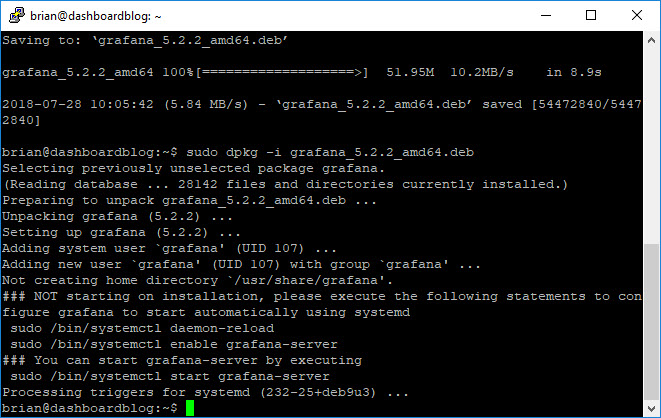

Installing Grafana on Linux

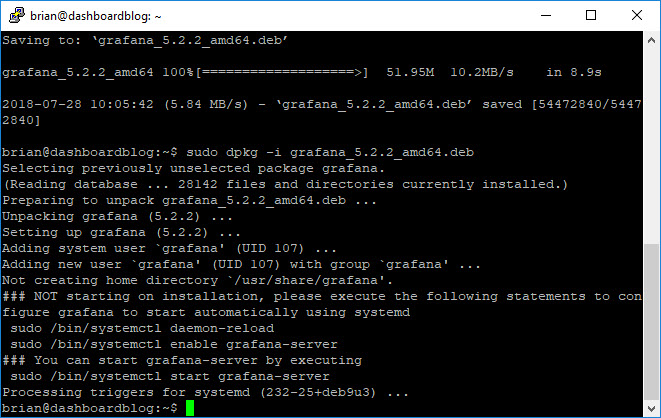

Installation, like everything else we’ve installed so far in this series, is pretty straightforward. We’ll start by downloading and installing Grafana using these commands:

sudo wget https://s3-us-west-2.amazonaws.com/grafana-releases/release/grafana_5.2.2_amd64.deb

sudo dpkg -i grafana_5.2.2_amd64.deb

Executing those commands should look something like this:

Now we need to start the service:

sudo service grafana-server start

Finally we need to make sure the service starts automatically at boot each time:

sudo systemctl enable grafana-server.service

Installation complete…now let’s do some configuration.

Configuring Grafana

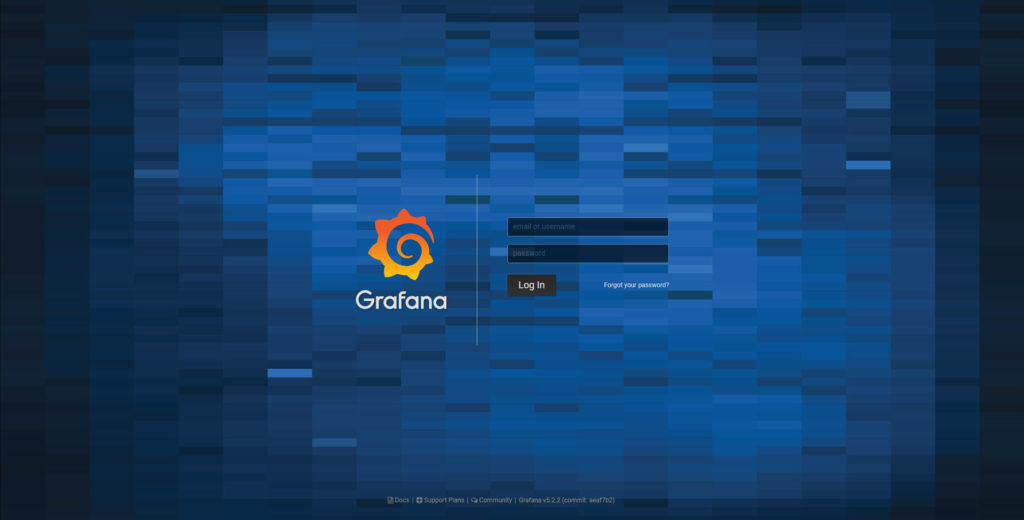

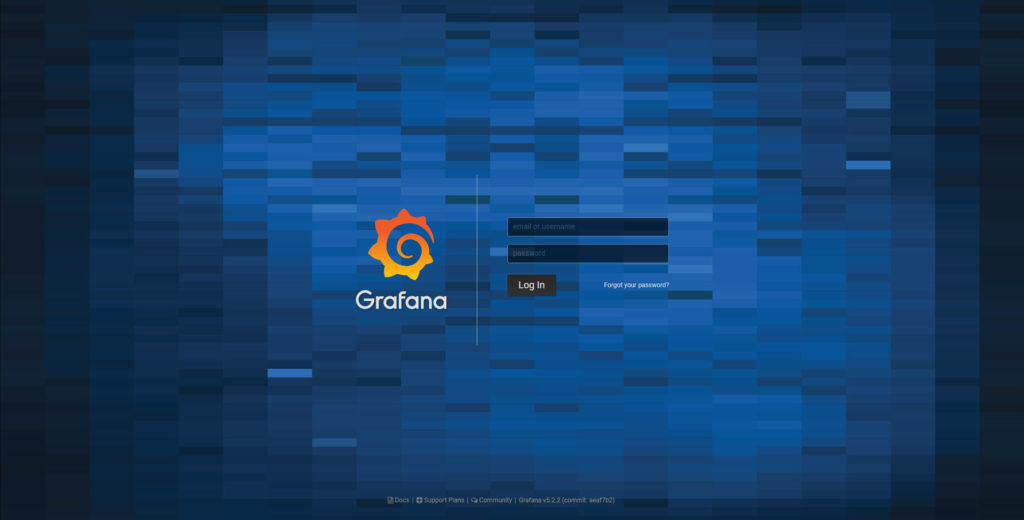

We’ll start by opening our browser and going to http://youripaddressorhostname:3000/:

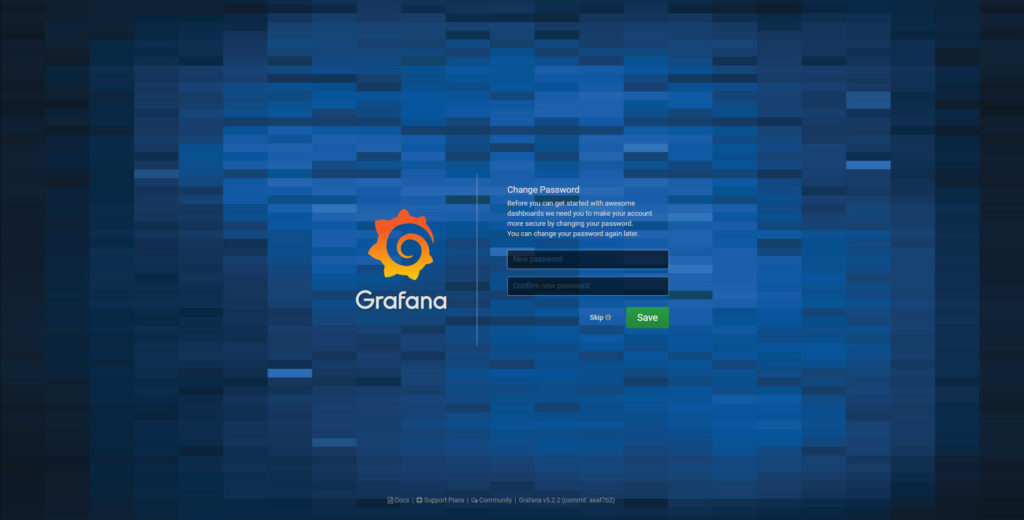

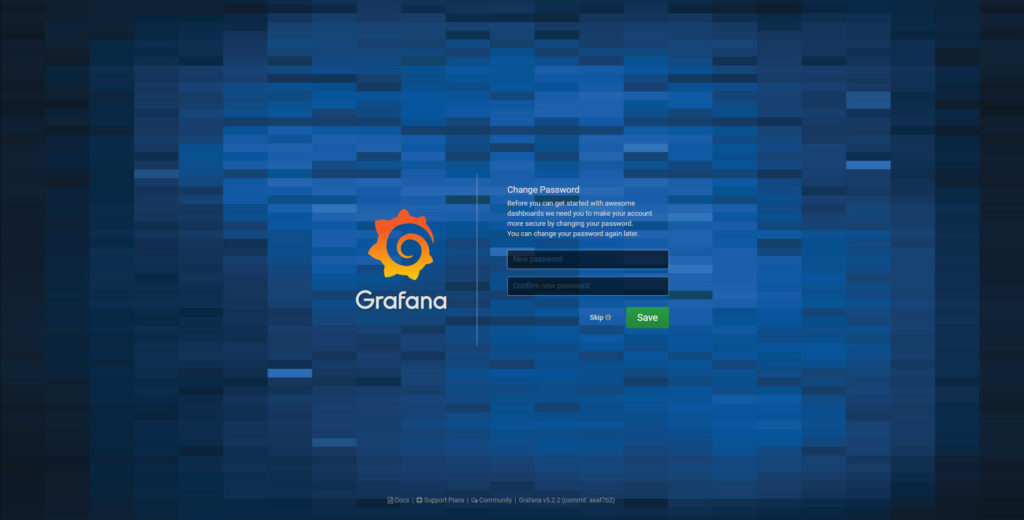

The default username and password for the administrator account are both admin. Once logged in, we will be prompted to change our password. I highly recommend that you do not click the skip button:

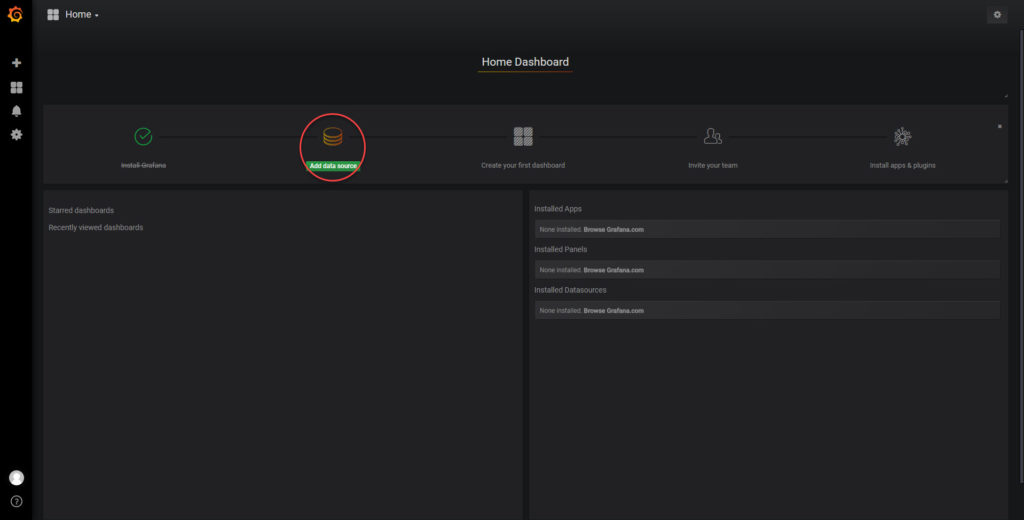

Once you are logged in, we’ll see that we’ve only completed the first of the five steps listed:

- Install Grafana

- Add data source

- Create your dashboard

- Invite your team

- Install apps & plugins

We’ll focus on the first three, as I’m assuming this is a homelab where you don’t have a lot of users and, for now, won’t need any apps or plugins. Now we can move on to the next step.

Adding a Data Source

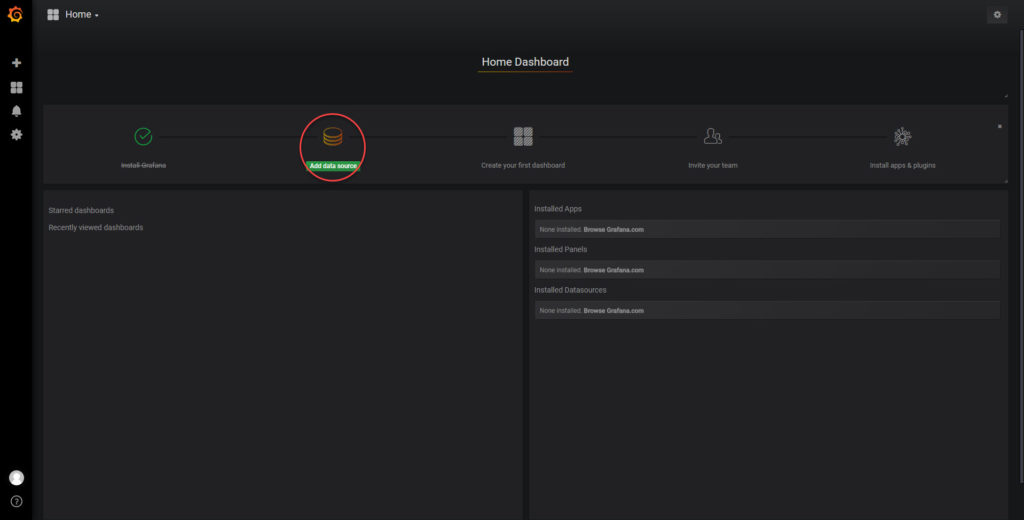

Now that we have Telegraf feeding stats to InfluxDB, we can start with that database as our source. Start by clicking on Add data source:

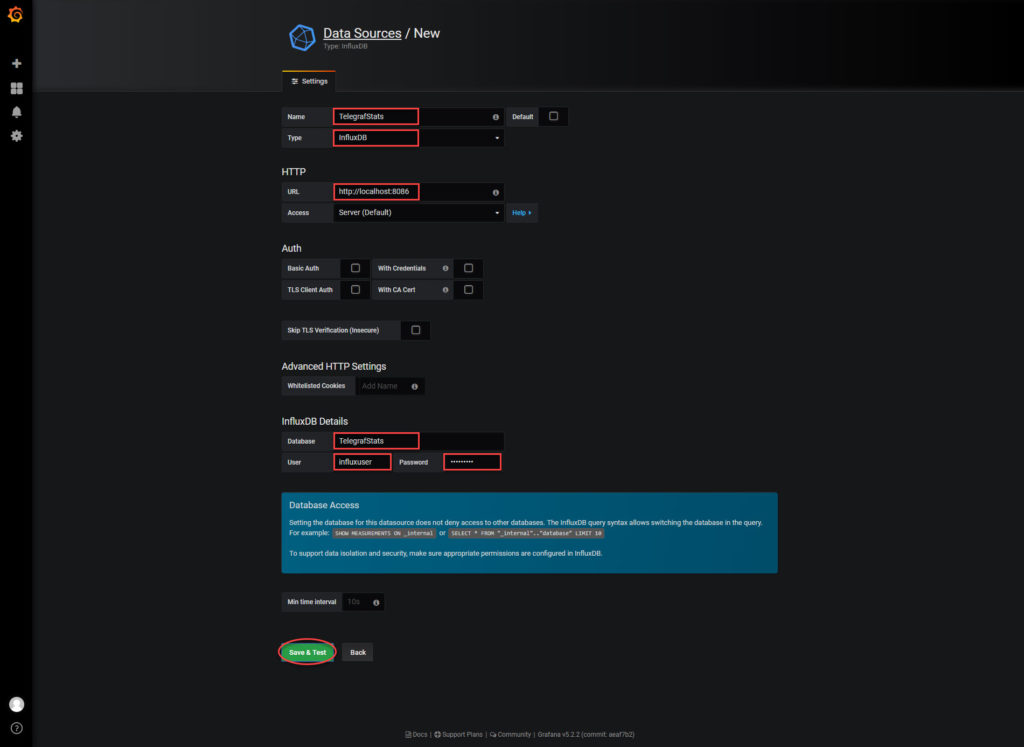

Enter a name for the data source, choose InfluxDB for the type, enter the URL (I used localhost as I have it all on the same system), enter the name of the database, enter the username and password used to access the database. Finally, click Save & Test:

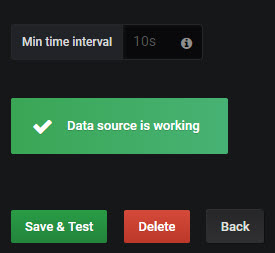

Assuming everything went well, you should see the following:

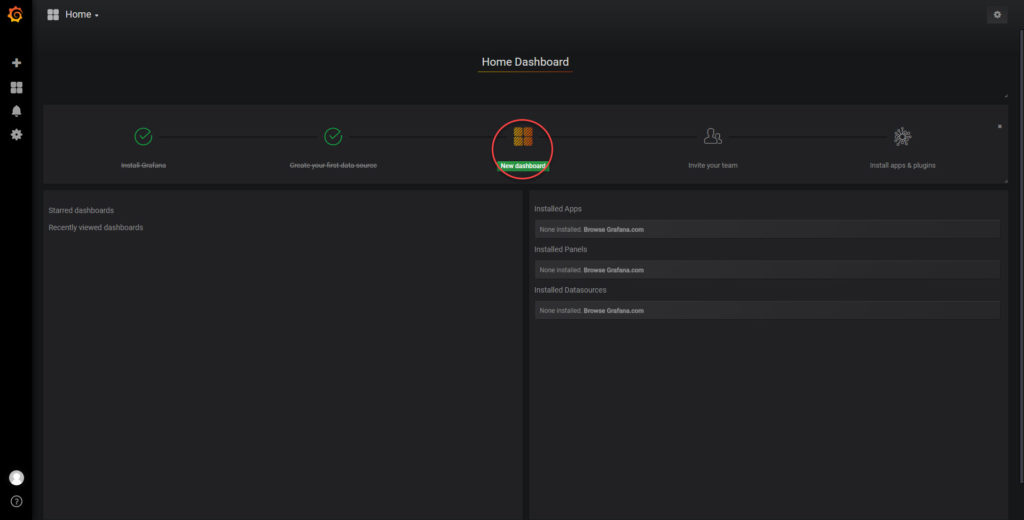

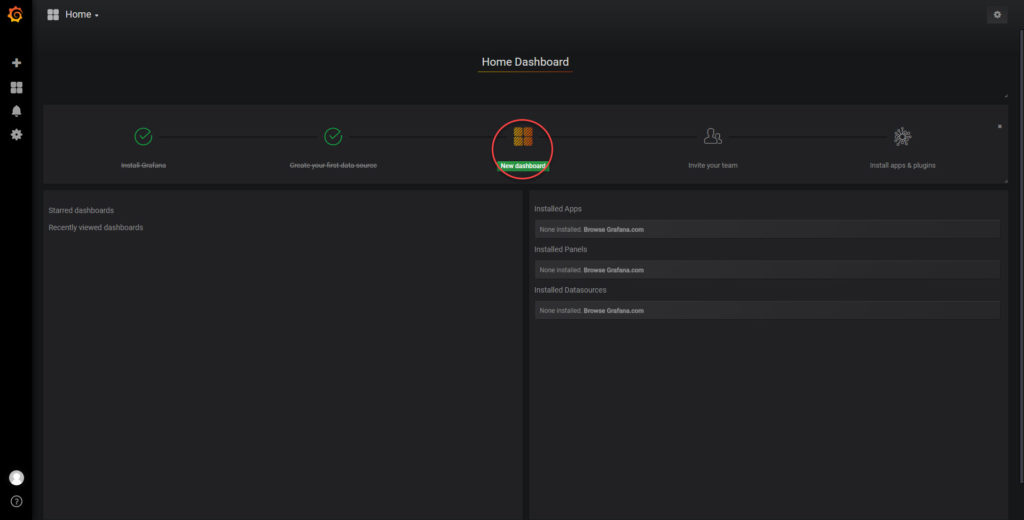

Creating a Dashboard

Finally…after six parts of this series, we’ve reached the point where we get to see a pretty picture. The moment we have all been waiting for! While I could just upload my JSON file for this dashboard, I learn much better if I actually go through and build it myself. So we’ll go that route. If we go back to our home dashboard, we can click on the next box:

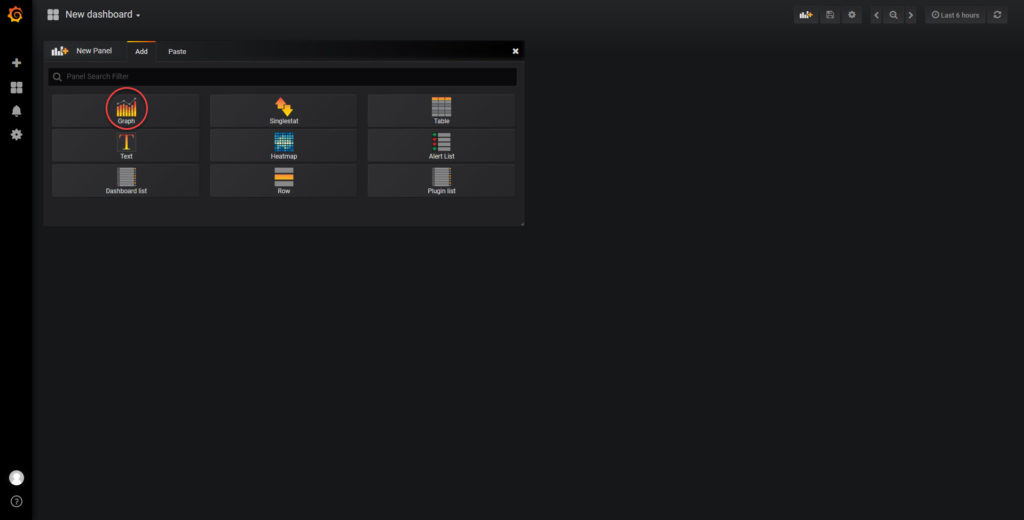

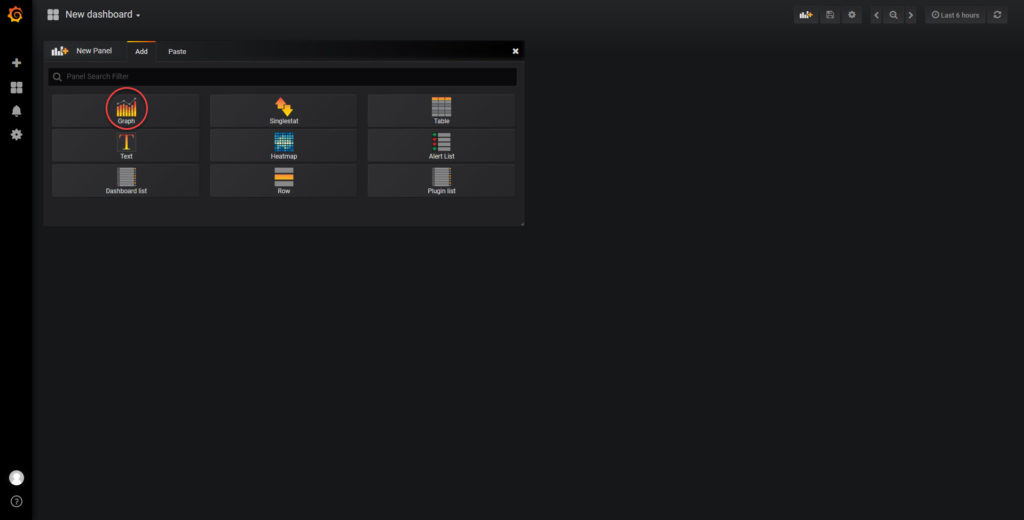

Simple Line Graph

We’ll start with something simple, so we’ll click on Graph:

This will give you a nice looking random sample data graph:

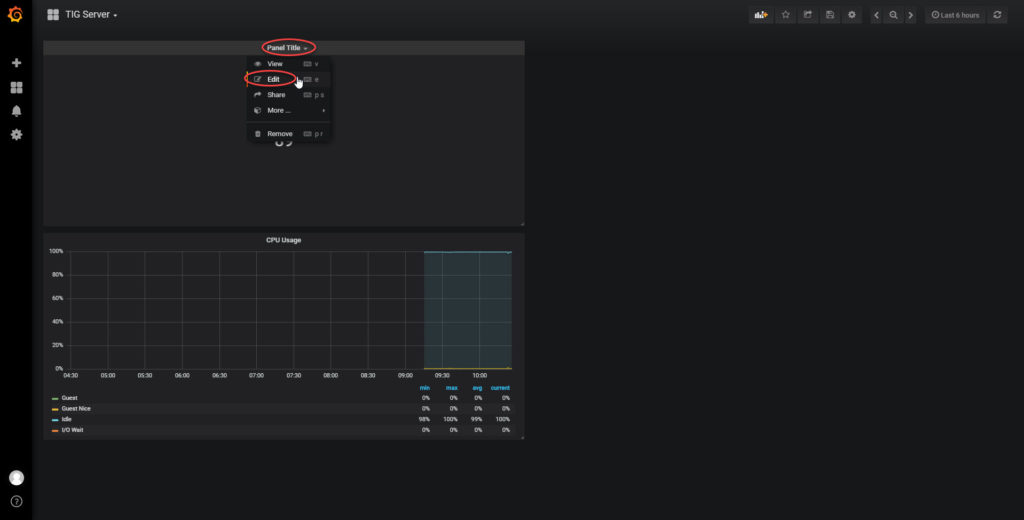

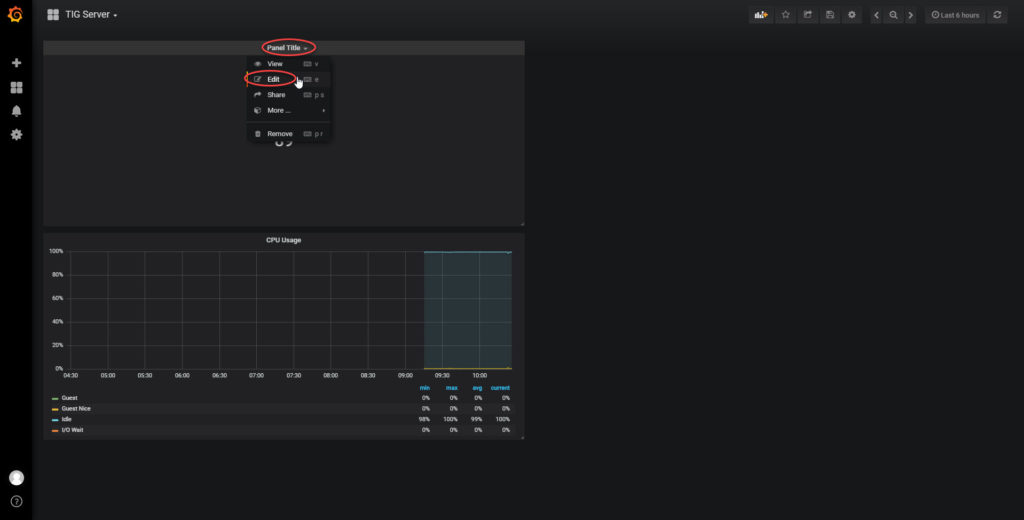

While this is cool looking, it wasn’t exactly intuitive that you click on the panel title to get editing options:

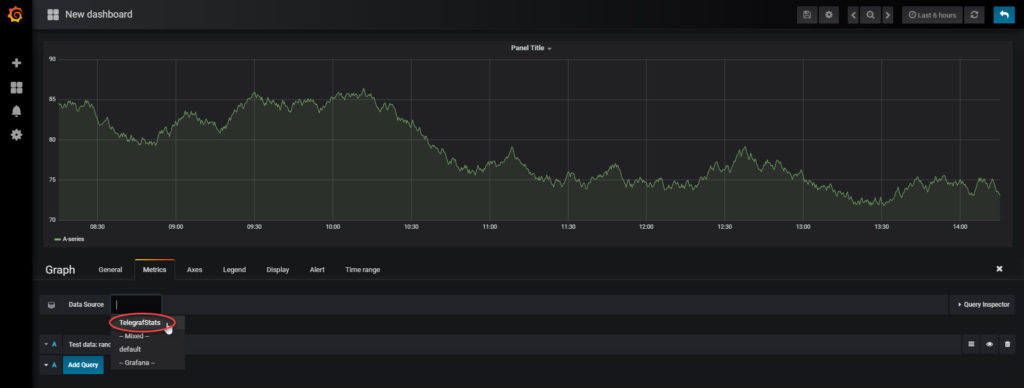

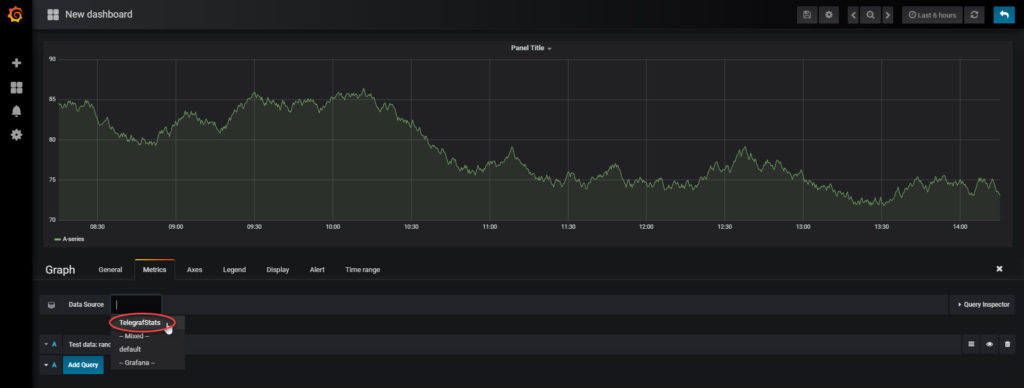

Once you’ve clicked edit, you should have a full screen of editing options. We’ll start by changing the data source to our newly created data source, TelegrafStats in my case:

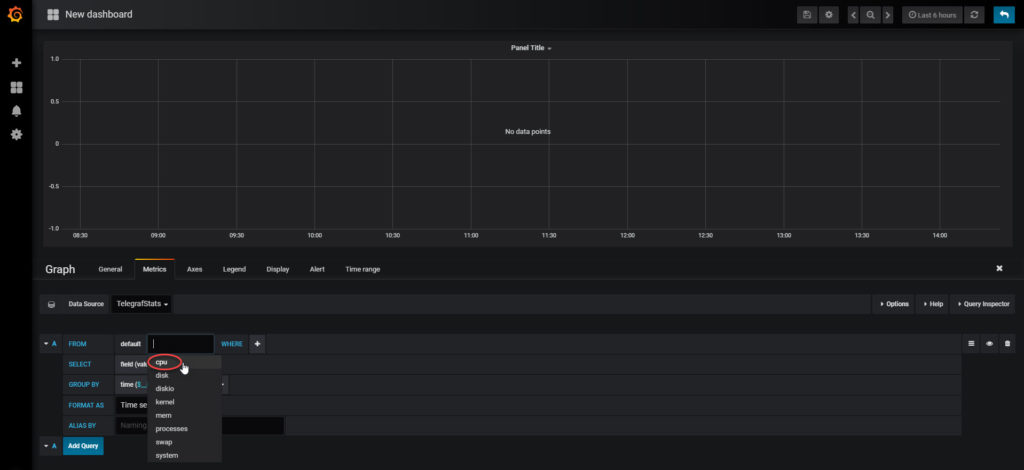

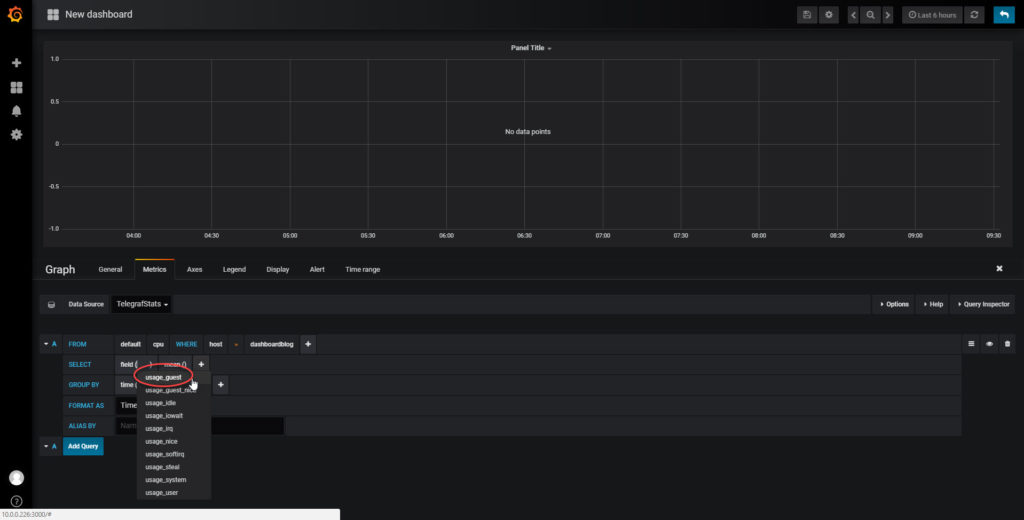

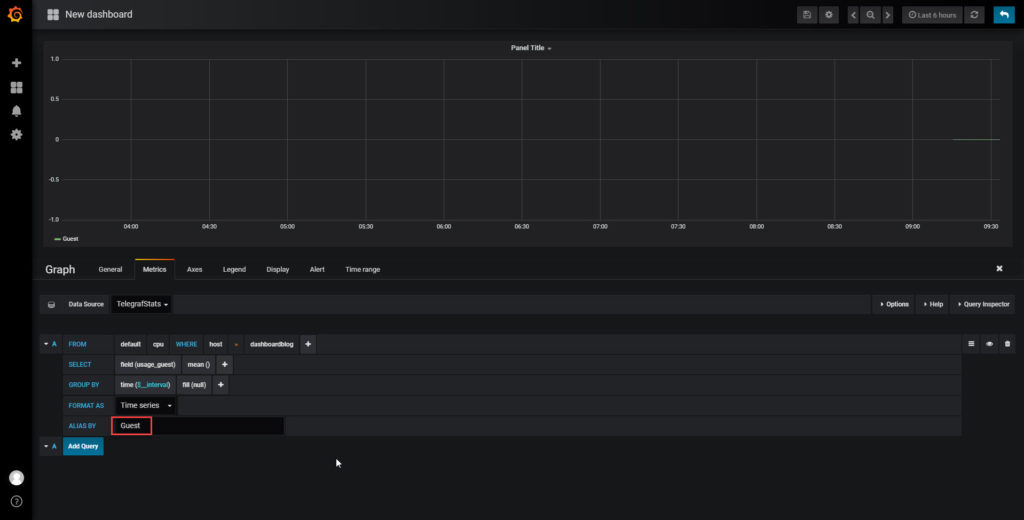

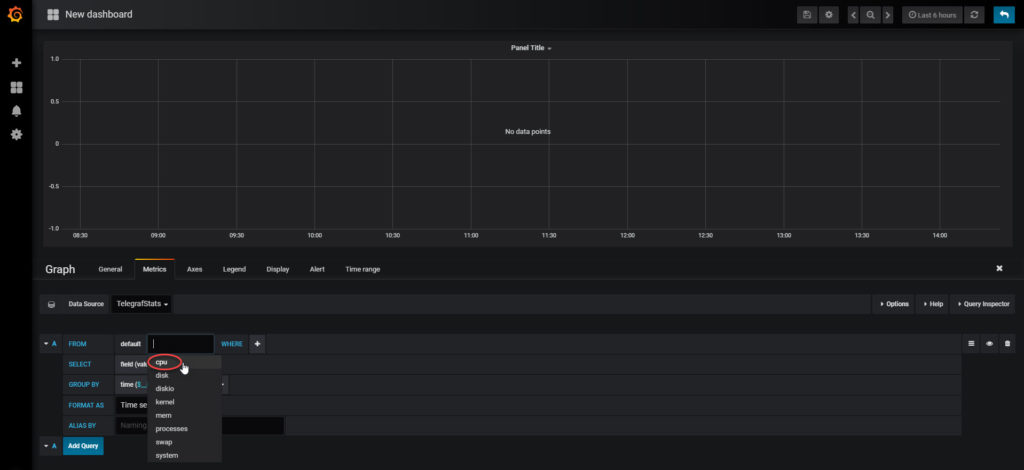

Now we need to pick a table to pull data from. We’ll start with something that seems simple like CPU statistics from the cpu table:

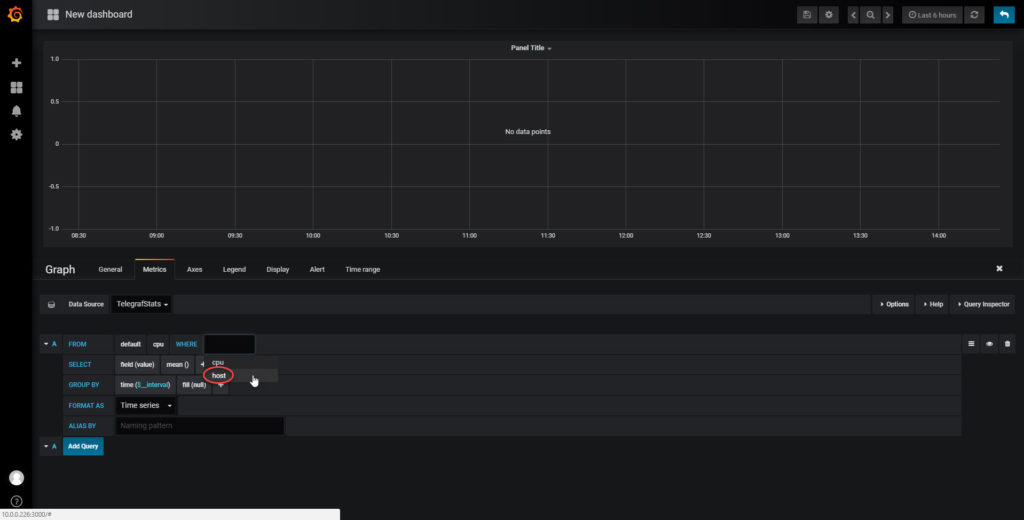

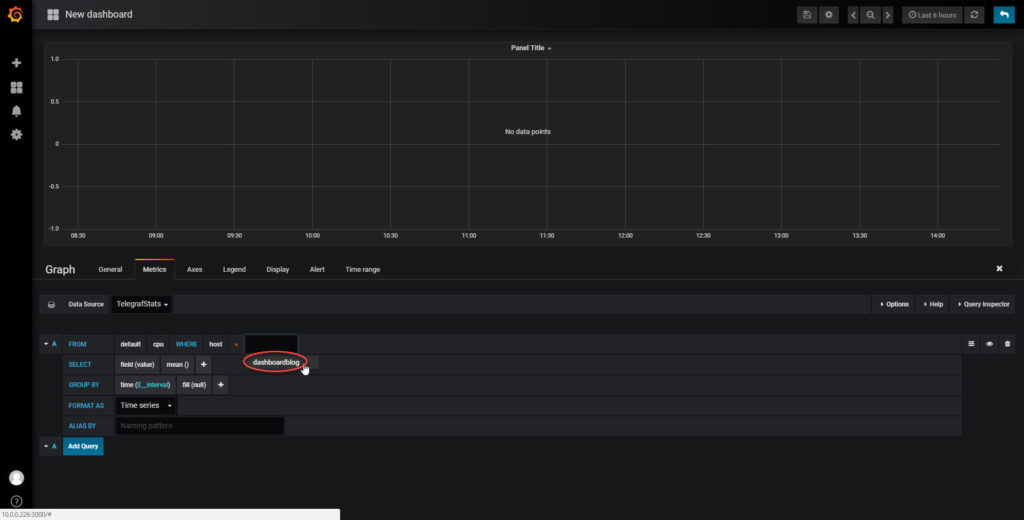

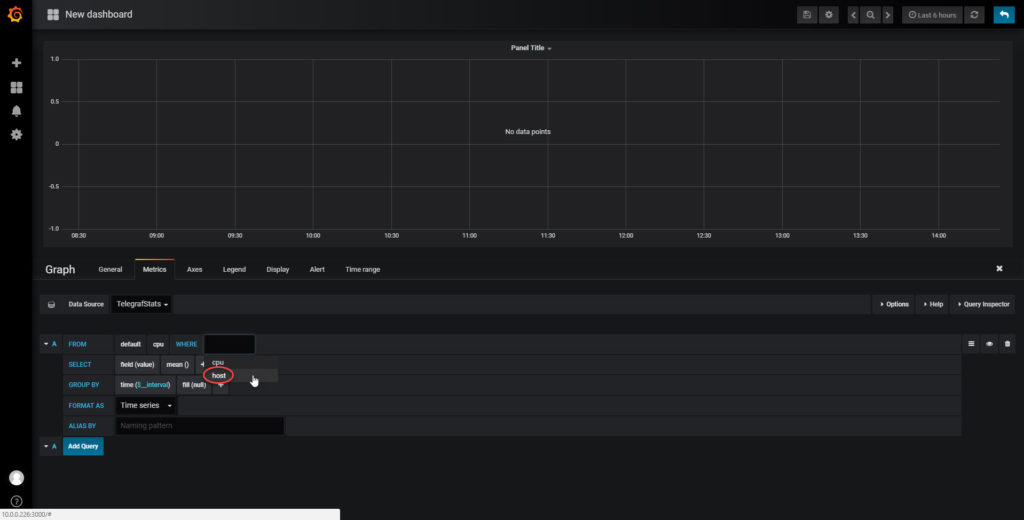

Next we’ll add host to our where clause so that we don’t aggregate data from multiple hosts as we add more devices in the future:

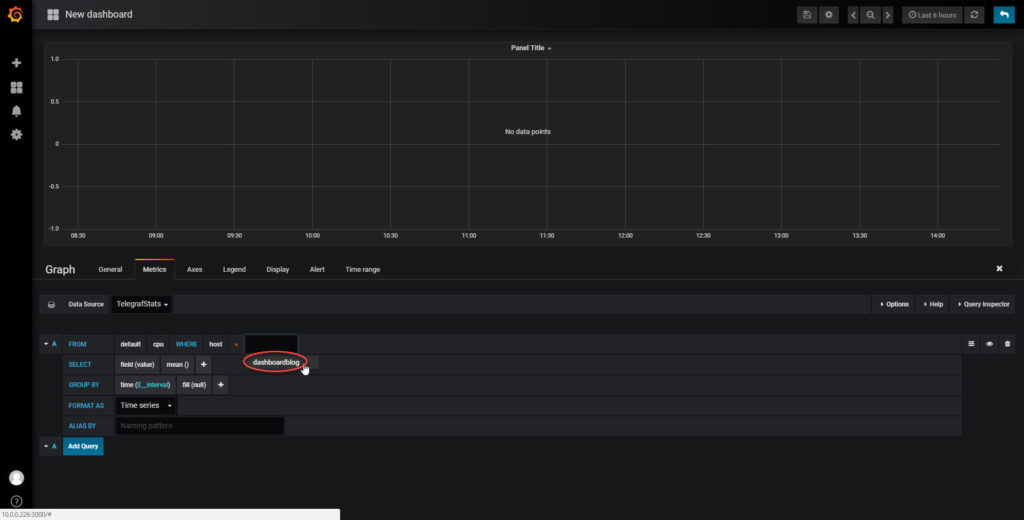

And now we need to specify the host we wish to filter on:

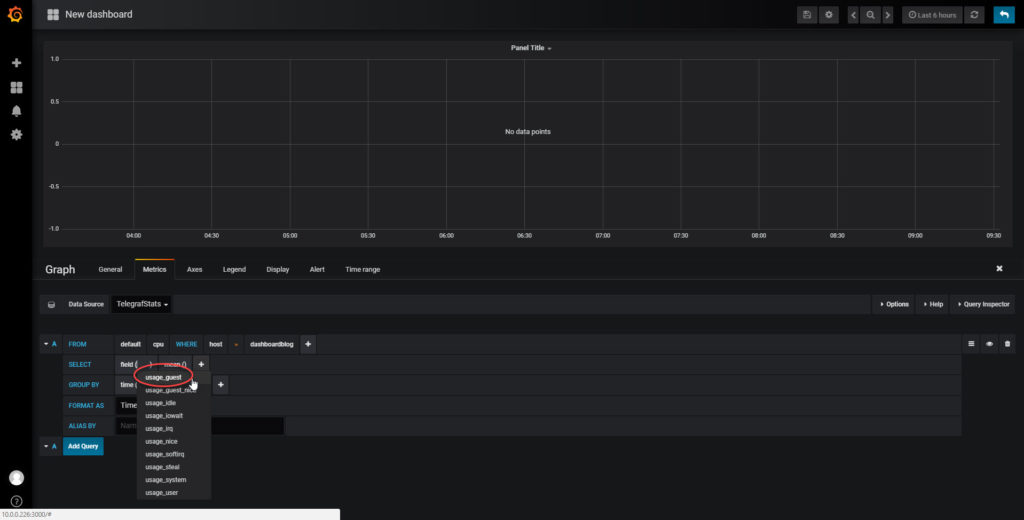

Finally, we can select a field. In some cases, this will be a single value of interest. As we look at CPU options, we’ll notice that there are quite a few to select from. This will vary based on the operating system that we are using, but in my case (Debian Stretch), I have a lot of choices. We’ll start by picking a single item from the list:

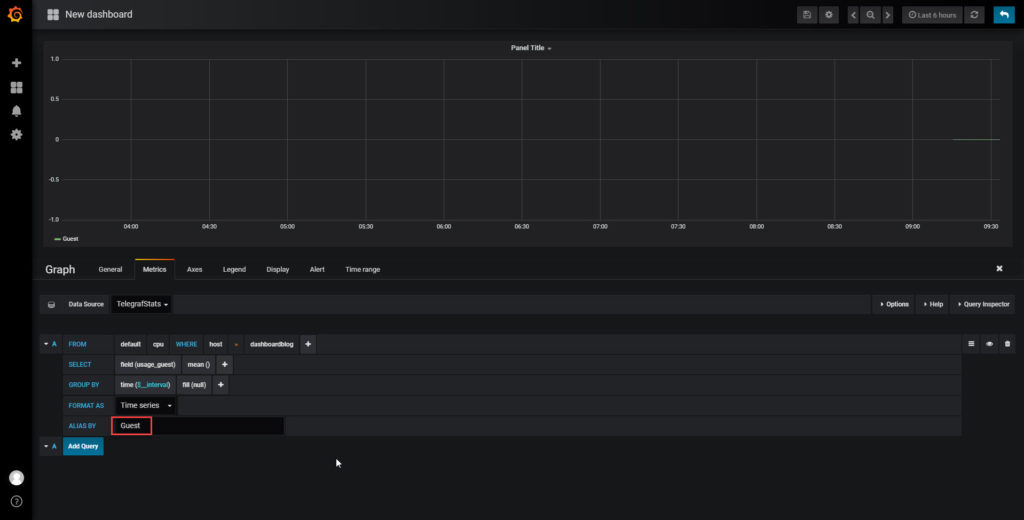

Before we get into the rest of our CPU options, let’s give our series a name:

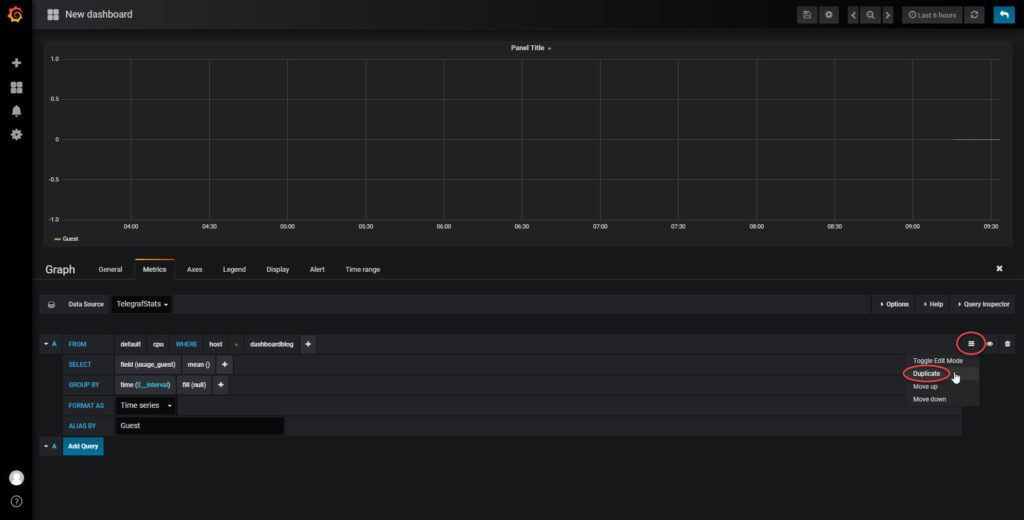

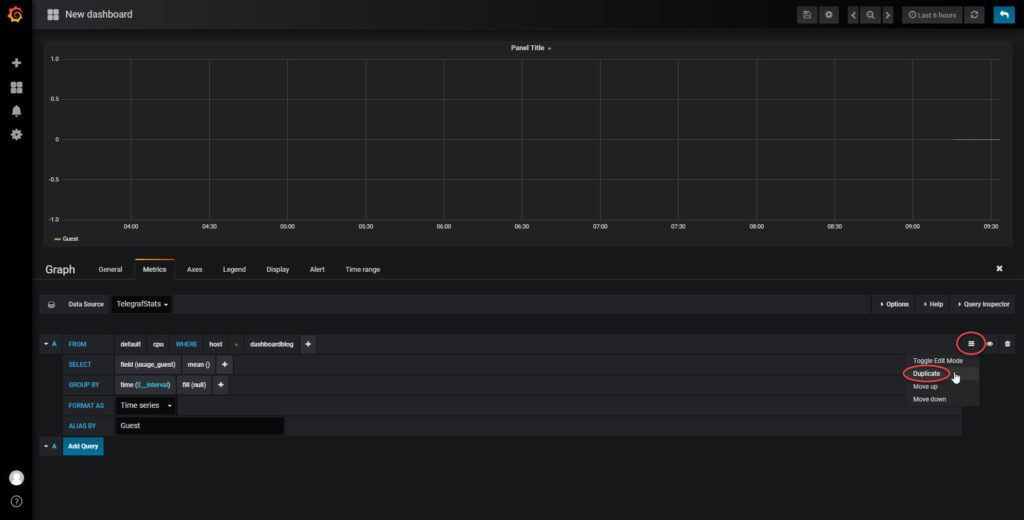

Given that we have all of these choices, we really need all of them on the graph to adequately illustrate CPU utilization. To make this a faster process, we’ll simply duplicate our first entry. We’ll click the menu button on the query and select Duplicate:

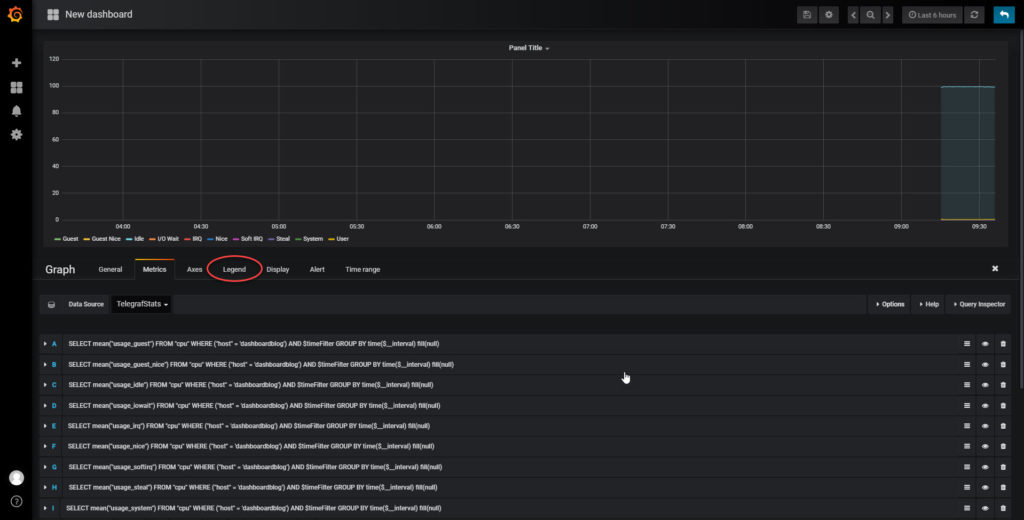

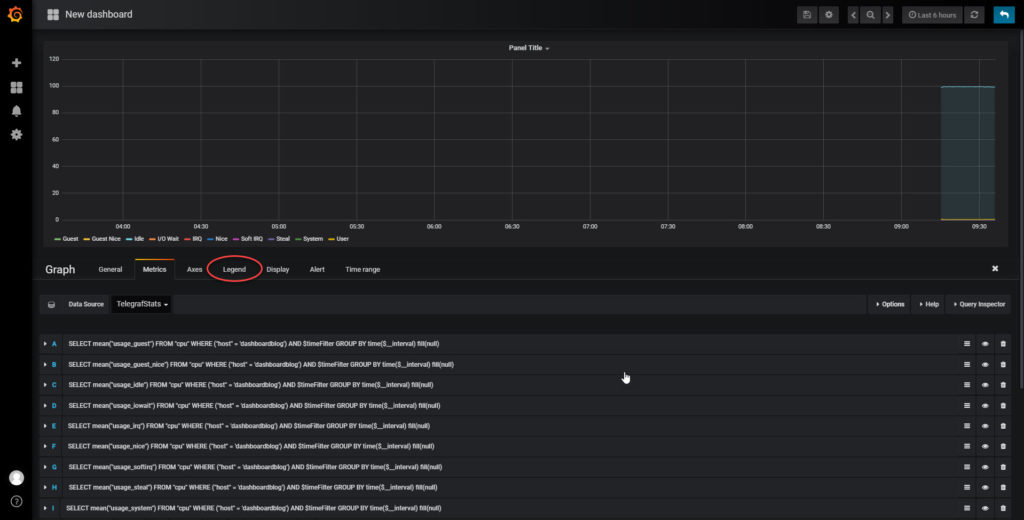

We’ll do this for every field available so that we can represent all of the possible ways our CPU will be utilized. Now that we’ve added all of our data, it’s time to make things look a little more polished. We’ll start with our legend. Click on the Legend tab:

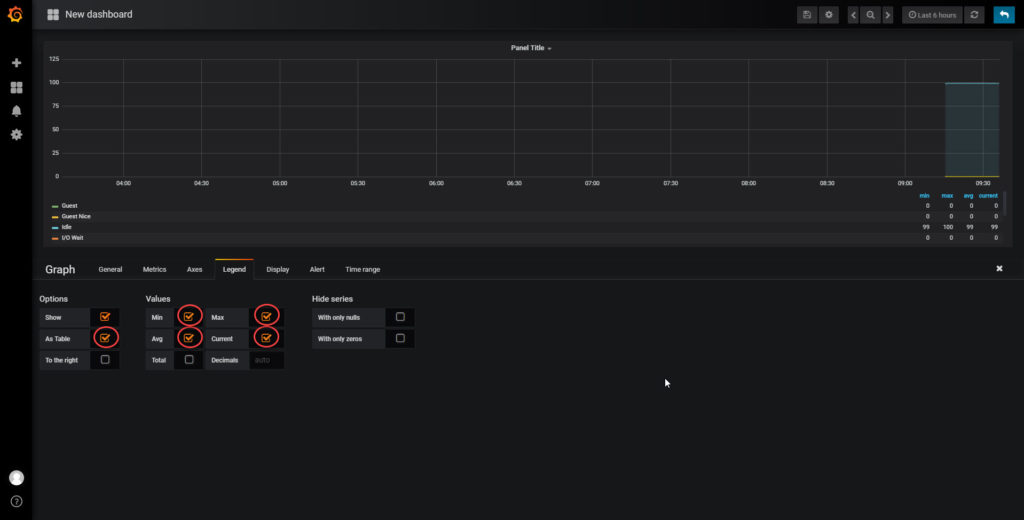

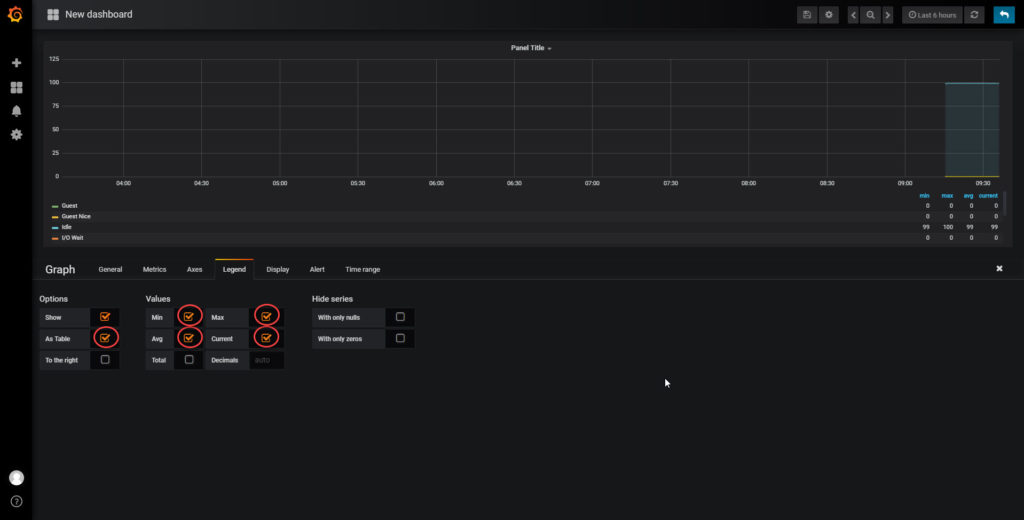

I prefer to see more information on my legend. Grafana gives us a great selection of options. I’ve chosen to display my legend as a table with min, max, avg, and current values:
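If you’re curious what those legend columns actually summarize, here’s a rough Python sketch computing min, max, avg, and current for one series. It assumes that current means the most recent non-null value and that missing points are skipped, which is my understanding of how Grafana treats them rather than something taken from its code; the sample values are made up.

```python
from statistics import mean
from typing import Dict, List, Optional


def legend_stats(values: List[Optional[float]]) -> Dict[str, float]:
    """Summarize a series like a table legend: min, max, avg, and current (last non-null)."""
    present = [v for v in values if v is not None]  # skip missing points
    if not present:
        raise ValueError("series has no data points")
    return {
        "min": min(present),
        "max": max(present),
        "avg": mean(present),
        "current": present[-1],
    }


# Hypothetical CPU usage samples (percent), with one missing point
print(legend_stats([3.0, 5.0, None, 4.0, 8.0]))
# {'min': 3.0, 'max': 8.0, 'avg': 5.0, 'current': 8.0}
```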

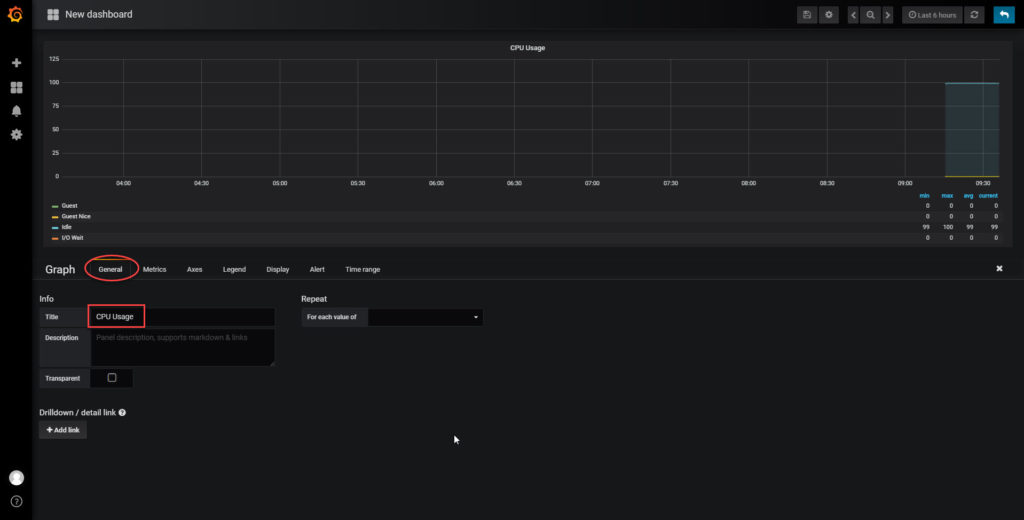

Next we’ll click on our General tab so that we can adjust our title:

The only thing left to do now is to adjust a few settings for our axes. From the Axes tab, we’ll open the Unit menu, choose none, and then select percent (0-100):

Now that we have it set to percent, we’ll also want to set the range from 0 to 100 as our usage should never exceed 100:

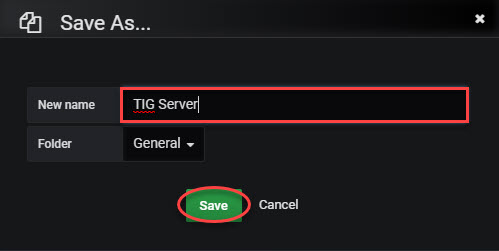

Next we need to click back and actually save our work. Up until this point, nothing we have done has been saved. Once we have clicked the back button in the top right corner, we should see our completed panel and we’re ready to click the save button:

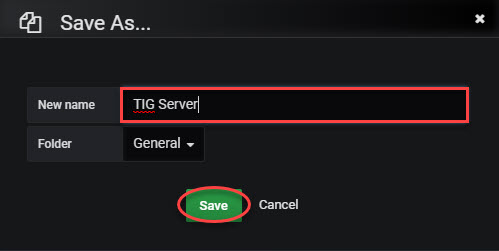

Now we just need to enter a name for the new dashboard and click Save:

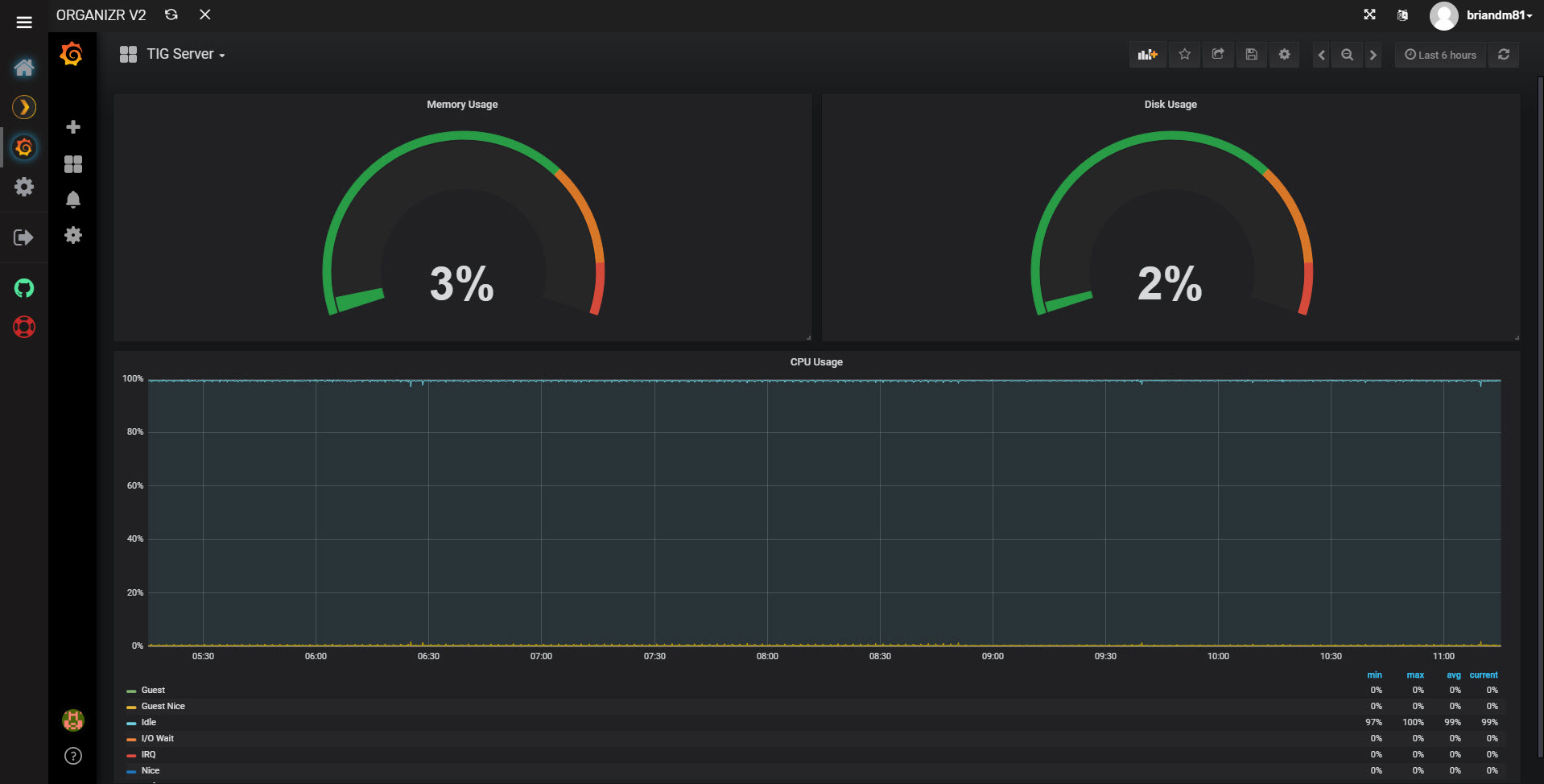

We have officially created our first dashboard in Grafana! But wait, that will be a pretty boring dashboard. Let’s add some memory and disk metrics next. To do this, we’ll use a different type of visualization.

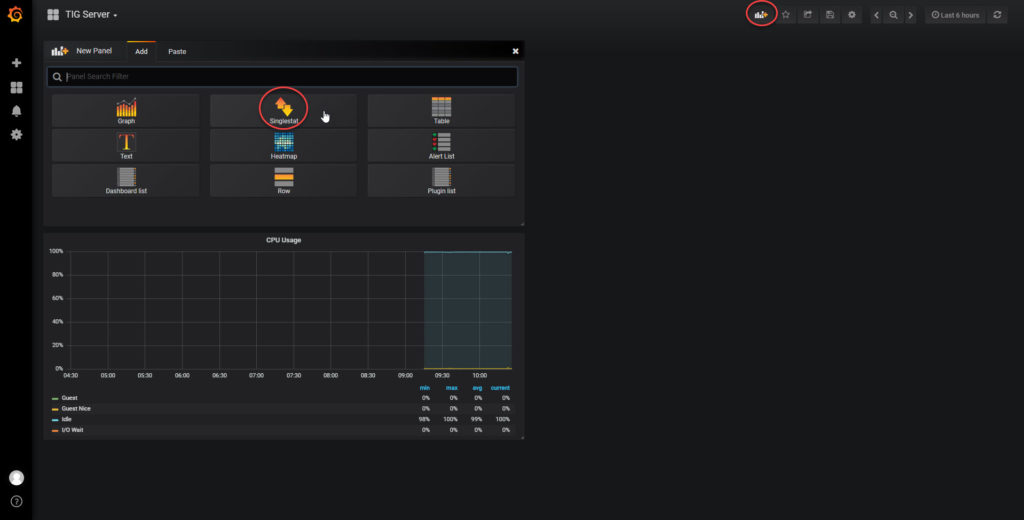

Singlestat (Gauge)

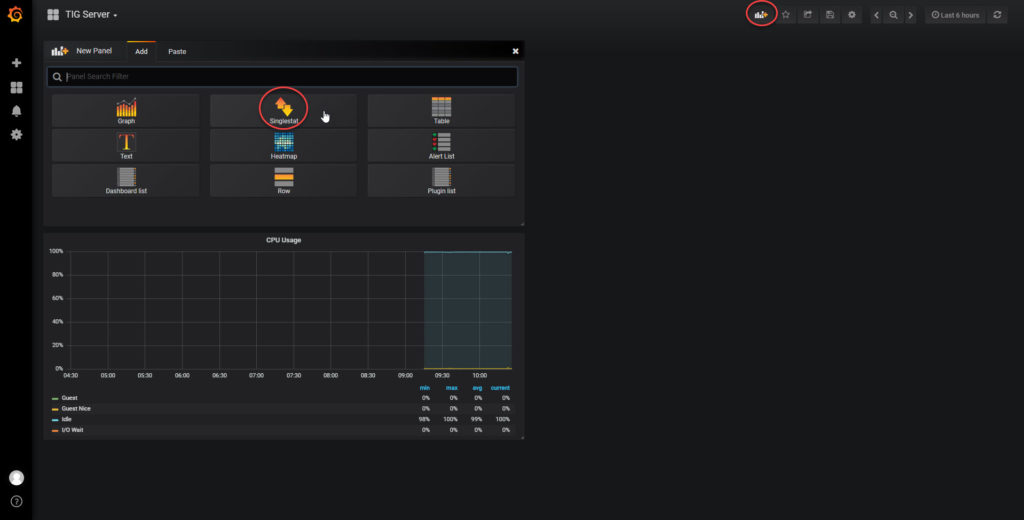

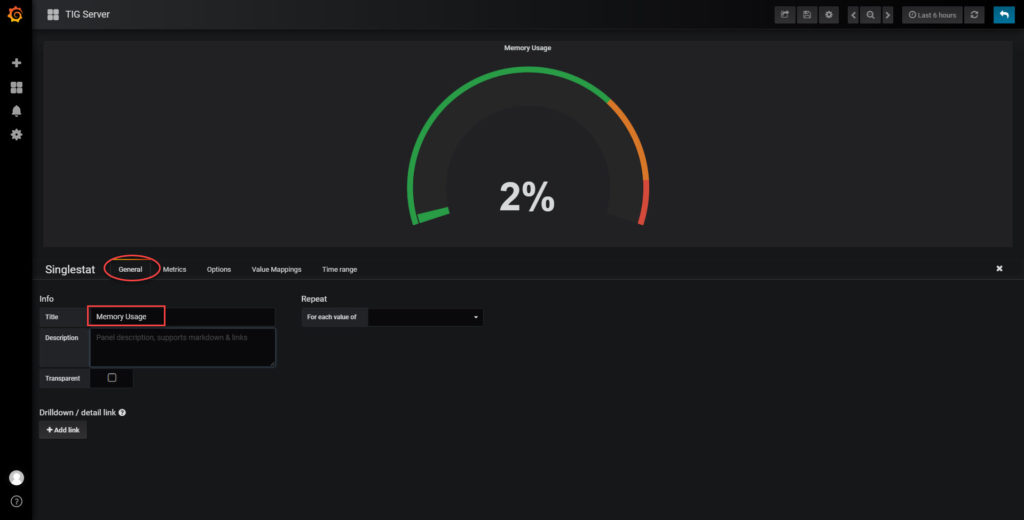

Everyone loves pretty gauges, so let’s add one or two of those to our dashboard. To create a gauge we add a new panel:

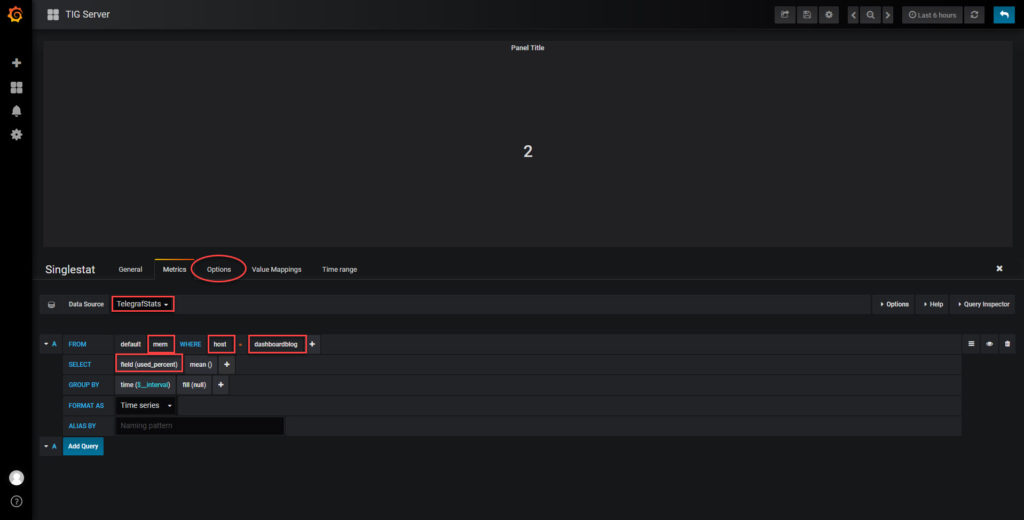

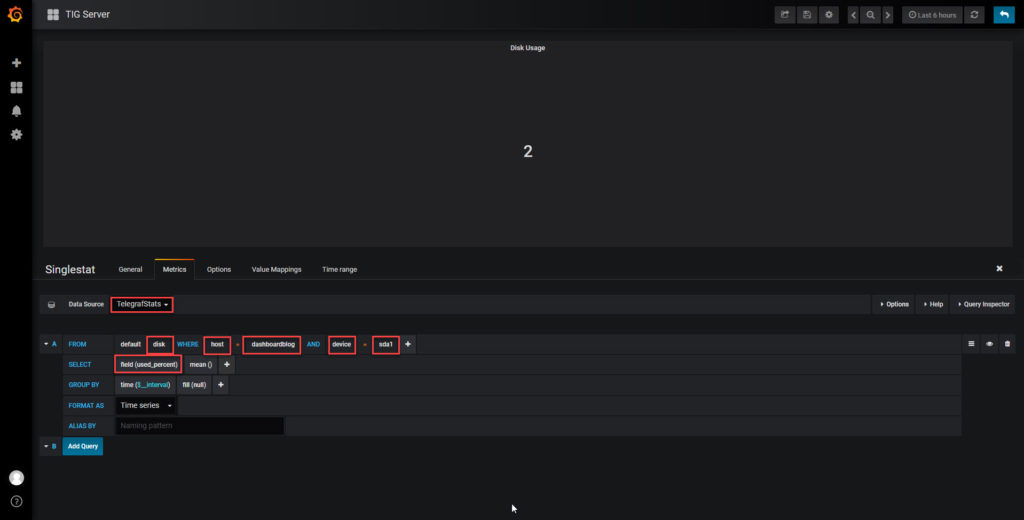

The Singlestat panel can be modified the same way as our graph:

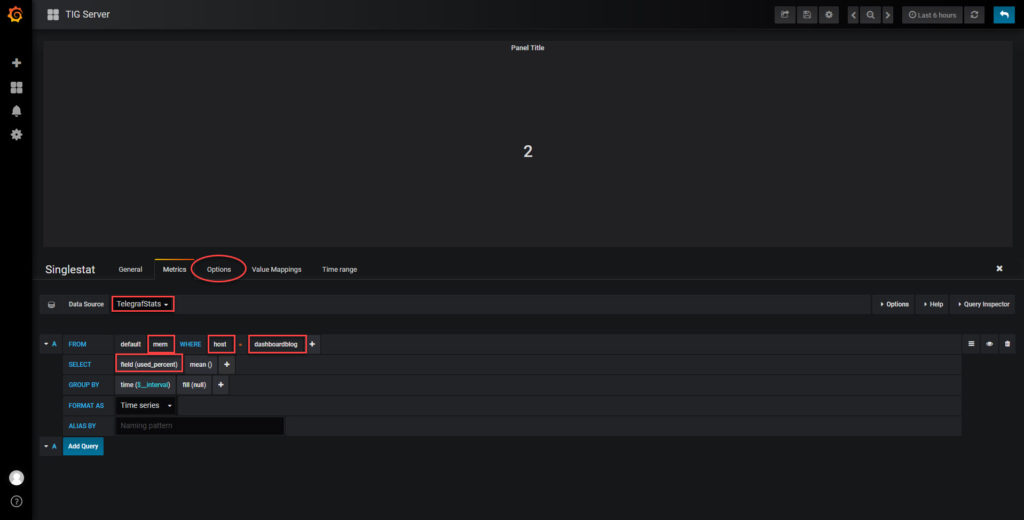

Now we modify our query just like we did with a graph and then we’ll go to the options tab:

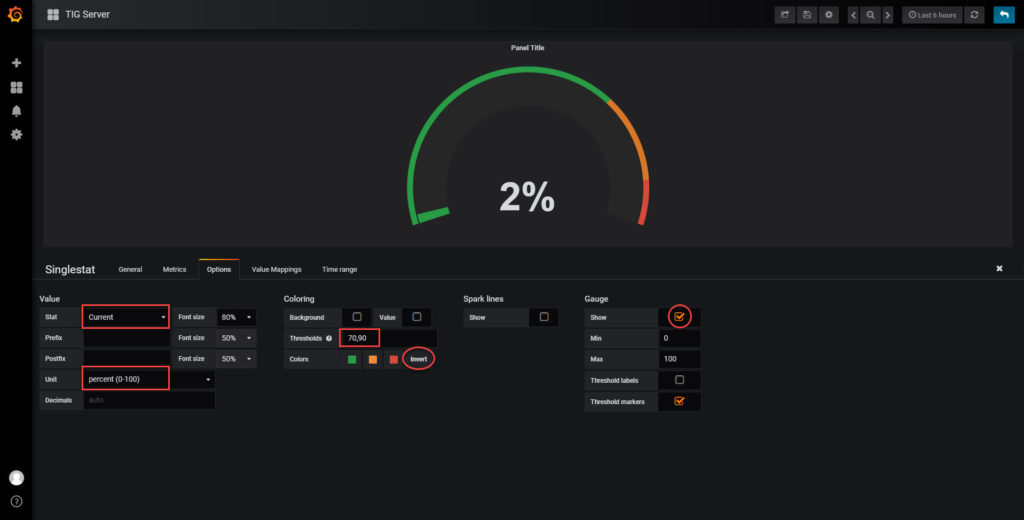

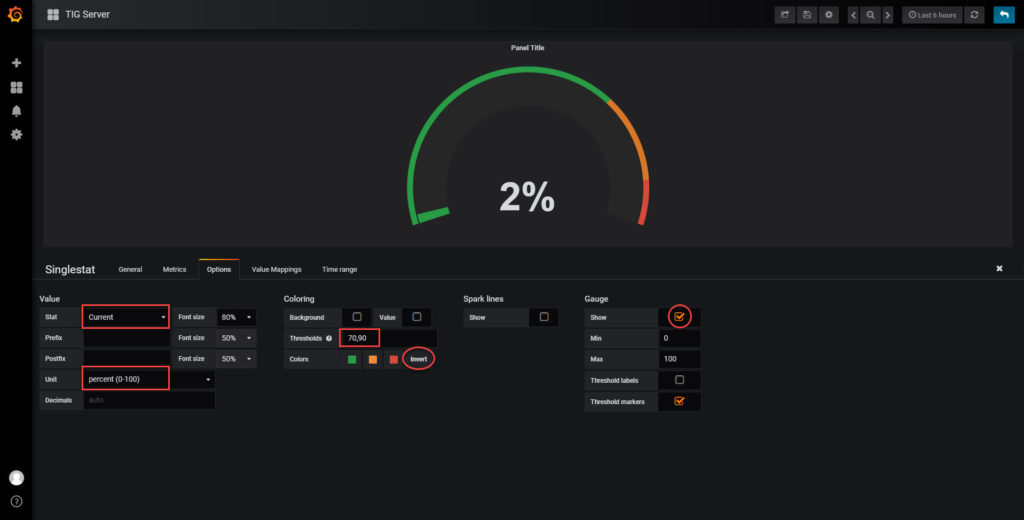

We’re going to first change our stat. Essentially, we want to change it from the default of Average to the setting of Current. This ensures that our gauge will always show the most recent value rather than an average of the time period selected. We also need to change our thresholds; I chose 70 and 90 for my orange and red. We’ll also set our gauge to Show and set our units to percent. If your colors are reversed, just click the Invert button:
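To illustrate why Current matters for a gauge and how the 70 and 90 thresholds pick a color, here’s a small Python sketch. The readings are hypothetical, and the boundary rule (a value at or above a threshold moves into the next color band) is an assumption on my part, not something taken from Grafana’s code.

```python
from statistics import mean
from typing import List, Sequence


def gauge_color(value: float, thresholds: List[float],
                colors: Sequence[str] = ("green", "orange", "red")) -> str:
    """Pick a color band; assumes sorted thresholds and that reaching a threshold moves up one band."""
    band = sum(1 for t in thresholds if value >= t)
    return colors[band]


samples = [40.0, 55.0, 72.0, 93.0]  # hypothetical memory usage readings (percent)
average, current = mean(samples), samples[-1]
print(average, gauge_color(average, [70, 90]))  # 65.0 green
print(current, gauge_color(current, [70, 90]))  # 93.0 red
```

The average hides the fact that usage just crossed 90%, which is exactly why the gauge is switched to Current.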

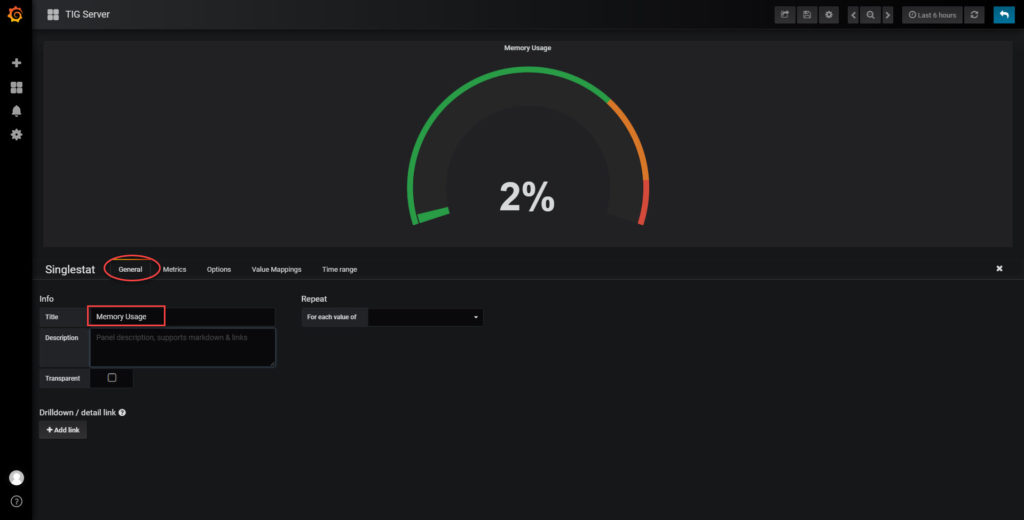

Once we have our gauge configured, we just need to name our panel:

One More SingleStat

I won’t go step by step, but here are the settings I used for the disk space gauge:

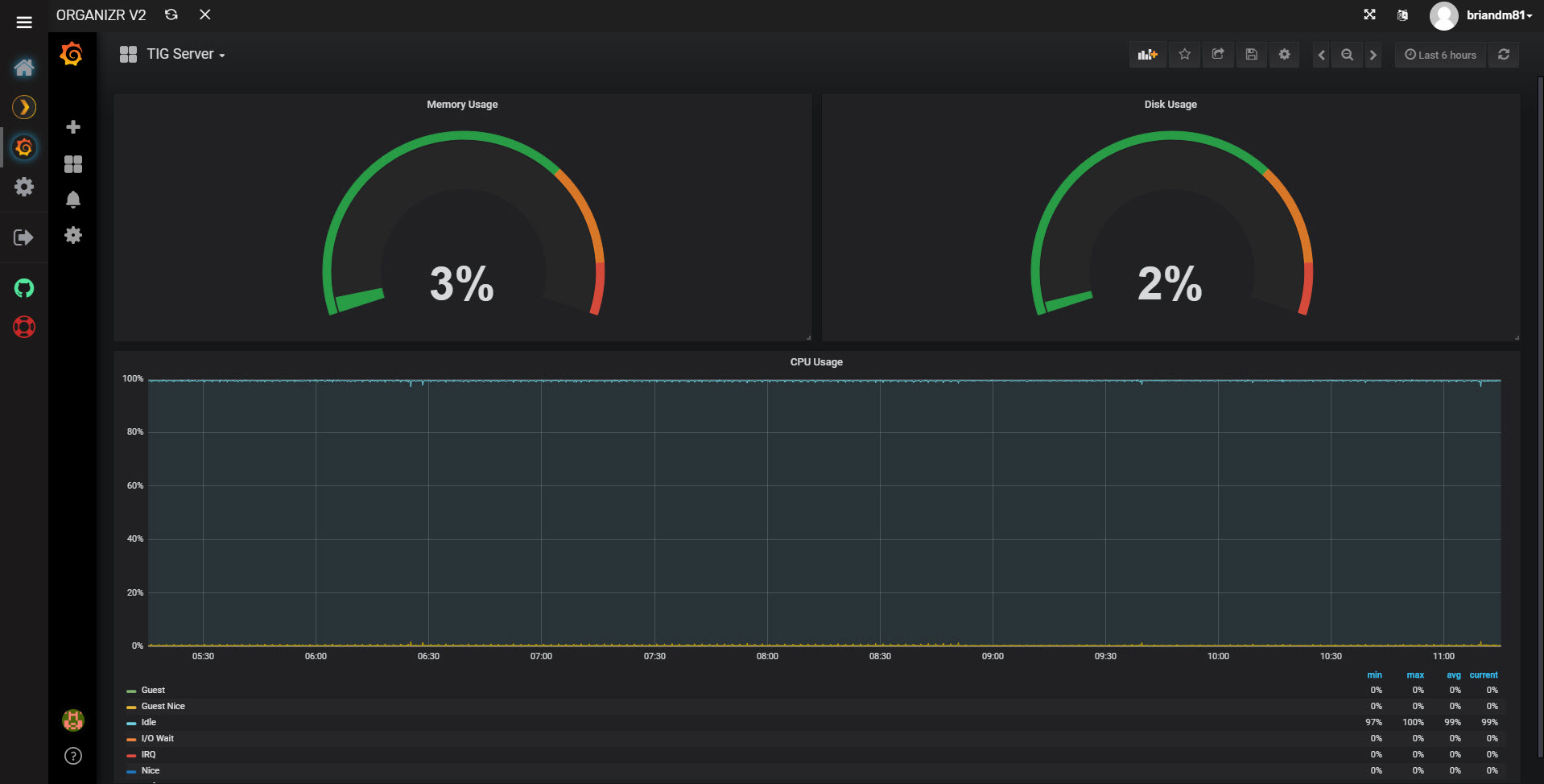

The Dashboard

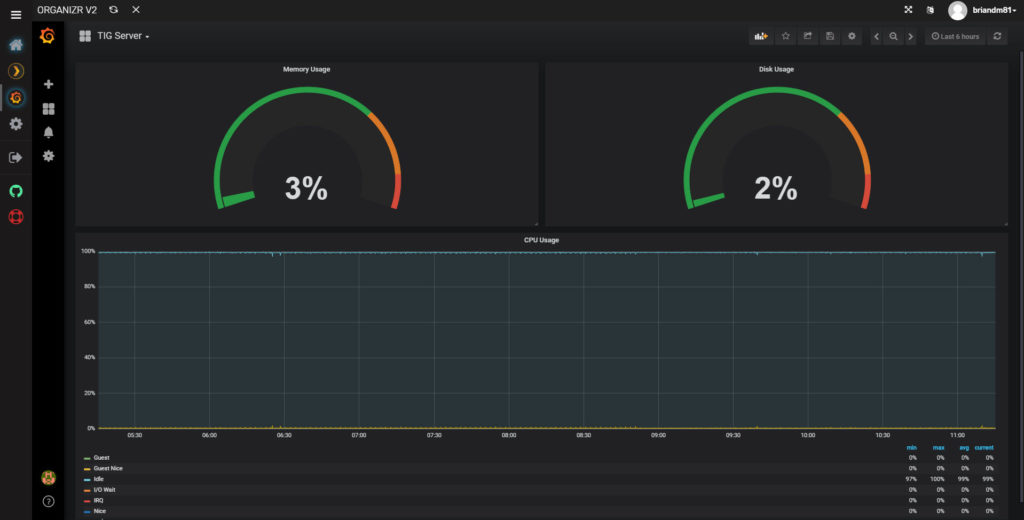

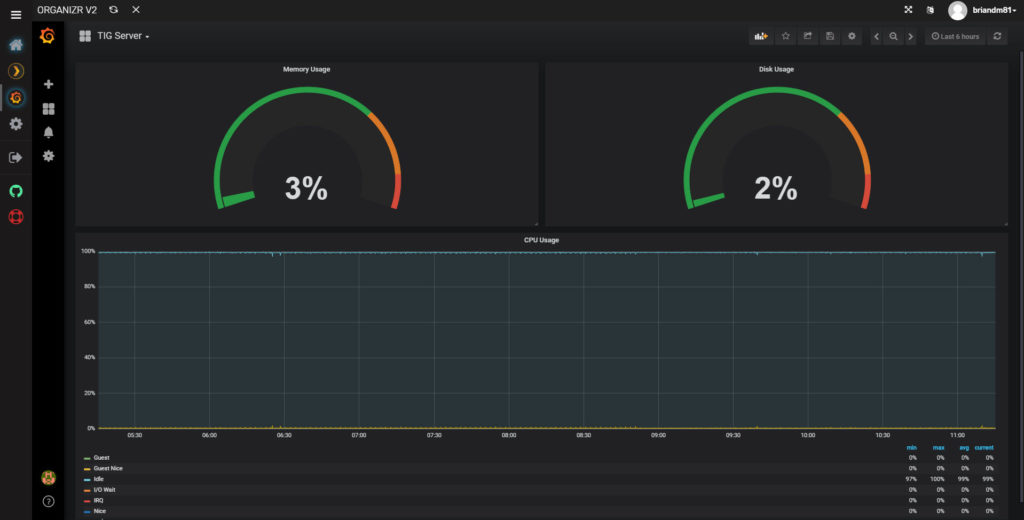

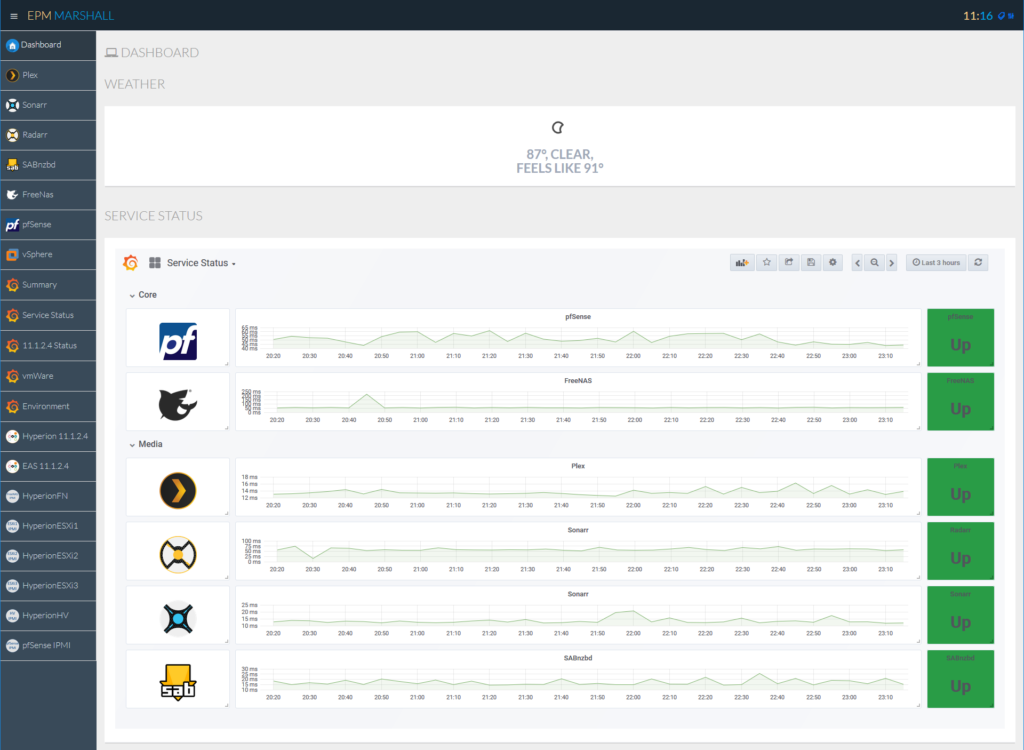

Finally, we have a dashboard. I moved things around a bit and ended up with this:

Putting It All Together

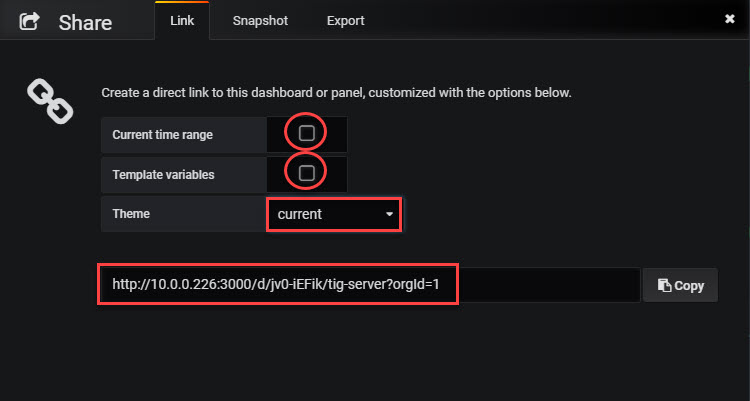

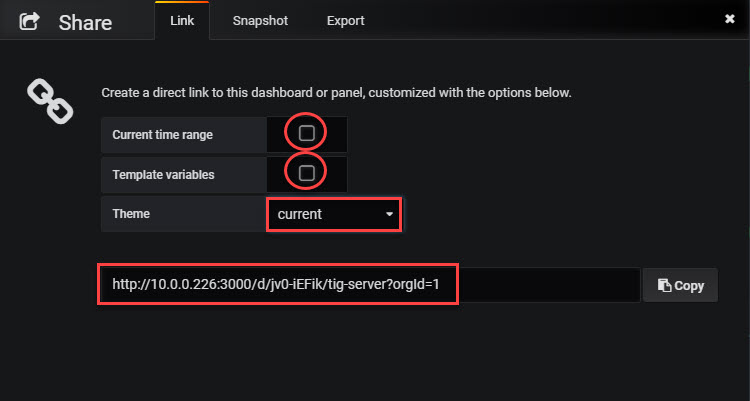

Now that we have a dashboard, we should be ready to put it all together. That means going all the way back to Organizr. Before we head over there, we need to copy a link. Click on the share button:

Next we will deselect Current time range and Template variables. Finally we’ll copy the link:

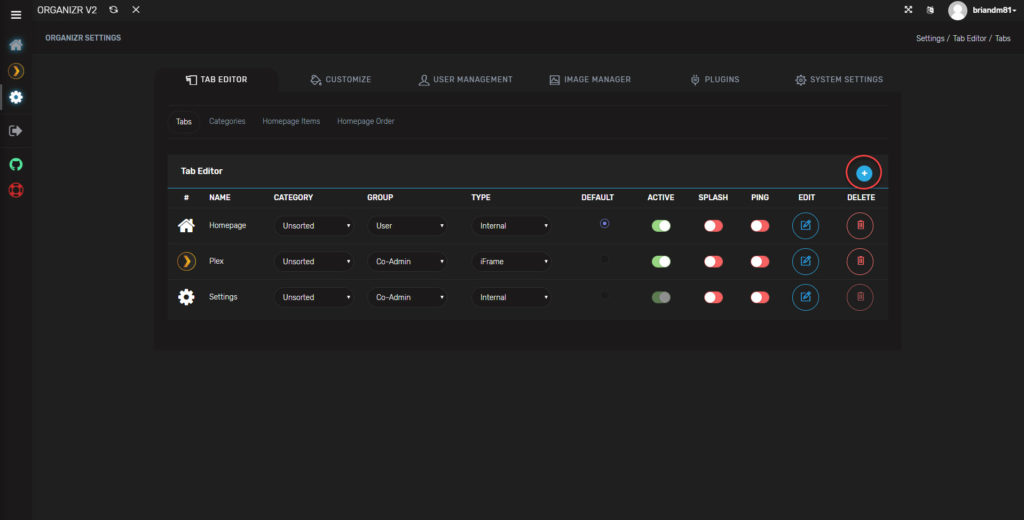

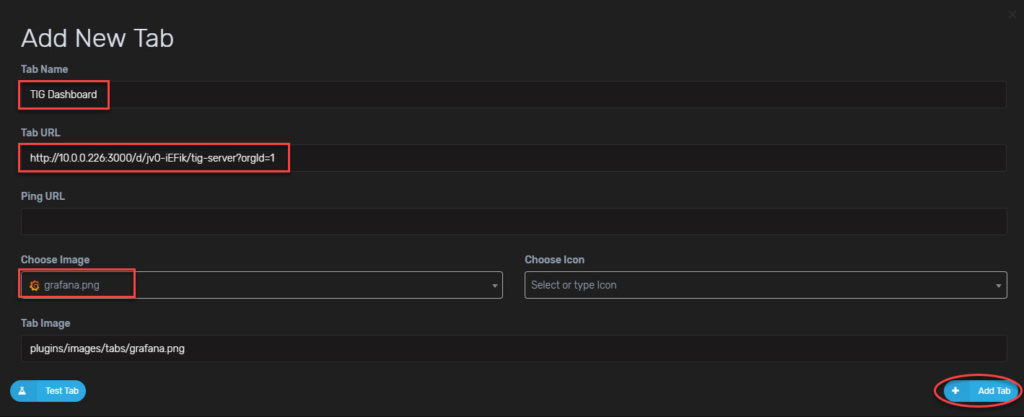

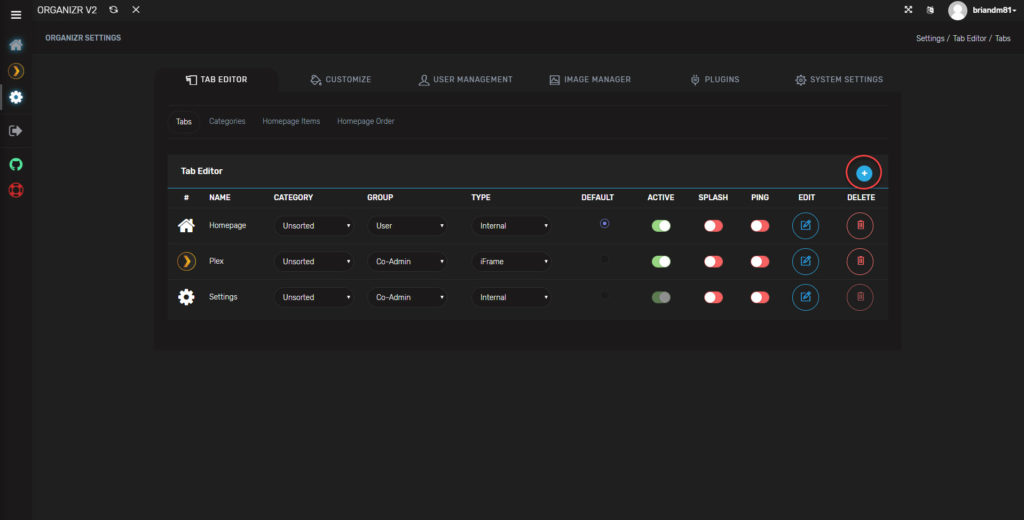

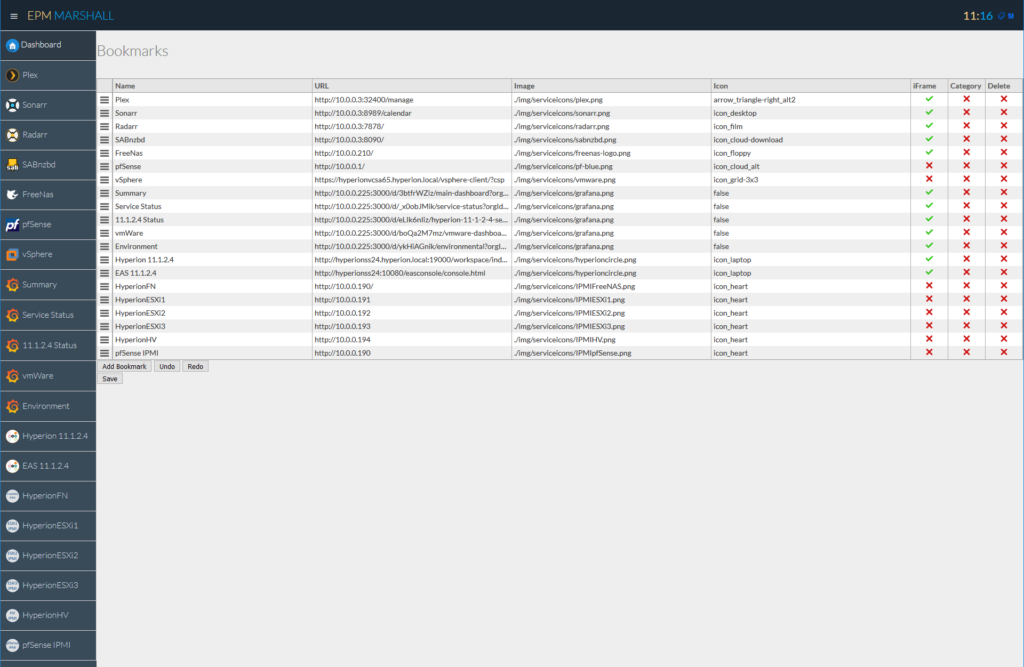

We’ll head back over to Organizr, go to our Tab Editor, and click the add new tab button:

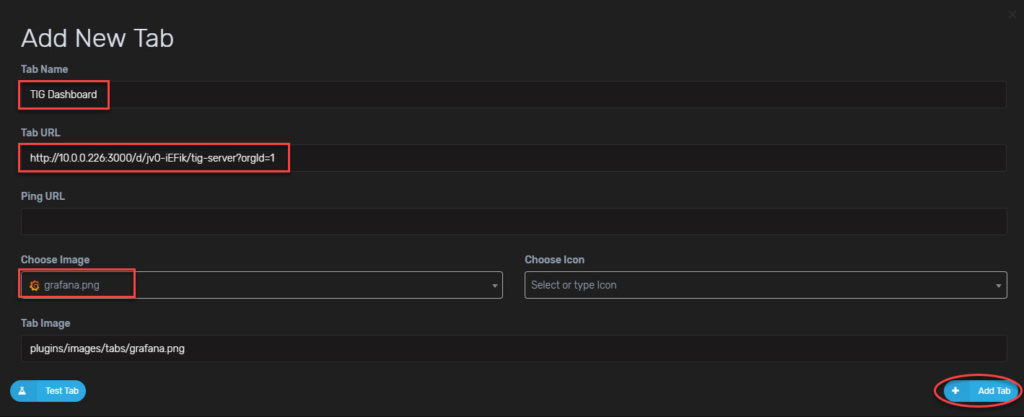

Now we just need to name our tab, paste our URL, and choose the Grafana logo:

And once we reload Organizr, here we go:

Conclusion

If you have followed the entire series so far, you should have a fully functional dashboard inside of Organizr. Soup to nuts, as promised. We’ll continue the series by adding more devices and data into InfluxDB and Grafana…another day.

Brian Marshall

July 30, 2018

As we continue on our homelab dashboard journey, we’re ready to start populating our time-series database (InfluxDB) with some actual data. To do this, we’ll start by installing Telegraf. But, before we dive in, let’s take a look at the series so far:

- An Introduction

- Organizr

- Organizr Continued

- InfluxDB

- Telegraf Introduction

What is Telegraf

In part 1 of this series, I gave a brief overview of Telegraf, but as we did with InfluxDB in our last post, let’s dig a little deeper. Telegraf is a server agent designed to collect and report metrics. We’ll look at Telegraf from two perspectives. The first perspective is using Telegraf to gather statistics about the server on which it has been installed. This means that Telegraf will provide us data like CPU usage, memory usage, disk usage, and the like. It will take that data and send it over to our InfluxDB database for storage and reporting.

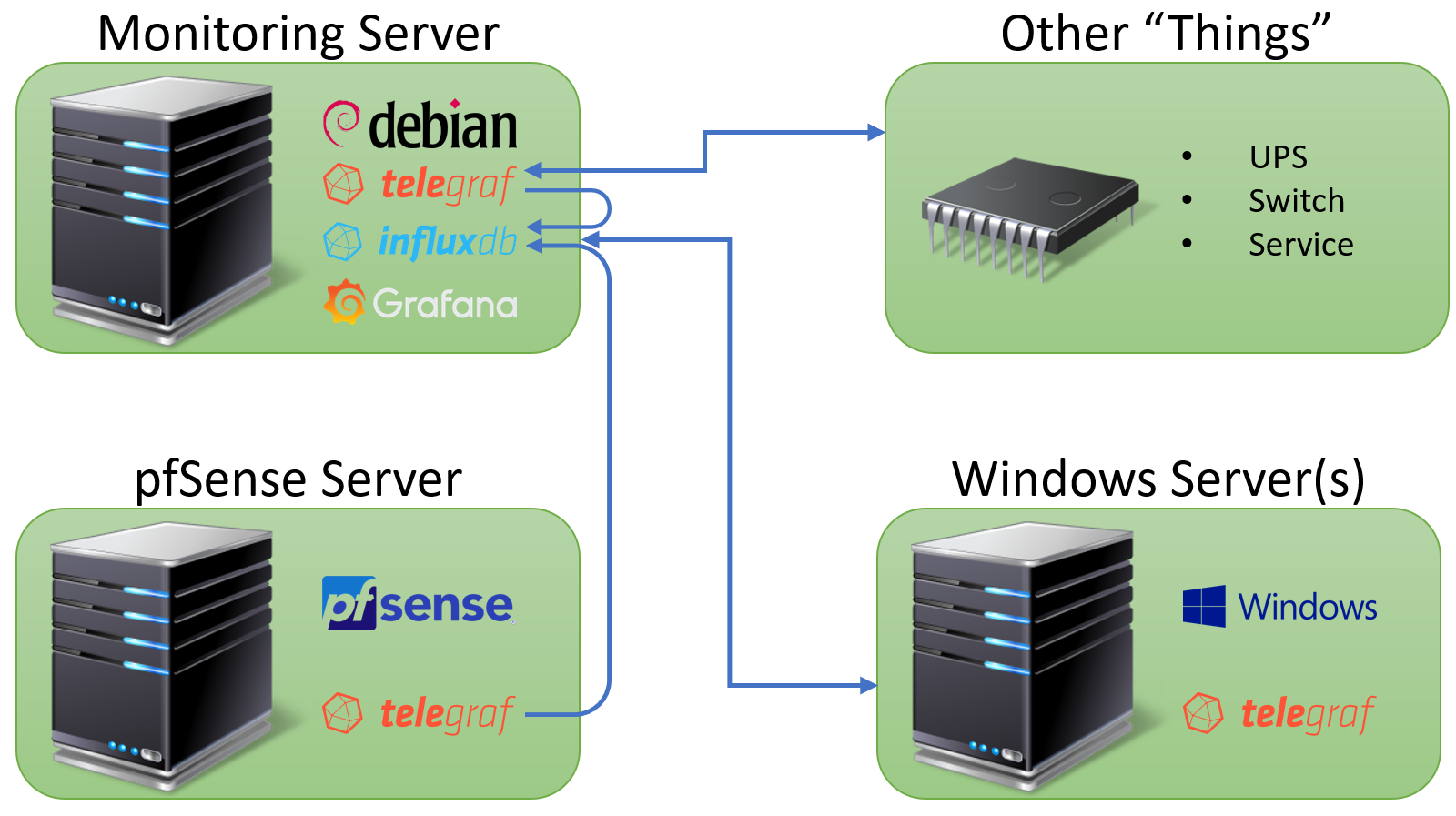

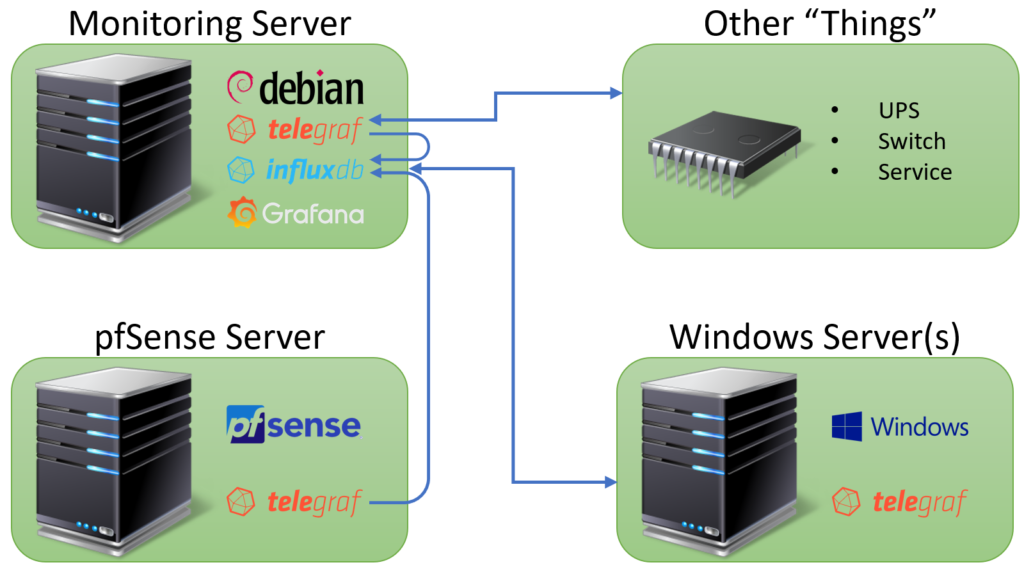

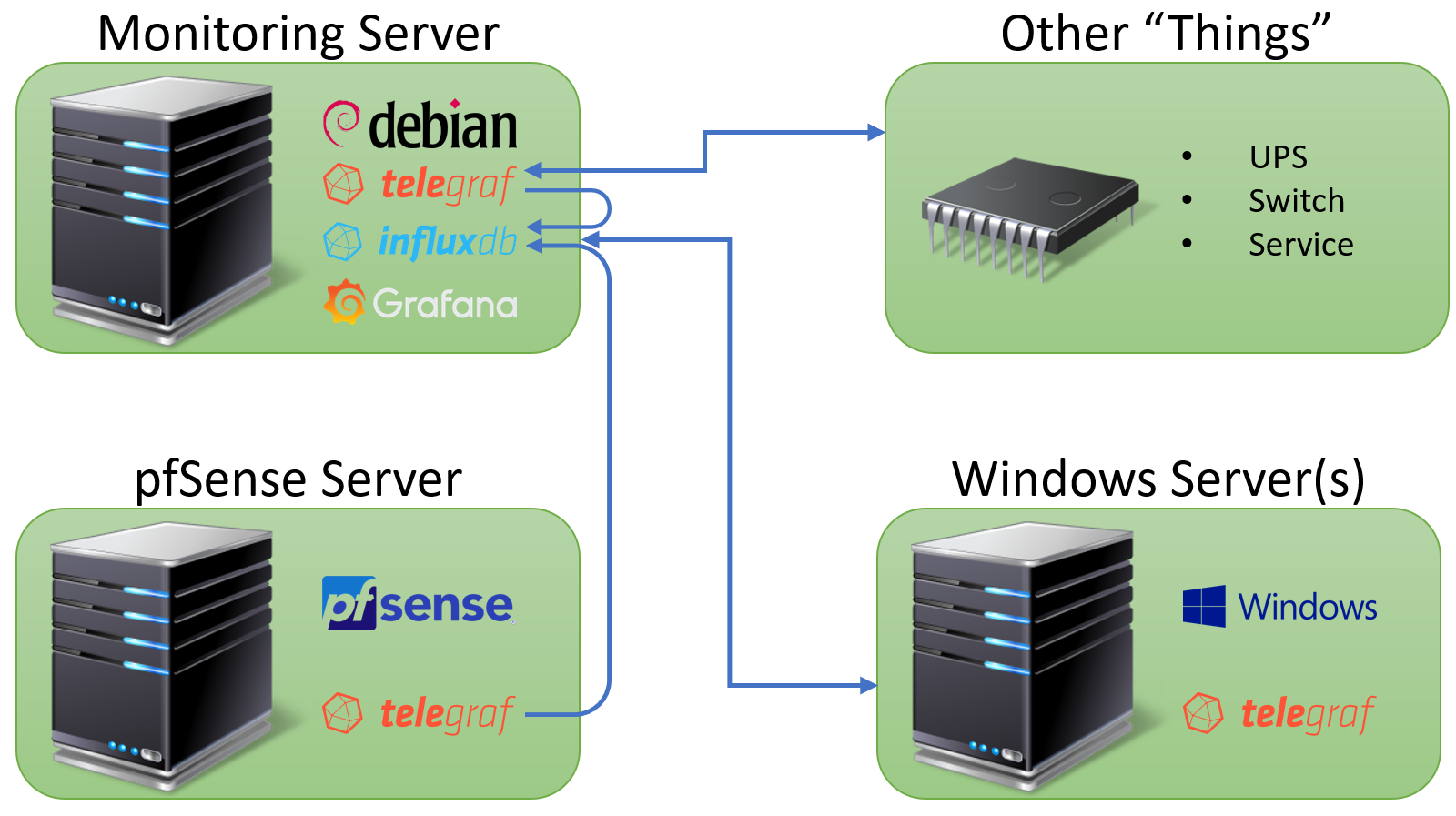

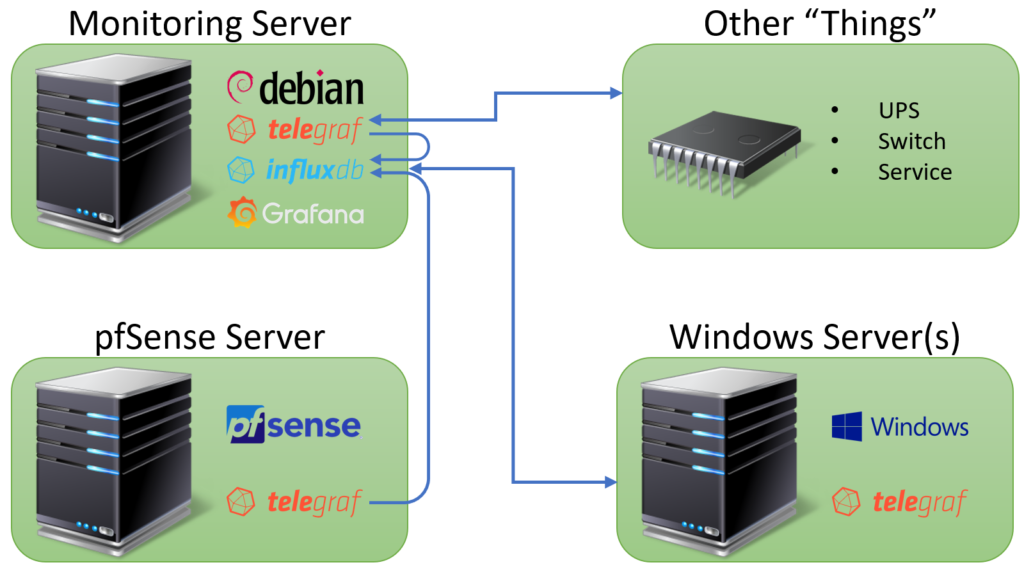

The second perspective is using Telegraf to connect to other external systems and services. For instance, we can use Telegraf to connect to a Supermicro system using IPMI or to a UPS using SNMP. Each of these types of connectivity is handled by an input plugin. The list of plugins is extensive and far too long to list here. We’ll cover several of the plugins in future posts, but today we’ll focus on the basics. Before we get into the installation, let’s see what this setup looks like in the form of a diagram:

Looking at the diagram, we’ll see that we have our monitoring server with InfluxDB, Telegraf, and Grafana. Next we have a couple of examples of systems running the Telegraf agent on both Windows and FreeBSD. Finally, we have the other “things” box. This includes our other devices that Telegraf monitors without needing to actually be installed on them. The coolest part about Telegraf for my purposes is that it seems to work with almost everything in my lab. The biggest miss here is VMware, which does not have a plugin yet. I’m hoping this changes in the future, but for now, we’ll find another way to handle VMware.

Installing Telegraf on Linux

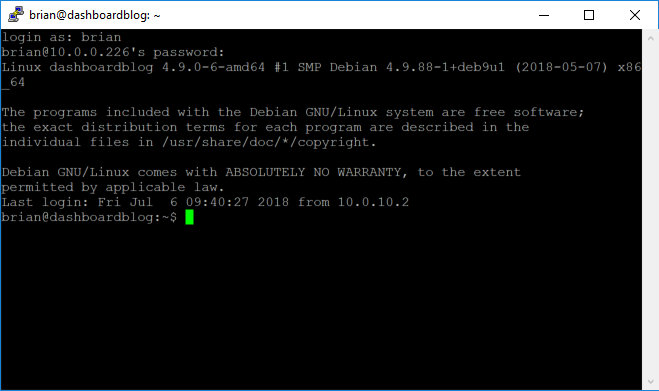

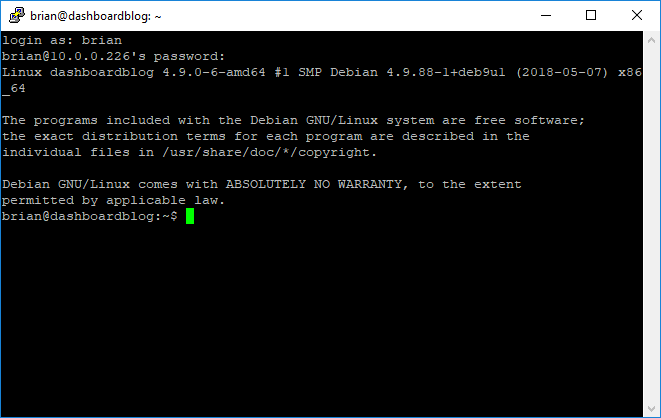

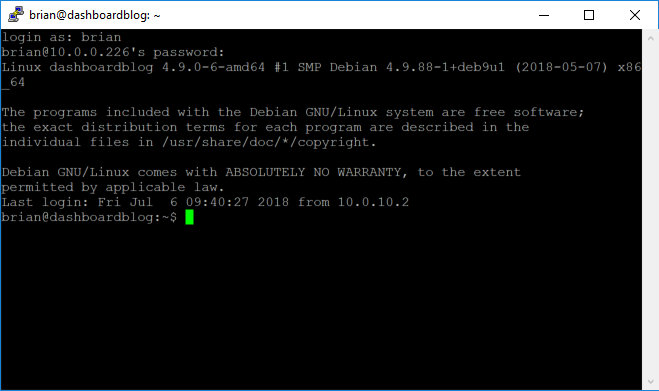

We’ll start by installing Telegraf onto our monitoring server that we started configuring way back in part 2 of this series. First we’ll log into our Linux box using PuTTY:

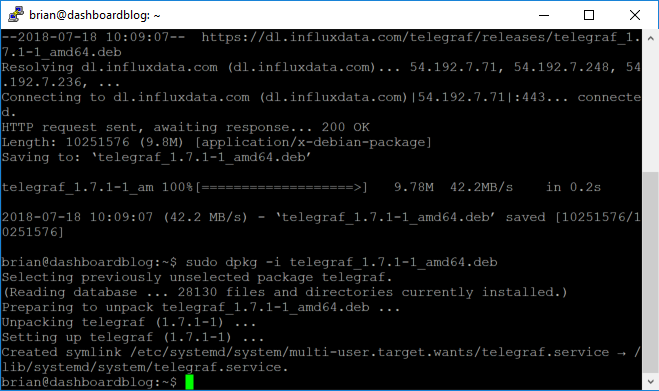

Next, we’ll download the software using the following commands:

sudo wget https://dl.influxdata.com/telegraf/releases/telegraf_1.7.1-1_amd64.deb

sudo dpkg -i telegraf_1.7.1-1_amd64.deb

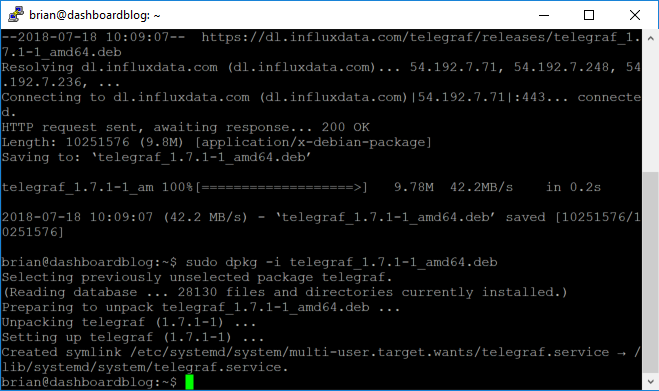

The download and installation should look something like this:

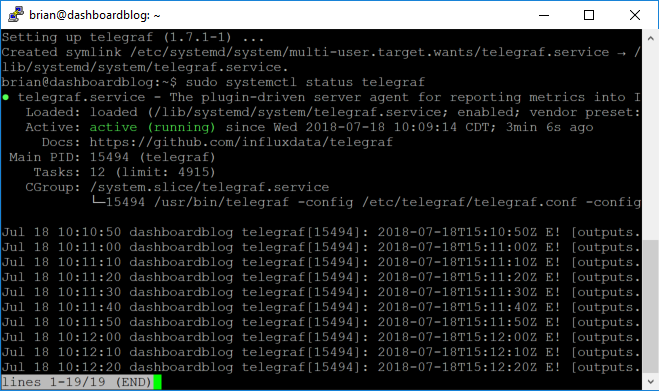

Much like our InfluxDB installation…incredibly easy. It’s actually even easier than InfluxDB in that the service should already be enabled and running. Let’s make sure:

sudo systemctl status telegraf

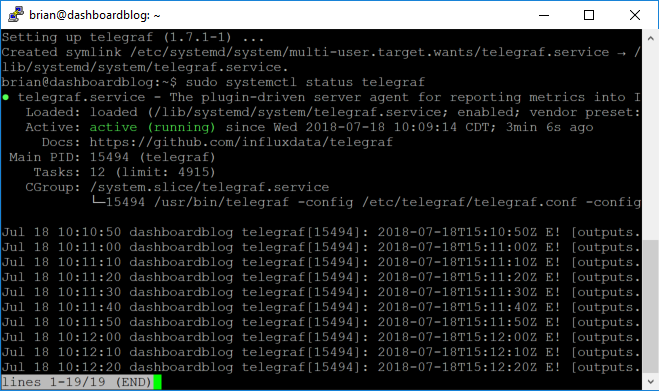

Assuming everything went well we should see “active (running)” in green:

Now that we have completed the installation, we can move on to configuration.

Configuring Telegraf

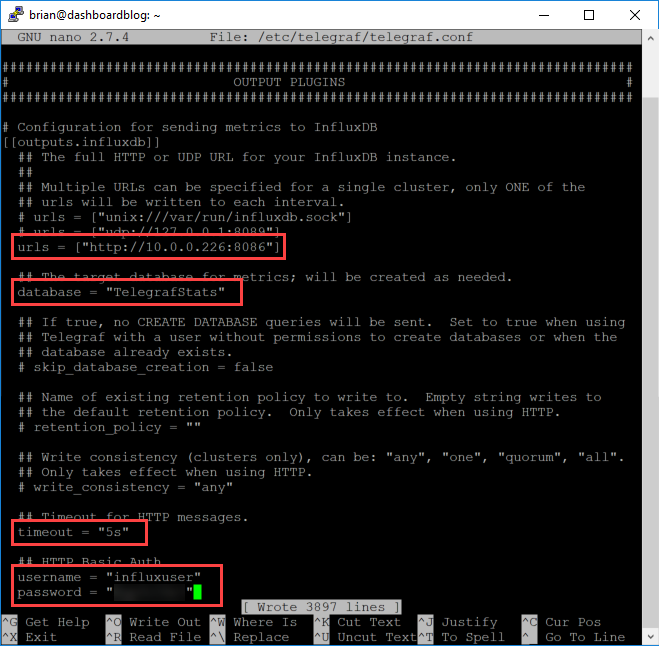

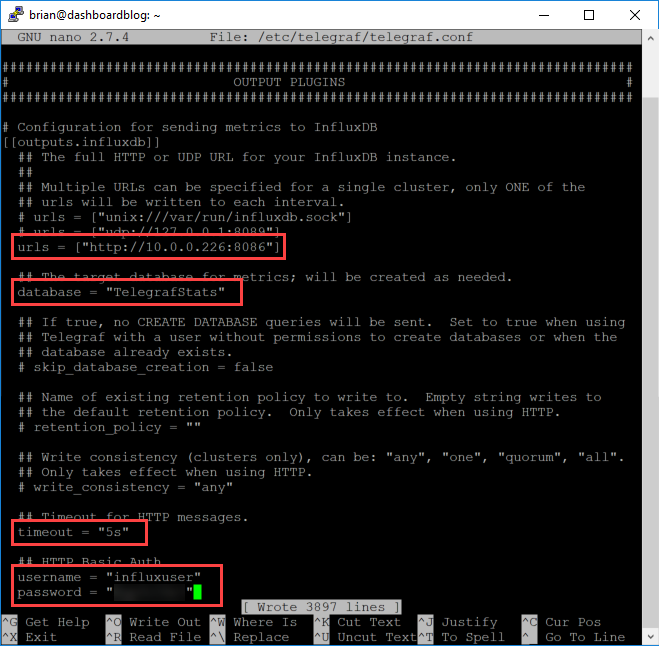

For the purposes of this part of the series, we’ll just get the basics set up. In future posts we’ll take a look at all of the more interesting things we can do. We’ll start our configuration by opening the config file in nano:

sudo nano /etc/telegraf/telegraf.conf

We mentioned input plugins earlier as it related to getting data, but now we’ll look at output plugins to send data to InfluxDB. We’ll uncomment and change the lines for urls, database, timeout, username, and password:

Save the file with Control-O and exit with Control-X. Now we can restart the service so that our changes take effect:

sudo systemctl restart telegraf

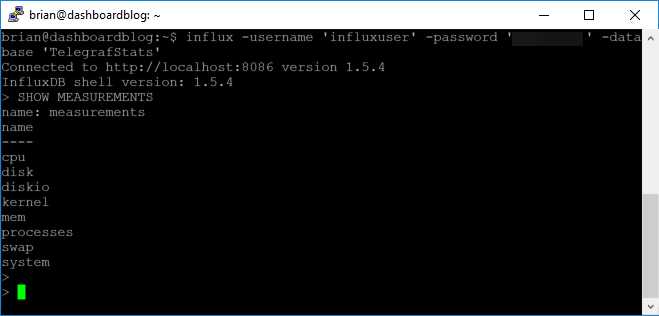

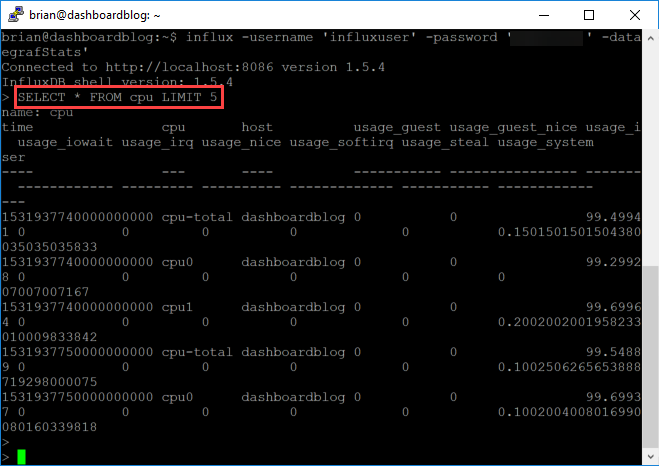

Now let’s log in to InfluxDB and make sure we are getting data from Telegraf. We’ll use this command:

influx -username 'influxuser' -password 'influxuserpassword' -database 'TelegrafStats'

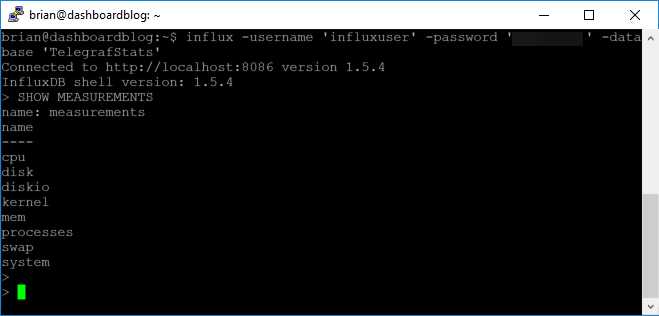

Once logged in, we can execute a command to see if we have any measurements:

SHOW MEASUREMENTS

This should all look something like this:

By default, the config file has settings ready to go for the following:

- CPU

- Disk

- Disk IO

- Kernel

- Memory

- Processes

- Swap

- System
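The same check can also be scripted against InfluxDB’s HTTP interface. InfluxDB 1.x exposes a /query endpoint that takes db, q, u (user), and p (password) parameters and returns JSON. This is a minimal sketch, assuming that 1.x response shape. The sample responses in the usage lines are hand-written to match that shape and were not captured from a real server. The measurement names are assumed from the default inputs in the list above: mem for Memory and diskio for Disk IO. You could fetch a real response with urllib.request.urlopen(url).read() and pass the bytes to these functions.

```python
import json
from urllib.parse import urlencode

# Measurement names the default telegraf.conf inputs are expected to write
# (assumption: "Memory" lands in mem and "Disk IO" in diskio).
DEFAULT_MEASUREMENTS = {"cpu", "disk", "diskio", "kernel", "mem", "processes", "swap", "system"}


def build_query_url(base_url, database, query, username, password):
    """Build an InfluxDB 1.x /query URL; u and p carry the credentials."""
    params = {"db": database, "q": query, "u": username, "p": password}
    return f"{base_url.rstrip('/')}/query?{urlencode(params)}"


def series_rows(response_json):
    """Flatten every series in a /query JSON response into dicts keyed by column name."""
    body = json.loads(response_json)
    rows = []
    for result in body.get("results", []):
        if "error" in result:
            raise ValueError(result["error"])
        for series in result.get("series", []):
            for values in series.get("values", []):
                rows.append(dict(zip(series["columns"], values)))
    return rows


def missing_defaults(show_measurements_json):
    """List default measurements absent from a SHOW MEASUREMENTS response."""
    present = {row["name"] for row in series_rows(show_measurements_json)}
    return sorted(DEFAULT_MEASUREMENTS - present)
```

series_rows works the same way for a SELECT such as SELECT * FROM cpu LIMIT 5: each returned row becomes a dict keyed by time and the field and tag columns. Passing credentials in the query string means they can end up in logs. InfluxDB 1.x also accepts HTTP basic authentication if you prefer.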

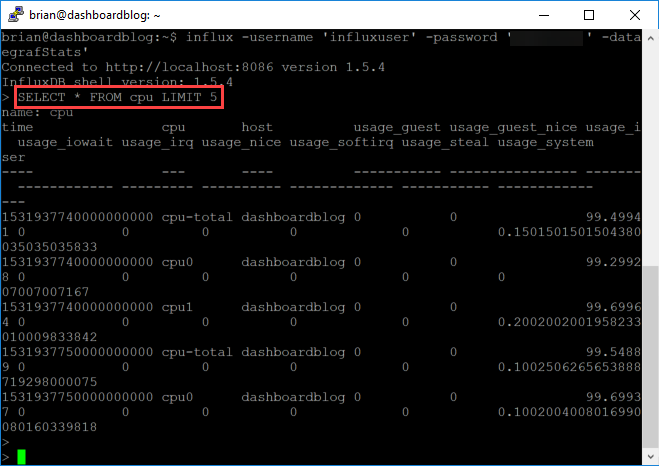

These will be metrics only for the system on which we just installed Telegraf. We can also take a look at the data just to get a look before we make it over to Grafana:

SELECT * FROM cpu LIMIT 5

This should show us 5 records from our cpu table:

Conclusion

With that, we have completed our configuration and will be ready to move on to visualizations using Grafana…in our next post.

Brian Marshall

July 23, 2018

Now that we have our foundation laid with a fresh installation of Debian and Organizr, we can move on to the data collection portion of our dashboard. After all, we have to get the stats about our homelab before we can make them into pretty pictures. Before we can go get the stats, we need a place to put them. For this, we’ll be using the open source application InfluxDB. Before we dive in, let’s take a look at the series so far:

What is InfluxDB?

In part 1 of this series, I gave a brief overview of InfluxDB, but let’s dig a little deeper. At the very basic level, InfluxDB is a time-series database for storing events and statistics. The coolest part about InfluxDB is the HTTP interface that allows virtually anything to write to it. Over the next several posts we’ll see Telegraf, PowerShell, and Curl as potential clients to write back to InfluxDB. You can download InfluxDB directly from GitHub where it is updated very frequently. It supports authentication with multiple users and levels of security and of course multiple databases.
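To make “virtually anything can write to it” concrete: clients such as PowerShell or Curl POST text in InfluxDB’s line protocol to the /write endpoint, for example /write?db=PowerShellStats. This is a minimal sketch that formats one point. The measurement, tag, and field names in the usage lines are hypothetical. The formatting follows the 1.x line protocol rules:
- Commas, spaces, and equals signs are backslash-escaped in tag keys, tag values, and field keys.
- Integers get an i suffix.
- Strings are double-quoted.

NaN and infinity floats are not handled.

```python
def _escape(text, specials):
    """Backslash-escape each character in `specials`."""
    for ch in specials:
        text = text.replace(ch, "\\" + ch)
    return text


def _field_value(value):
    if isinstance(value, bool):  # test bool first: bool is a subclass of int
        return "true" if value else "false"
    if isinstance(value, int):
        return f"{value}i"  # integers carry an i suffix
    if isinstance(value, float):
        return repr(value)
    text = str(value).replace("\\", "\\\\").replace('"', '\\"')
    return f'"{text}"'  # everything else is written as a string field


def to_line_protocol(measurement, tags, fields, timestamp_ns=None):
    """Format one point: measurement[,tag=value...] field=value[,...] [timestamp]."""
    if not fields:
        raise ValueError("a point needs at least one field")
    head = _escape(measurement, ", ")
    for key in sorted(tags):  # InfluxDB recommends sorting tags by key
        head += f",{_escape(key, ',= ')}={_escape(str(tags[key]), ',= ')}"
    body = ",".join(f"{_escape(k, ',= ')}={_field_value(v)}" for k, v in fields.items())
    line = f"{head} {body}"
    if timestamp_ns is not None:
        line += f" {timestamp_ns}"  # nanoseconds since the epoch by default
    return line


if __name__ == "__main__":
    # Hypothetical point; prints: cpu,host=server01 usage_idle=99.5 1531440000000000000
    print(to_line_protocol("cpu", {"host": "server01"}, {"usage_idle": 99.5}, 1531440000000000000))
```

If the timestamp is omitted, InfluxDB stamps the point with the server’s current time when it arrives.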

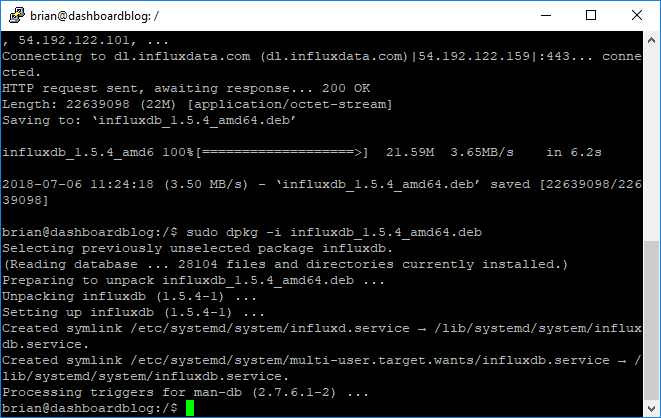

Installing InfluxDB

Installing InfluxDB is a pretty easy operation. We’ll start by logging into our Linux box using PuTTY:

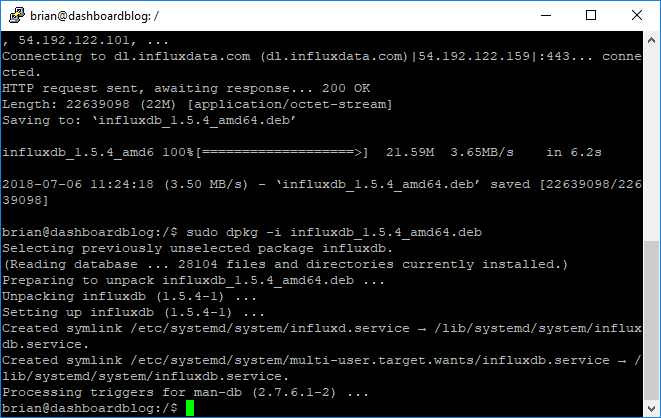

We’ll issue this command (be sure to check here for the latest download link):

sudo wget https://dl.influxdata.com/influxdb/releases/influxdb_1.5.4_amd64.deb

sudo dpkg -i influxdb_1.5.4_amd64.deb

The download and installation should look something like this:

Almost too easy, right? I think that’s the point! InfluxDB is meant to be completely dependency free. Let’s make sure everything really worked by enabling the service, starting the service, and checking the status of the service:

sudo systemctl enable influxdb

sudo systemctl start influxdb

systemctl status influxdb

If all went well, we should see that the service is active and running:

Configuring InfluxDB

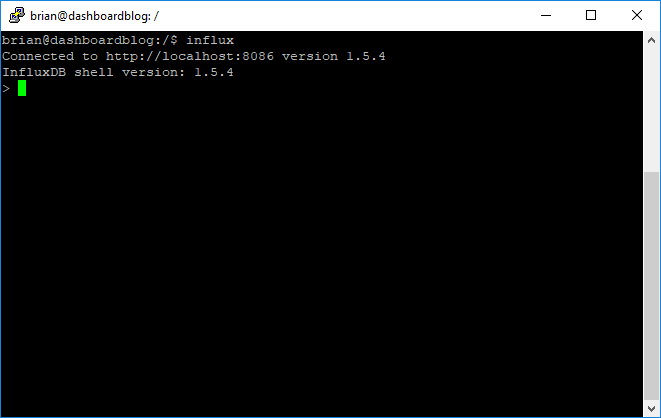

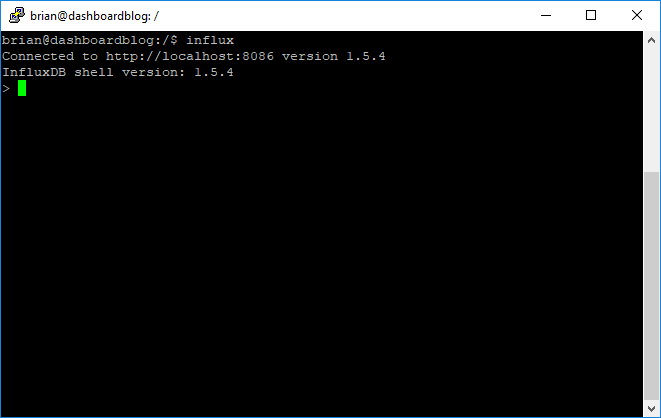

We’ll stay in PuTTY to complete much of our configuration. Start influx:

influx

This should start up our command line interface for InfluxDB:

Authentication

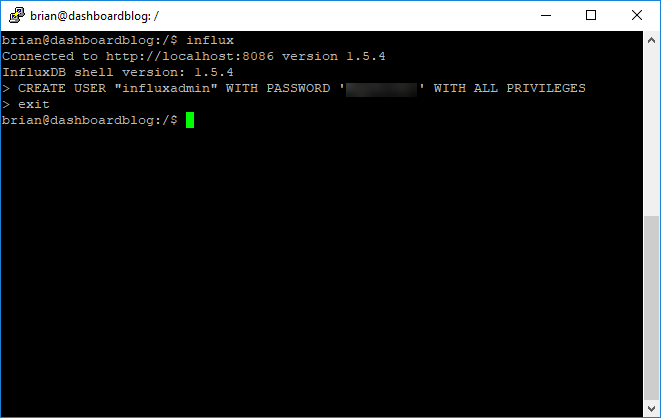

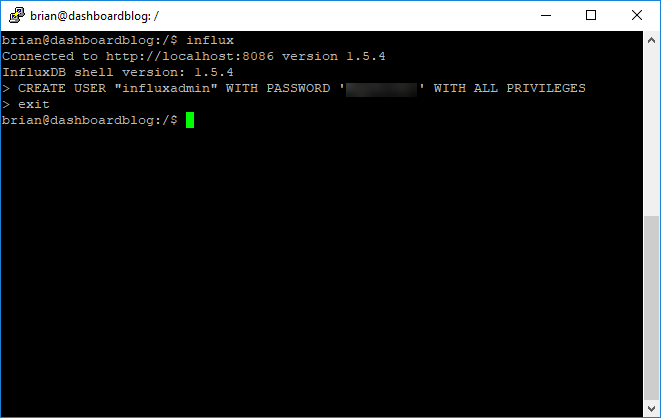

By default, InfluxDB does not require authentication. So let’s fix that by first creating an admin account so that we can enable authentication:

CREATE USER "influxadmin" WITH PASSWORD 'influxadminpassword' WITH ALL PRIVILEGES

exit

You’ll notice that it isn’t terribly verbose:

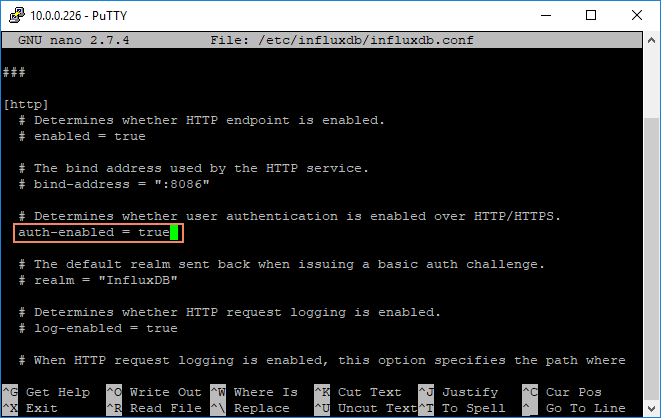

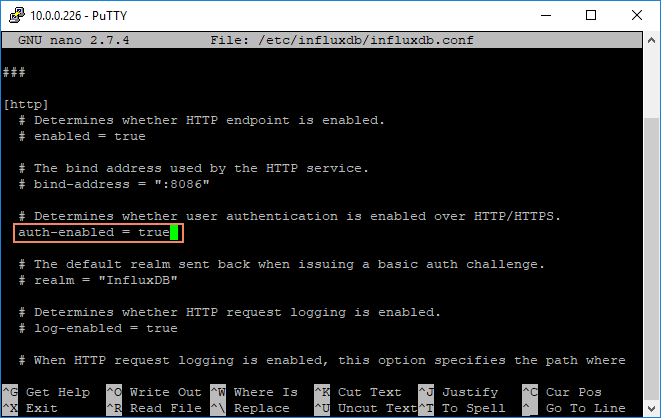

Once we have our user created, we should be ready to enable authentication. Let’s fire up nano and modify the configuration file:

sudo nano /etc/influxdb/influxdb.conf

Scroll through the file until you find the [http] section and set auth-enabled to true, removing the leading # if the line is commented out.
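Because this is a single setting, the edit can also be scripted. This is a minimal sketch that rewrites only the auth-enabled line inside the [http] section and leaves other sections alone. It assumes the stock influxdb.conf layout, with sections such as [http] and [[graphite]], where the line may appear as # auth-enabled = false. The function returns the new text instead of writing /etc/influxdb/influxdb.conf. That lets you review the result before saving it as root.

```python
import re

# Matches [section] and [[section]] headers, e.g. [http] or [[graphite]].
_HEADER = re.compile(r"\s*\[\[?([^\[\]]+)\]\]?\s*$")
# Matches the setting whether or not it is commented out.
_AUTH = re.compile(r"\s*#?\s*auth-enabled\s*=")


def enable_http_auth(conf_text):
    """Return conf_text with auth-enabled = true inside [http]; other sections are untouched."""
    out, section, changed = [], None, False
    for line in conf_text.splitlines(keepends=True):
        header = _HEADER.match(line)
        if header:
            section = header.group(1).strip()
        elif section == "http" and _AUTH.match(line):
            indent = line[: len(line) - len(line.lstrip())]
            out.append(f"{indent}auth-enabled = true\n")
            changed = True
            continue
        out.append(line)
    if not changed:
        raise ValueError("no auth-enabled line found in the [http] section")
    return "".join(out)
```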

Write out the file with control-o and exit with control-x and you should be ready to restart the service:

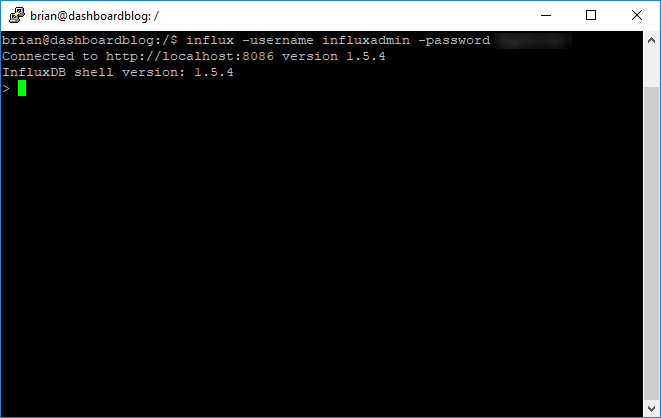

sudo systemctl restart influxdb

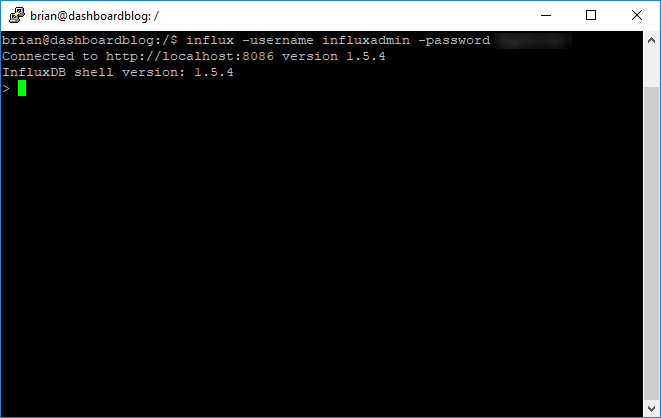

Now we can log back in using our newly created username and password to make sure that things work:

Create Databases

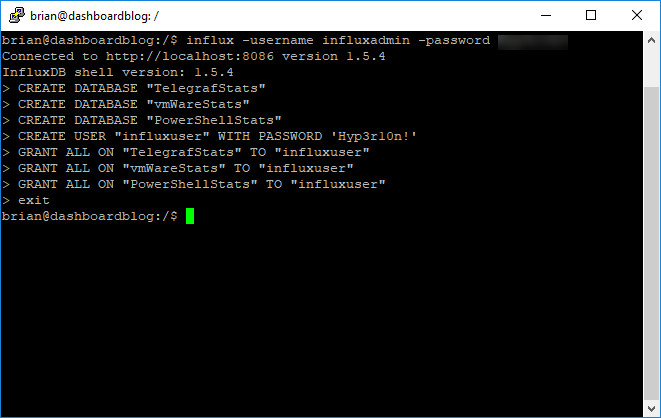

The final steps are to create a few databases and, finally, a user to access them. You can just use the admin user you created, but generally it’s better to have a non-admin account:

CREATE DATABASE "TelegrafStats"

CREATE DATABASE "vmWareStats"

CREATE DATABASE "PowerShellStats"

I created three databases for my setup. One for use with Telegraf, one to store various vmWare specific metrics, and one for all of the random stuff I like to do with PowerShell. All of these will get their own set of blog posts in time.

Grant Permissions

Finally, we can create our user or users and grant access to the newly created databases:

CREATE USER "influxuser" WITH PASSWORD 'influxuserpassword'

GRANT ALL ON "TelegrafStats" TO "influxuser"

GRANT ALL ON "vmWareStats" TO "influxuser"

GRANT ALL ON "PowerShellStats" TO "influxuser"

Again…not terribly verbose.
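The statements above follow a simple pattern: create each database, create the user once, then grant access per database. This is a minimal sketch that generates them from a list, so adding a database later is a one-line change. The helper names are hypothetical. The quoting matches the post: identifiers go in double quotes and passwords in single quotes. The escaping of embedded quotes and backslashes assumes the InfluxQL 1.x backslash escapes. You could paste the printed output into the influx CLI while logged in as the admin user.

```python
def quote_identifier(name):
    """Double-quote an InfluxQL identifier, escaping embedded double quotes."""
    return '"' + name.replace('"', '\\"') + '"'


def quote_string(value):
    """Single-quote an InfluxQL string literal such as a password."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def provisioning_statements(databases, user, password):
    """CREATE DATABASE for each database, CREATE USER once, then GRANT ALL per database."""
    statements = [f"CREATE DATABASE {quote_identifier(db)}" for db in databases]
    statements.append(f"CREATE USER {quote_identifier(user)} WITH PASSWORD {quote_string(password)}")
    statements += [f"GRANT ALL ON {quote_identifier(db)} TO {quote_identifier(user)}" for db in databases]
    return statements


if __name__ == "__main__":
    for statement in provisioning_statements(
            ["TelegrafStats", "vmWareStats", "PowerShellStats"], "influxuser", "influxuserpassword"):
        print(statement)
```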

Retention

By default, when you create a database in InfluxDB, it sets the retention to infinite. For me, being a digital packrat, this is exactly what I want. So I’m going to leave my configuration alone. But…for everyone else, you can find a guide on retention and downsampling here in the official InfluxDB documentation. You can find the specific command details here.

Conclusion

That’s it! InfluxDB is now ready to receive information. In our next post, we’ll move on to Telegraf so that we can start sending it some data!

Version Update

When this blog post was written, InfluxDB 1.5.4 was the latest release. Before I was able to publish this blog post, InfluxDB 1.6 was released. Feel free to install that version instead of the version above:

sudo wget https://dl.influxdata.com/influxdb/releases/influxdb_1.6.0_amd64.deb

sudo dpkg -i influxdb_1.6.0_amd64.deb
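Since new releases keep arriving, it can help to see the package naming as a pattern. This is a small sketch that builds the download URL and install commands for a given version. The patterns are inferred only from the links in these posts: influxdb_<version>_amd64.deb, and telegraf_<version>-1_amd64.deb with a -1 package revision. Other versions or architectures may be named differently, so confirm against the InfluxData downloads page.

```python
BASE = "https://dl.influxdata.com"


def deb_package(product, version, arch="amd64"):
    """Return (filename, url) for an InfluxData .deb, following the naming used in these posts."""
    if product == "influxdb":
        filename = f"influxdb_{version}_{arch}.deb"
    elif product == "telegraf":
        filename = f"telegraf_{version}-1_{arch}.deb"  # Telegraf links here carry a -1 revision
    else:
        raise ValueError(f"unknown product: {product}")
    return filename, f"{BASE}/{product}/releases/{filename}"


def install_commands(product, version):
    """The same two commands used in the posts, for any version."""
    filename, url = deb_package(product, version)
    return [f"sudo wget {url}", f"sudo dpkg -i {filename}"]


if __name__ == "__main__":
    print("\n".join(install_commands("influxdb", "1.6.0")))
```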

Brian Marshall

July 16, 2018

I frequent /r/homelab and recently I’ve read a number of posts regarding how to get licensing for your homelab. Obviously, there are plenty of unscrupulous ways to get access to software, but I prefer to keep everything on my home network legit. So, how do you do that? Software licensing is somewhat difficult for regular software and it isn’t any easier for a homelab. We’ll talk through how to get low-cost, totally legitimate licensing for vmWare, Microsoft, and a few backup solutions for your homelab. We will not talk about all of the software that you might use in your homelab in general. For instance, we will not cover storage server software like FreeNAS. If you would like to see a great list of things people use in their homelabs, I would suggest checking out the software page of the /r/homelab wiki here.

vmWare Software Licensing

vmWare still offers a free hypervisor in the form of vmWare vSphere Hypervisor. The downside is that you don’t get a fully featured vmWare experience. Namely, you don’t get access to the APIs. This means much of the backup functionality won’t be available and general management is more difficult without vCenter. The cheapest way to get a production copy of vmWare is through the Essentials packages. The regular package is only $495 and includes a basic version of vCenter along with three server licenses for ESXi (2 sockets per server). It’s not a terrible deal at all, but vCenter is very limited. And for a homelab, who needs production licensing anyway?

So we have an option, but it isn’t cheap and doesn’t give us the full stack. Enter VMUG Advantage. For only $200 per year (yes, you have to pay it every year), you get basically everything. VMUG Advantage gives you all of this:

- EVALExperience

- 20% Discount on VMware Training Classes

- 20% Discount on VMware Certification Exams

- 35% Discount on VMware Certification Exam Prep Workshops (VCP-NV)

- 35% Discount on VMware Lab Connect

- $100 Discount on VMworld Attendance

All of those things are great, but the very first one is the one that matters. EVALExperience gives us all of the following:

- VMware vCenter Server v6.x Standard

- VMware vSphere® ESXi Enterprise Plus with Operations Management™ (6 CPU licenses)

- VMware NSX Enterprise Edition (6 CPU licenses)

- VMware vRealize Network Insight

- VMware vSAN™

- VMware vRealize Log Insight™

- VMware vRealize Operations™

- VMware vRealize Automation 7.3 Enterprise

- VMware vRealize Orchestrator

- VMware vCloud Suite® Standard

- VMware Horizon® Advanced Edition

- VMware vRealize Operations for Horizon®

- VMware Fusion Pro 10

- VMware Workstation Pro 14

That’s more like it. Granted, we have the on-going annual expense of $200, but you can really go learn every aspect of vmWare with EVALExperience.

Microsoft Software Licensing

Microsoft licensing is about as complex as you can find. Like vmWare, Microsoft offers a free version of their Hypervisor (Hyper-V), but Microsoft has a much broader set of software to offer in general. Once upon a time, we had an inexpensive Technet subscription which gave us the world in evaluation software. This is but a memory at this point so we have to find other options. There are two great options on this front that are perhaps not as inexpensive, but will still give most of us what we need.

Microsoft Action Pack

We’ll start, as we did with vmWare licensing, with production-use licensing. The Microsoft Action Pack is essentially a very low level version of being a Microsoft Partner. It gives you access to a host of software for production use, but doesn’t really have a dev/test option. For a homelab, this is still pretty good, because we get the latest Microsoft software at a fraction of the cost of individual licensing. There are gotchas of course. You do have to renew every year, and the initial fee is $475. If you are lucky, you can find coupons to get that number way down. So what do you get? Here’s a sub-set:

- Office 365 for 5 users

- Windows Server 2016 for 16 cores

  - This basically covers one server, as Microsoft requires a minimum of 16 core licenses per physical server

  - Even if your physical server is running ESXi, you must have a Windows license if you are going to run a Windows VM

  - This license only allows you to run 2 Windows VMs per physical host

  - You must purchase 16 core licenses for every 2 VMs you need per physical host

- SQL Server 2017 for 2 servers (10 CALs)

- Office 2016 Professional Plus for 10 computers

- Visual Studio Professional for 3 users

- Plenty of other great software like SharePoint, Exchange, etc.
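To make the core-counting rules in that list concrete, here is a small calculation under a simplified model. Each physical host needs a full set of core licenses covering all of its physical cores, with at least 16, as above. Each set covers two Windows VMs on that host. The 8-cores-per-processor minimum is an assumption added here from Windows Server 2016 Standard licensing and is not stated in the post. Datacenter edition, which allows unlimited VMs, isn’t modeled. Treat the result as a rough estimate under these assumptions.

```python
import math


def core_licenses_needed(physical_cores, sockets, windows_vms):
    """Rough Windows Server Standard core-license count for one host (simplified model)."""
    # One "set" licenses every physical core, with minimums of 8 per processor and 16 per host.
    per_set = max(physical_cores, 8 * sockets, 16)
    # Each full set covers two Windows VMs, so stack another set for every additional pair.
    sets = math.ceil(windows_vms / 2)
    return per_set * sets


if __name__ == "__main__":
    # A dual-socket, 12-core host running 2 Windows VMs fits the Action Pack's 16 cores.
    print(core_licenses_needed(12, 2, 2))
```

Under this model, adding a third Windows VM to that same host doubles the requirement to 32 core licenses. That is why the Action Pack’s 16 cores amount to roughly one server with two Windows VMs.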

But wait…there’s a downside. First, those are all current versions of the software. Many of us are forced to work with older versions of Windows and SQL Server for our internal testing and development. So this doesn’t work great. Second, these are, again, production licenses. So we are paying a very low price, but this is software intended for a business to operate. It’s a great deal, but not the best fit for every homelab. You can find a full list of software included here. I’ve had this subscription for years, but let’s move on to another option.

Visual Studio Subscriptions

So Technet is dead and the Action Pack isn’t for everyone…never fear, there is another option: Visual Studio Subscriptions. This is really designed for developers and is the new branding of what was once an MSDN Subscription. The good news is that many of us with a homelab use software more like a developer anyway. So with the right subscription, we get access to basically everything, unlimited, for development and testing purposes. Of course, everything is expensive, so we have to find the right software selection at a price that we can afford. There are two main flavors of Visual Studio Subscriptions: Cloud and Standard.

Cloud

Cloud is sold as a monthly or annual subscription. You only get to use the license keys while you are paying the subscription. The annual option includes subscriber benefits while the monthly service basically just includes Visual Studio-related software. So what are subscriber benefits? The biggest benefit for a homelab is “software for dev/test.” What you get depends entirely on how much you shell out for your annual subscription.

- Visual Studio Enterprise

  - Basically everything…but it costs $2,999 per year

- Visual Studio Professional

  - Limited to Operating Systems and SQL Server for the most part…but costs only $539 per year

Obviously, Enterprise sounds great, but is likely cost prohibitive unless you have a lot of disposable income. It can be tax deductible for those of you that have your own business. For me, the Professional subscription gives me the two most important things, my operating systems and databases. Not only that, it gives you basically every version of both back to the year 2000. What it doesn’t give you is Office. This is a bit of a bummer if you are looking for a catch-all for your homelab and productivity software.

Standard

Standard differs from the cloud subscription in that it comes with a perpetual license. So, if you decide after the first year that you are no longer interested, anything you licensed during your first year will still be yours to use. It does, of course, come with a higher price. Here’s the breakout:

- Visual Studio Enterprise

  - Basically everything, but for the OMG price of $5,999 for the first year and $2,569 to renew each year after that

- Visual Studio Professional

  - Again limited to Operating Systems and SQL Server for the most part, but way more reasonably priced at $1,199 for the first year and $799 to renew each year after that

- Visual Studio Test Professional

  - I can’t for the life of me figure out why anyone would want this version…but it’s $2,169 for the first year and $899 to renew each year after that

So…this is expensive. The only real benefit here is that you can continue to use your keys if you choose not to renew. Of course, if you like to be on the bleeding edge, this probably won’t work too well about six months into the following year, when something new comes out that you don’t have. You can find the full Microsoft comparison here and I’ve uploaded a current software matrix here.

Educational Licensing

Beyond the paid options, Microsoft also offers educational software for those of you who are students. The standard program is available through Microsoft Imagine. For a homelab, the Windows Server 2016 license would be a great place to start. Many educational institutions have deals with Microsoft beyond Imagine. You can search here to find out if your school has this set up.

Oracle Software

Oracle software is the reason this blog exists. This has always been my primary technology to blog about. So, if you are building a homelab for Oracle software, you might need some Oracle software! I suggest two sites: eDelivery and the Oracle Proactive Support Blog for EPM and BI.

eDelivery

eDelivery, for lack of a much better word…sucks. It’s difficult to find exactly what you want, but it does have everything you need, for free. You will need to register for an Oracle account, but once you have one, you should be good to go. You can find eDelivery here.

Patches

What about patches? Patches are a little more tricky. You still need an Oracle account, but generally you will also need a support identifier. This can be as simple as using your Oracle account at work or becoming a partner. But it still isn’t as free as the base software downloads. To make matters worse, finding patches requires an advanced degree in Oracle Support Searching. To make your search easier, Oracle has created a blog that provides updates about patches for EPM and BI software. You can find this blog here.

Backup Software

Now that we have the foundation for our homelab software, what about backing things up? We have a few options here. The best part about this…they are all free. Let’s start with my personal favorite: Veeam.

Veeam Agent

Veeam is one of the most popular providers of virtual machine backup software out there. But they do more than just virtual machine backup. In fact, they have a free endpoint option, which backs up workstations and servers alike. So if you have physical Windows or Linux servers or workstations, Veeam Agent is your best free bet. You can download it here. Veeam Agent is great, but let’s be honest, the majority of our labs are virtualized. So how do we back those up?

Veeam Availability

Veeam’s primary software set is around virtualization. Veeam offers a variety of products that are built specifically for vmWare ESXi and Microsoft Hyper-V. They have both a free option and a paid option, which is pretty nice. The free option is Veeam Backup and Replication. You can find this product here. But the free option doesn’t do all of the fun things like scheduling. You end up needing PowerShell to automate things. Luckily, in addition to the free option, they also have something called an NFR option.

NFR stands for Not For Resale. Essentially, if you fill out a form, you will get your very own copy of the full solution, Veeam Availability, for free. This has all of the cool features around applications and scheduling. It’s a truly enterprise-class tool for your homelab…for free. You will have to get a new key each year, but it is totally worth the trouble. You can fill out the form here. One last thing…Veeam does require API access to vmWare. So, you need to have a full license of ESXi for this to work.

Nakivo

I’m less familiar with Nakivo, but I wanted to mention another option for backup. Nakivo, like Veeam, offers an NFR license. You can fill out the form here. My understanding is that Nakivo does not use the API, which allows it to work with the free version of ESXi. This is a great benefit for those who don’t want to set up a custom solution with lots of moving pieces.

Conclusion

I hope this post can provide a little bit of clarity about the legitimate options out there for homelab software licensing. I personally have a Microsoft Action Pack, VMUG Advantage, and Veeam Availability. I plan to swap out my Action Pack for Visual Studio Professional when my renewal comes due, as I like having access to older versions of operating systems and SQL Server. Happy homelabbing!

Brian Marshall

July 10, 2018

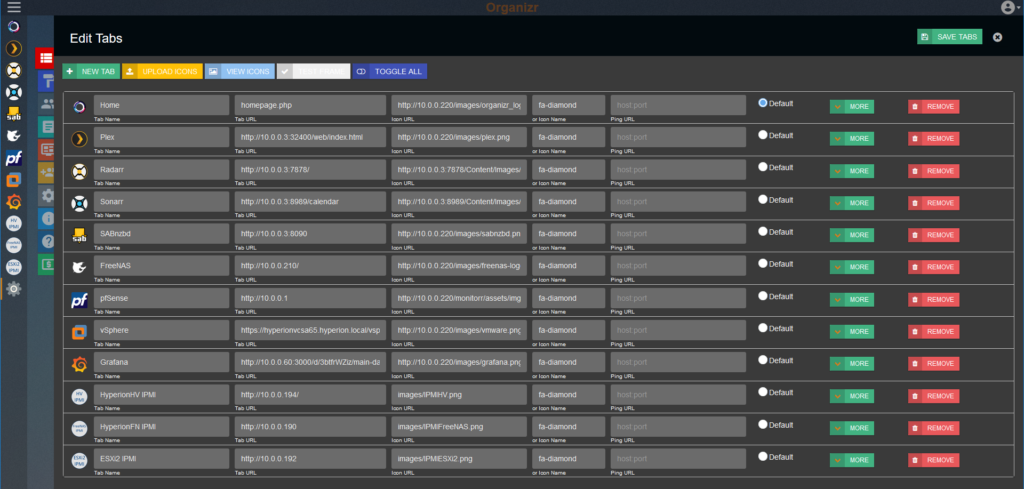

I know, I know…I promised InfluxDB would be my next post. But I’ve noticed that Organizr is not quite as straightforward for everyone as I thought. So today we’ll be configuring Organizr, and InfluxDB will wait until our next post. Before we continue with configuring Organizr, let’s recap our series so far:

Configuring Organizr

Organizr is not always the most straightforward tool to configure. Integration with things like Plex requires a bit of knowledge. It doesn’t help, of course, that V2 is still in beta and the documentation doesn’t actually exist yet. Let’s get started where we left off. Let’s log in:

Adding a Homepage

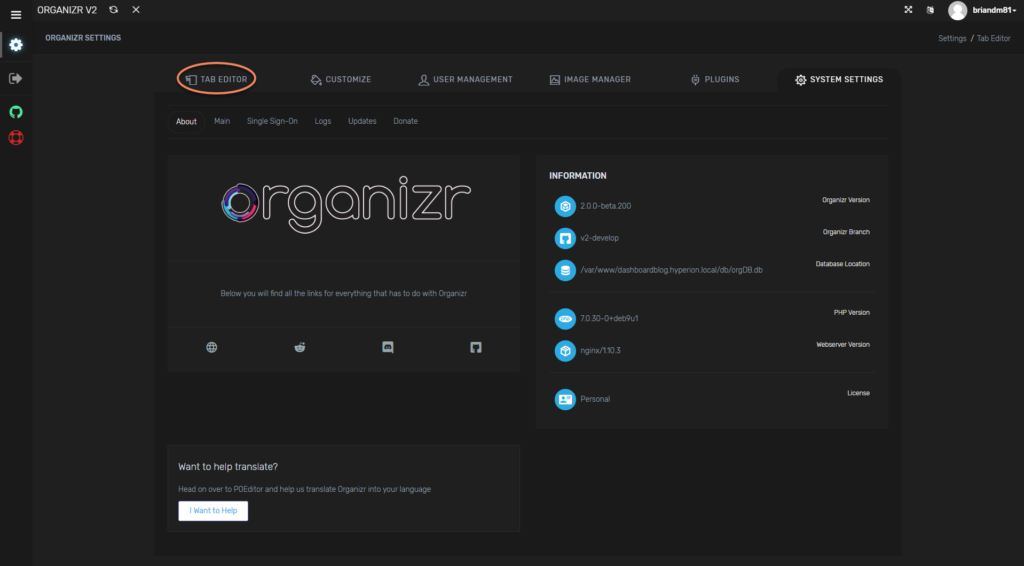

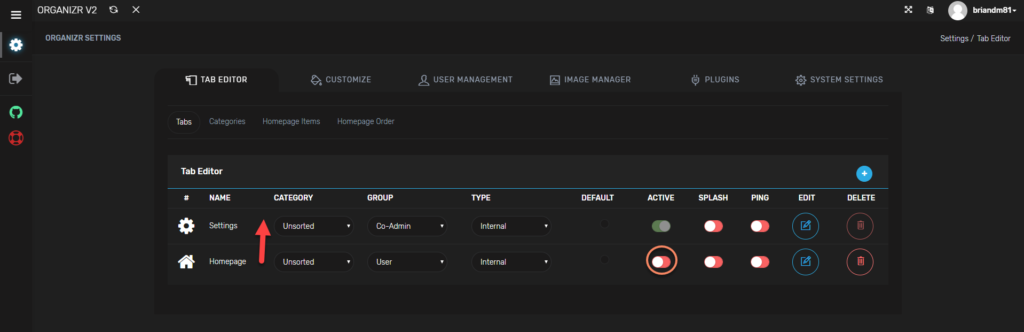

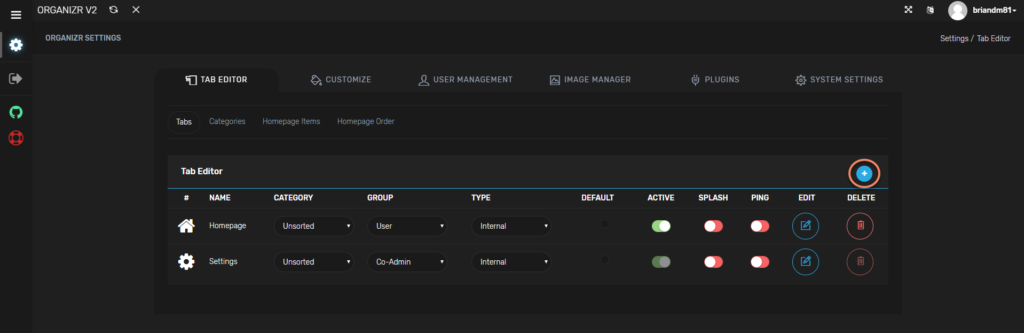

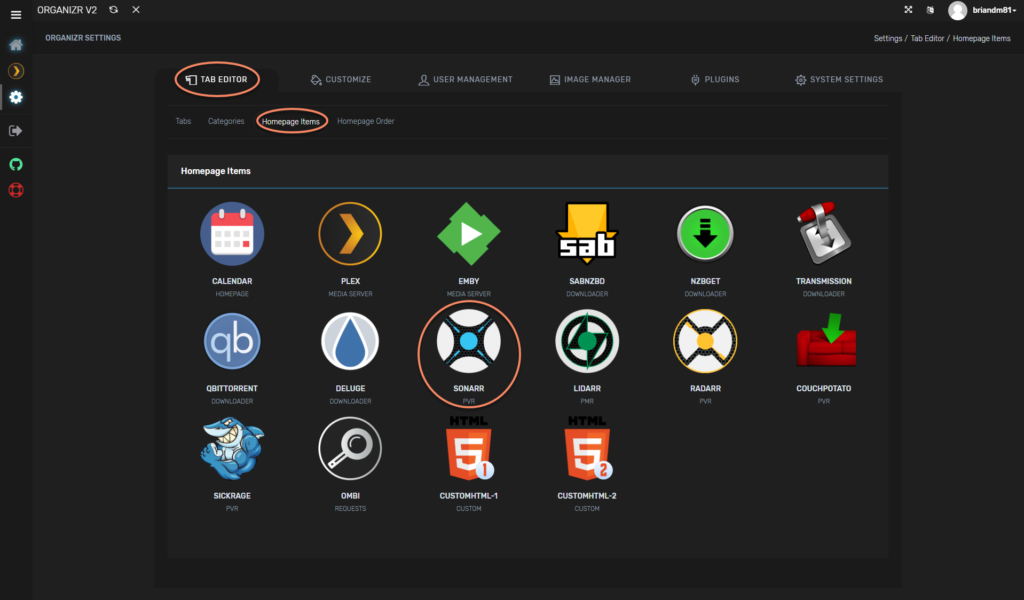

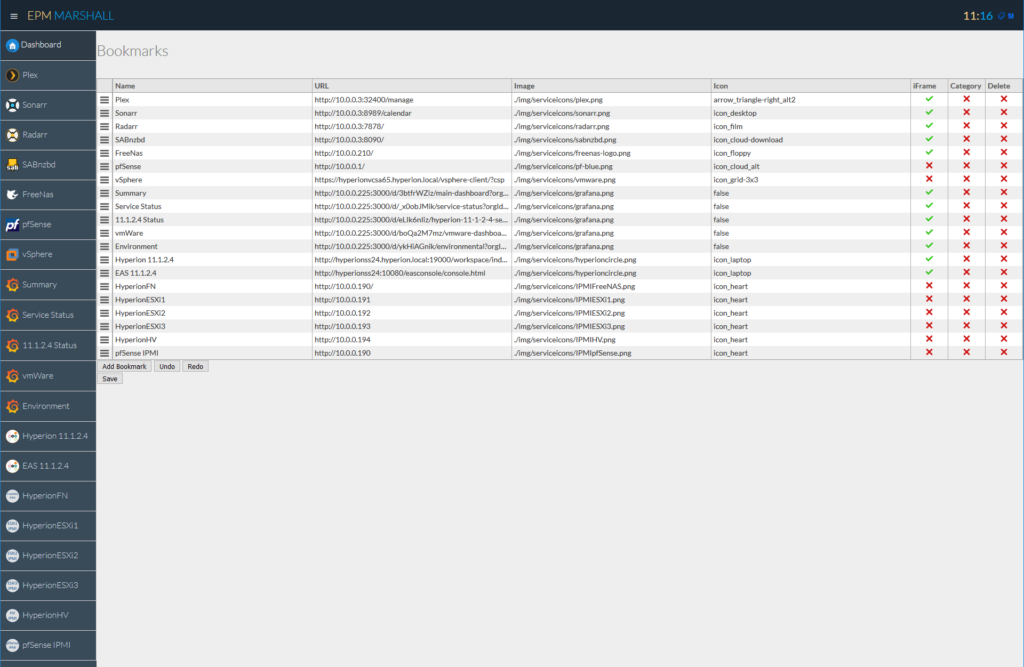

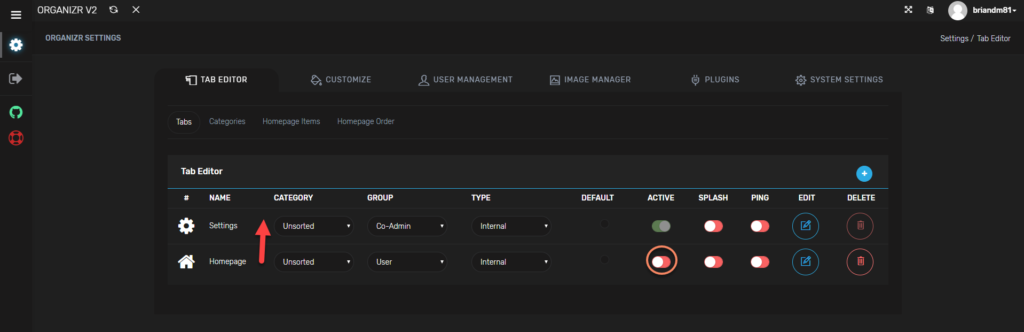

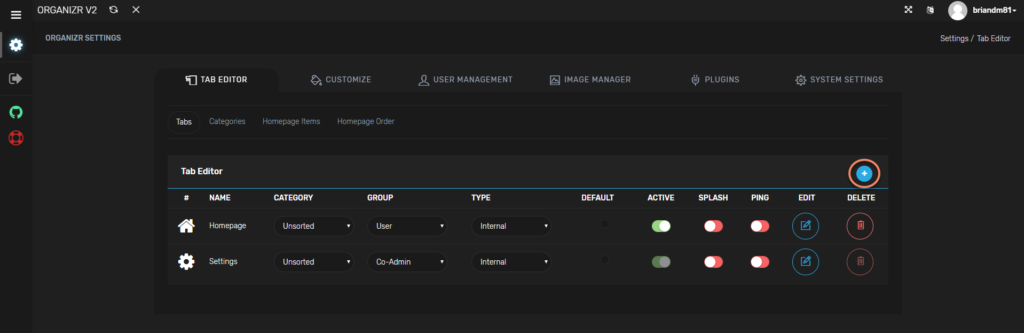

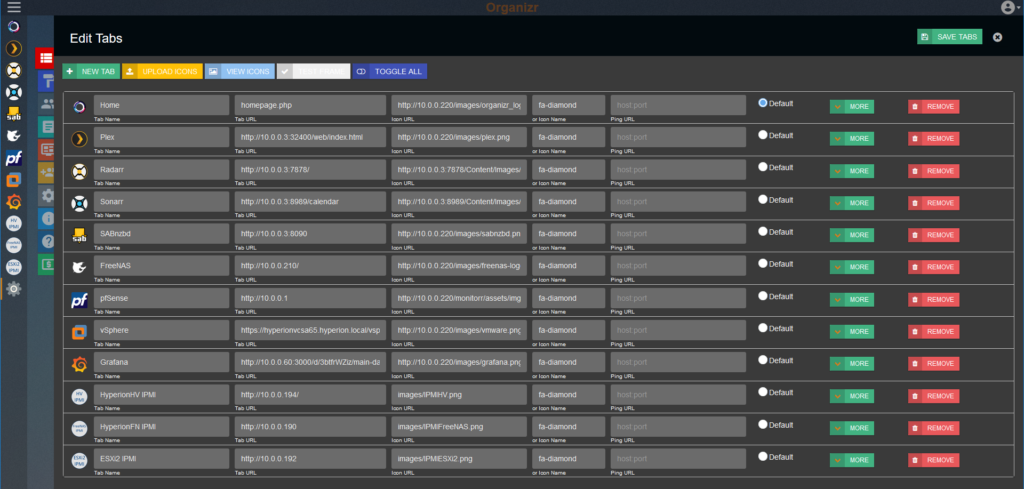

Once logged in, we’re ready to start by adding the homepage to our tabs. Click on Tab Editor:

Click on Tabs and you will notice that the homepage tab doesn’t appear on our tabs, so let’s move it around and make it active. While we’re at it we’ll also make it the default. We’ll get into why a little bit later.

Add a Tab

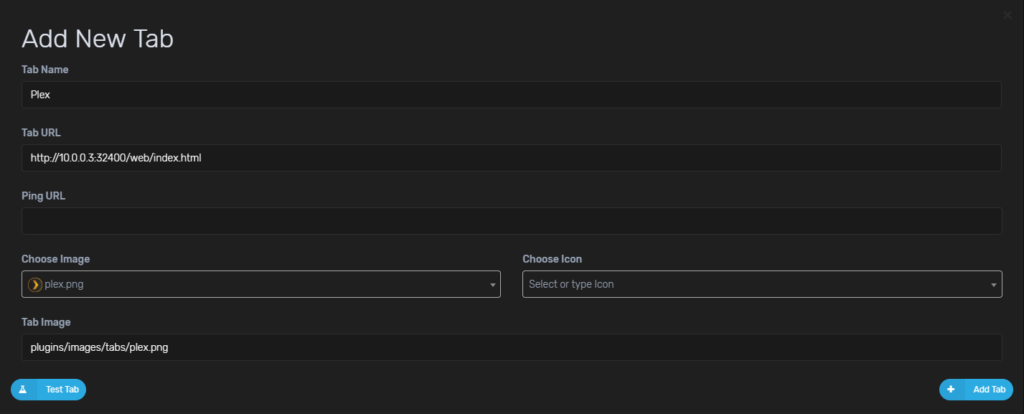

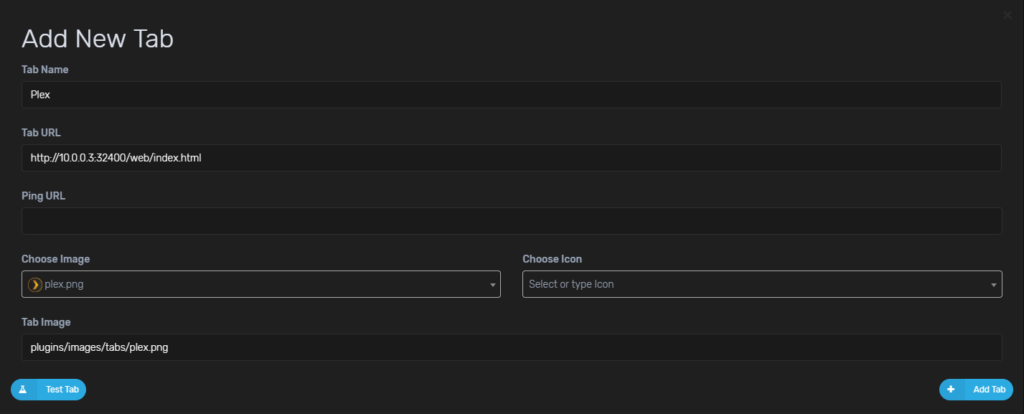

Now we can move on to adding the Plex tab. Click the + sign:

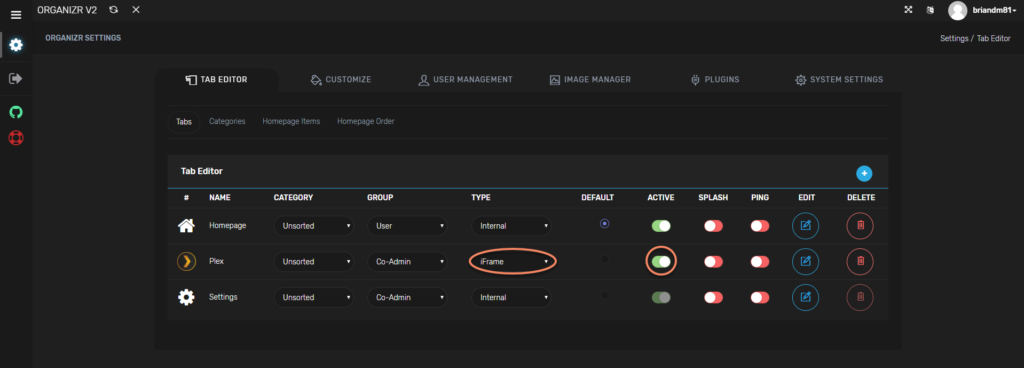

Give the tab a name, in this case we’ll go with Plex. Provide the URL to your Plex instance. Choose an image, and click Add Tab:

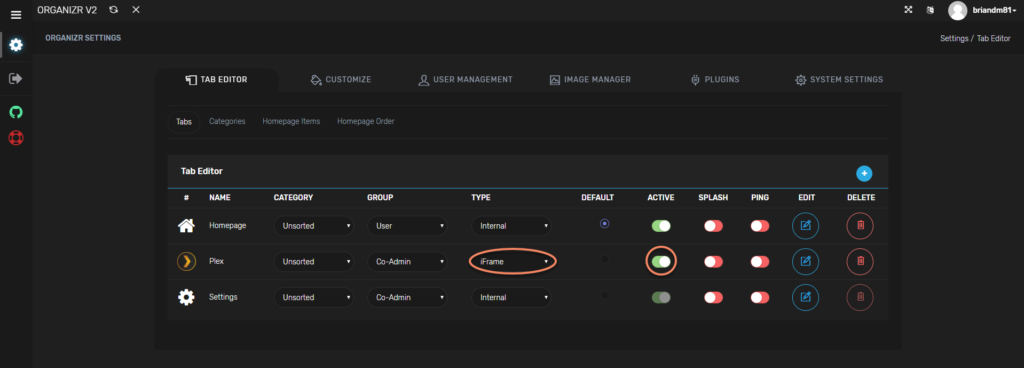

Move the Plex tab up, make it active, and select the type of iFrame:

The different types are iFrame, Internal, and New Window. Two of these are self-explanatory: iFrame displays the URL directly inside of Organizr, and New Window opens it in a new browser tab. The third, Internal, is for things like the homepage and settings that are built-in functionality in Organizr. Many services work just fine in an iFrame, but some may experience issues. For instance, pfSense doesn’t like being in an iFrame while FreeNAS doesn’t mind at all. There are plenty of other options around groups and categories, but for now we’ll keep things simple.
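A common reason a service misbehaves in an iFrame is that it tells the browser not to allow framing. It does this with an X-Frame-Options header (DENY or SAMEORIGIN) or a Content-Security-Policy frame-ancestors directive. This rough sketch looks at a service’s response headers and suggests iFrame or New Window. The helper is hypothetical and isn’t something Organizr does for you. It is deliberately conservative. SAMEORIGIN can still work when Organizr and the service share an origin, for example behind the same reverse proxy. Any frame-ancestors value other than * is treated as blocking. You could feed it something like dict(urllib.request.urlopen(url).headers).

```python
def suggest_tab_type(headers):
    """Suggest 'iFrame' or 'New Window' from HTTP response headers (simplified check)."""
    lowered = {key.lower(): value for key, value in headers.items()}  # header names are case-insensitive
    xfo = lowered.get("x-frame-options", "").strip().upper()
    if xfo in ("DENY", "SAMEORIGIN"):
        return "New Window"
    for directive in lowered.get("content-security-policy", "").split(";"):
        parts = directive.split()
        if parts and parts[0].lower() == "frame-ancestors":
            # Anything other than a bare * restricts who may frame the page; treat it as blocking.
            if parts[1:] != ["*"]:
                return "New Window"
    return "iFrame"
```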

The Homepage

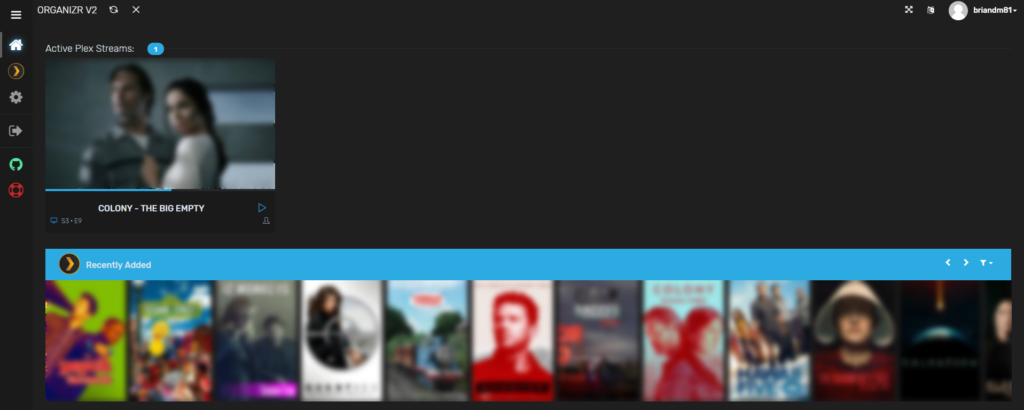

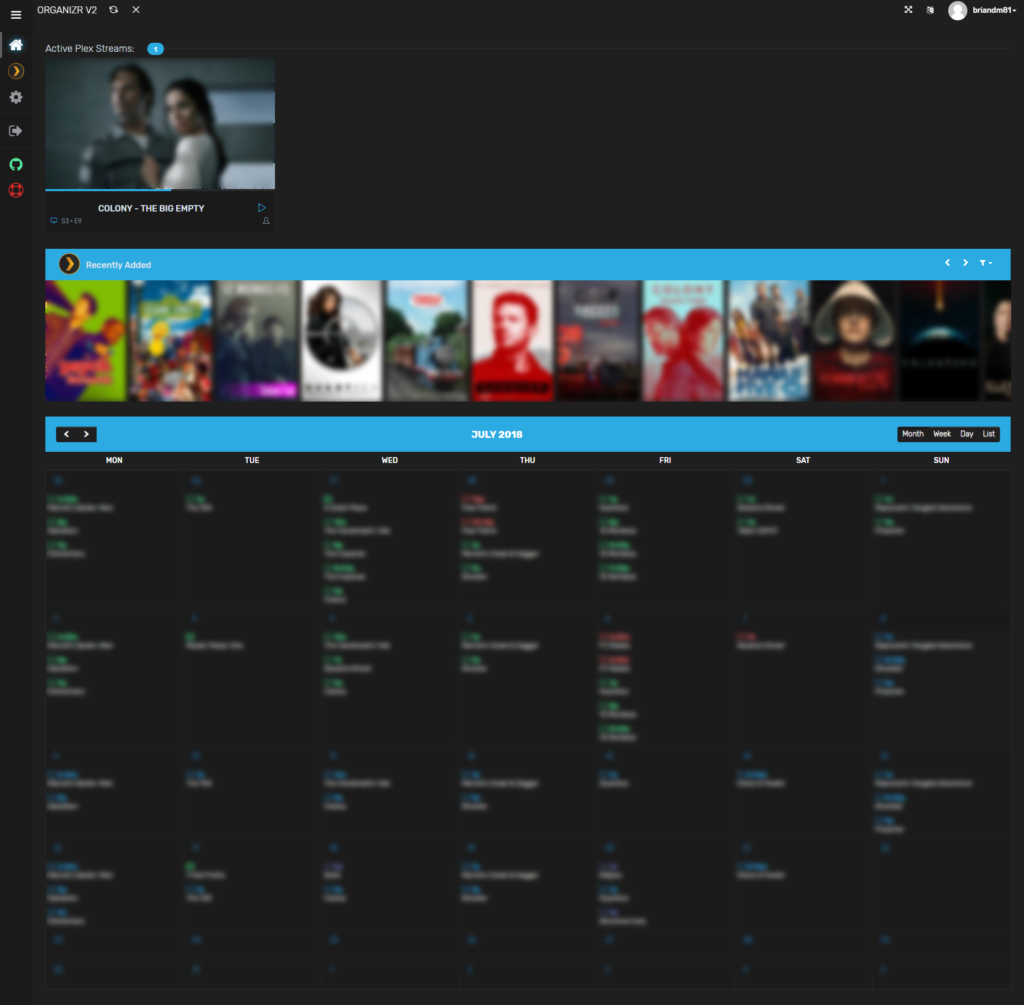

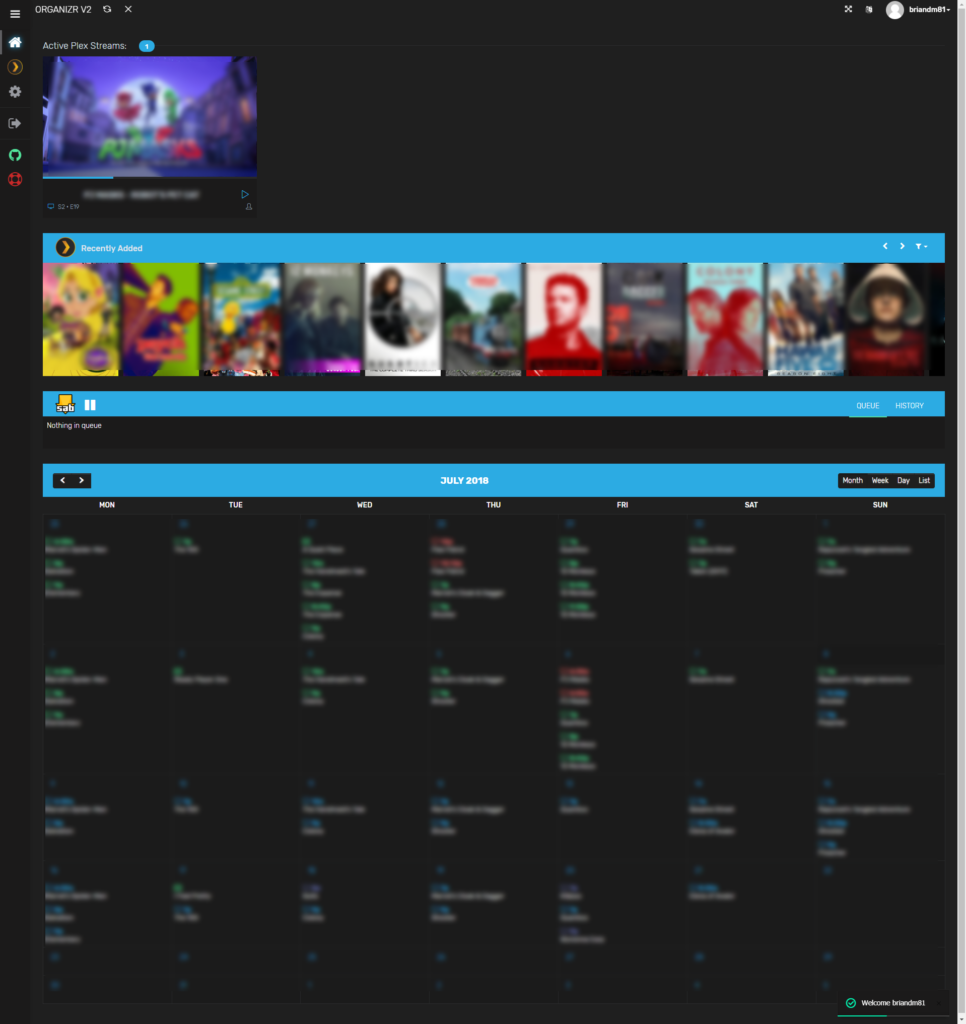

Now that we know how to add tabs, how do we make our homepage look like this:

Getting Plex Tokens

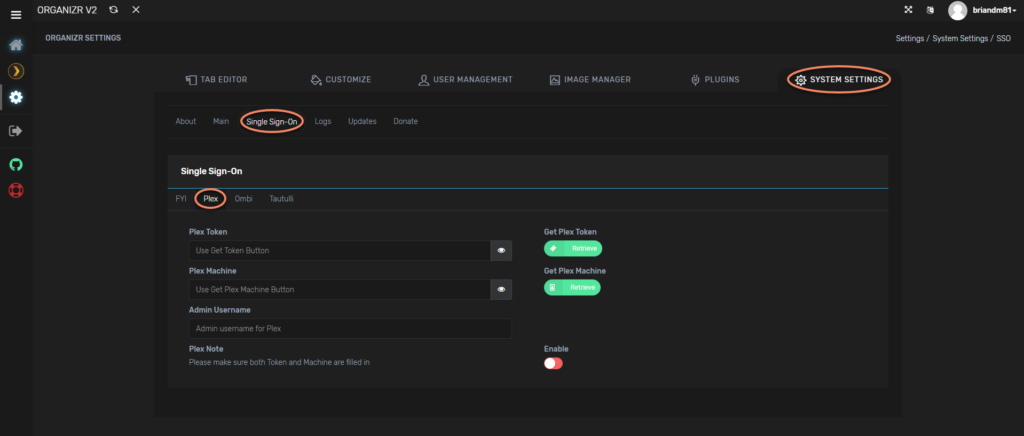

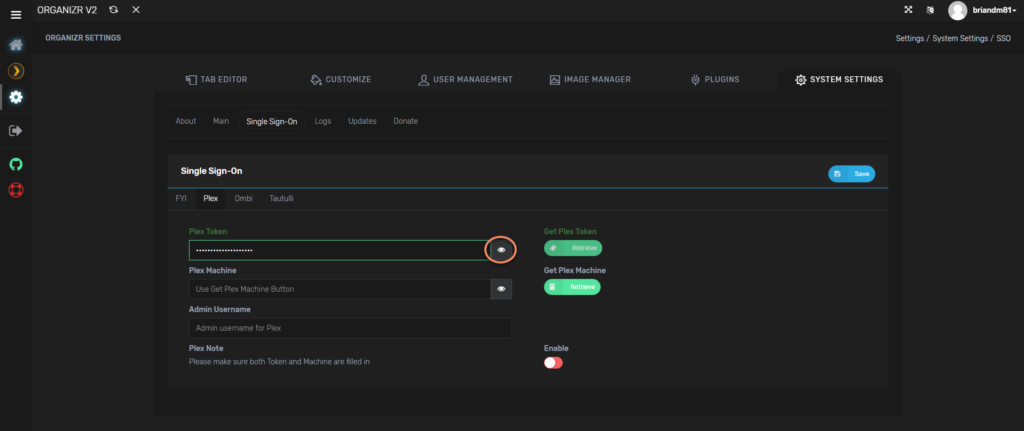

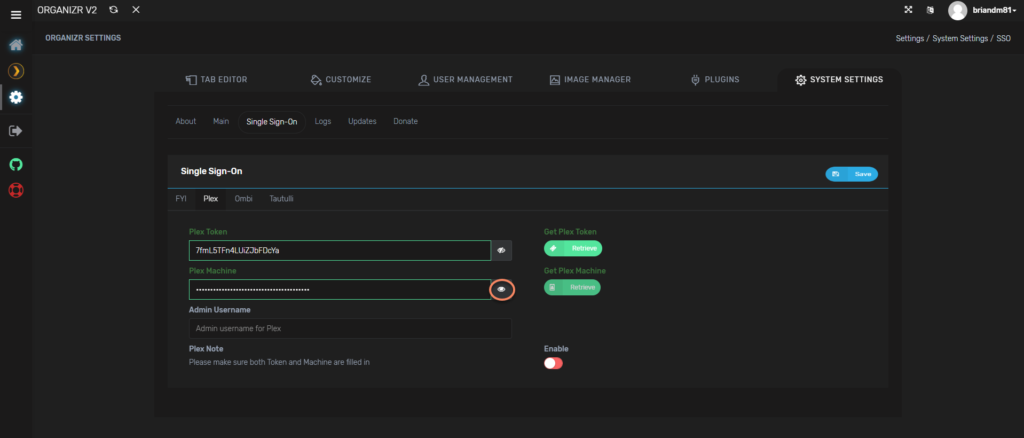

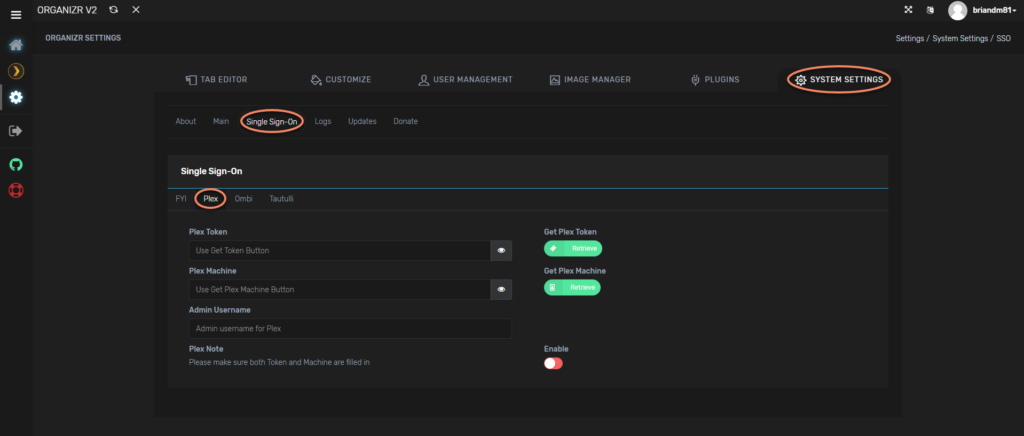

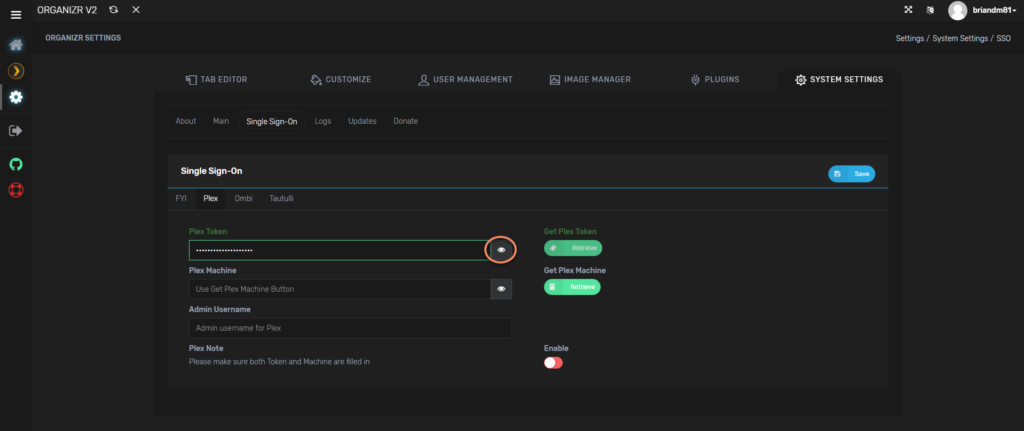

What we see here is one of the main reasons you should consider Organizr. This includes integration with Plex, Sonarr, and Radarr. Let’s start with Plex. Plex has an API that allows external applications like Organizr to integrate. Configuring Plex isn’t all that straightforward, unfortunately. We’ll start by going back to our settings page and clicking on System Settings, then Single Sign-On, and finally Plex.

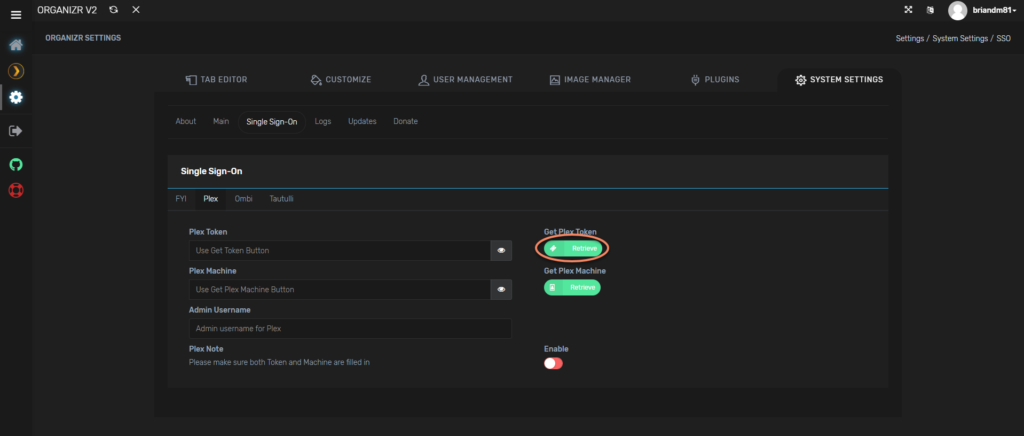

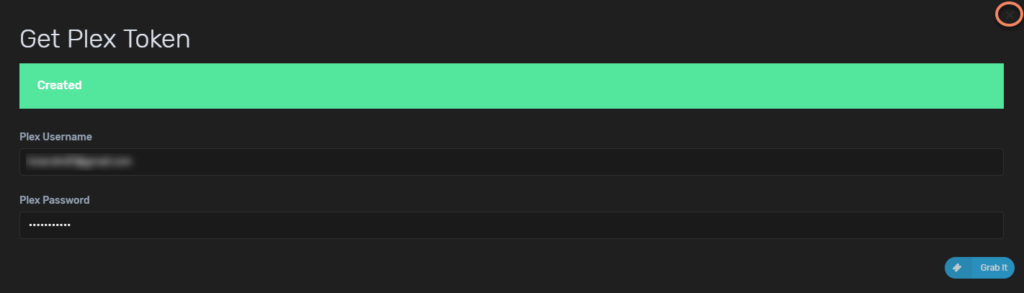

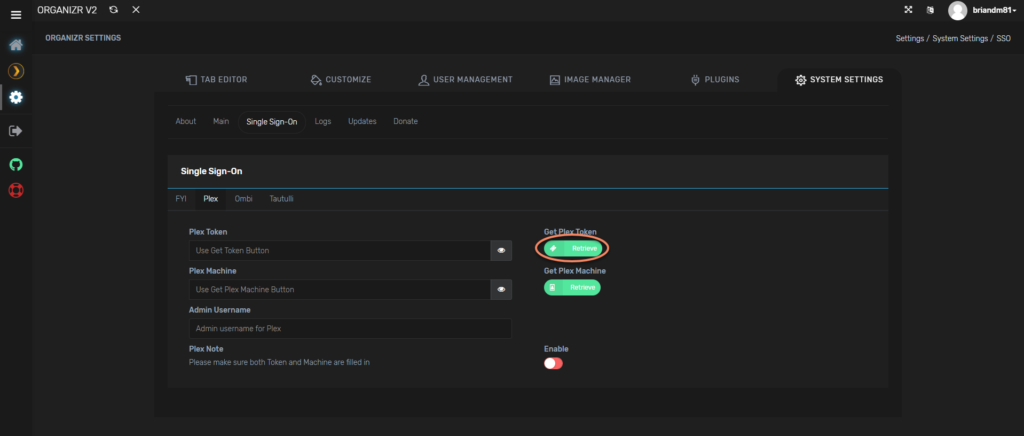

We are not trying to enable SSO right now, though you would likely be able to at the end of this guide with a single click. We are just going to use this page as a facility to give us the Plex API Token and the Plex Machine Name. These are required to enable homepage integration. Click on Retrieve under Get Plex Token:

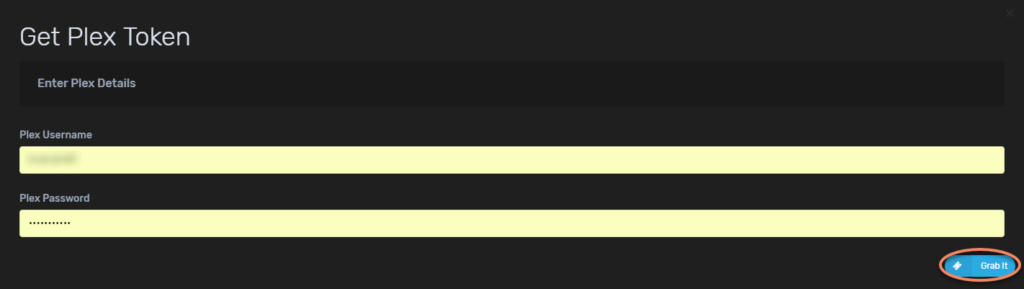

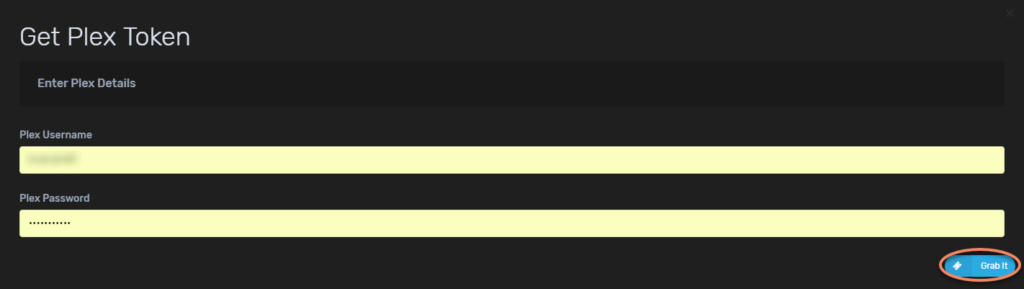

Enter your username and password for Plex and click Grab It:

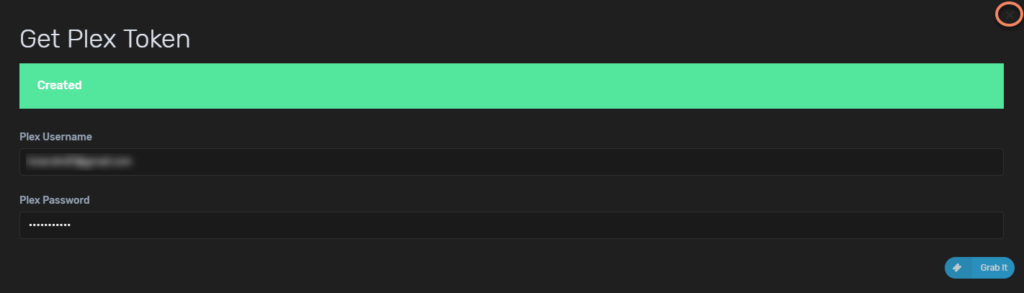

Assuming you remember your username and password correctly, you should get a message saying that it was created and you can now click the x to go see it:

Now we can click on the little eye to see the Plex token. Copy and paste this somewhere as we will need it later.
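The token is what lets another application call Plex on your behalf. Plex’s HTTP API accepts it as an X-Plex-Token parameter. This hedged illustration builds the kind of URLs a homepage integration might request. It is not Organizr’s actual code. The two endpoints are the ones commonly used for this purpose: /status/sessions for active streams and /library/recentlyAdded for recent items. The server address (Plex’s default port is 32400) and the token values are placeholders, and the helper names are hypothetical. Treat the token like a password.

```python
from urllib.parse import urlencode


def plex_url(server, path, token, **params):
    """Build a Plex API URL with the token passed as X-Plex-Token (hypothetical helper)."""
    query = urlencode({**params, "X-Plex-Token": token})
    return f"{server.rstrip('/')}/{path.lstrip('/')}?{query}"


def homepage_urls(server, token):
    """URLs for the two homepage features enabled below: active streams and recent items."""
    return {
        "active_streams": plex_url(server, "/status/sessions", token),
        "recent_items": plex_url(server, "/library/recentlyAdded", token),
    }
```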

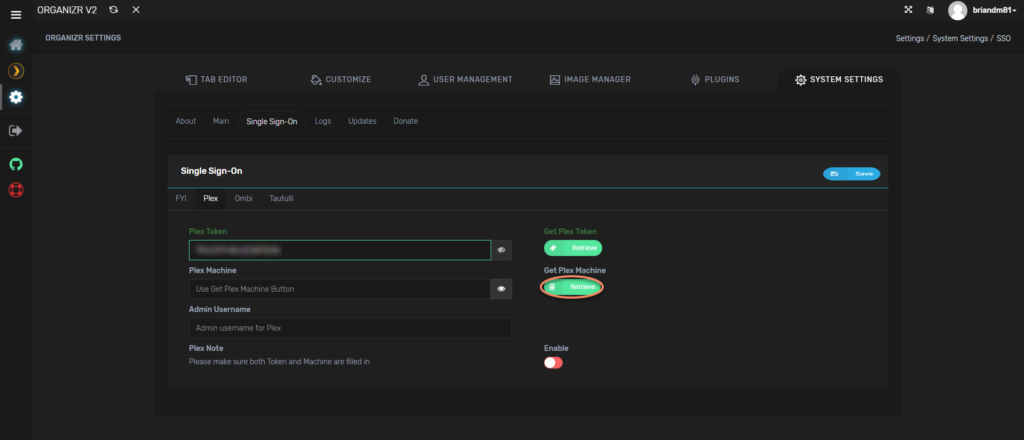

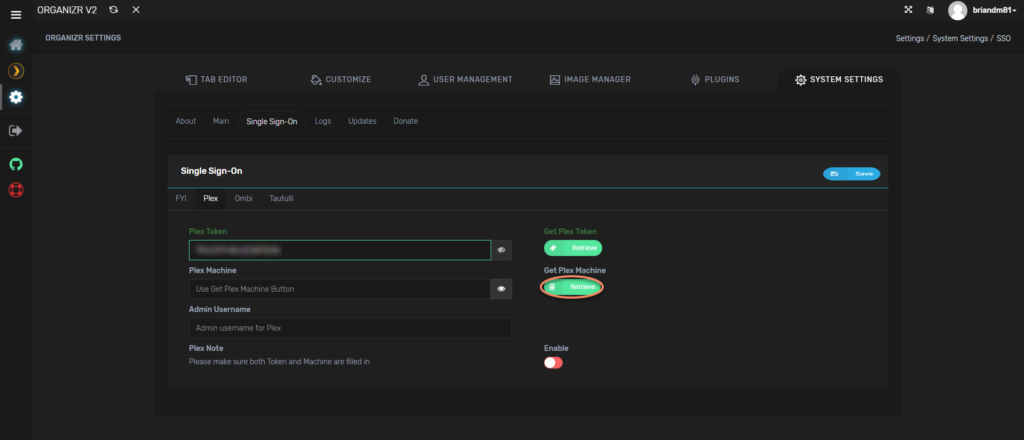

Next, we’ll click the retrieve button under Get Plex Machine:

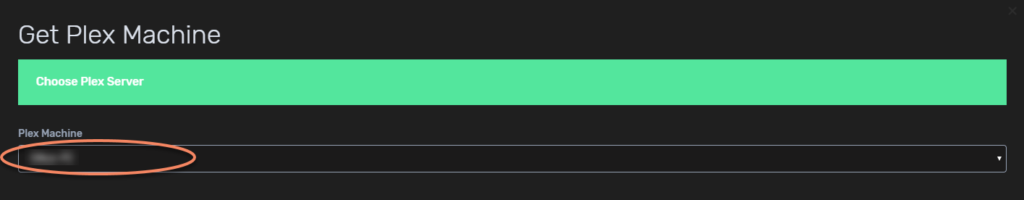

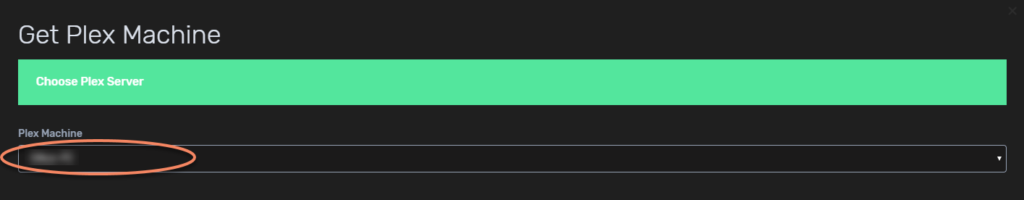

Choose your Plex Machine that you want to integrate into Organizr:

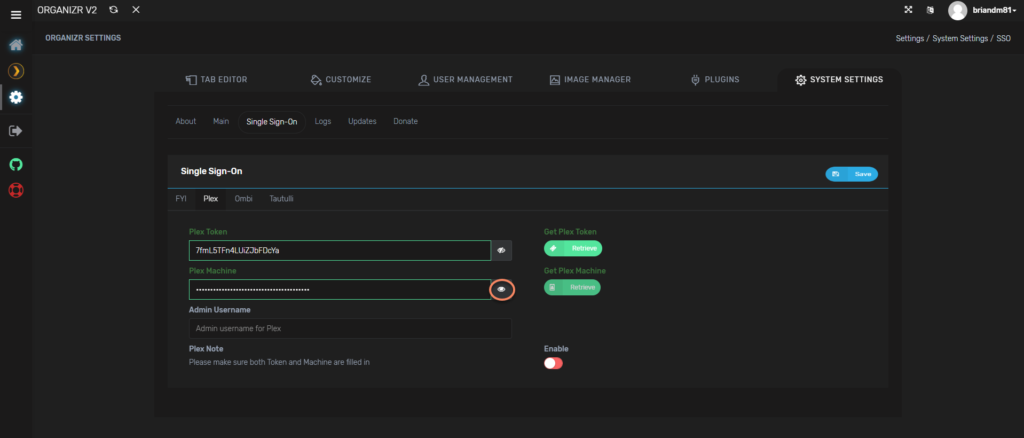

The interesting part here is that it doesn’t actually say it did anything after you make the selection. So just click the x and then we are ready to click the little eye again. This time we will copy and paste the Plex Machine Name:

Plex Homepage Integration

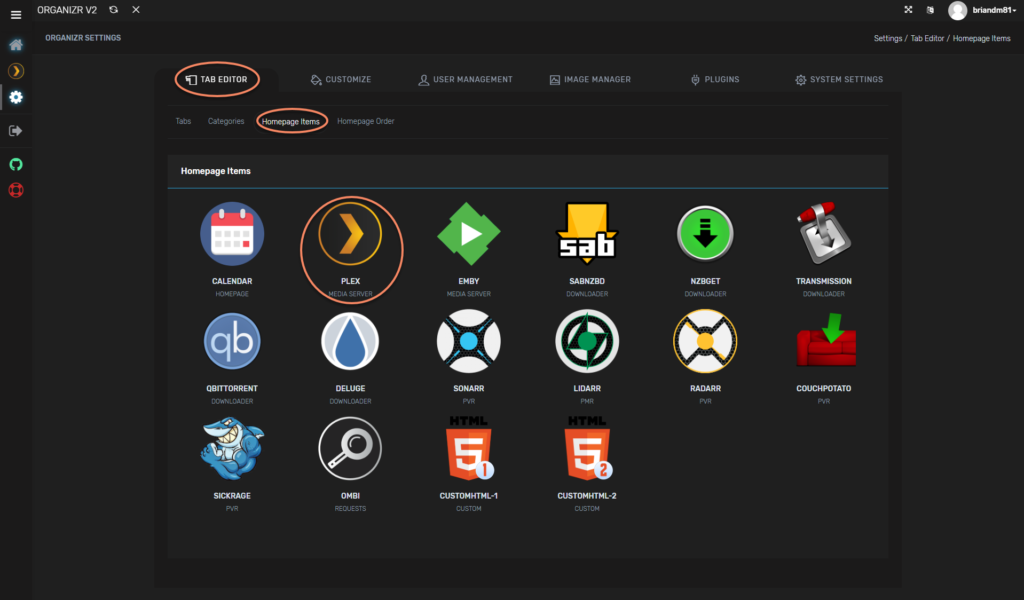

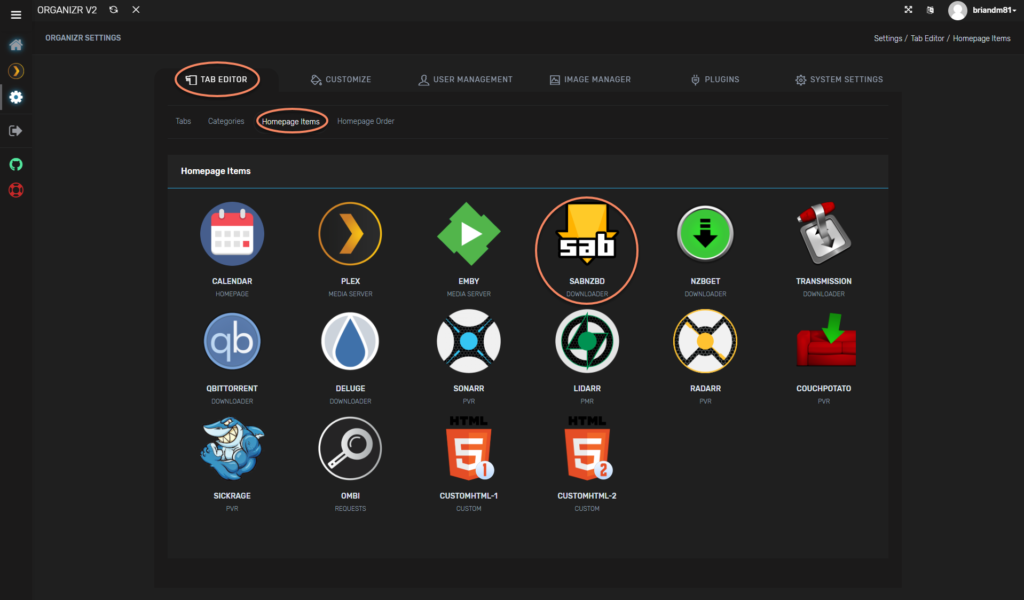

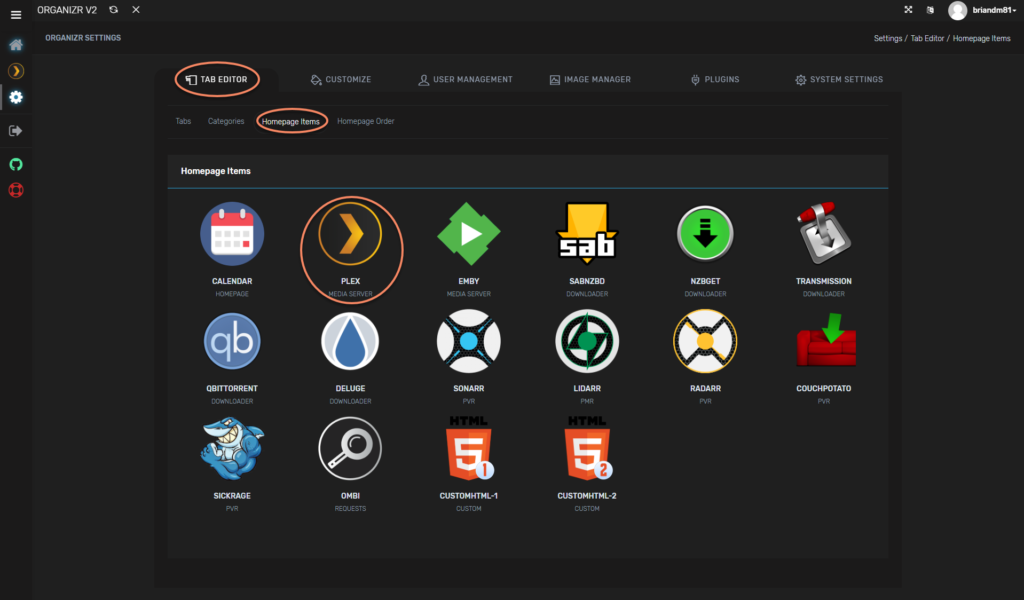

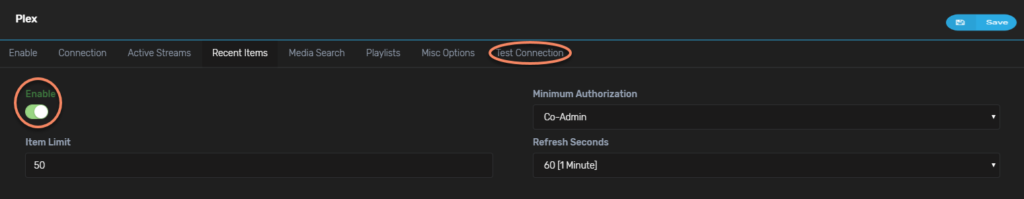

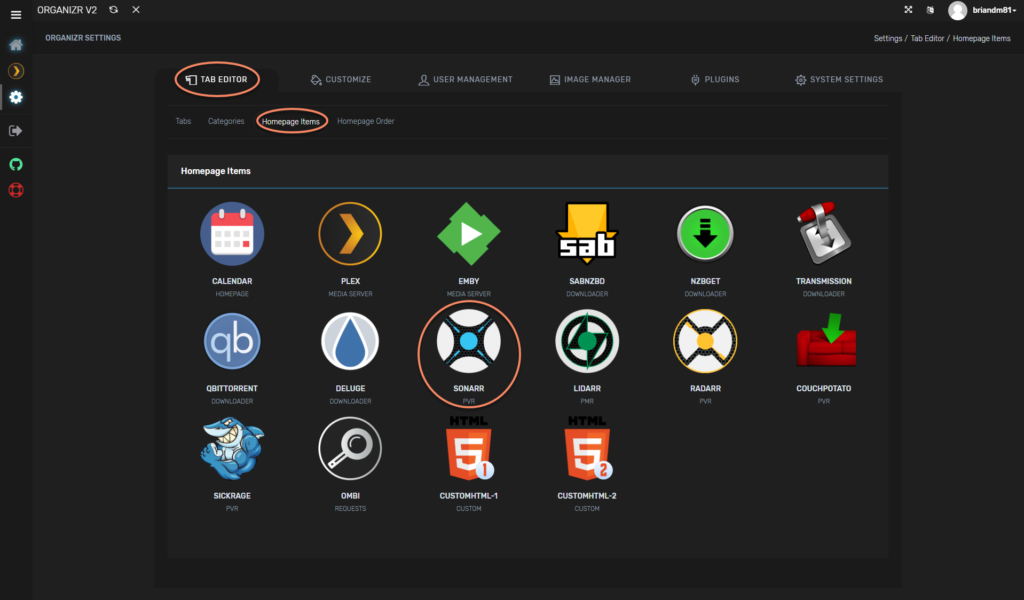

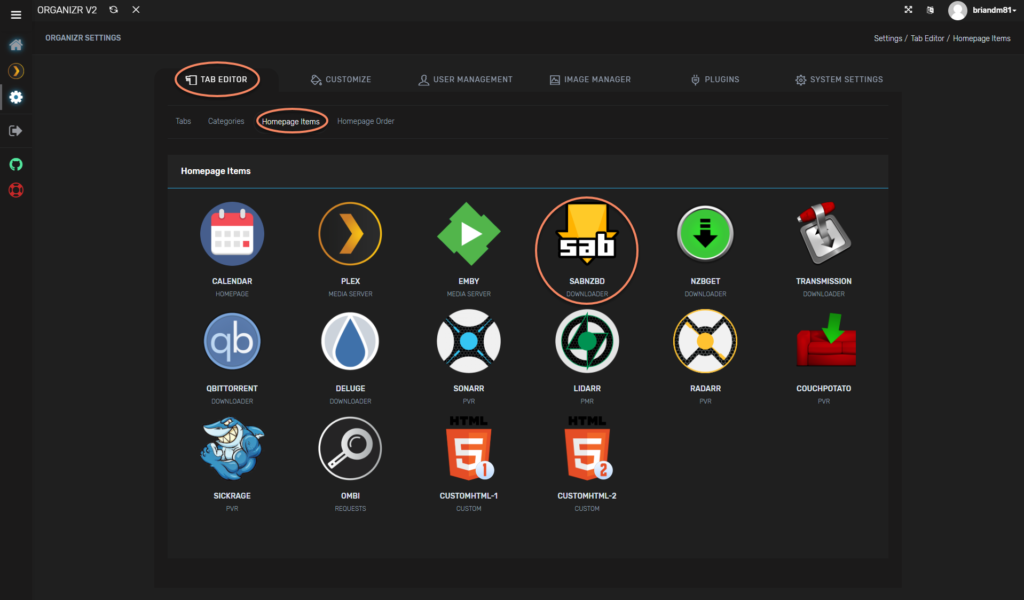

Now that we have our Plex tokens, we can configure the homepage integration with Organizr. Click on System Settings, then Tab Editor, followed by Homepage Items, and finally Plex:

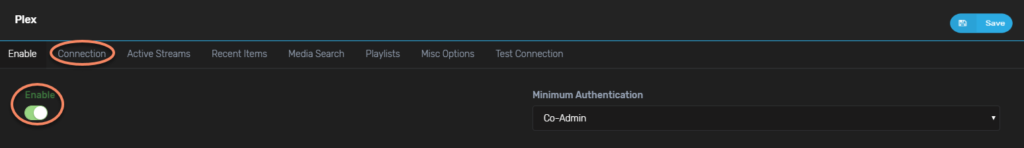

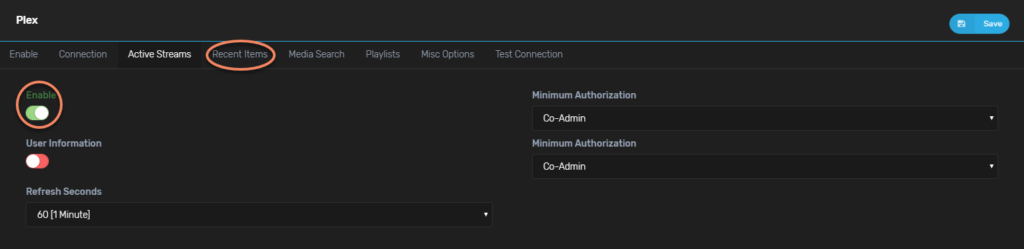

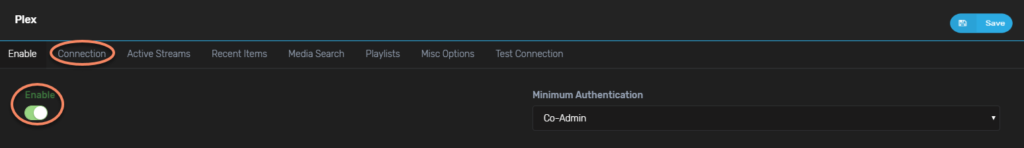

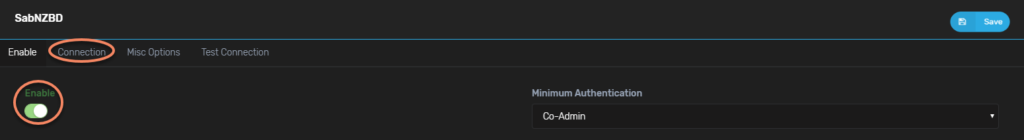

Start by enabling Plex integration and then click on Connection:

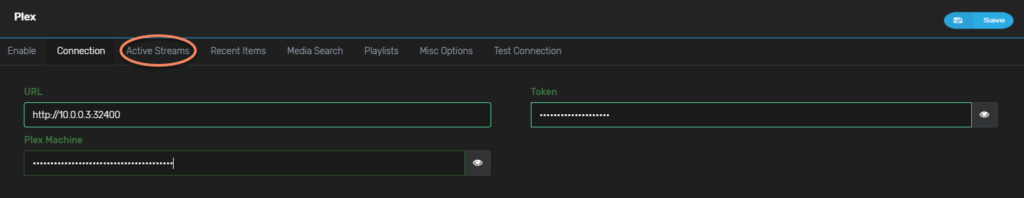

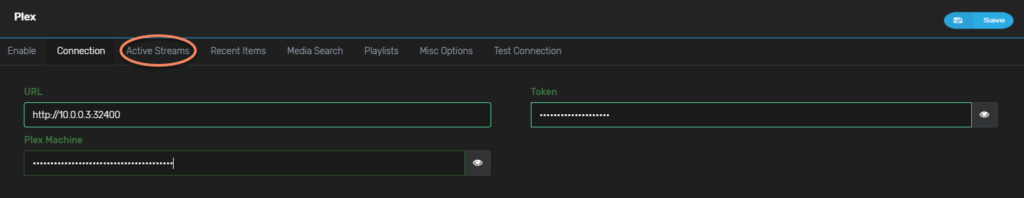

Now enter your Plex URL and then refer back to the Plex tokens that you copied and pasted somewhere. Click on Active Streams:

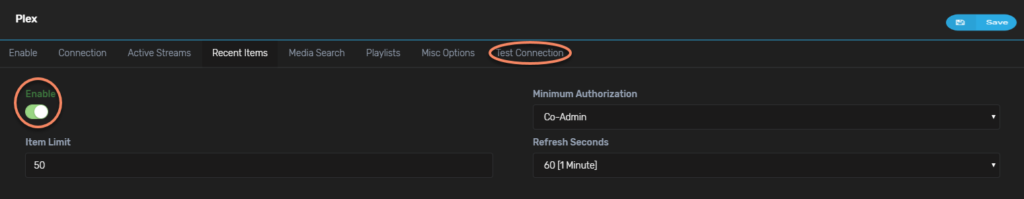

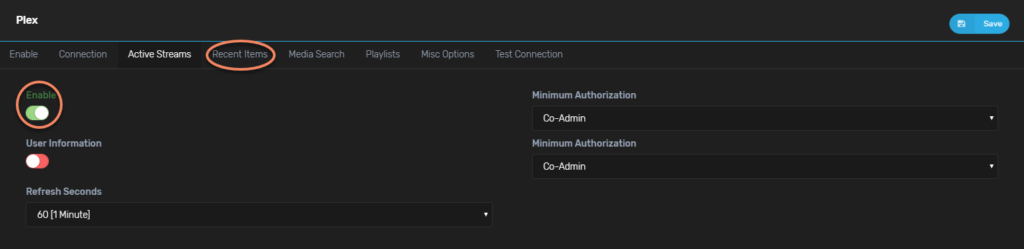

Enable active streams and click on Recent Items:

Enable recent items and click on Test Connection:

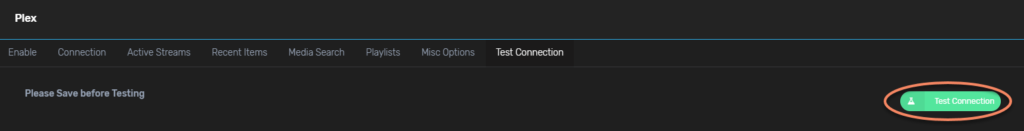

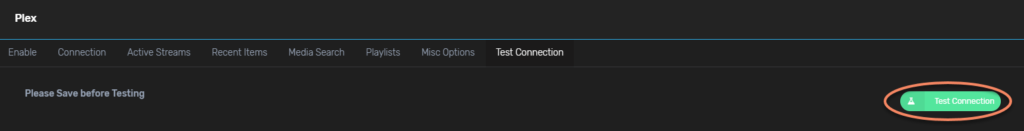

Be sure to click Save before finally clicking Test Connection:

Assuming everything went well, we should see a message in the bottom right corner that states:

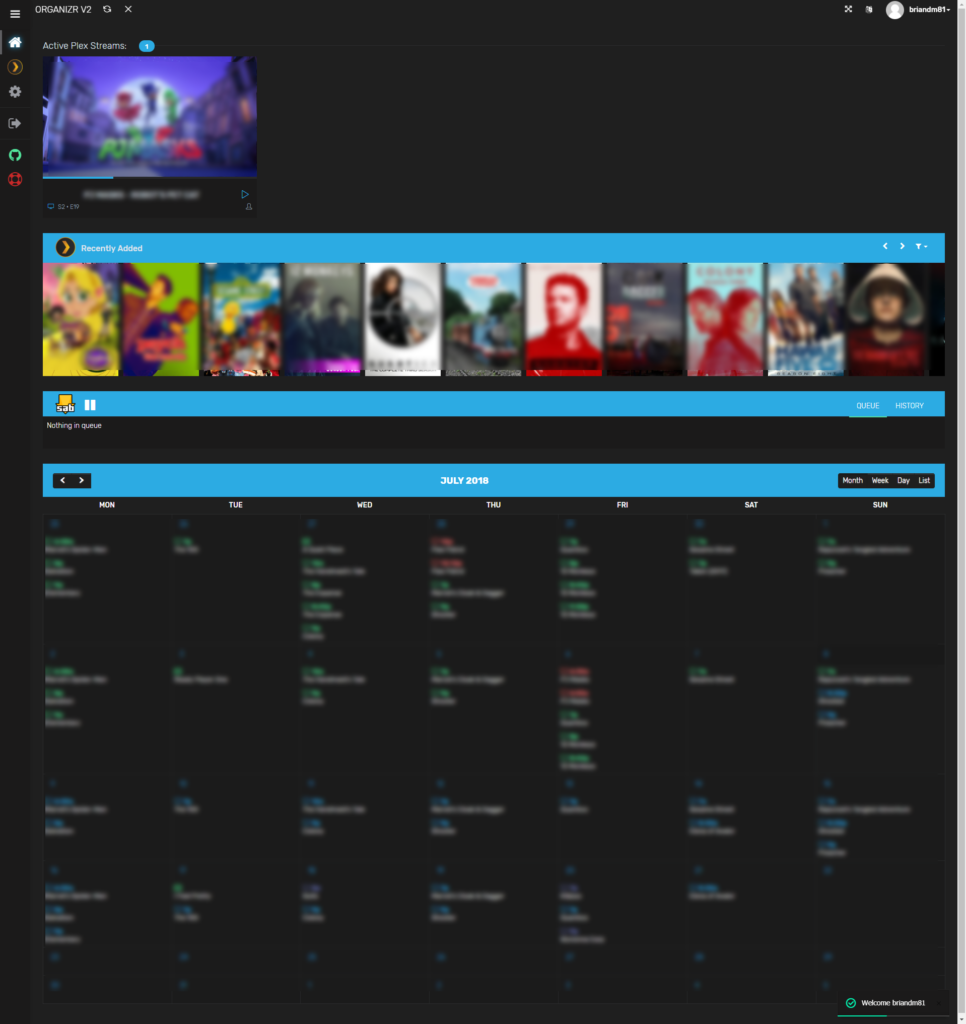

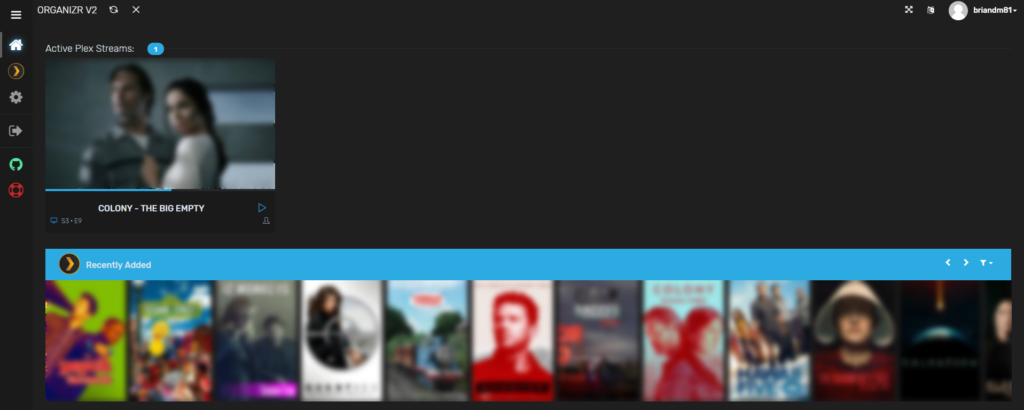

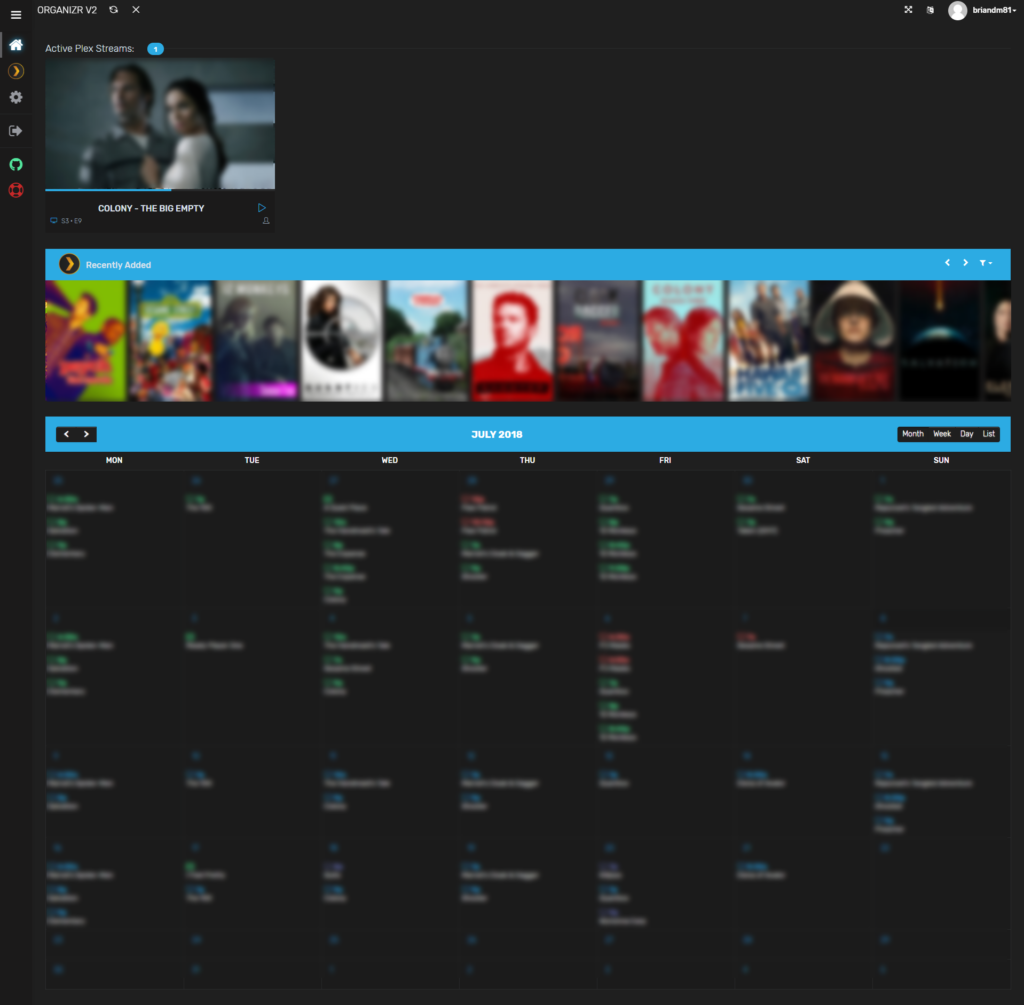

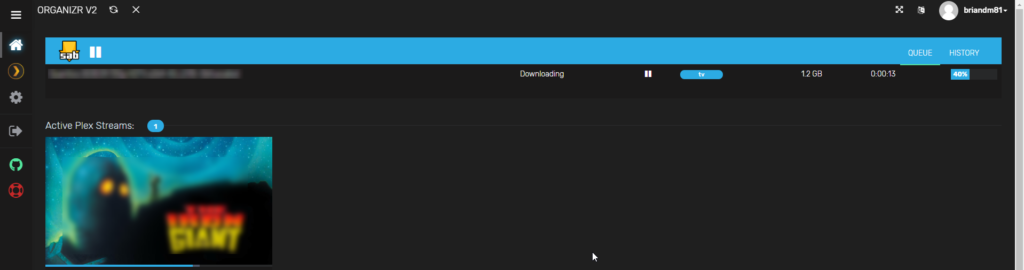

Now let’s go take a look at what we get when we reload Organizr:

Calendar Integration

Another really cool aspect of Organizr is the consolidated calendar. What does it consolidate? Things like Radarr, Sonarr, and Lidarr. It works much like the calendar on an iPhone or Android device in this way. Today we’ll configure Organizr with Radarr and Sonarr.

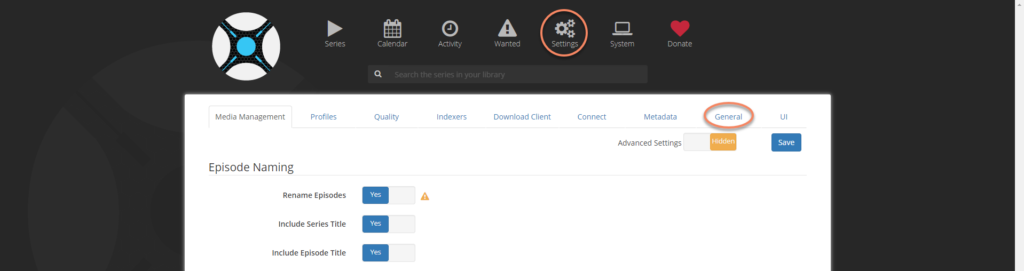

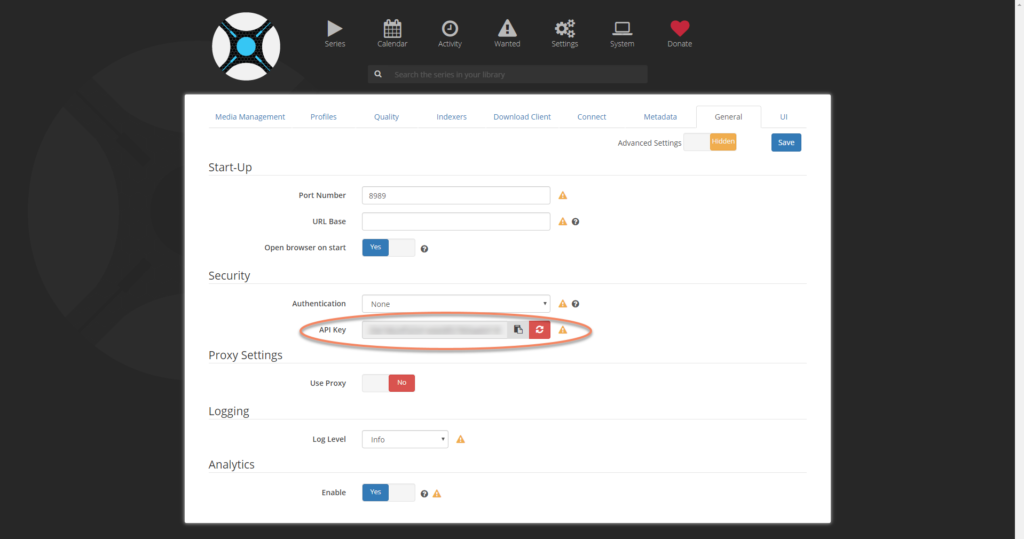

Sonarr

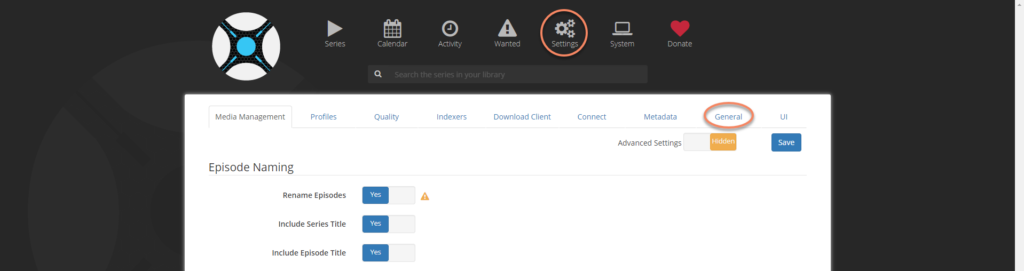

We’ll start by going to our Sonarr site and clicking on Settings and then the General tab:

Once on the general tab, you should see your API key:

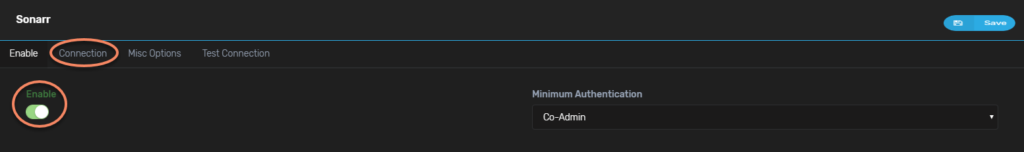

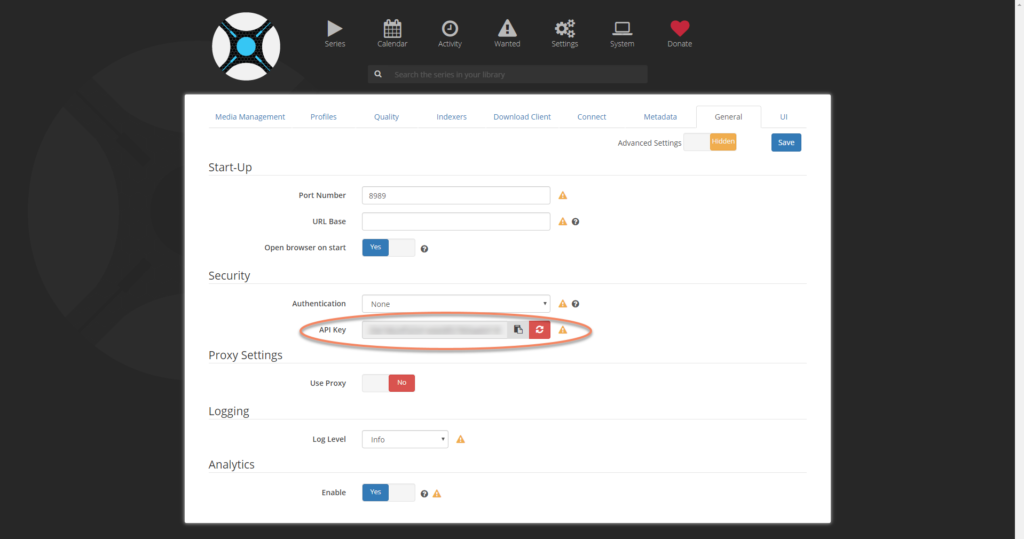

As with our Plex token, we’ll copy the API key and paste it somewhere while we go back into Organizr. Back in Organizr, go to settings and click on Tab Editor, then Homepage Items, and finally Sonarr:

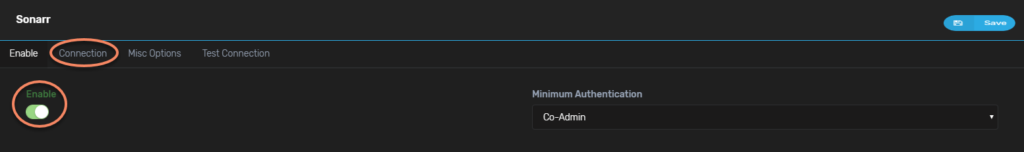

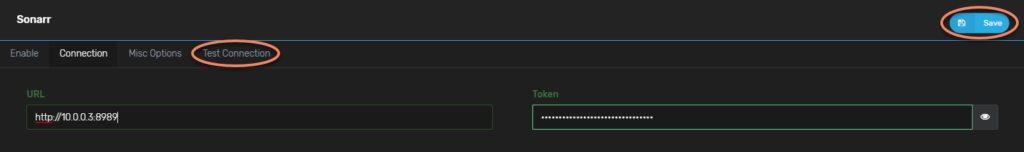

Click enable and then on the Connection tab:

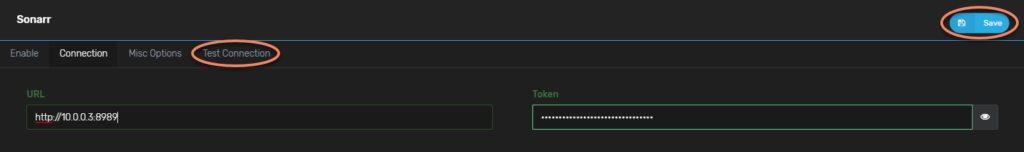

Now enter your Sonarr URL and the API key you copied, click Save, and click Test Connection:

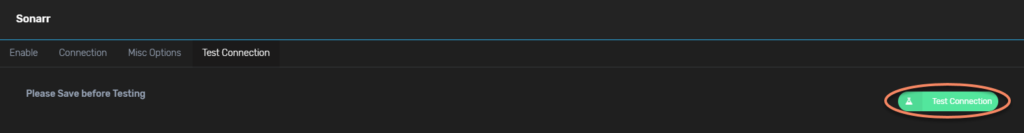

Click Test Connection:

Assuming everything went well, we should see a message in the bottom right corner that states:

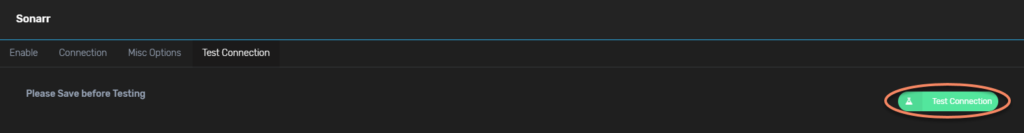

Now we can reload Organizr and check out our homepage:

Excellent! We have a calendar that is linked to Sonarr.

Radarr

Radarr and Sonarr configure exactly the same, so I won’t bore you with the same screenshots with a different logo.
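Behind the calendar, the integration asks each app for upcoming items using its API key. This is a hedged sketch of that kind of request. It assumes the Sonarr v2 API that was current when this was written, which exposes /api/calendar with start and end dates and an apikey parameter. It also assumes Radarr’s API of the same era has the same shape. Later Sonarr releases moved to /api/v3/calendar, which is why the path is a parameter. The hosts, ports (8989 and 7878 are the usual defaults), and key are placeholders, and calendar_url is a hypothetical helper.

```python
from datetime import date, timedelta
from urllib.parse import urlencode


def calendar_url(base_url, api_key, start, days=7, api_path="/api/calendar"):
    """Build a calendar request URL covering `days` days from `start` (hypothetical helper)."""
    end = start + timedelta(days=days)
    query = urlencode({"start": start.isoformat(), "end": end.isoformat(), "apikey": api_key})
    return f"{base_url.rstrip('/')}{api_path}?{query}"


if __name__ == "__main__":
    print(calendar_url("http://192.168.1.20:8989", "YOUR_API_KEY", date.today()))
```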

SABnzbd

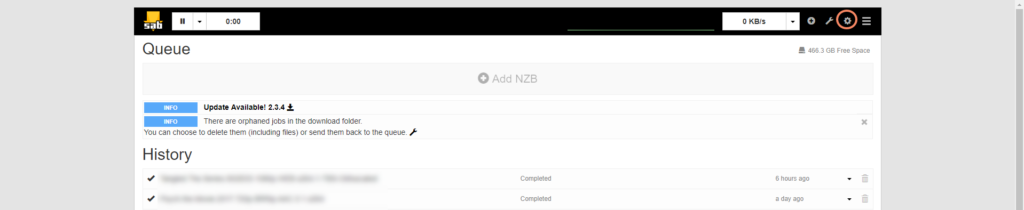

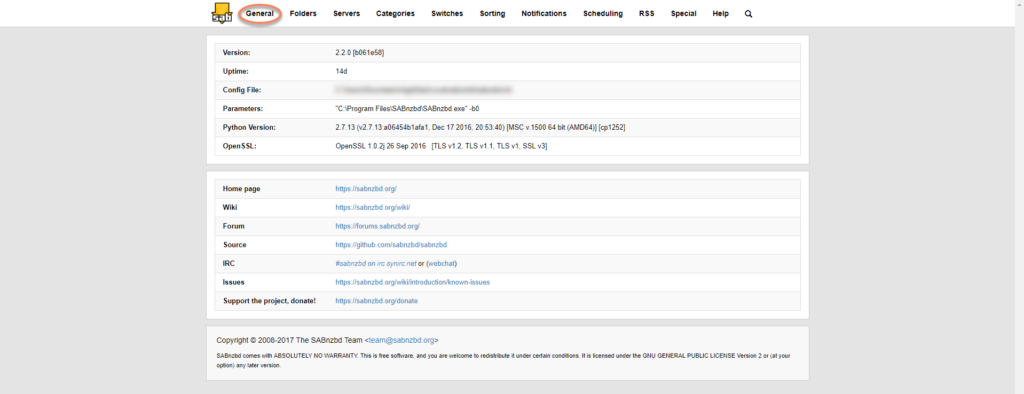

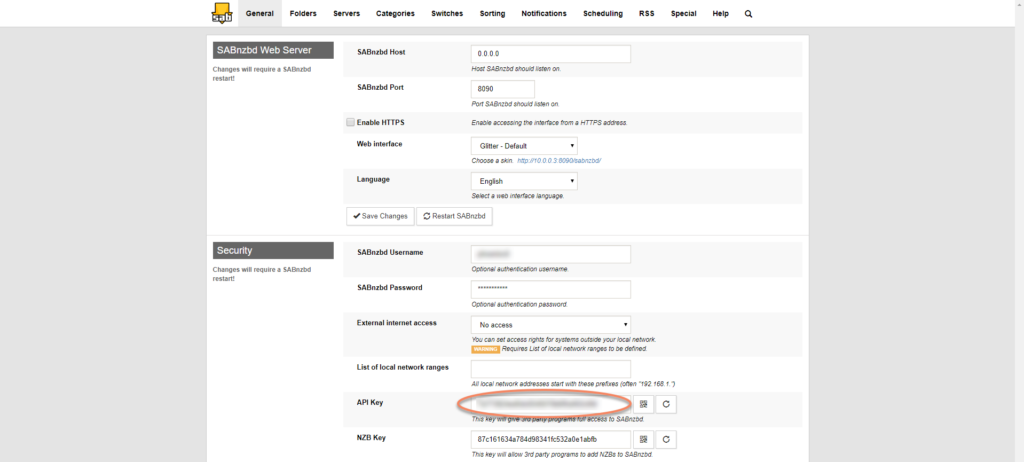

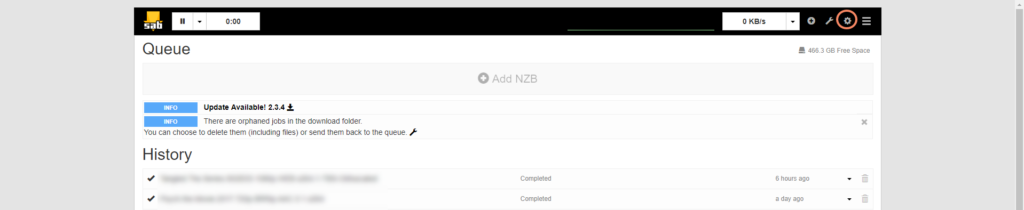

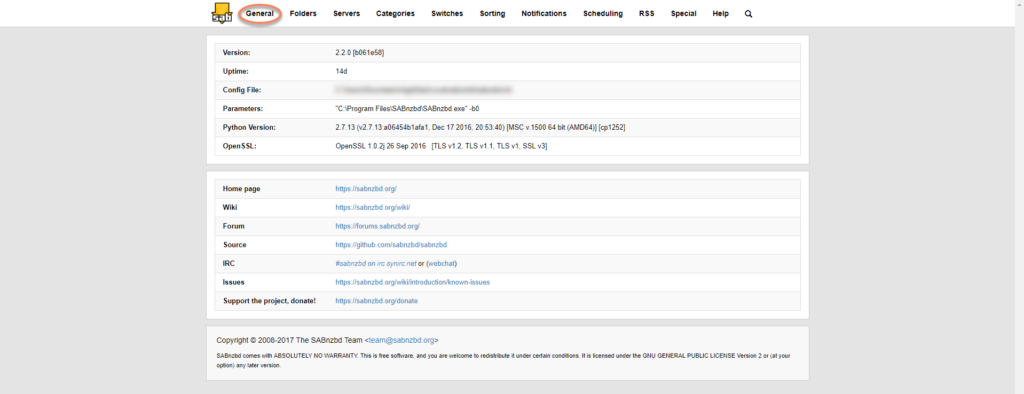

The last Homepage item we will configure is SABnzbd. Before we configure Organizr, we’ll go get our API key just like Plex, Sonarr, and Radarr. Click on configuration:

Click on the General tab:

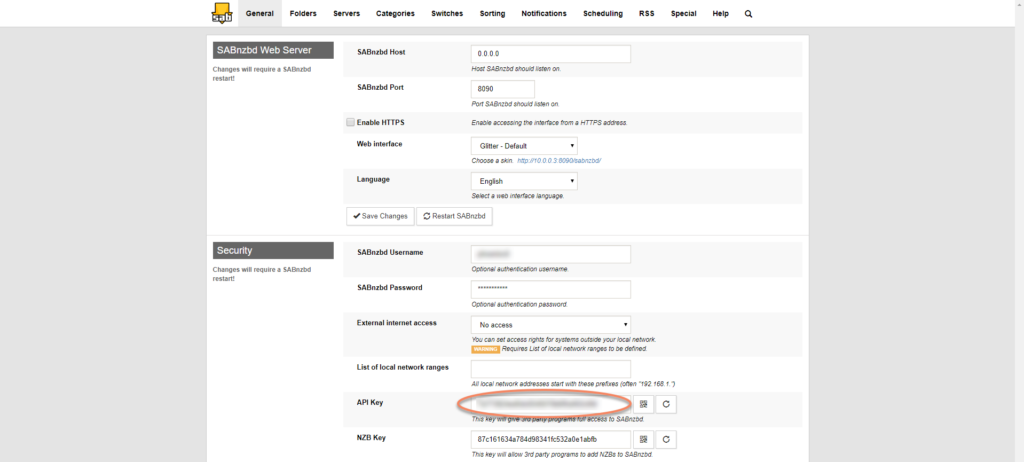

Now we can copy our API Key and paste it somewhere for later:

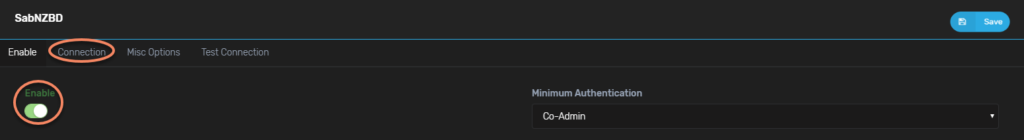

Back in Organizr, go to settings, click Tab Editor, Homepage Items, and finally SABNZBD:

Click enable and then click Connection:

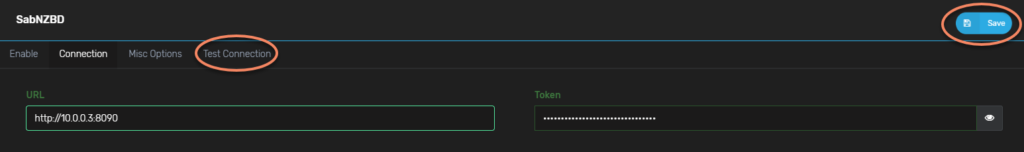

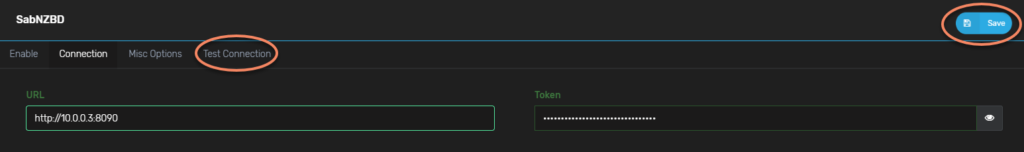

Enter your SABnzbd URL, your API key, click Save, and then Test Connection:

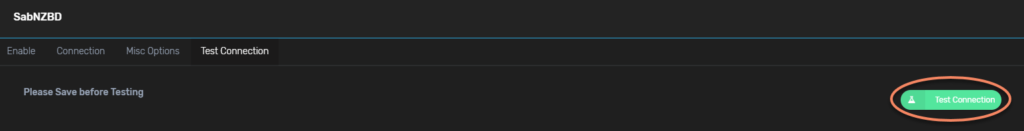

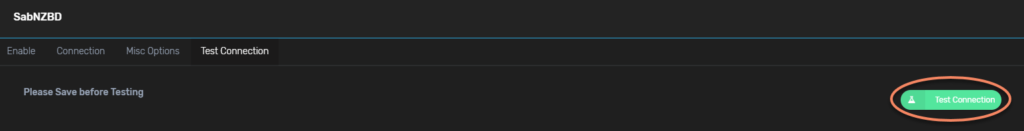

Now click Test Connection:

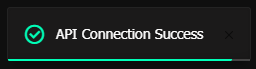

Assuming everything went well, we should see a message in the bottom right corner that states:

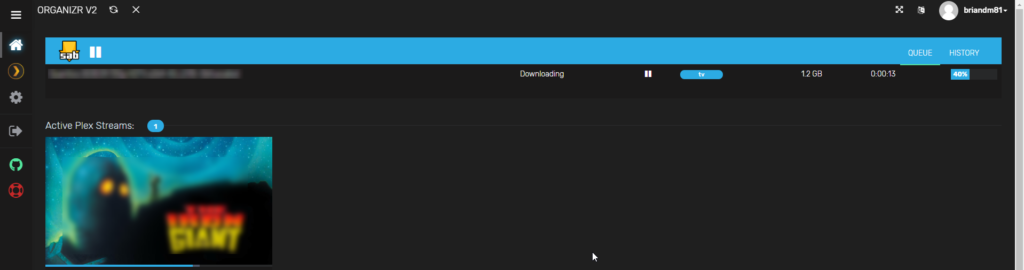

Now let’s reload Organizr and take a look at our homepage:

Excellent! Now we can move on to reordering everything the way we want it on the homepage.

Reordering

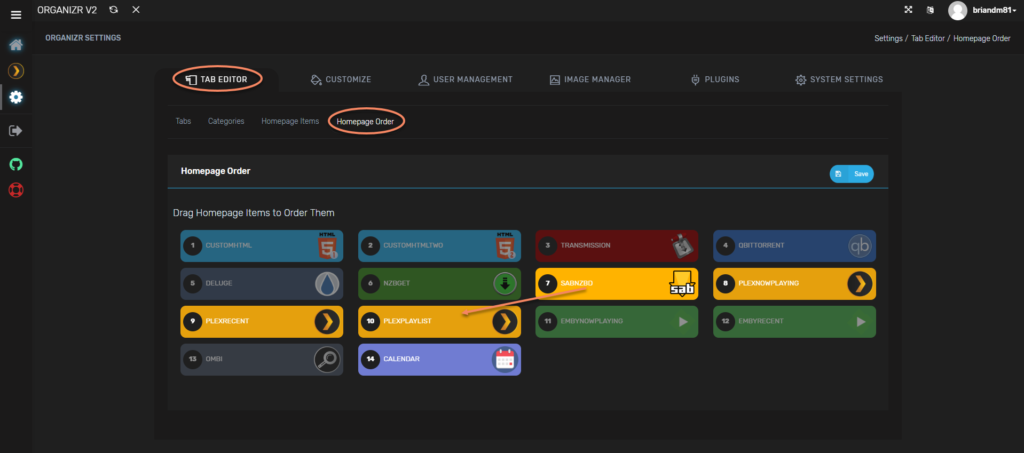

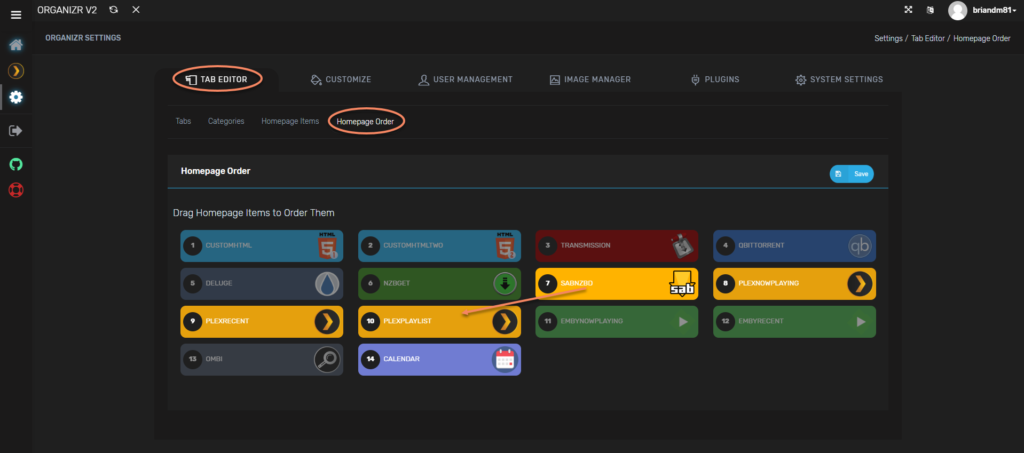

Go to settings and click on Tab Editor, then Homepage Order. I prefer to have Plex above SABnzbd, so I drag SABnzbd just after Plex:

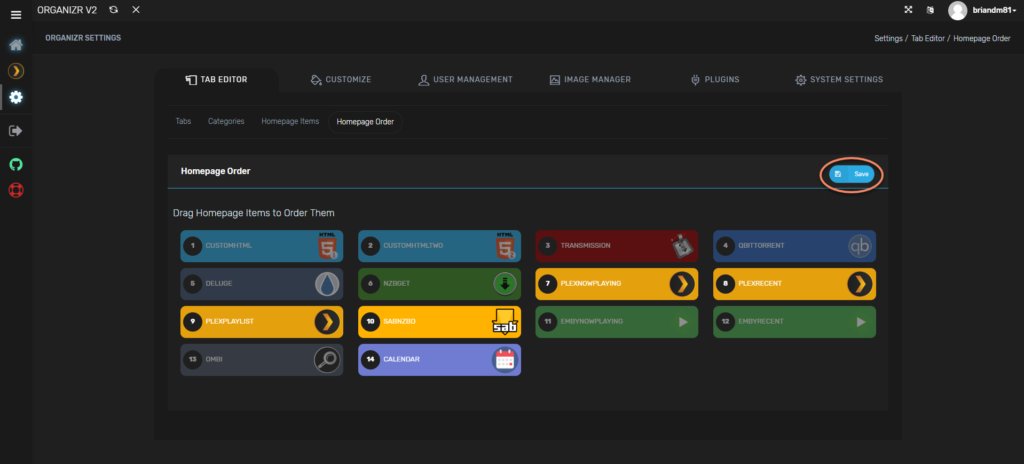

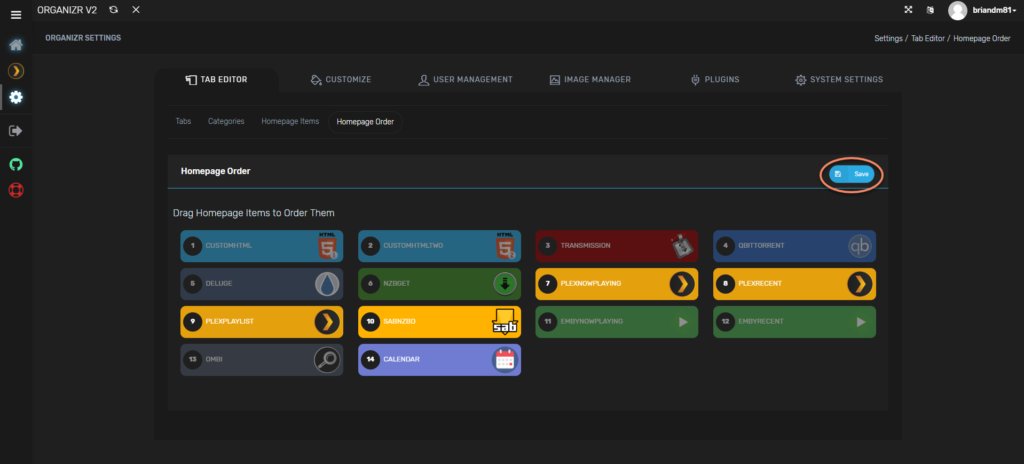

Be sure to click Save and it should look something like this:

Finally we can reload Organizr one last time and check it out:

Conclusion

And that’s that. We have a barebones Organizr configuration completed and we are ready to move on to InfluxDB (for real this time)! Happy dashboarding!

Brian Marshall

July 9, 2018

Welcome to Part 2 of my soon-to-be-one-million-part series about building your own Homelab Dashboard. Today we will be laying the foundation for our dashboard using an open source piece of software named Organizr. Before we dig any deeper into Organizr, let’s re-cap the series so far (yes, only two parts…so far):

What is Organizr?

So I know what you are thinking…in part 1 of this series I mentioned that I prefer the custom dashboard built by Gabisonfire and extended by me. And while that is still true, I think that the broader audience would benefit more from Organizr’s far larger set of functionality. Additionally, the installer does a fantastic job of taking a base Linux install and bringing along all of the dependencies without any real effort. I’m always a fan of that. But, what is Organizr? Essentially, it is a pre-built homelab organization tool that is built on PHP and totally open source. It has really great integration with many of the major media and server platforms out there. Here’s a short list:

I also find that it just works with things like Grafana and really anything that works inside an iframe. And if it doesn’t work in an iframe, just set it to pop out so that you can still have links to everything in one place. I use it for these non-media related items:

- Grafana

- IPMI for all of my servers (pop-out)

- FreeNAS

- pfSense (pop-out)

- vCenter (pop-out)

- My Hyperion instances (this is now officially a Hyperion post!)

Getting Started

As I mentioned earlier, the goal of this set of tutorials is to provide a soup-to-nuts solution. As a result, I’m going to assume that you need to install an operating system, configure that operating system, and then you will be ready to proceed.

Why am I making all of these assumptions? Mostly because that’s what I went through when I started this process. I’ve used Linux quite a bit over the years, but at the end of the day, I’m still a “Windows Guy.” In my industry, most of my work is done…in Windows. While a lot of this software will “work” in Windows, in most cases Windows is an afterthought, or beta…forever. So where do we start?

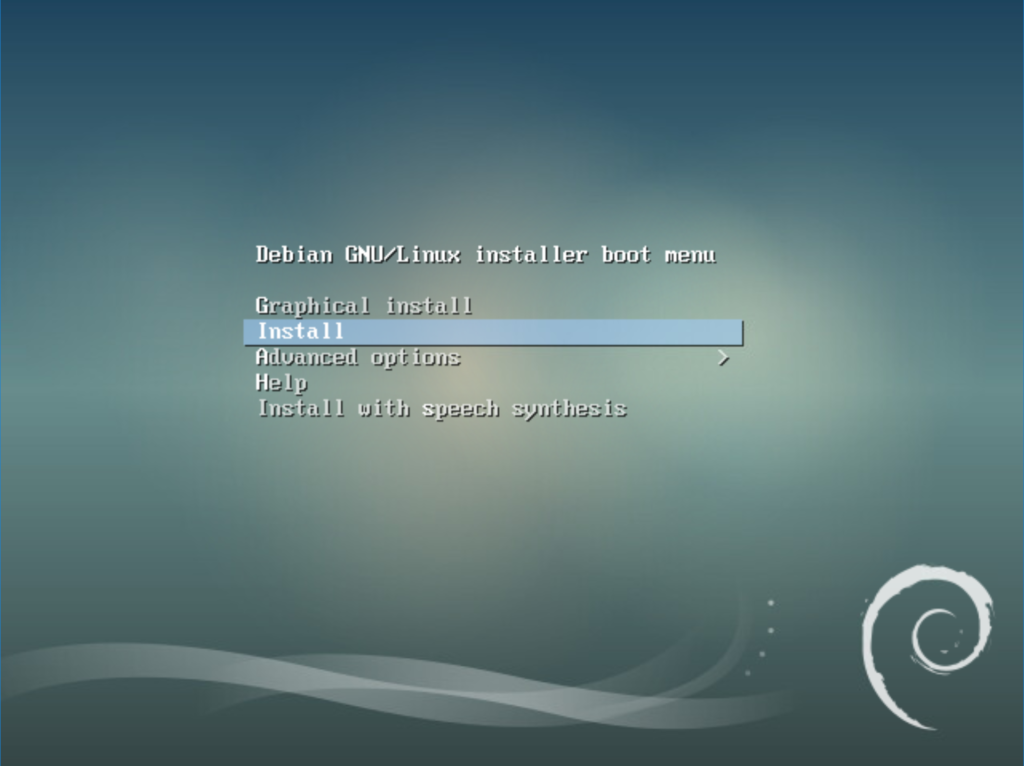

Installing Linux

We start at the very beginning with our operating system. I’ve worked with a lot of distributions over the years, but I always seem to end up on Debian. So I’ve decided to use Debian 9 (stretch). When I install, I use the net installer. You can find more information here:

https://www.debian.org/distrib/netinst

Or, you can just skip directly to downloading Debian 9.4 (netinst) here:

https://cdimage.debian.org/debian-cd/current/amd64/iso-cd/debian-9.4.0-amd64-netinst.iso

The reason I use the net installer is that it makes future package installation easier. It will configure the package manager during the installation and we’ll be ready to do basic configuration prior to installing Organizr. You can skip all of this if you already have a system you will be using or if you happen to know what you are doing…better than I do.

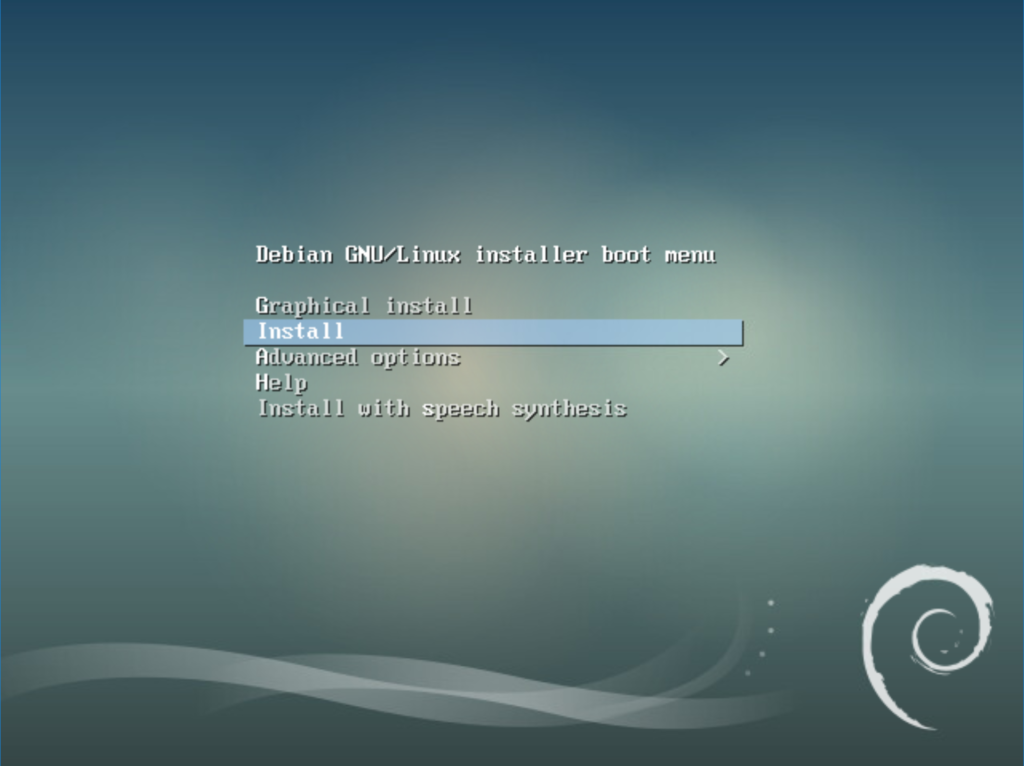

We start off by selecting the old-school installer:

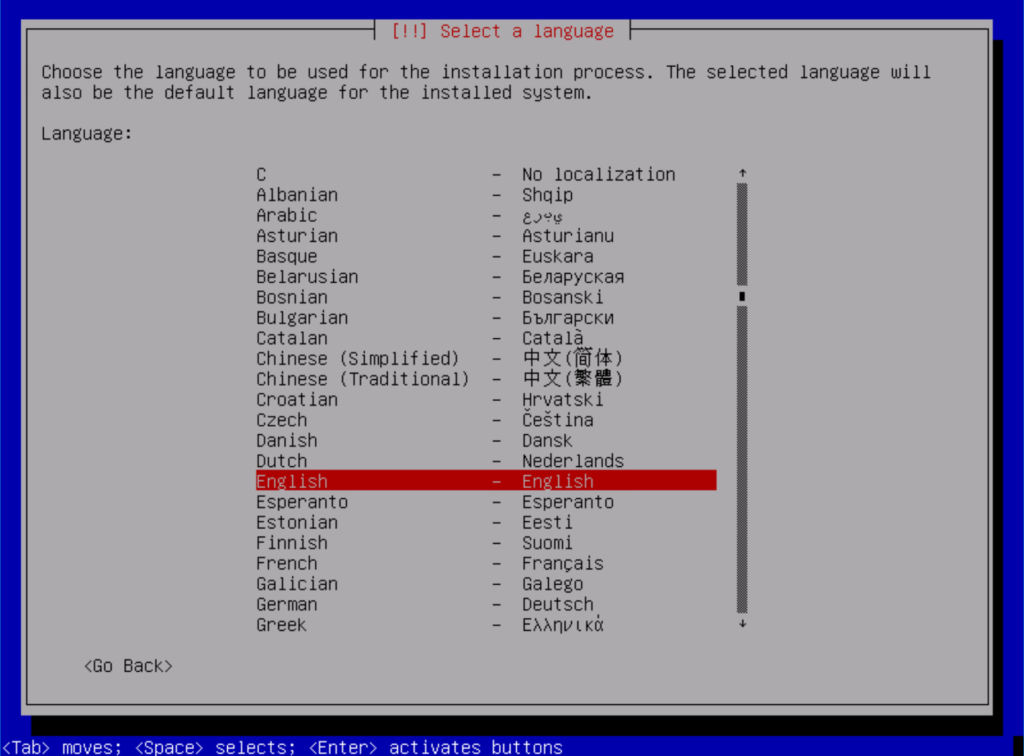

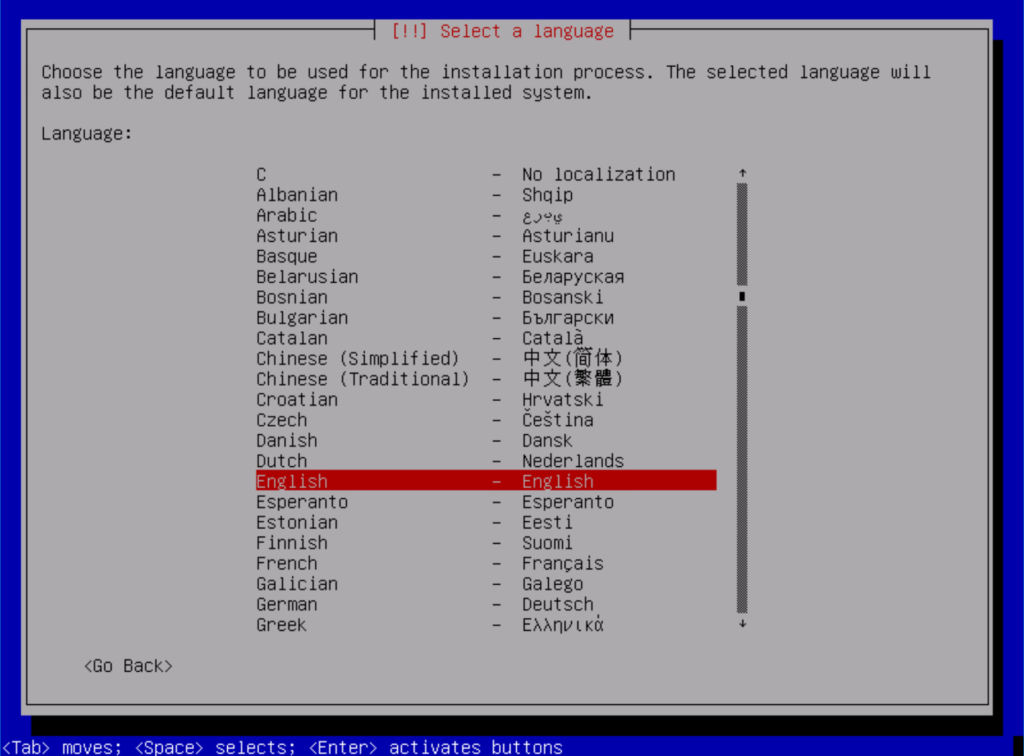

Select your language:

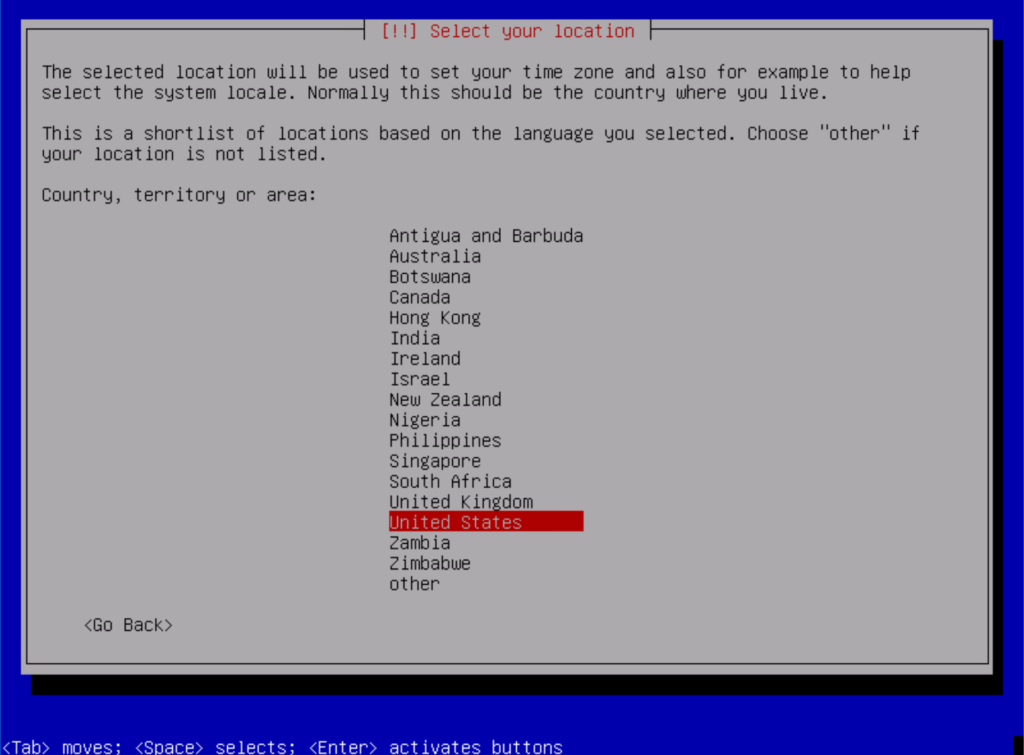

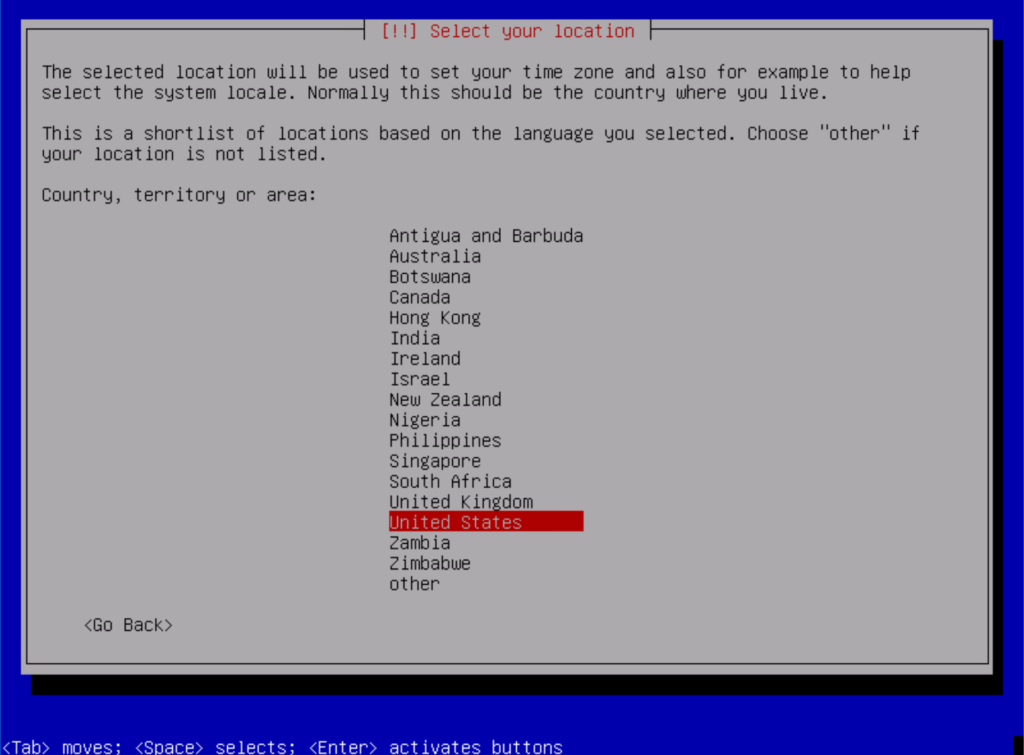

Next select your location:

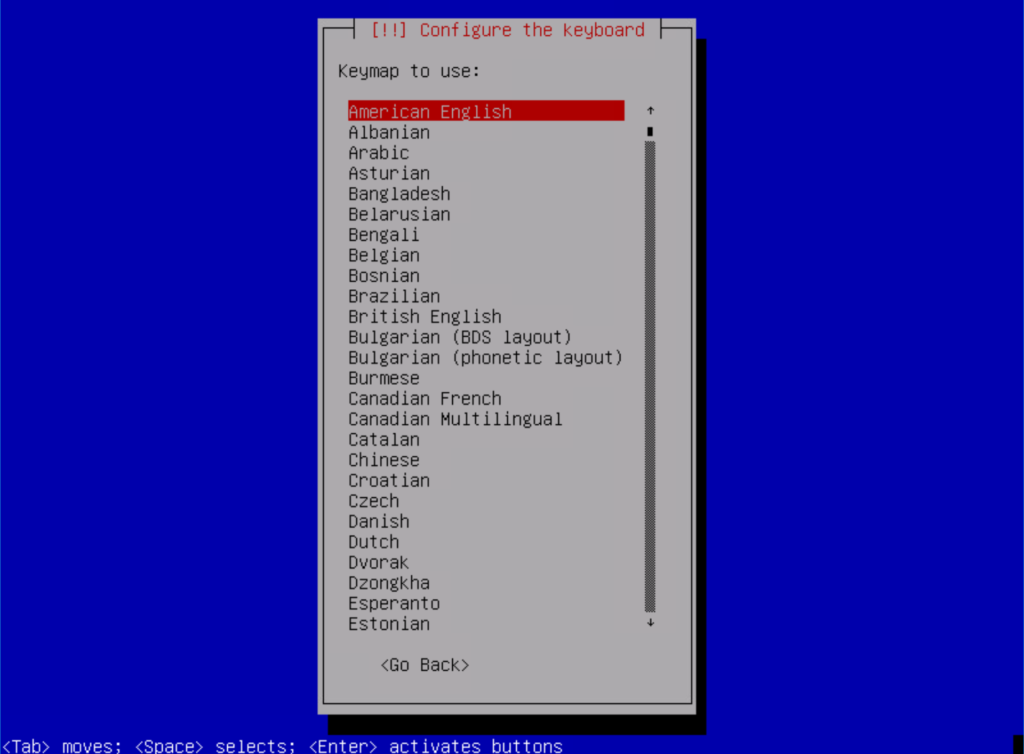

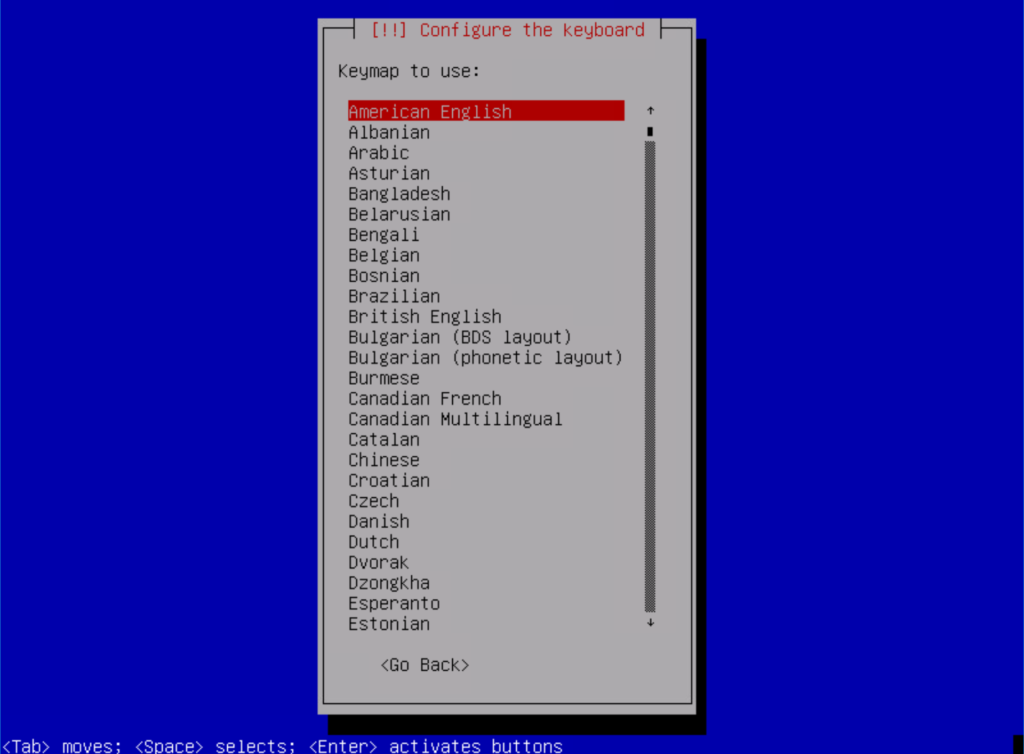

Select a keymap:

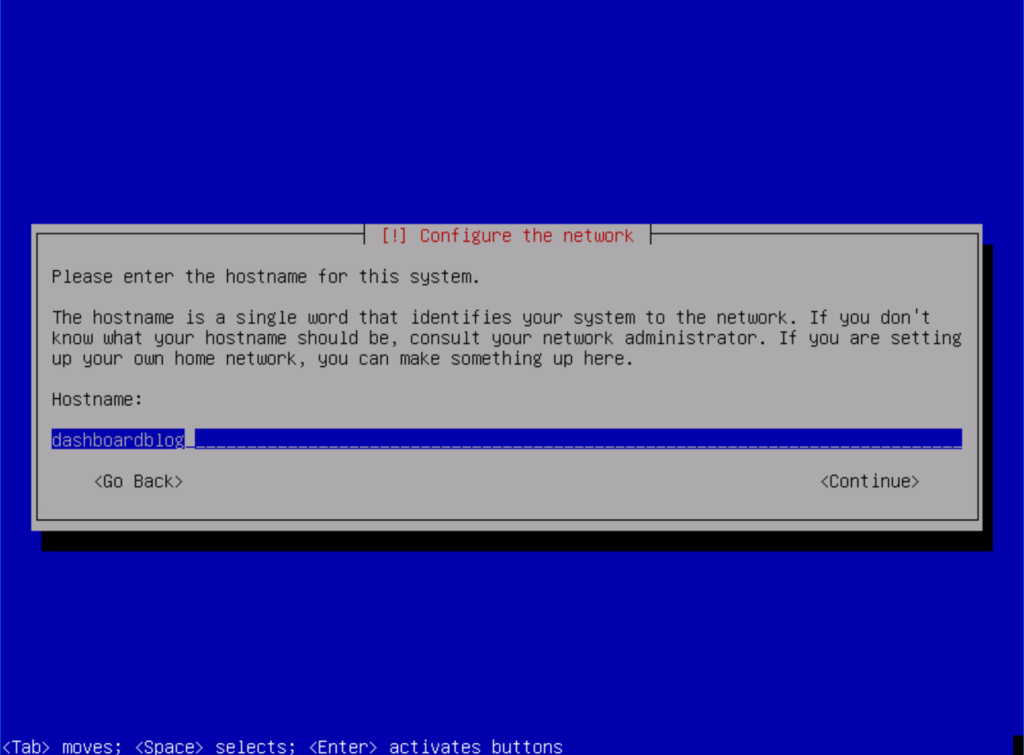

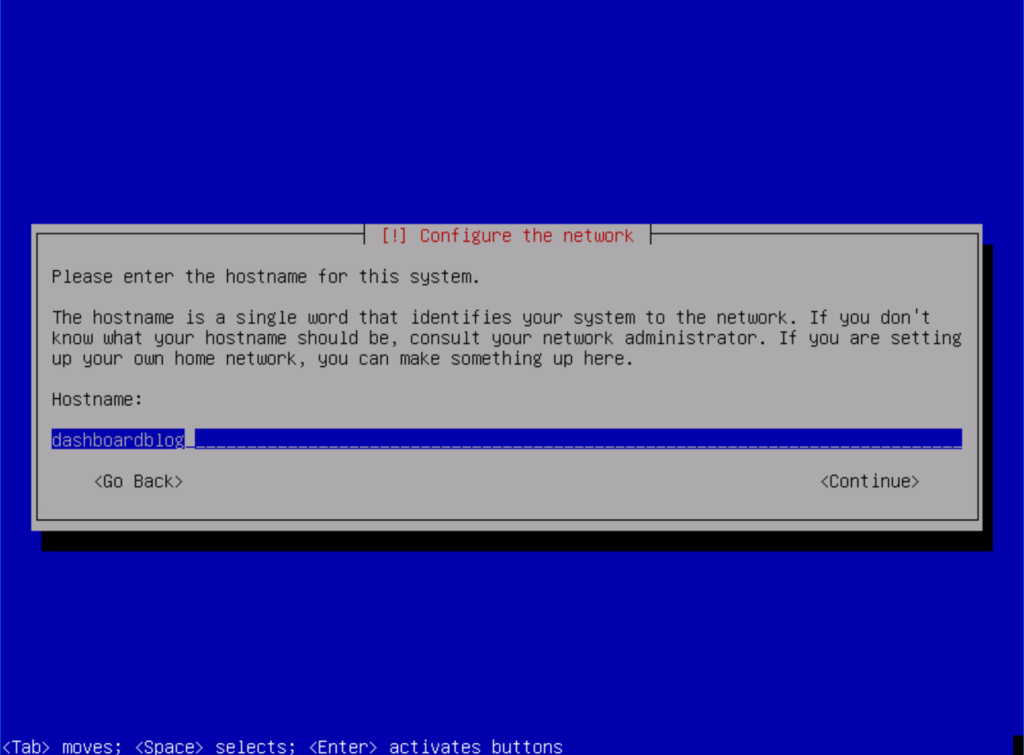

Enter your hostname:

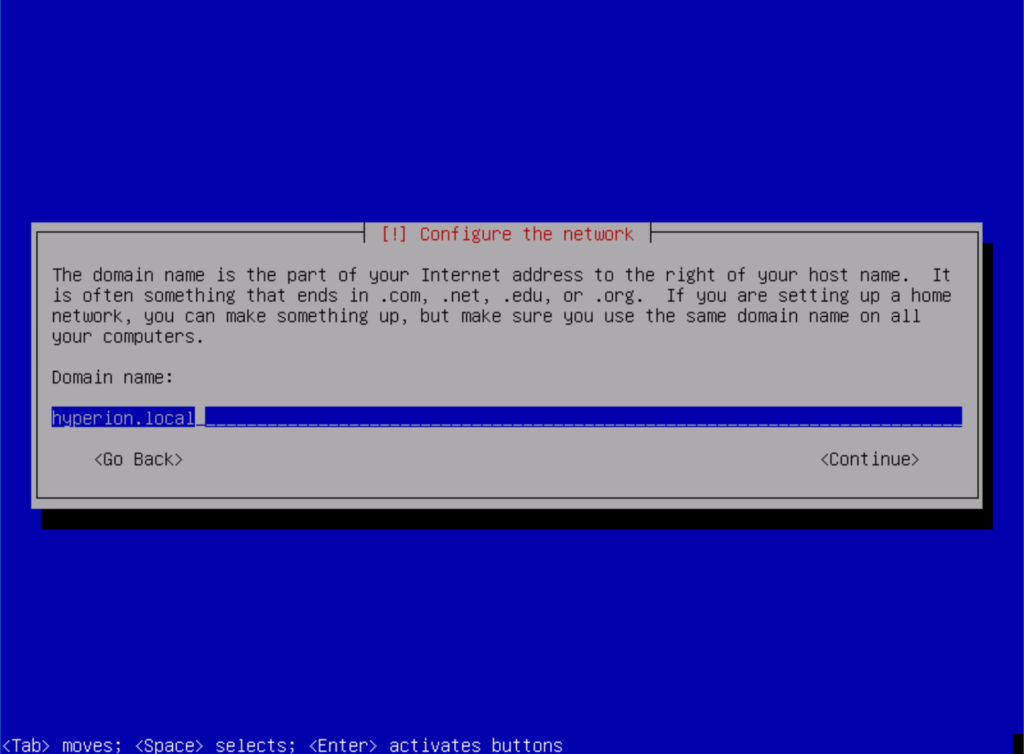

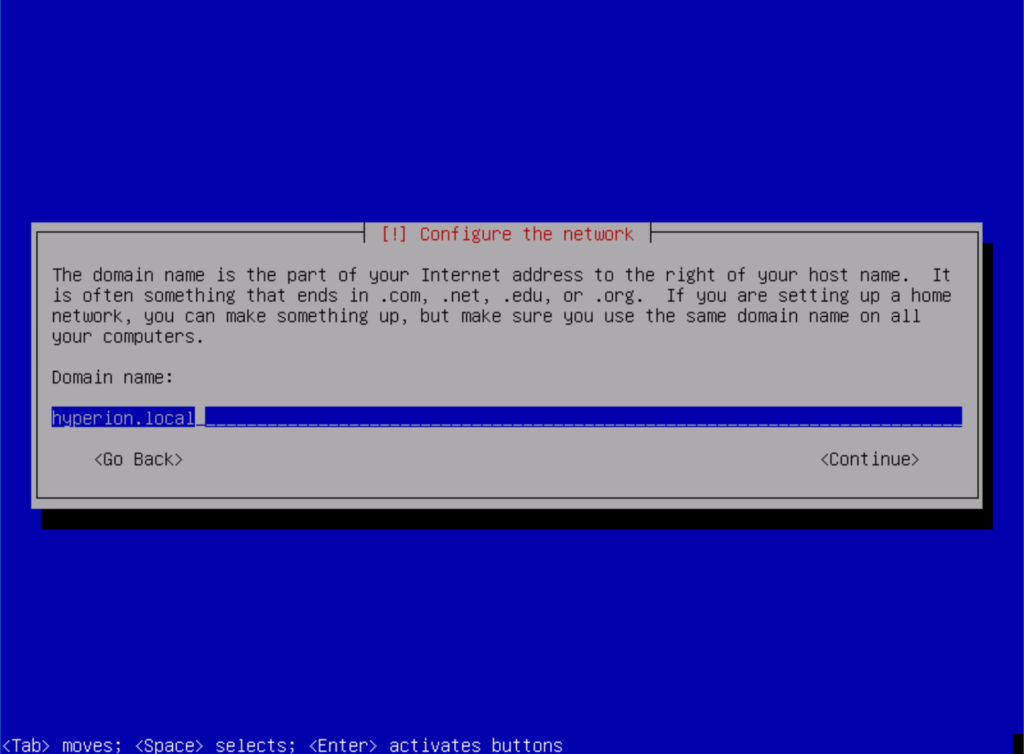

Now, enter your domain name:

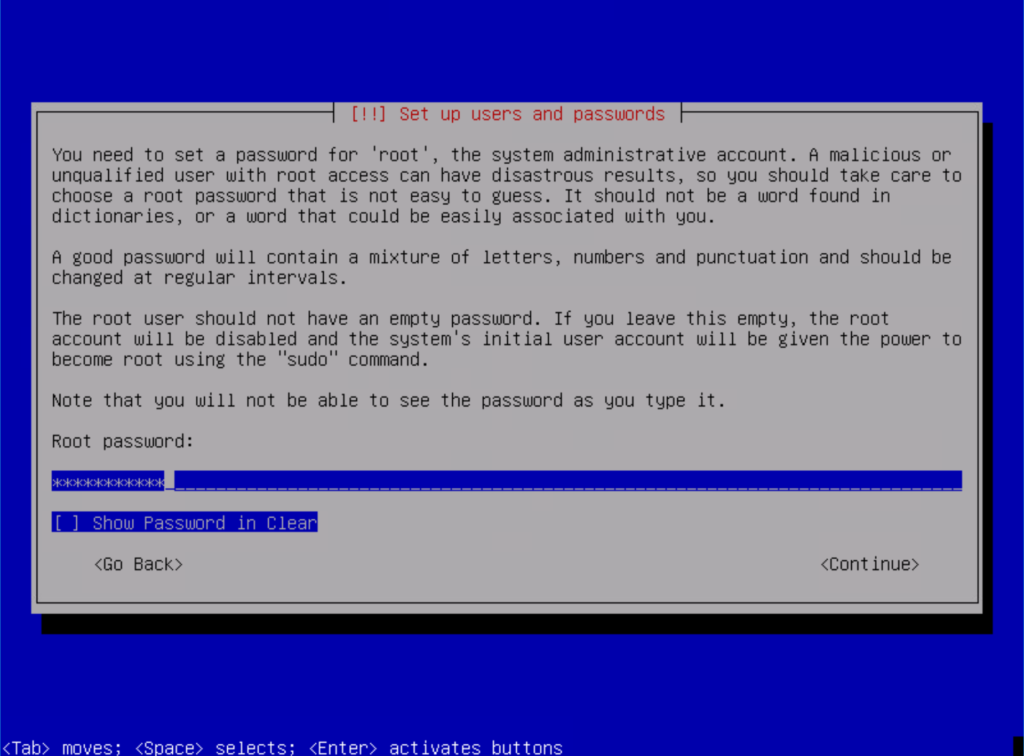

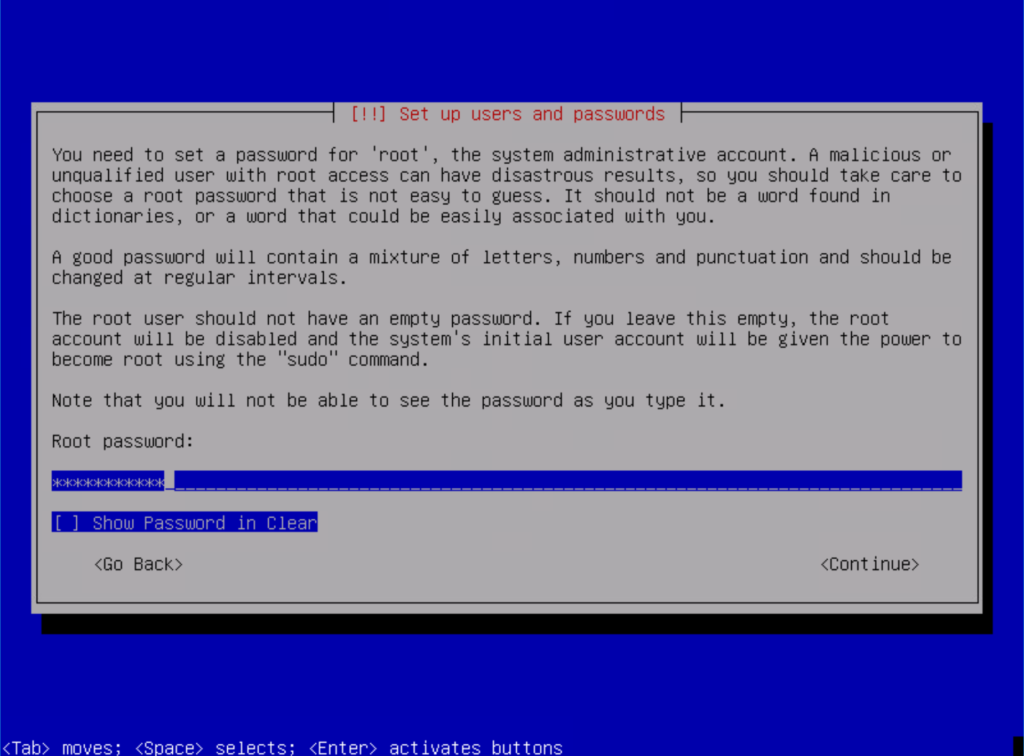

Enter your password for the root account:

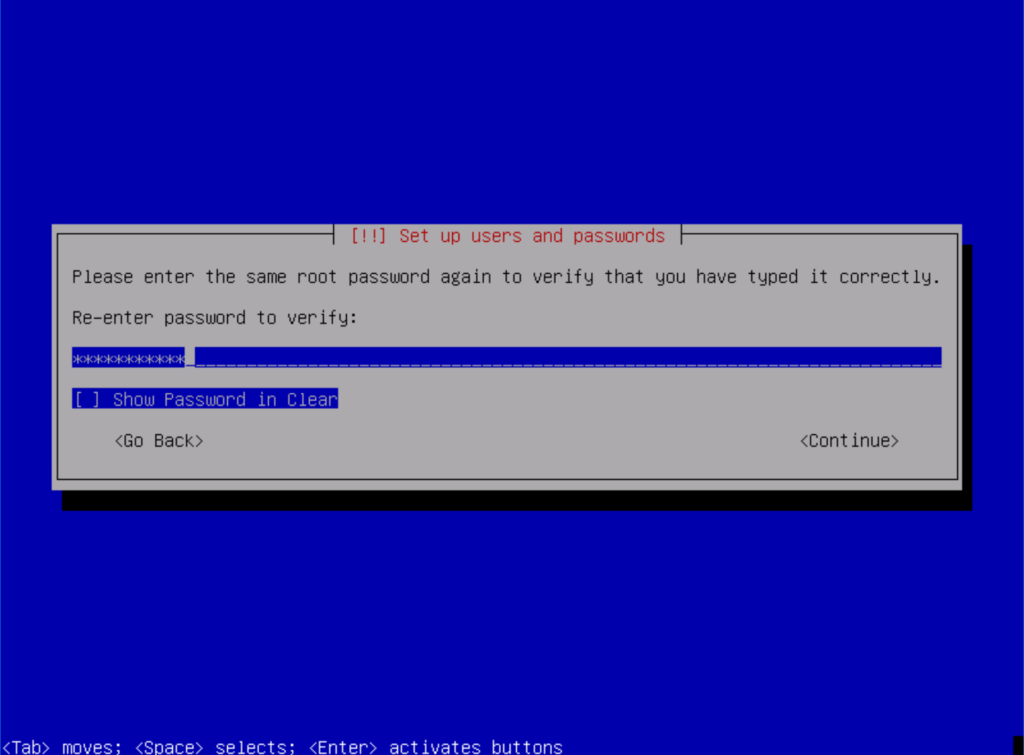

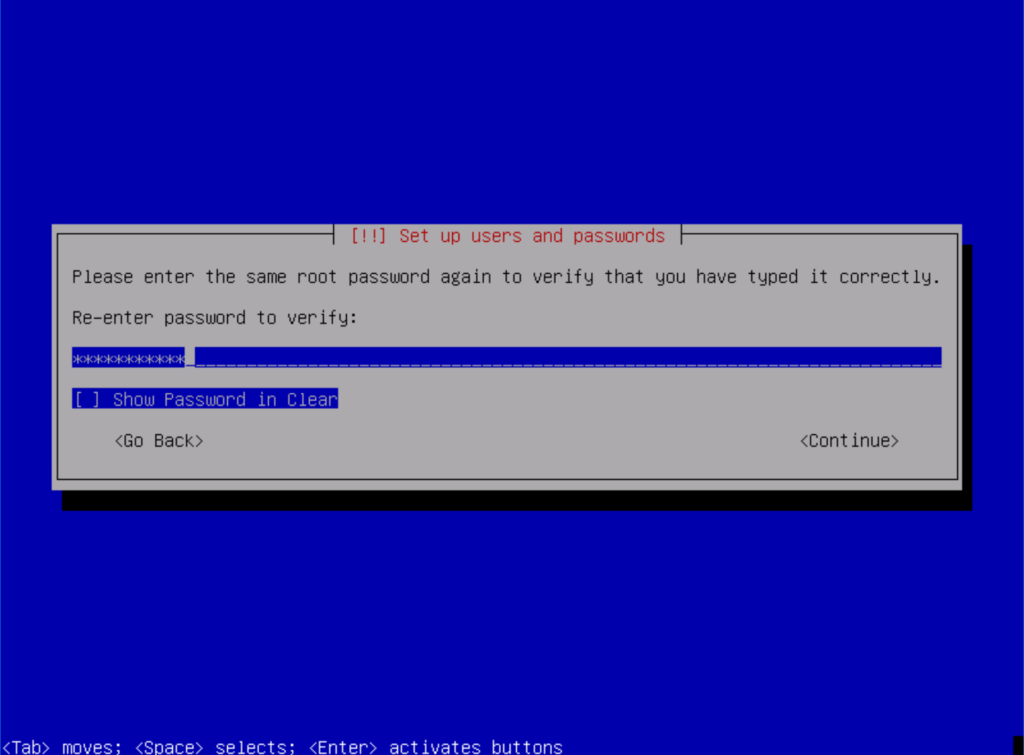

Re-enter your password for the root account:

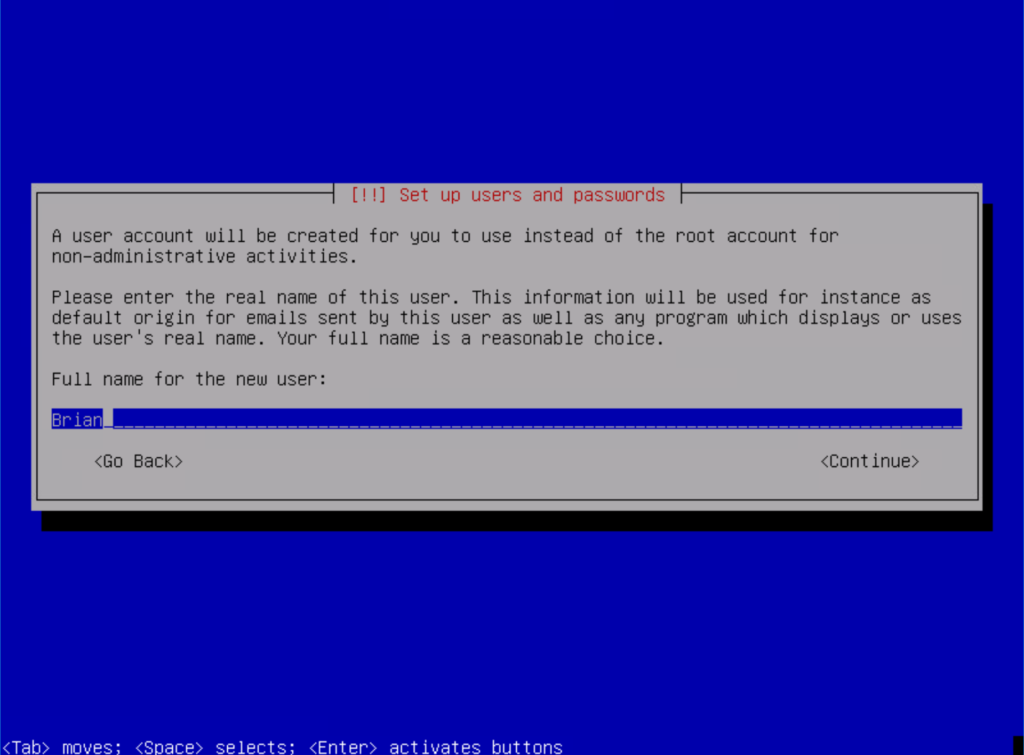

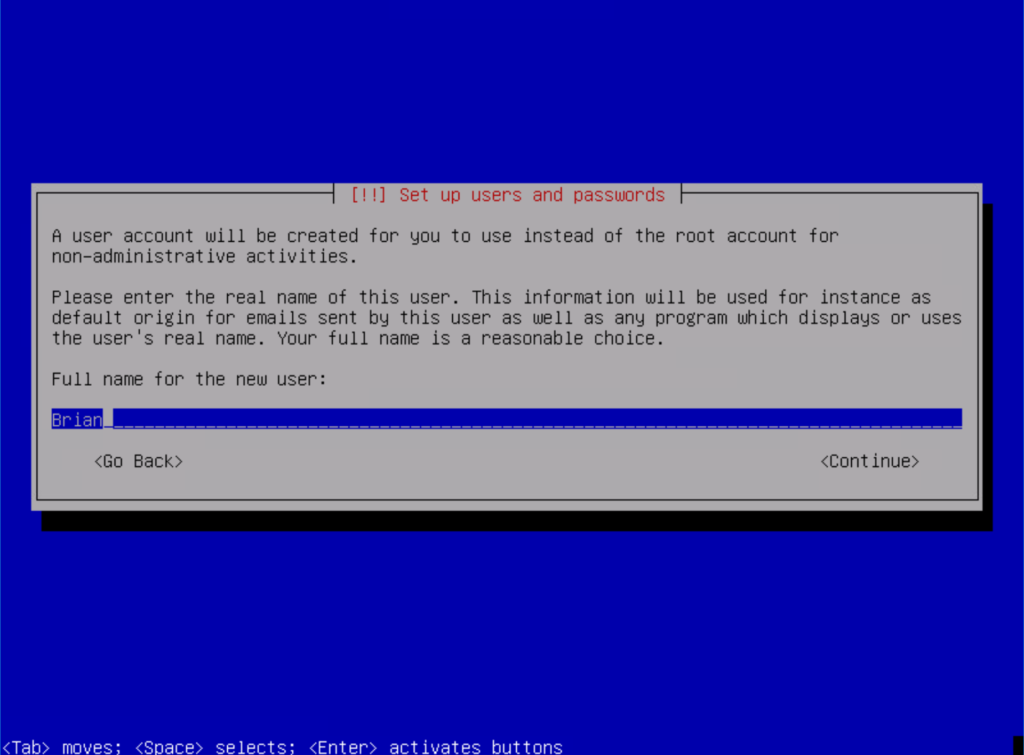

Enter the full name of your non-administrative user:

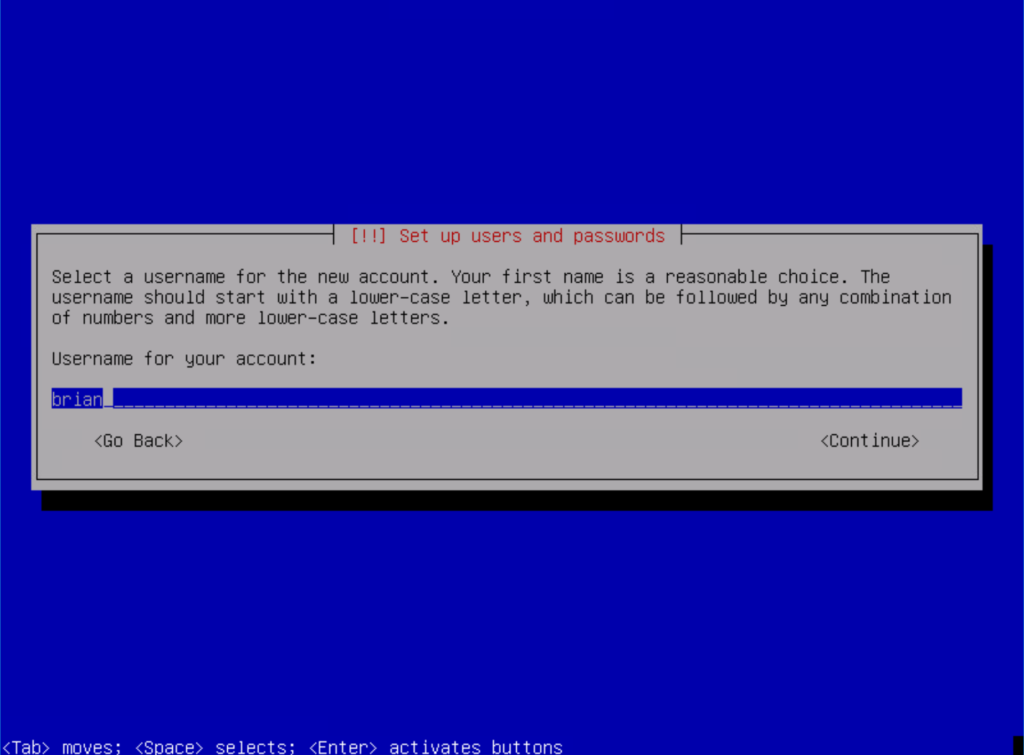

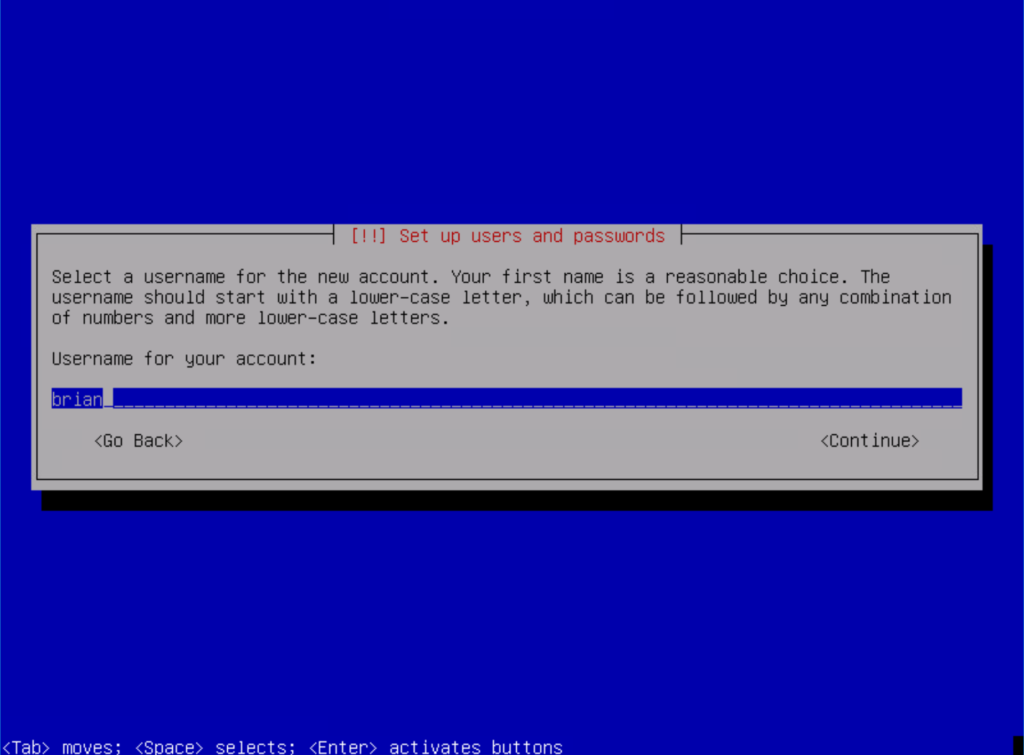

Enter the username of your non-administrative user:

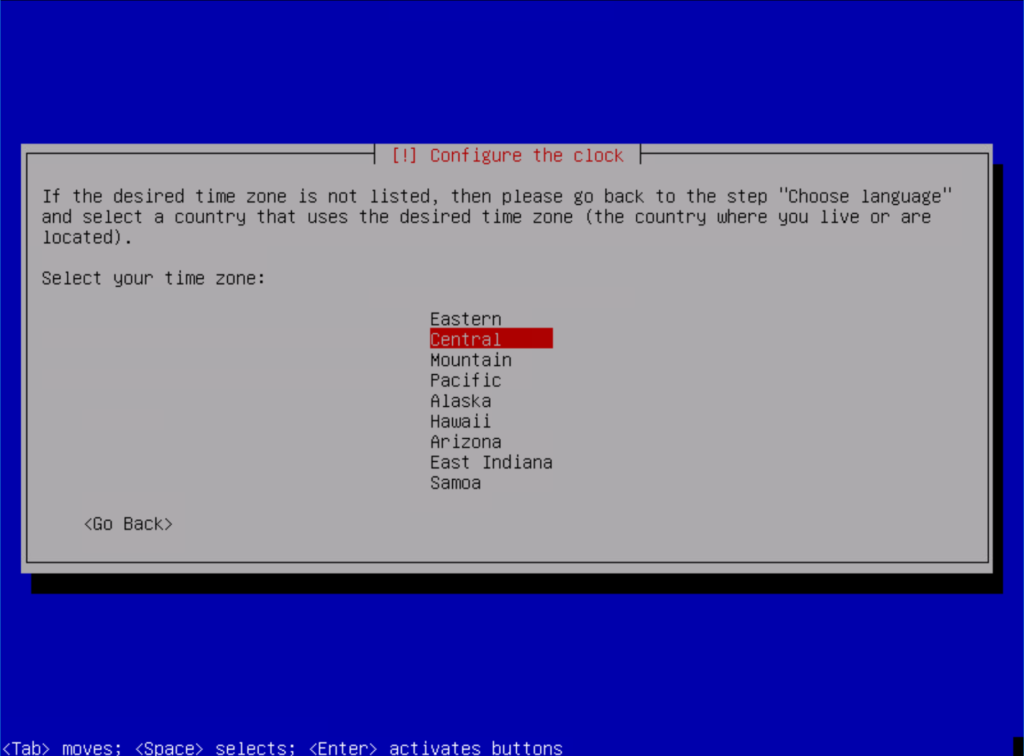

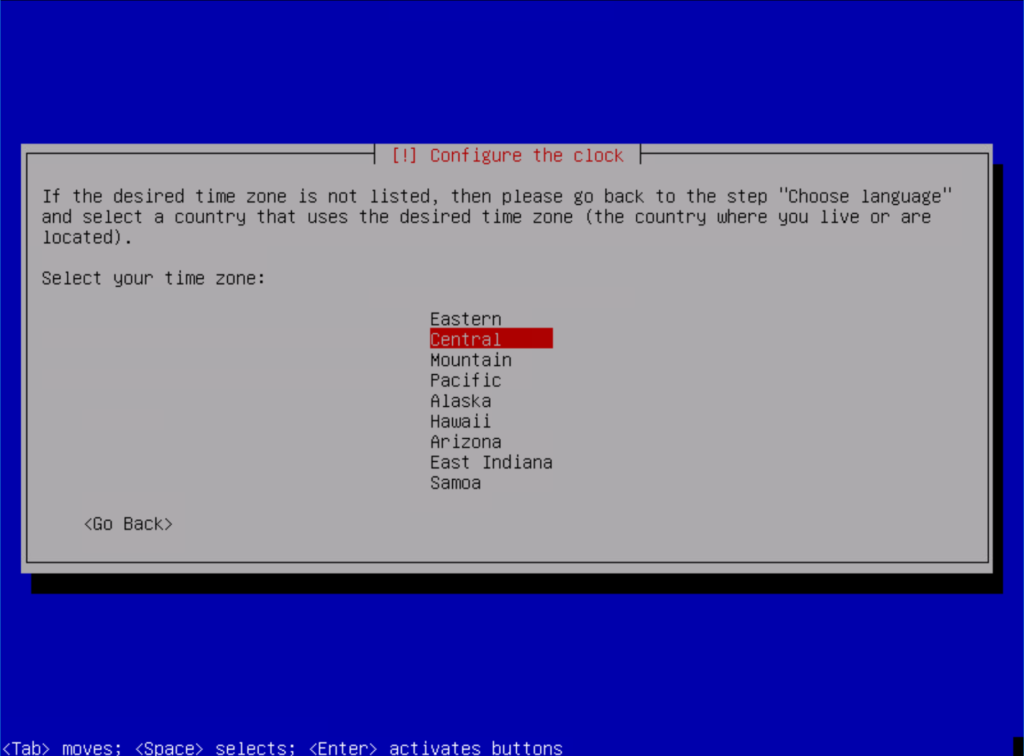

Select your time zone:

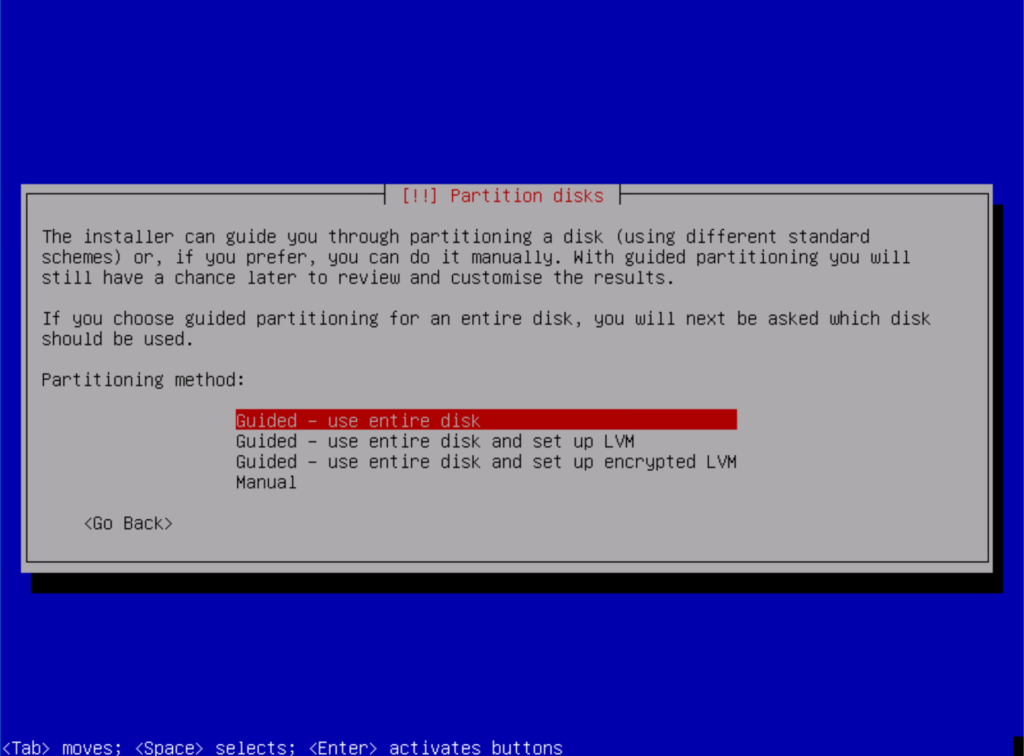

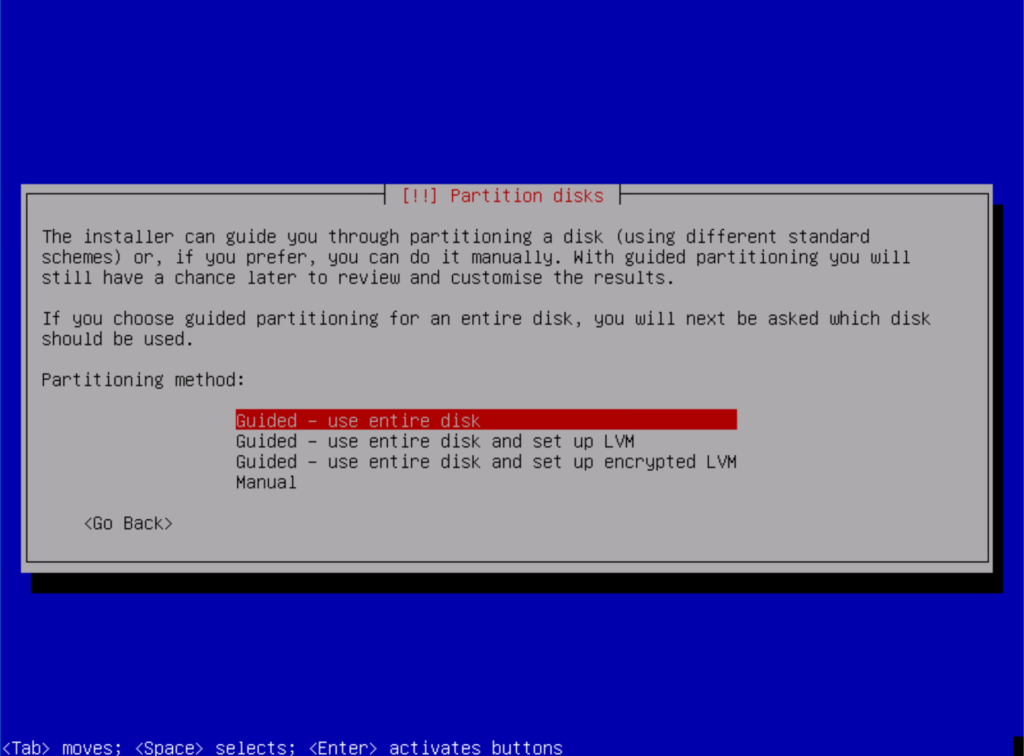

Use guided partitioning with the entire disk (this is up to you; this is just the setting that I used):

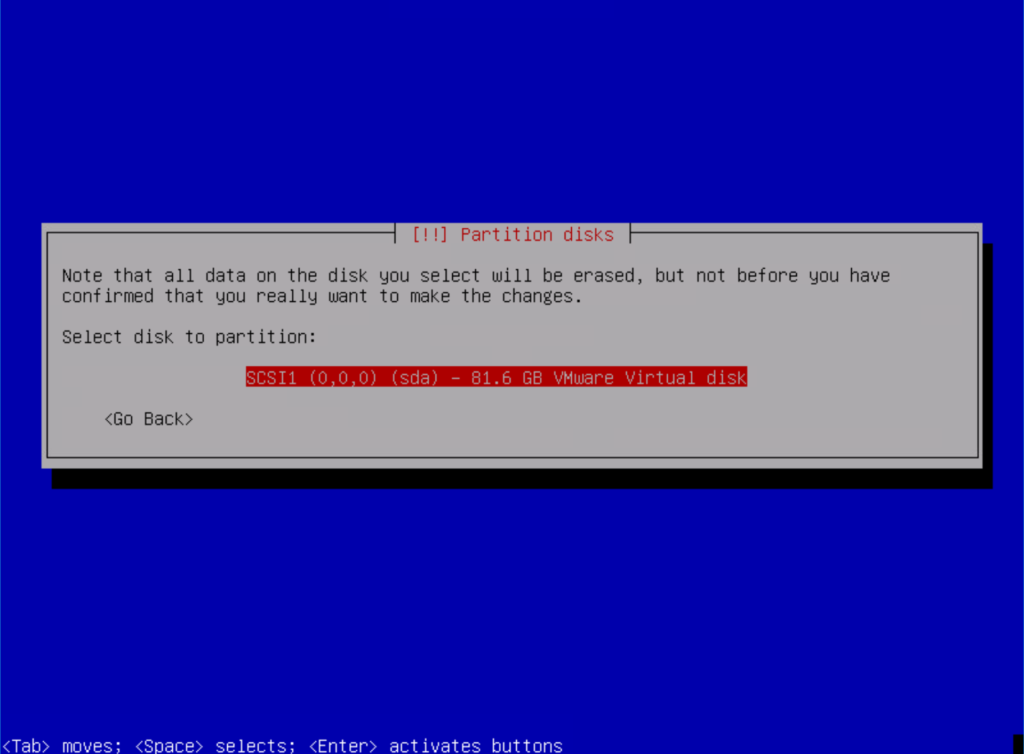

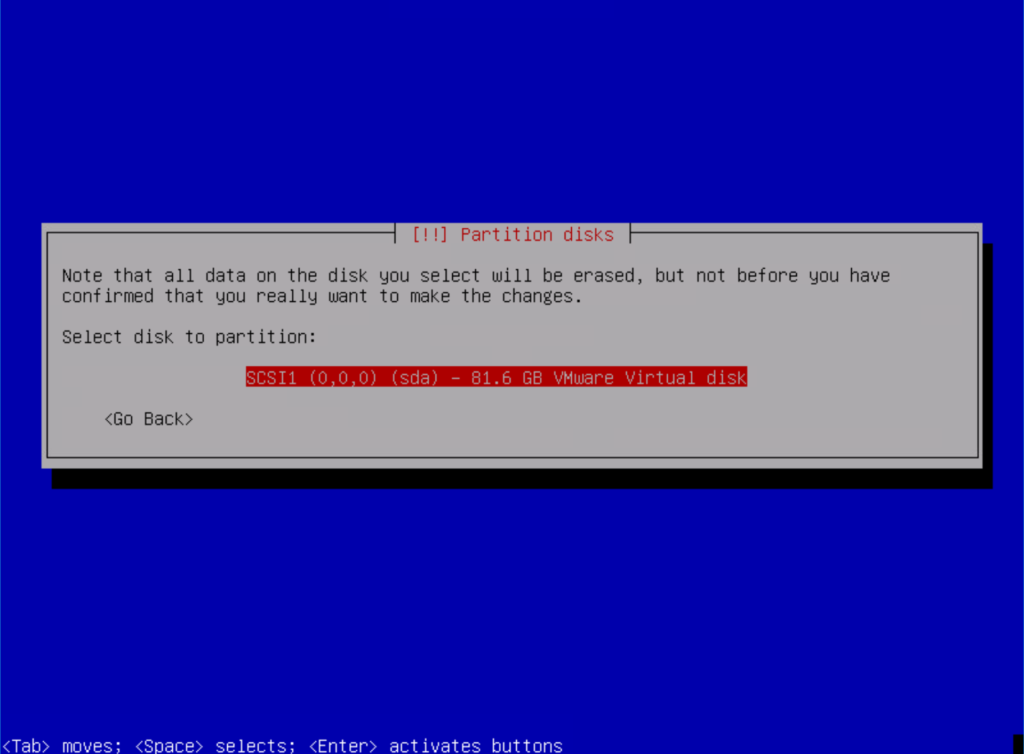

Select the disk to partition (I only have one on the VM that I created):

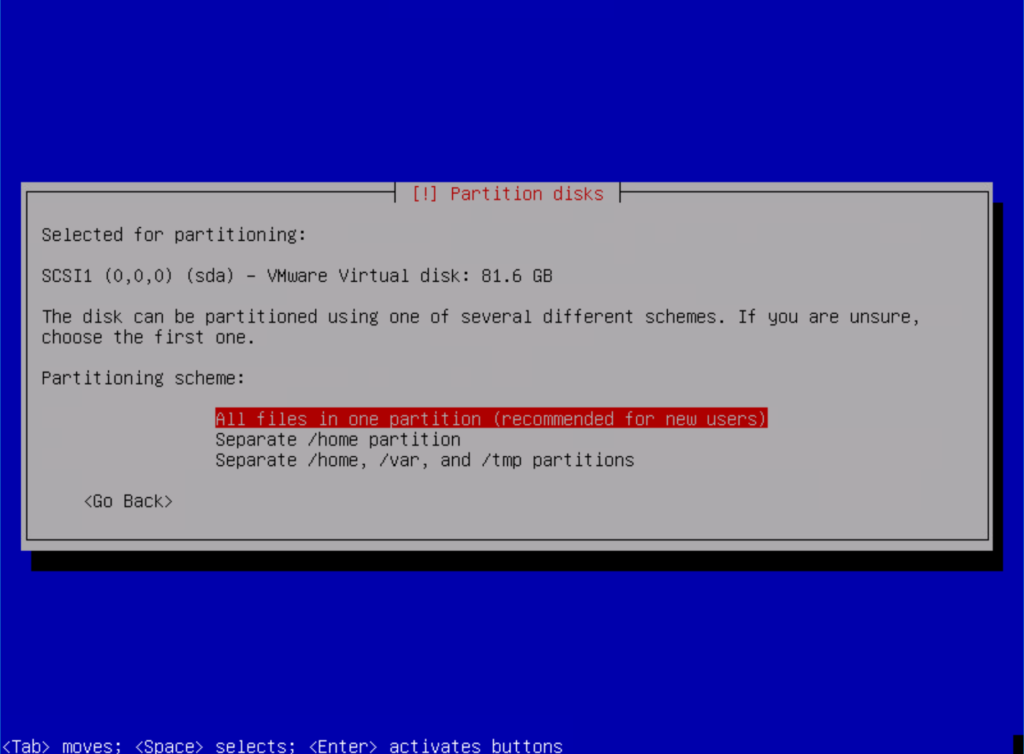

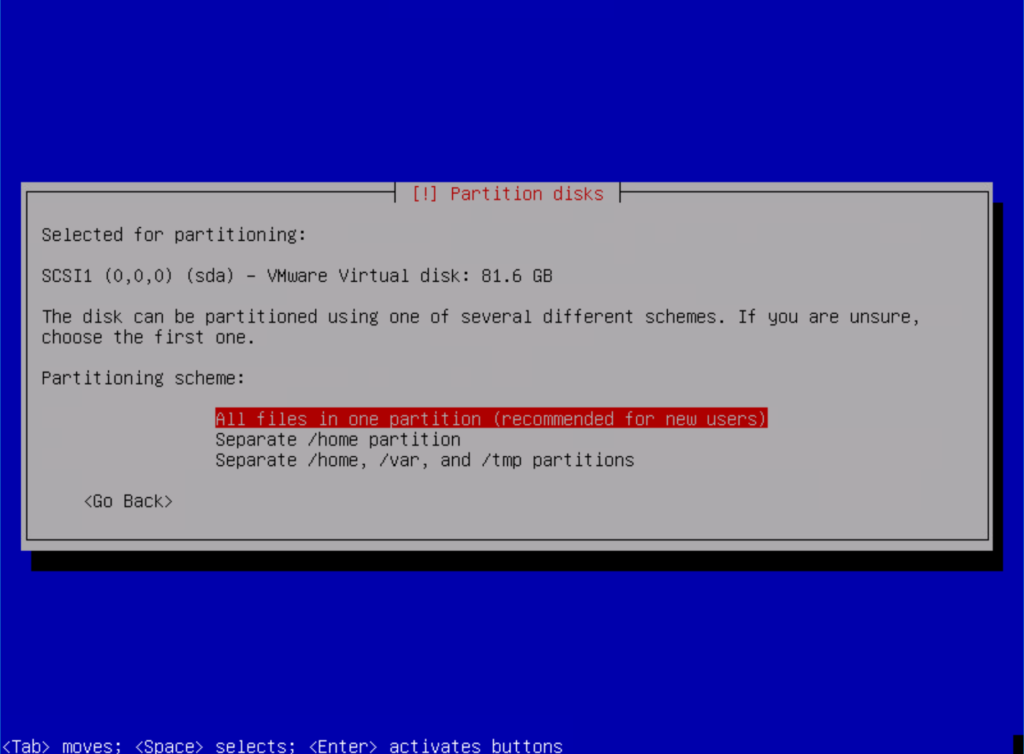

Select a partitioning scheme (I went the new user route):

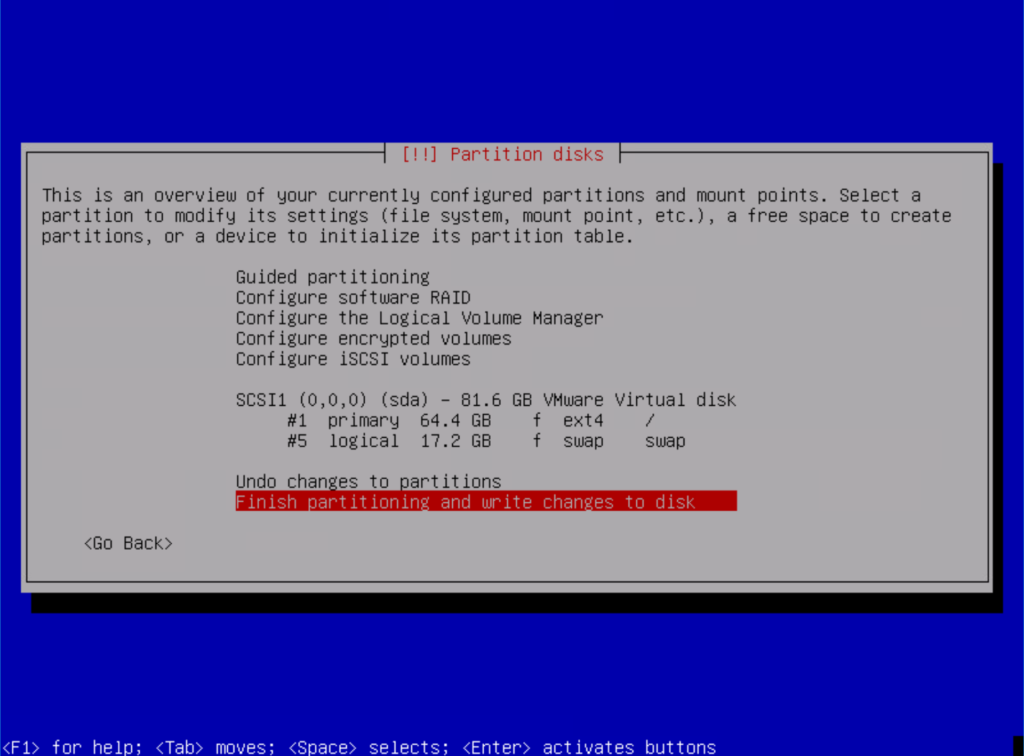

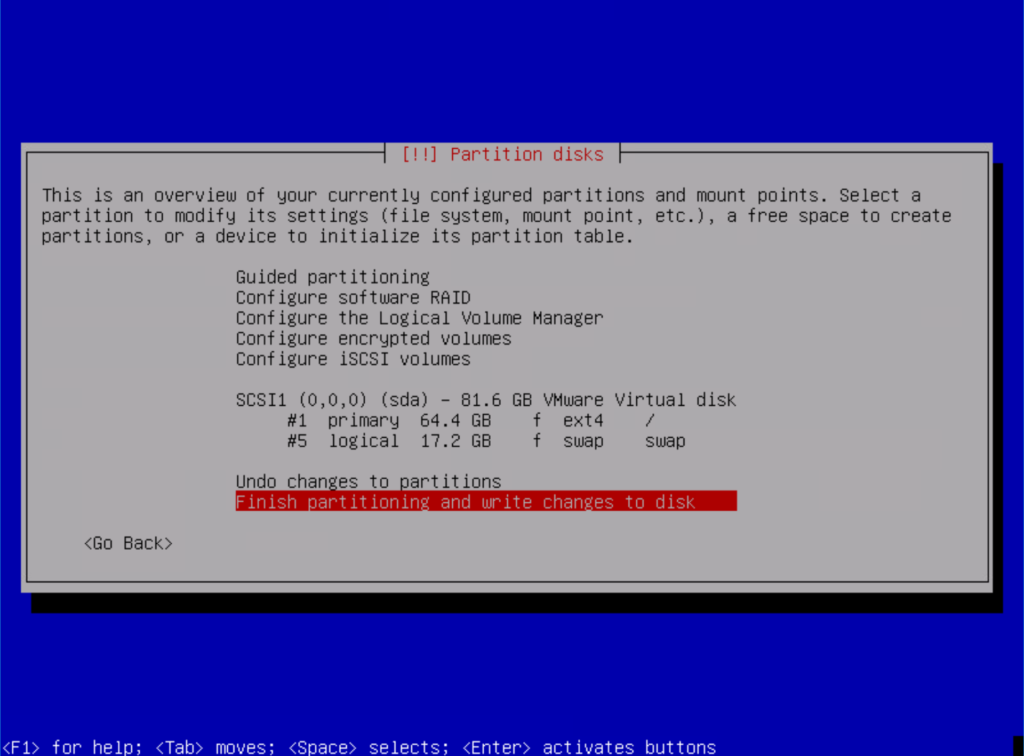

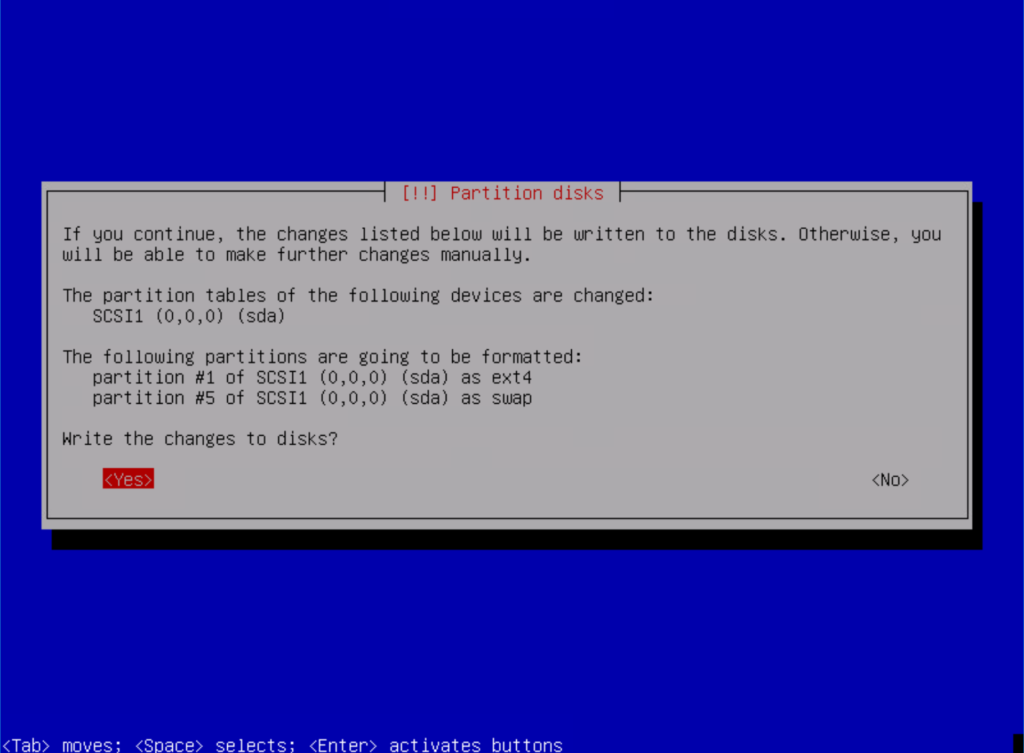

Write your changes to the disk(s):

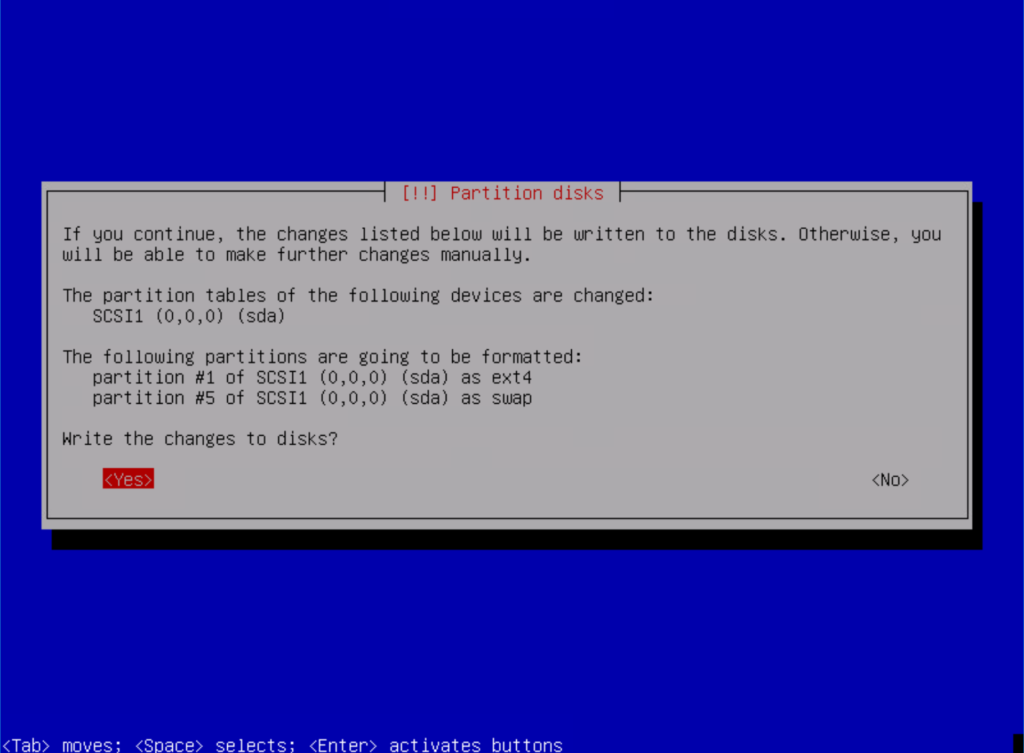

Confirm that you REALLY want to make those changes:

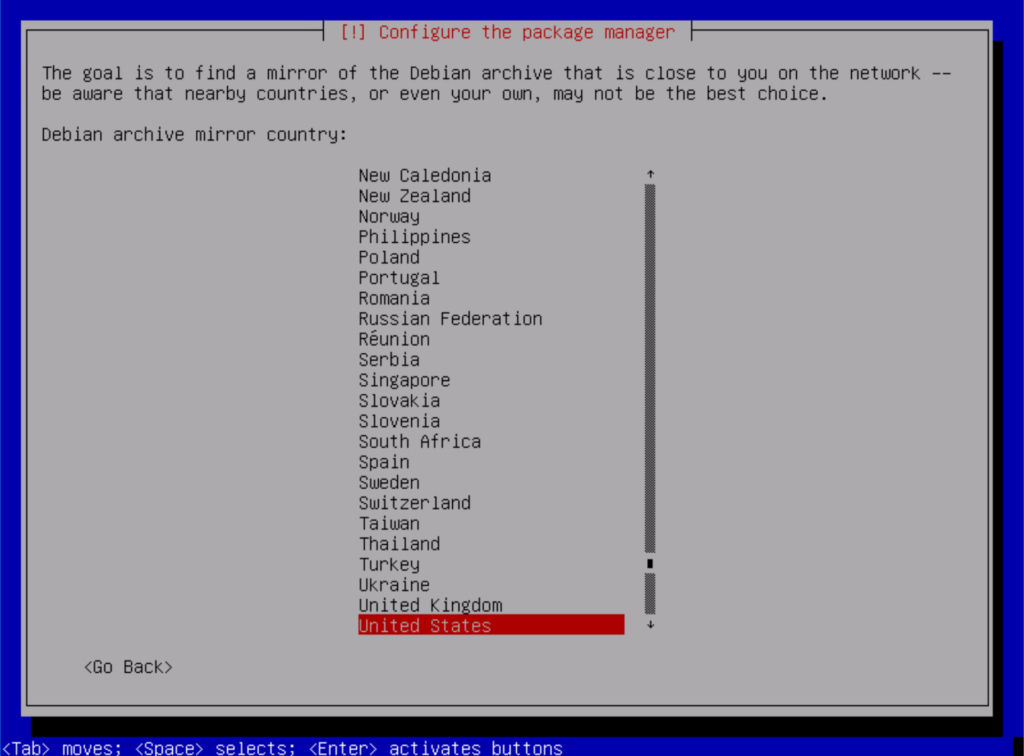

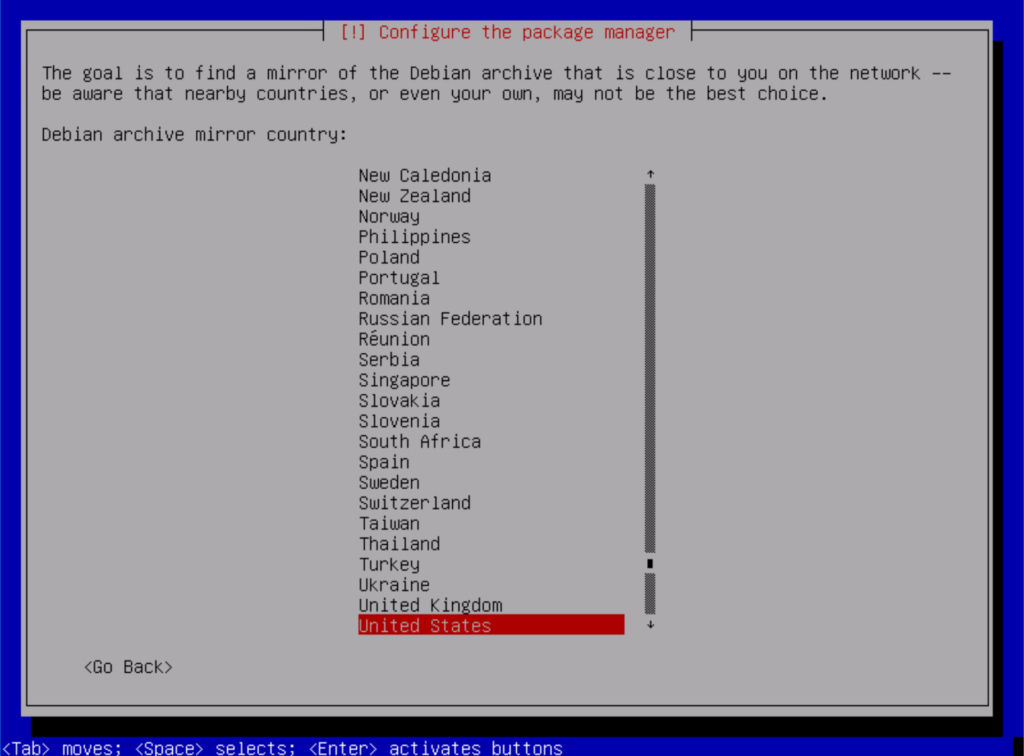

Select the country you would like to use for your package manager mirror:

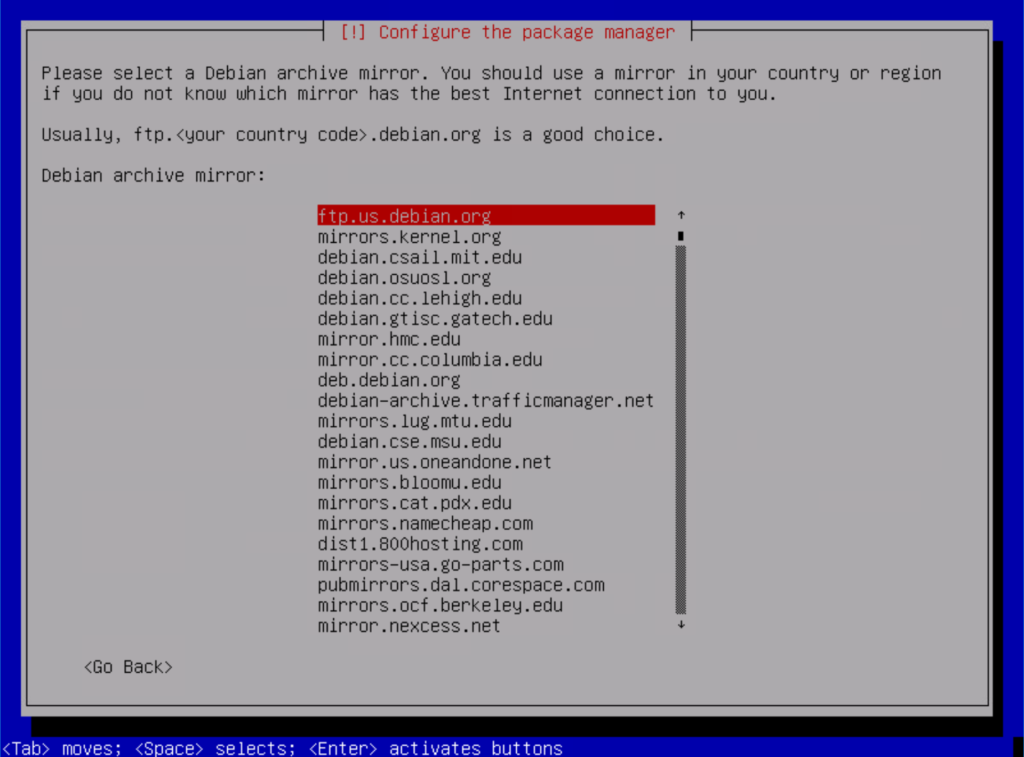

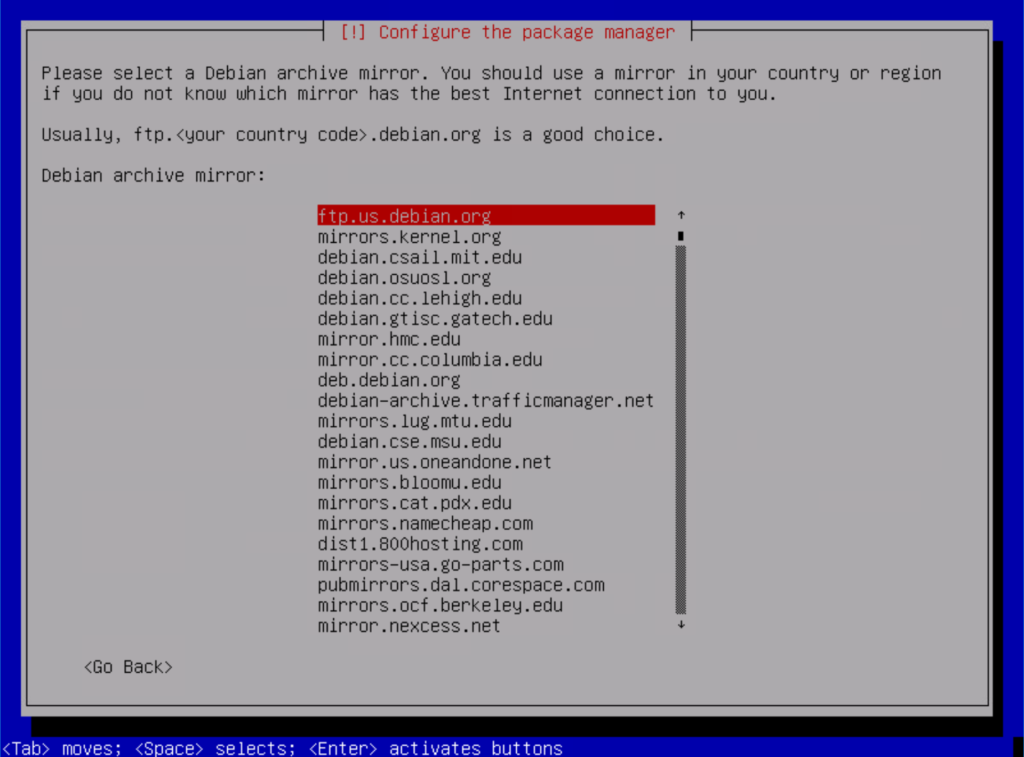

Select your favorite mirror:

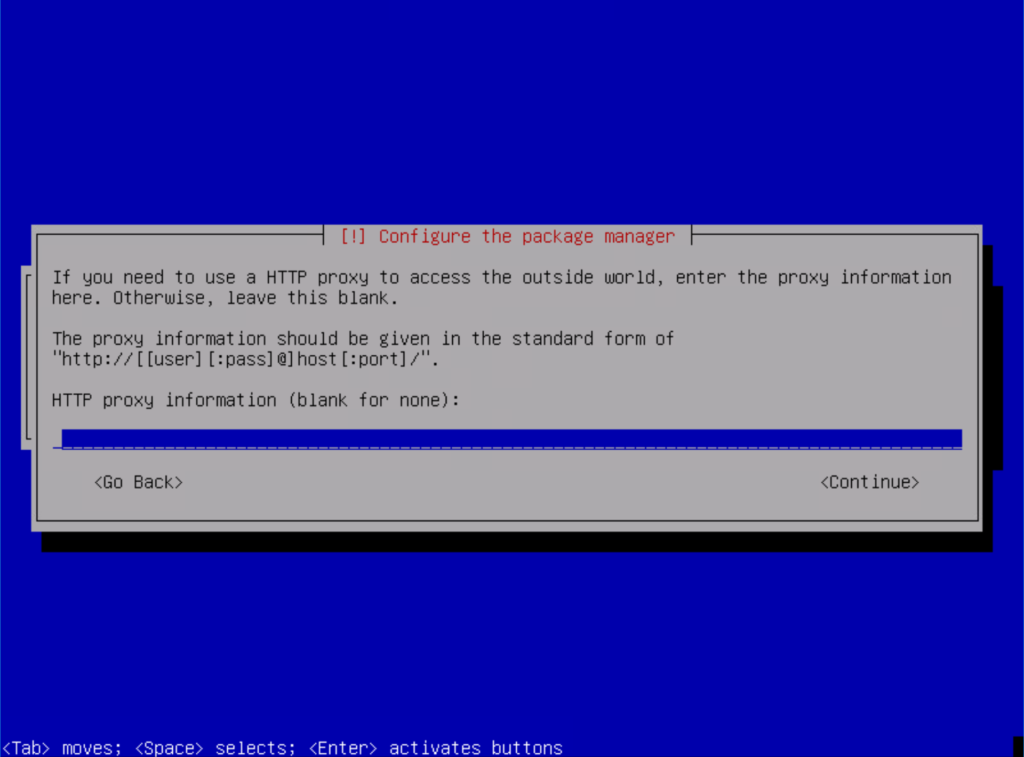

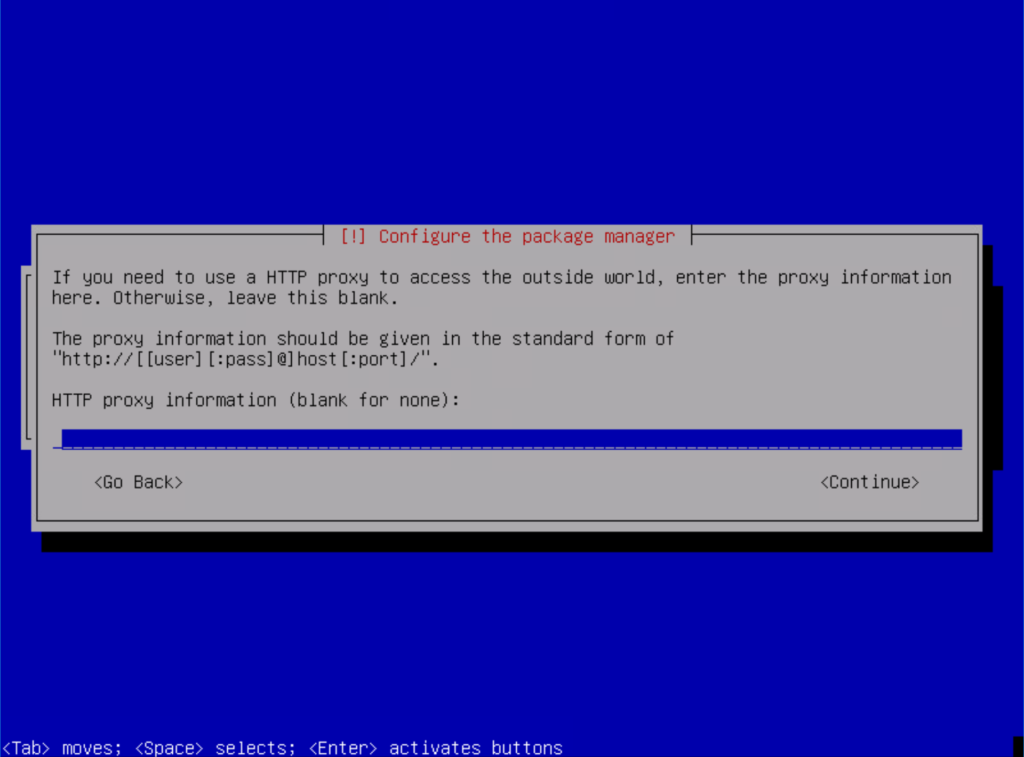

Enter a proxy if you use one in your homelab:

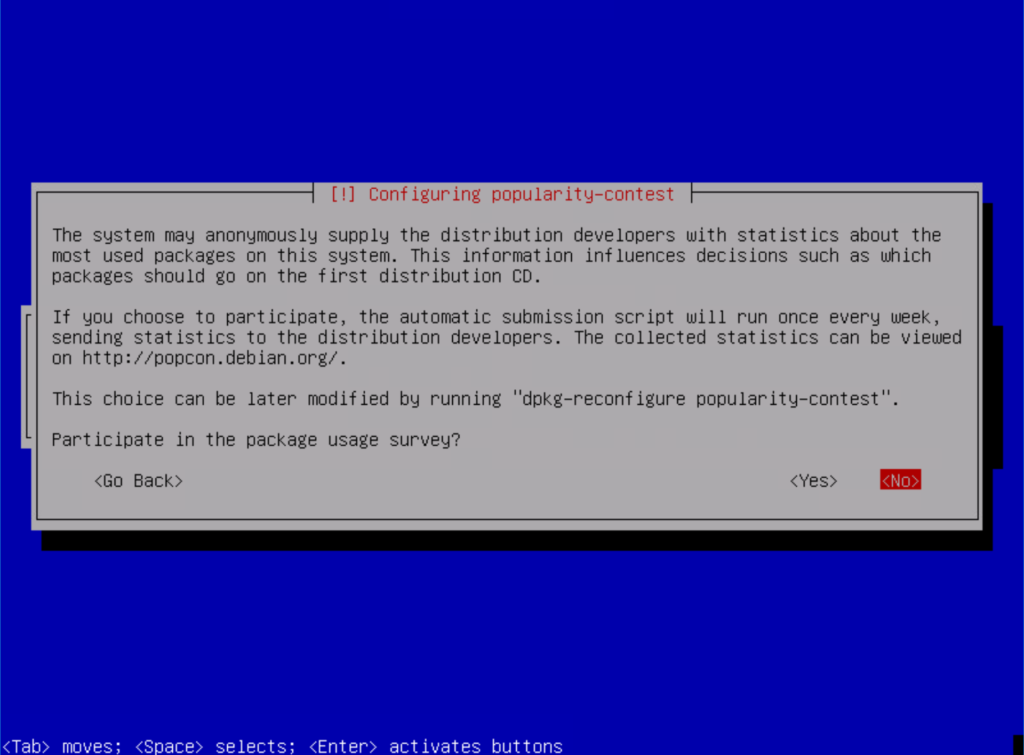

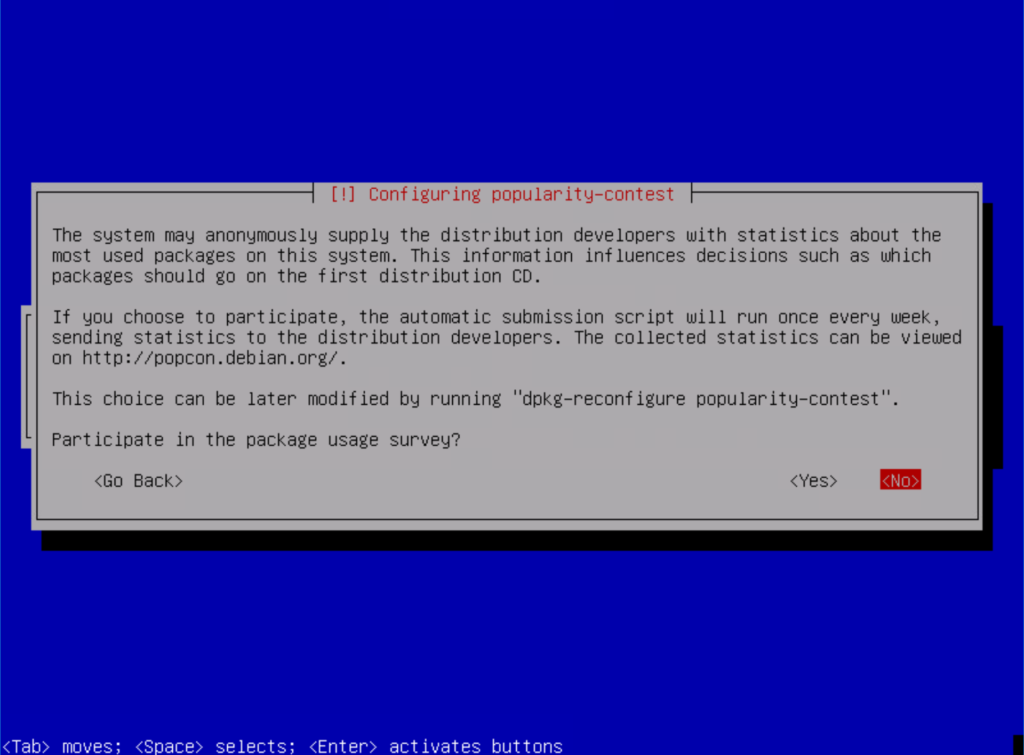

Opt in…or out of the survey:

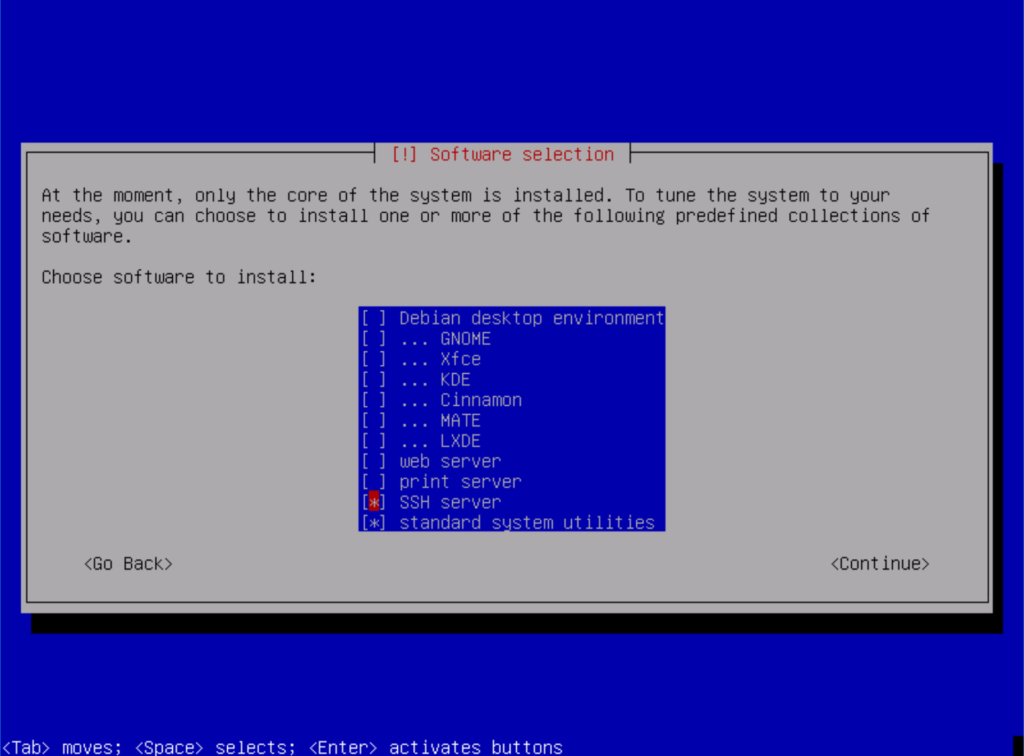

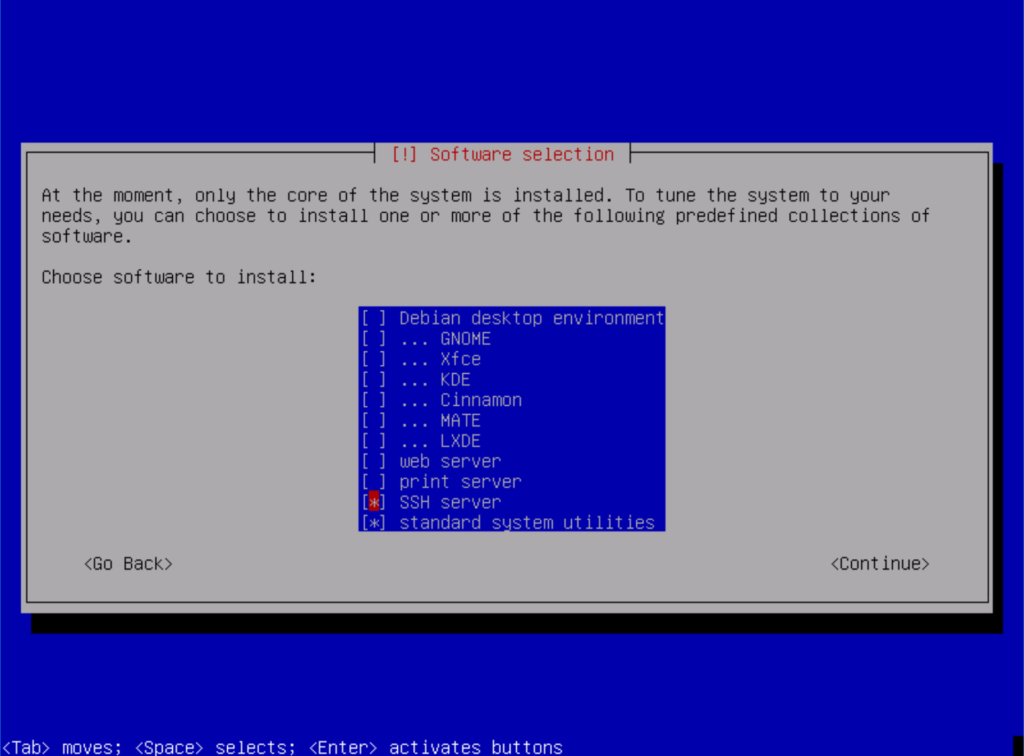

Select SSH and standard system utilities (this will give us a very basic install):

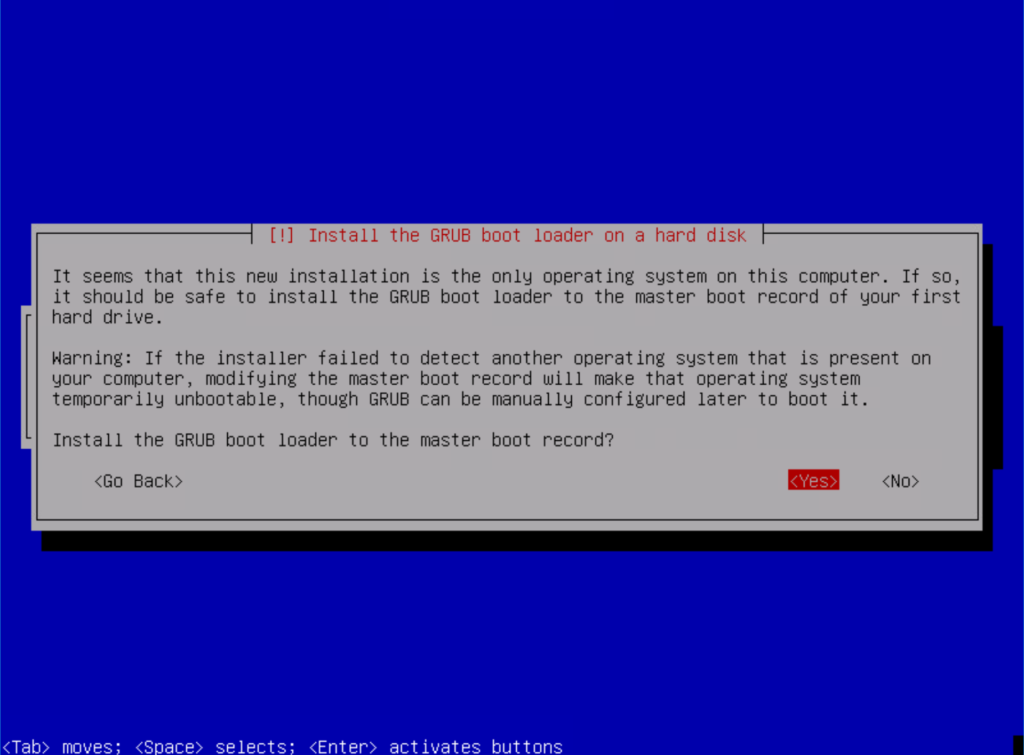

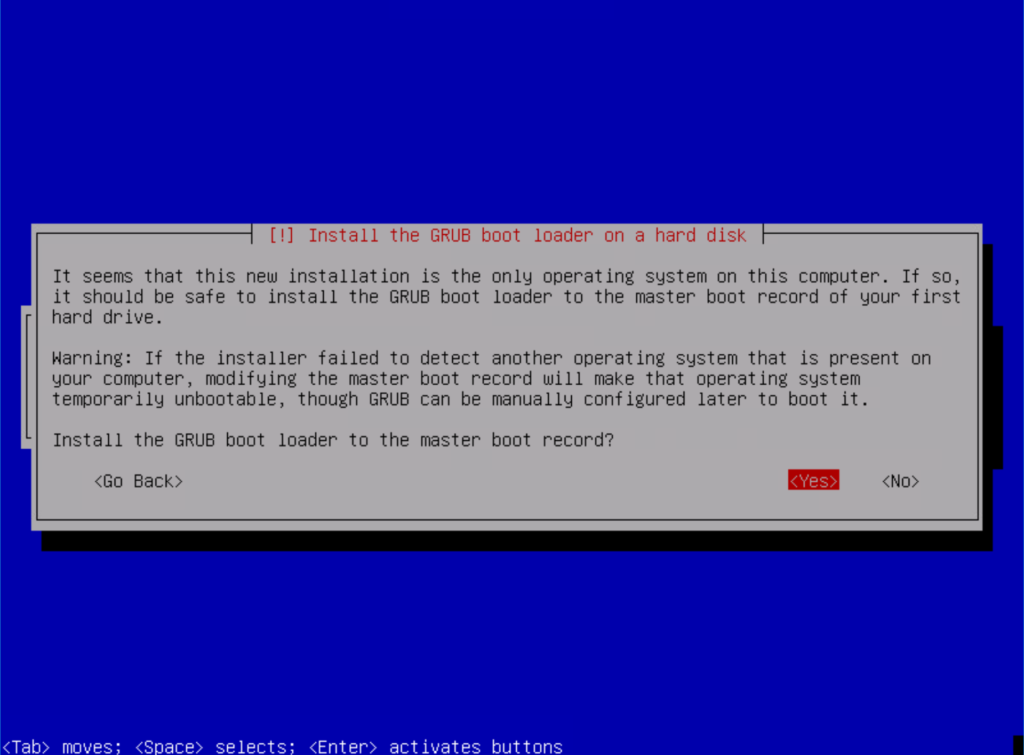

Next, select yes to install GRUB:

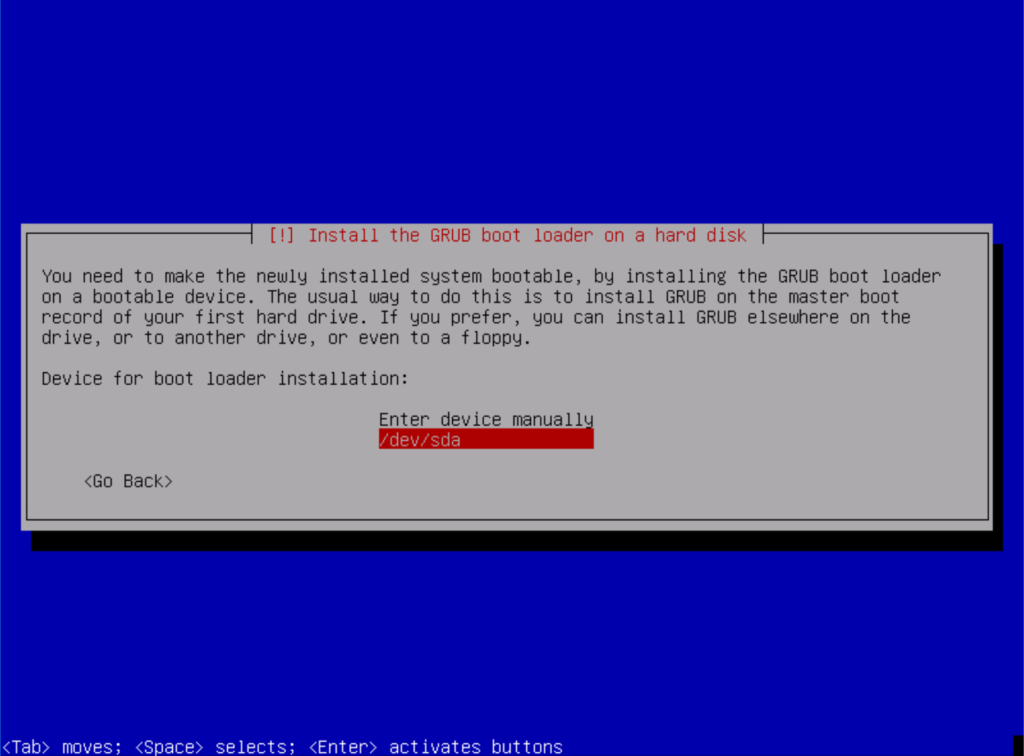

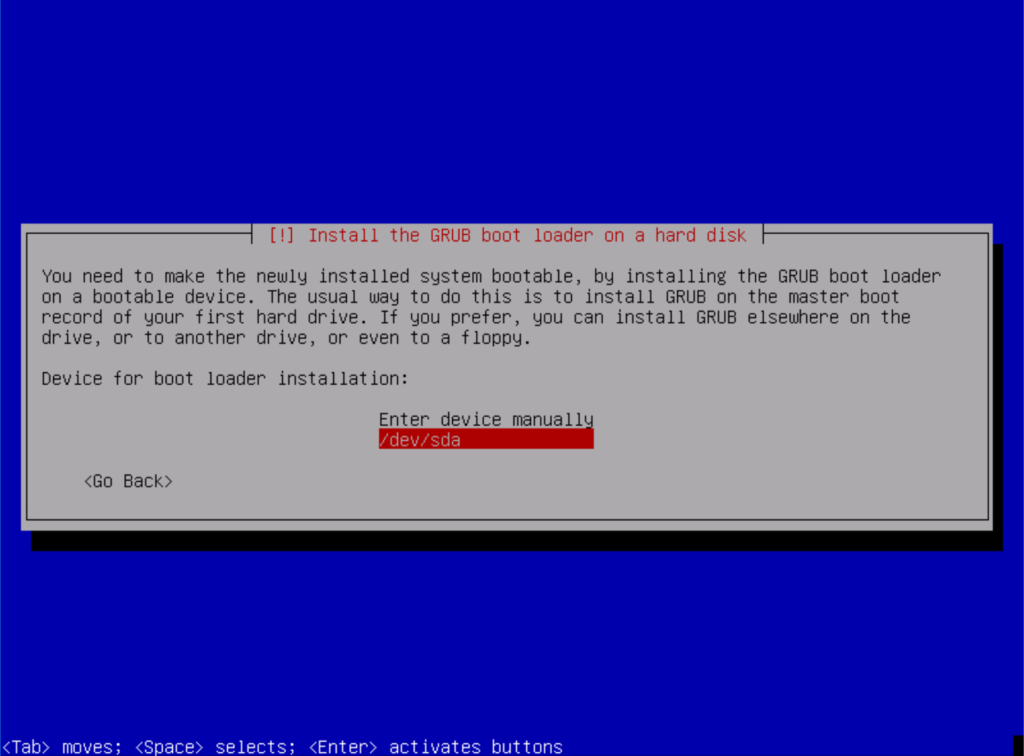

Select the device on which to install GRUB:

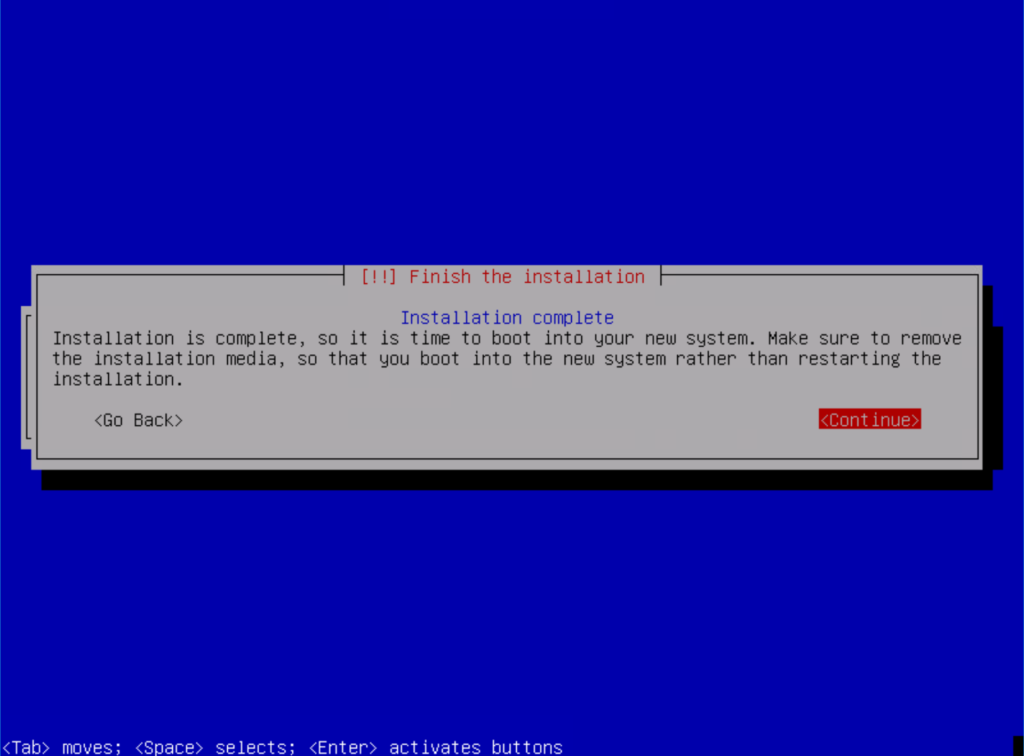

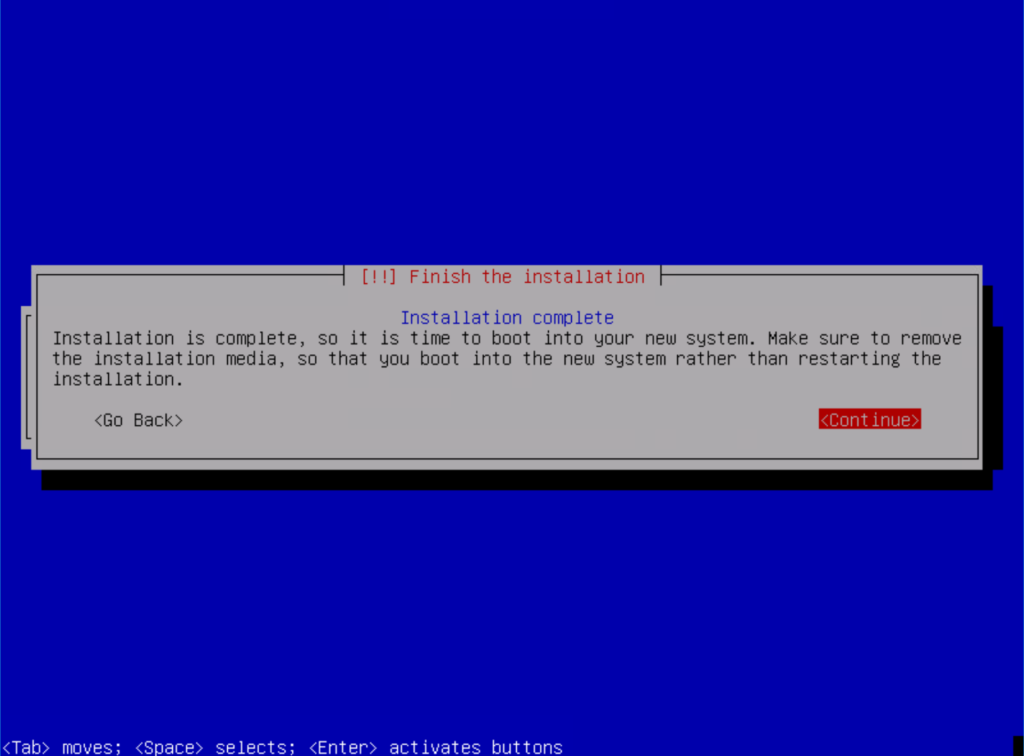

Let it go (my kids are really into Disney, so this will be stuck in my head for the remainder of this post):

You have officially installed Debian Linux. Throw some type of small celebration if this is a big deal for you…otherwise proceed to configuration.

Linux Configuration

Before we install Organizr, let’s get our Linux OS configured the way we want it. Basically, we will install sudo, give our user sudo rights, and update our network settings. Once we do that, we’ll be ready to install Organizr.

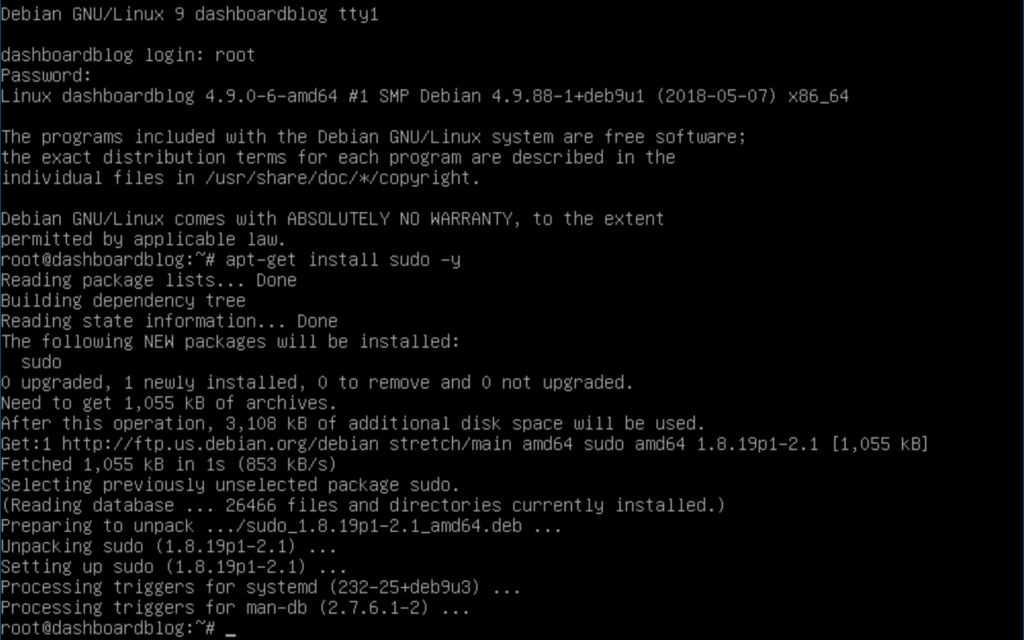

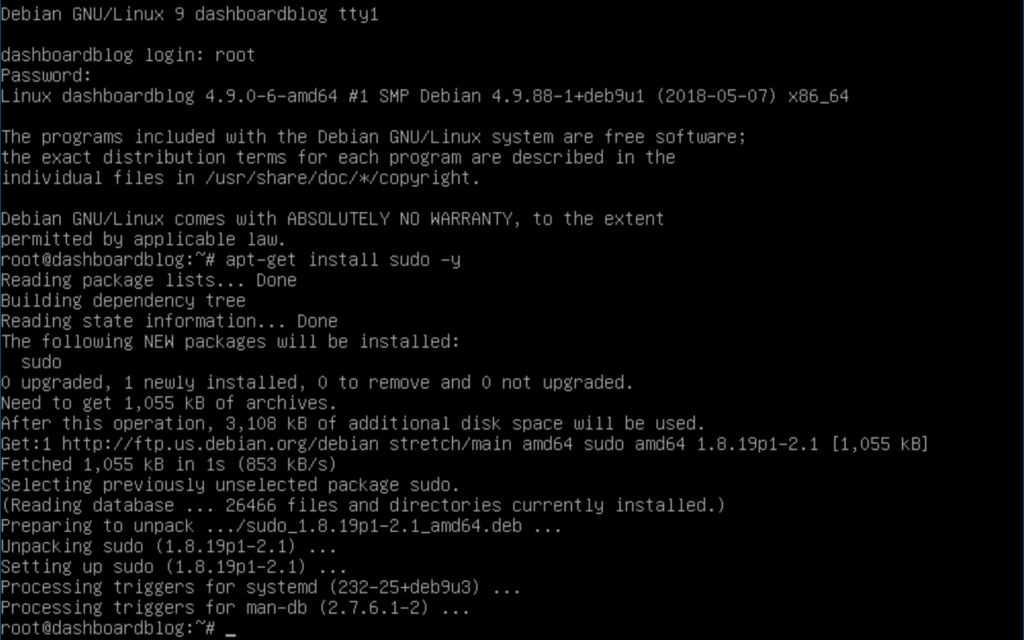

Install sudo

Log into your system as root and issue this command:

apt-get install sudo -y

Which should look something like this:

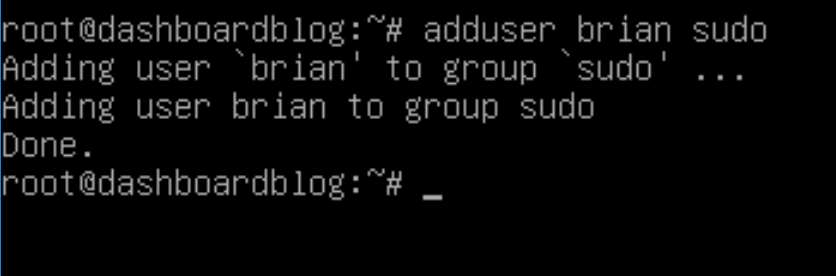

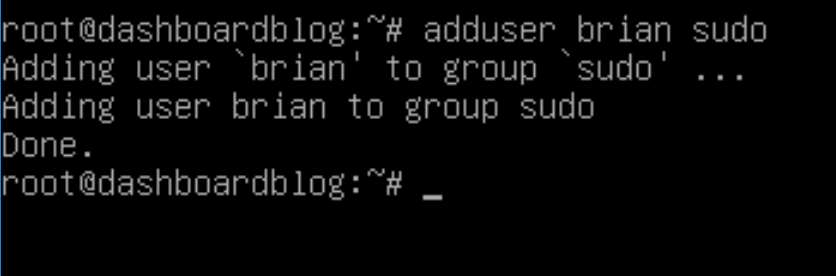

Sudo

Rather than using our root username and password with SSH, we’ll grant the user that we created during our Linux installation access to sudo. Sudo essentially lets that user execute a single command as root. Here’s the command (brian is my username; substitute your own):

adduser brian sudo

This should look like this:

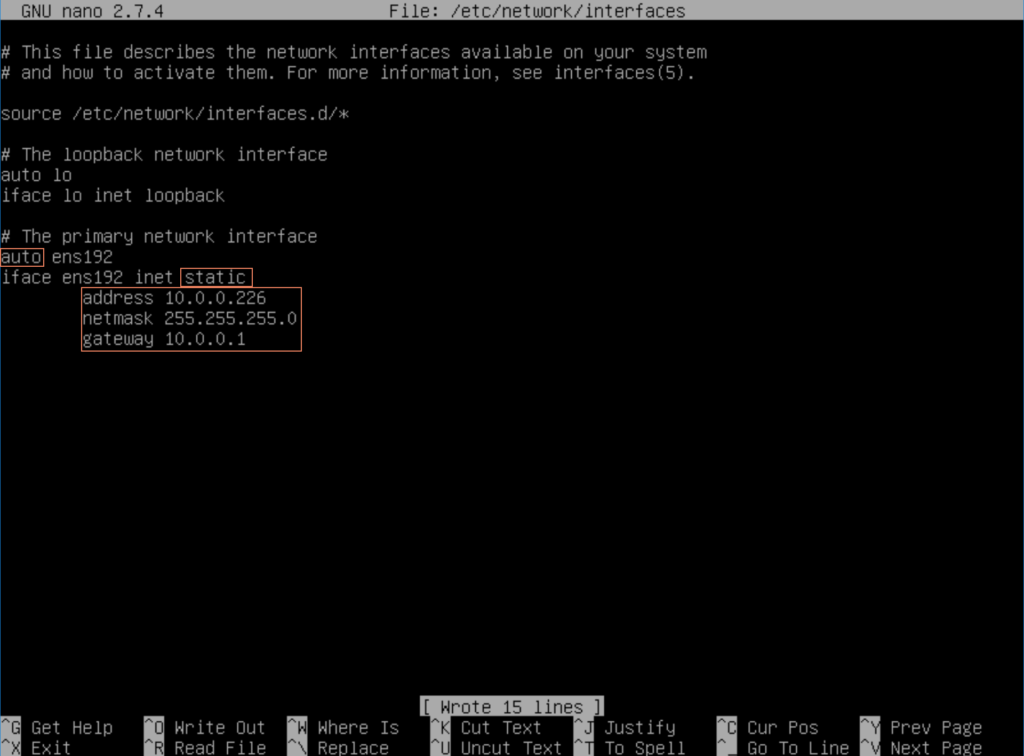

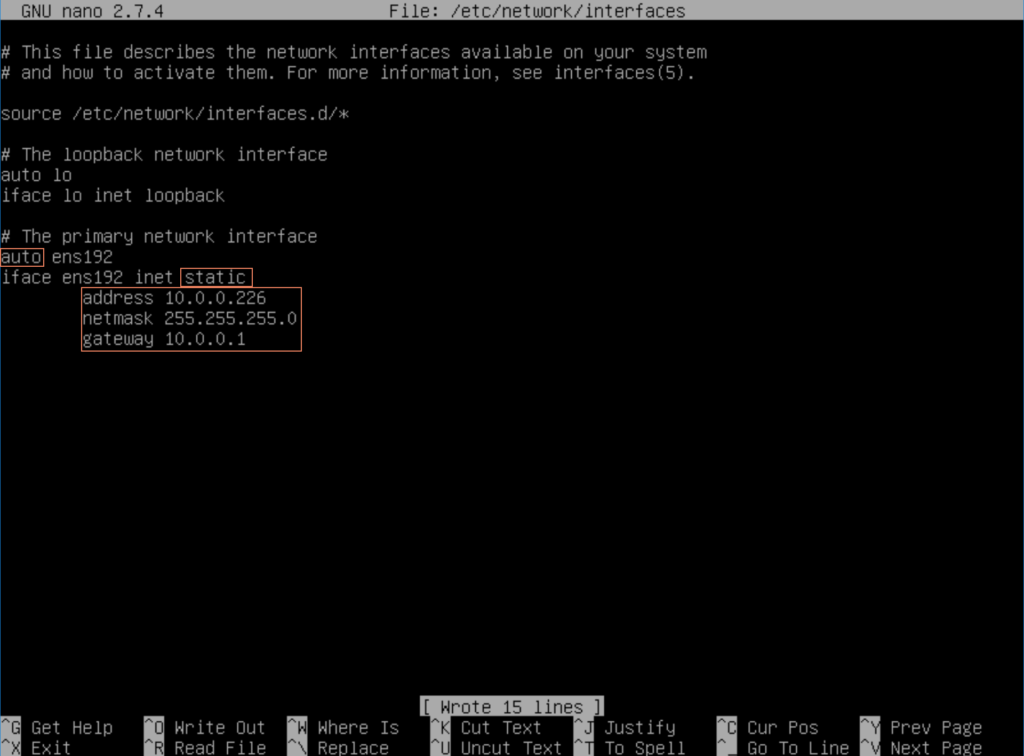
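If you want to double-check that the change took, here is a small sketch. The function name `in_sudo_group` is a hypothetical helper of my own, not part of Debian. Keep in mind that new group membership only applies to new login sessions, so log out and back in (or reconnect over SSH) before trying sudo as that user.

```shell
# Hypothetical helper: succeed if the given user is a member of the sudo group.
in_sudo_group() {
  # id -nG prints the user's group names separated by spaces;
  # split them one per line and look for an exact "sudo" match.
  id -nG "$1" 2>/dev/null | tr ' ' '\n' | grep -qx sudo
}

# Example (replace brian with the user you created during the install):
# in_sudo_group brian && echo "brian can use sudo"
```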

Update Network Configuration

Now we are ready to change our network settings. By default, everything is set to use DHCP. In my lab, everything has a static IP, so this system will be no different. Issue this command:

nano /etc/network/interfaces

Change the adapter (ens192 in my case) to auto, change it from dhcp to static, and enter your network information (address, netmask, and gateway):
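As a rough guide to what the edited stanza should contain, here is a minimal sketch that prints a static stanza in Debian’s ifupdown format. The helper name `static_stanza` and the addresses in the example are placeholders of my own; use your adapter name and your lab’s addressing. The loopback lines (`auto lo` / `iface lo inet loopback`) stay as they are, and the installer usually writes `allow-hotplug` for the adapter, which is the line being changed to `auto`.

```shell
# Hypothetical helper: print a static-IP stanza for /etc/network/interfaces
# (Debian ifupdown format). Review the output, then type or paste it over
# the existing dhcp stanza in nano.
static_stanza() {
  local iface="$1" address="$2" netmask="$3" gateway="$4"
  cat <<EOF
auto ${iface}
iface ${iface} inet static
    address ${address}
    netmask ${netmask}
    gateway ${gateway}
EOF
}

# Example with placeholder values; substitute your own adapter and addresses.
static_stanza ens192 192.168.1.50 255.255.255.0 192.168.1.1
```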

Hit control-o to save the changes and control-x to exit nano.

Now let’s restart our network services by issuing this command:

service networking restart

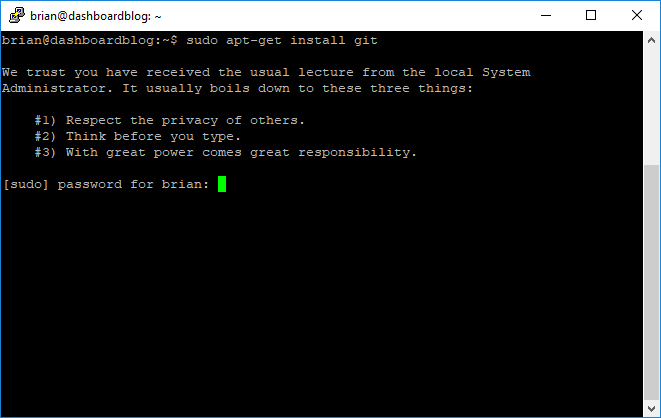

Organizr Prerequisites

Organizr has very few prerequisites, but let’s get everything ready. I’m switching from the console over to PuTTY now. If you aren’t familiar with SSH, PuTTY is the easiest way to connect to your server. You can download it here:

https://www.chiark.greenend.org.uk/~sgtatham/putty/latest.html

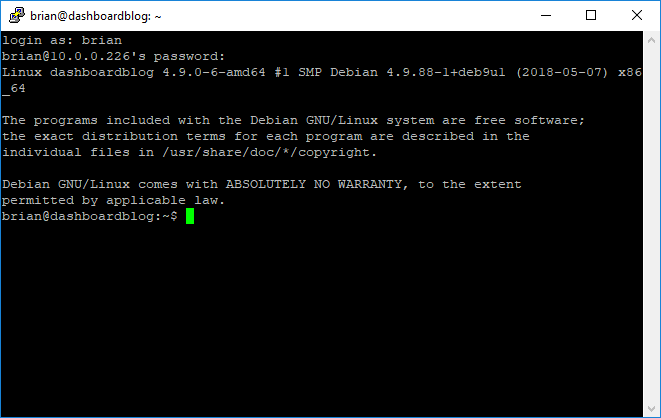

Once you have PuTTY installed, you can connect to your new Linux box using our user account:

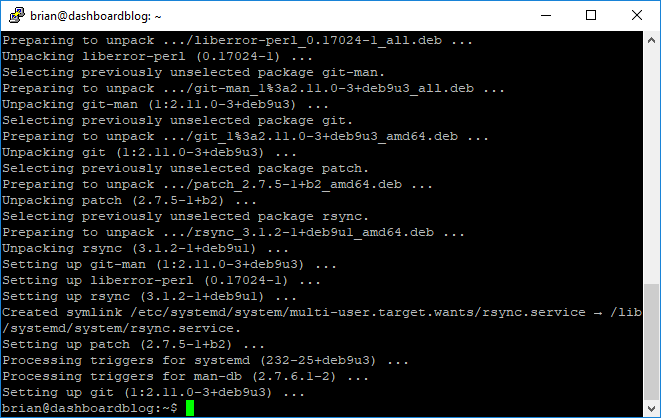

Now that we are connected, we can install git:

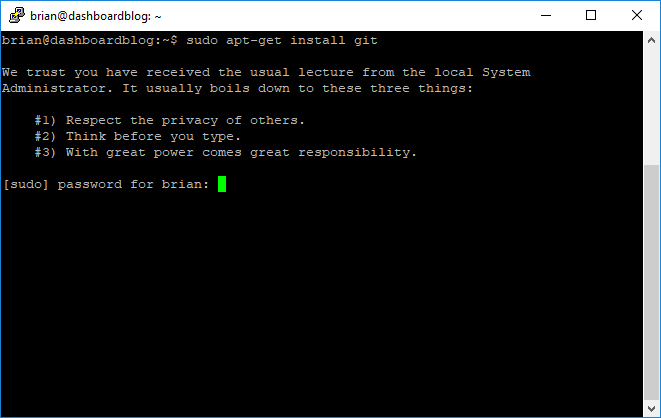

sudo apt-get install git

Notice that we are prompted for our password:

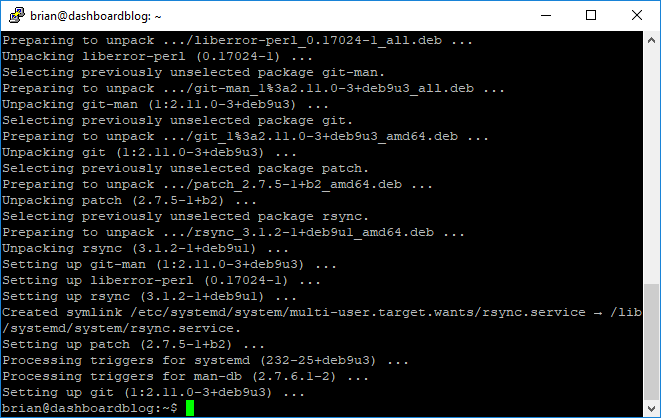

Now we should see this:

Installing Organizr

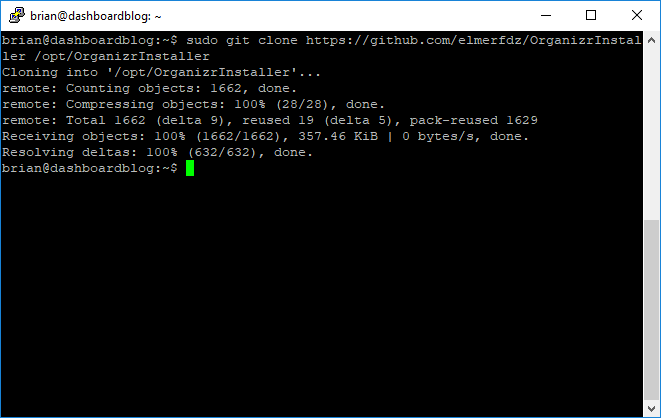

We can now finally begin the installation of Organizr. We’ll start by cloning the git repository:

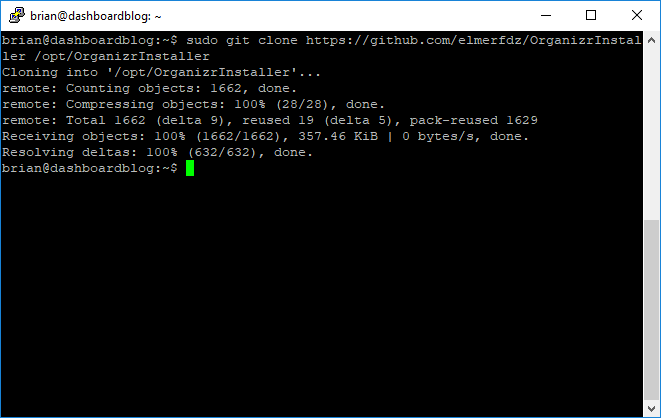

sudo git clone https://github.com/elmerfdz/OrganizrInstaller /opt/OrganizrInstaller

Now we will go into the proper directory and start the installer:

cd /opt/OrganizrInstaller/ubuntu/oui

sudo bash ou_installer.sh

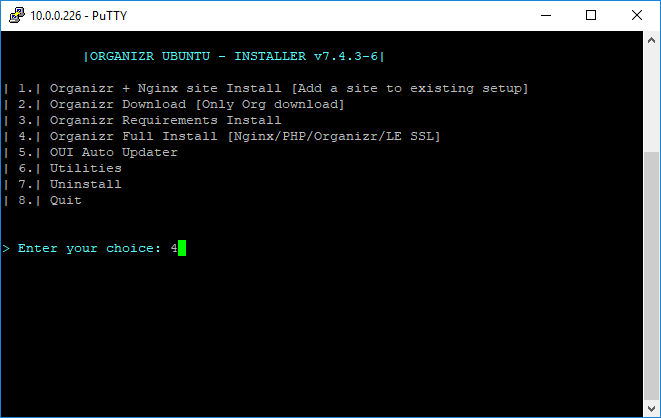

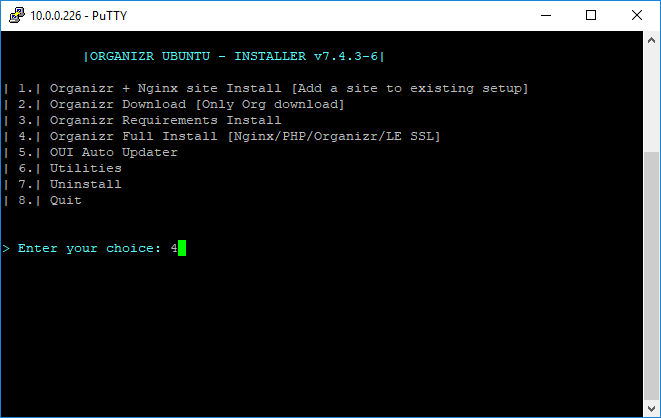

The installer will start up and we can select option 4, which will install everything:

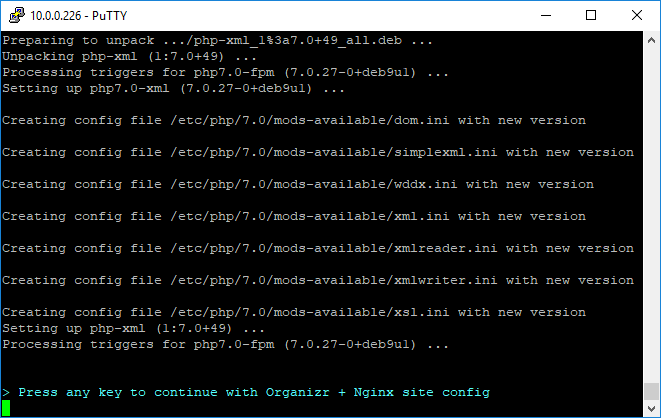

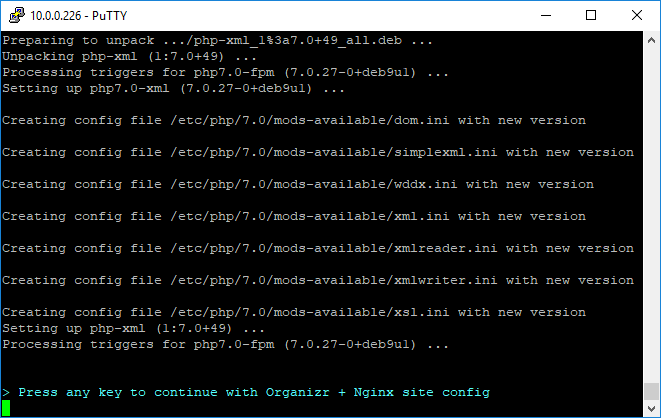

The installer will now download everything and prompt you to continue:

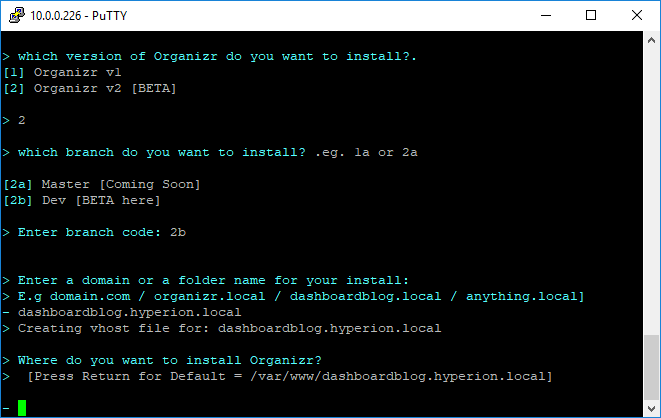

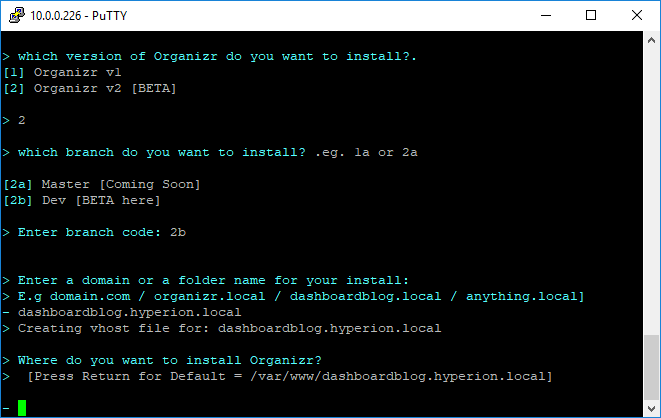

Now we can select our version of Organizr (2 for the beta), which branch to install (2b, since this is a beta), and a domain name and directory:

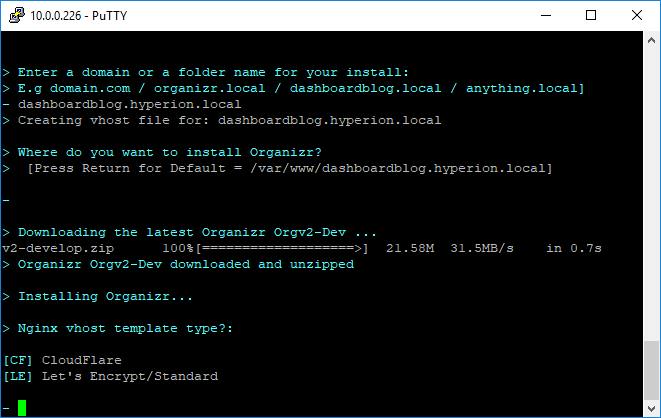

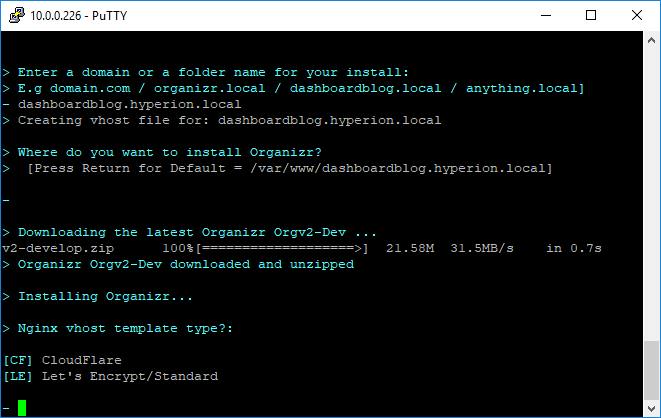

Finally, the installer will download the beta and install everything. When prompted for the Nginx vhost template type, I just hit Enter:

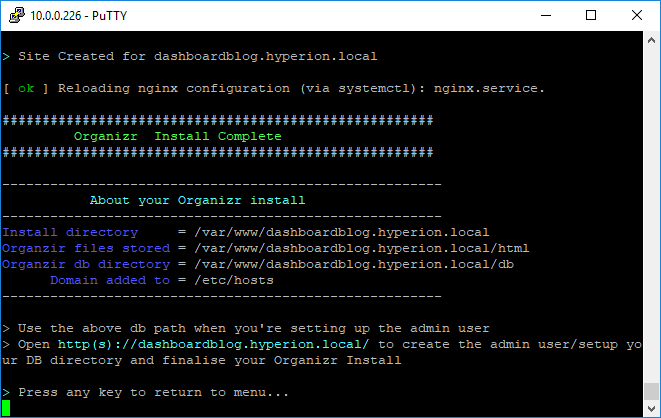

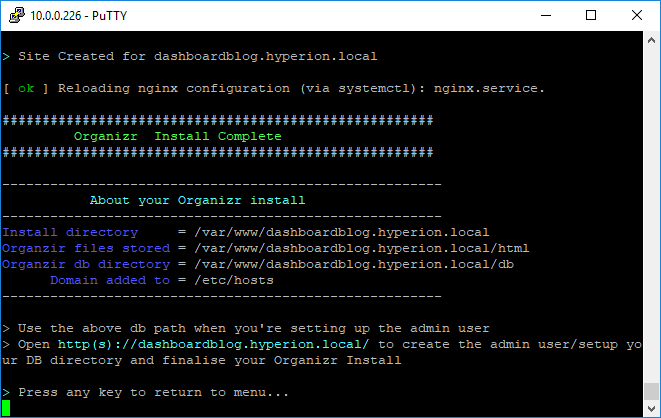

Assuming everything completed successfully, we’re all done:

Now we can visit our freshly created Organizr site to complete our configuration.

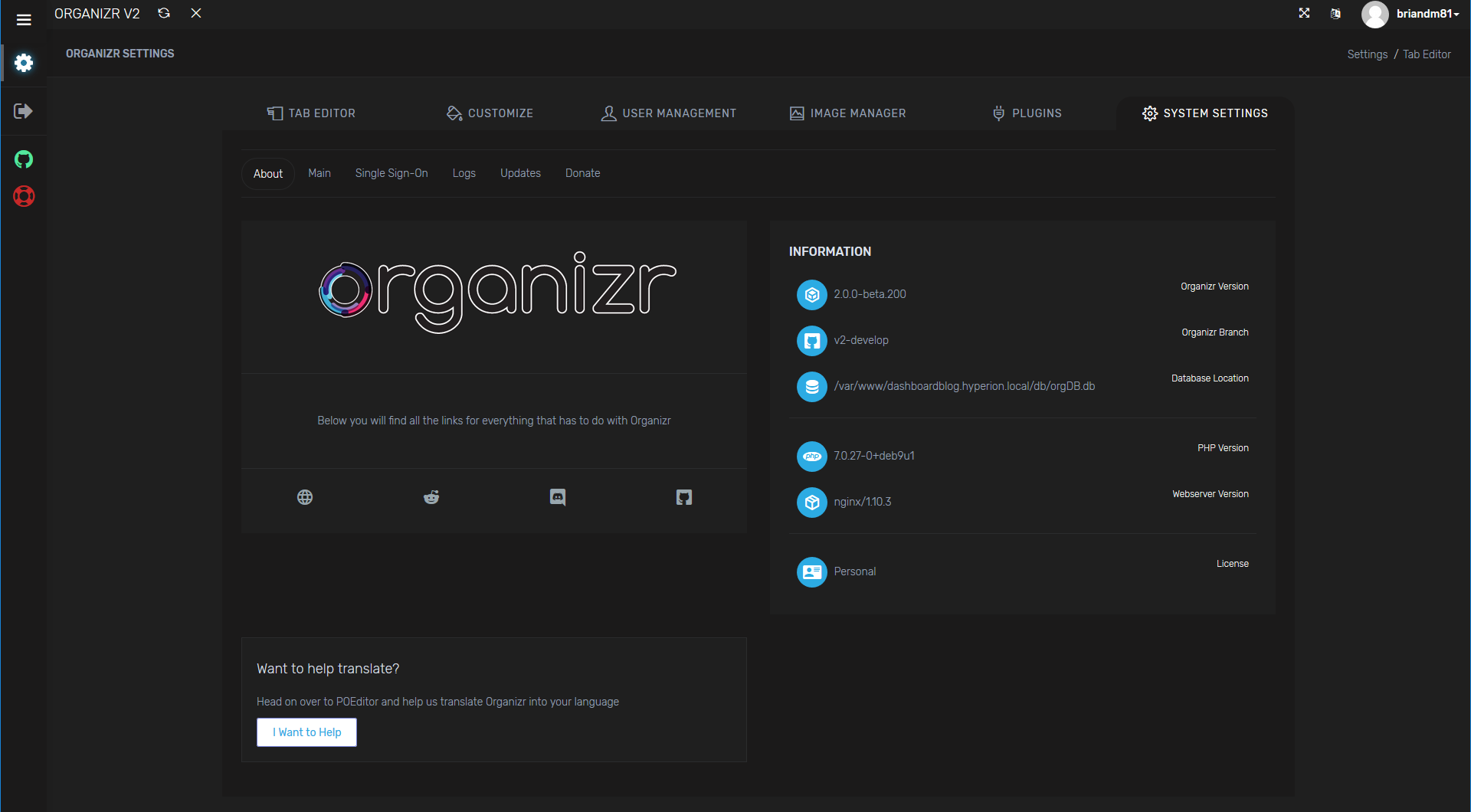

Organizr Configuration

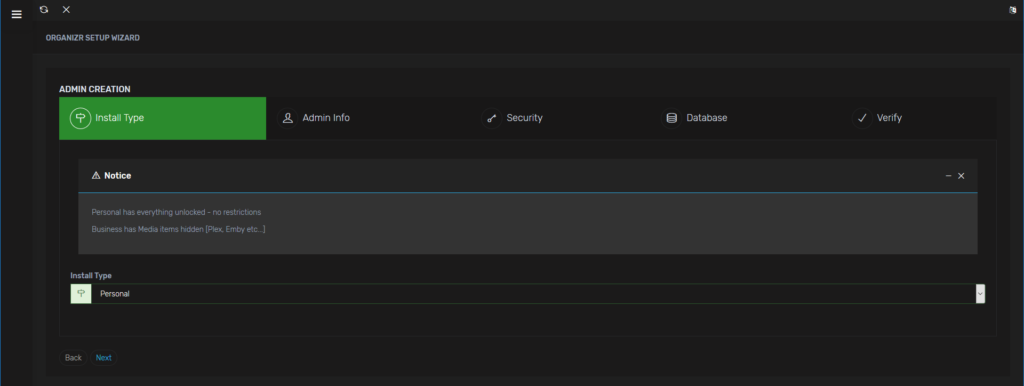

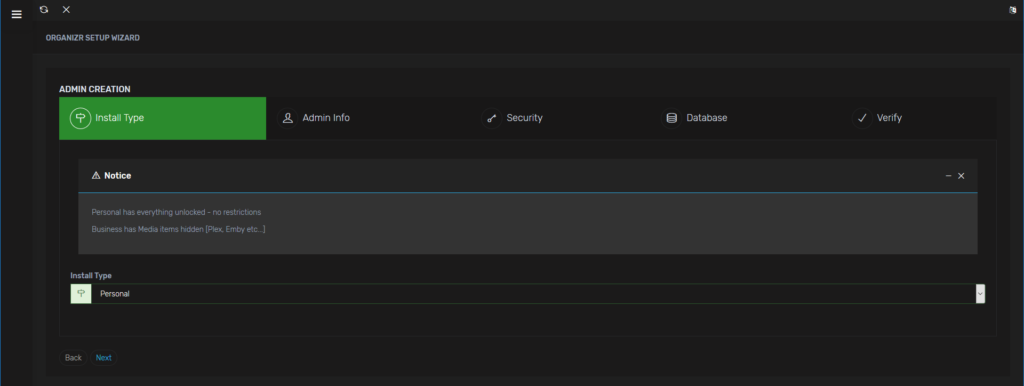

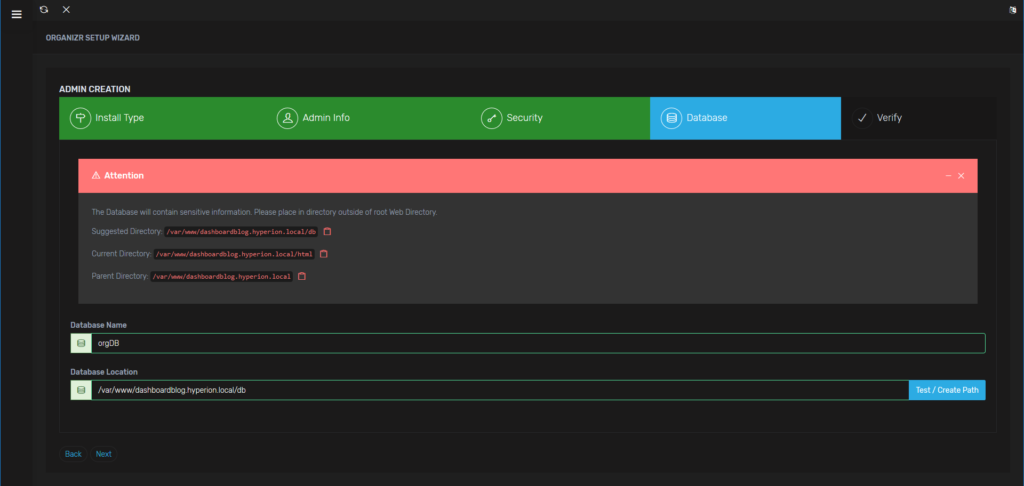

Once again, assuming everything has gone well, we are ready to configure Organizr. First, we’ll select a license type. This is new and tells me that the Organizr team has their sights set on a wider audience in the future. Very interesting…and could be great for the project. So I selected Personal:

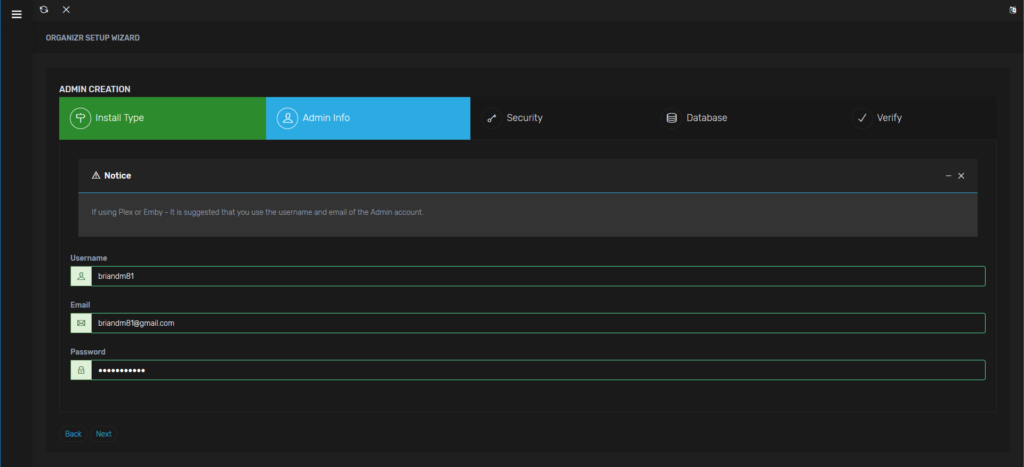

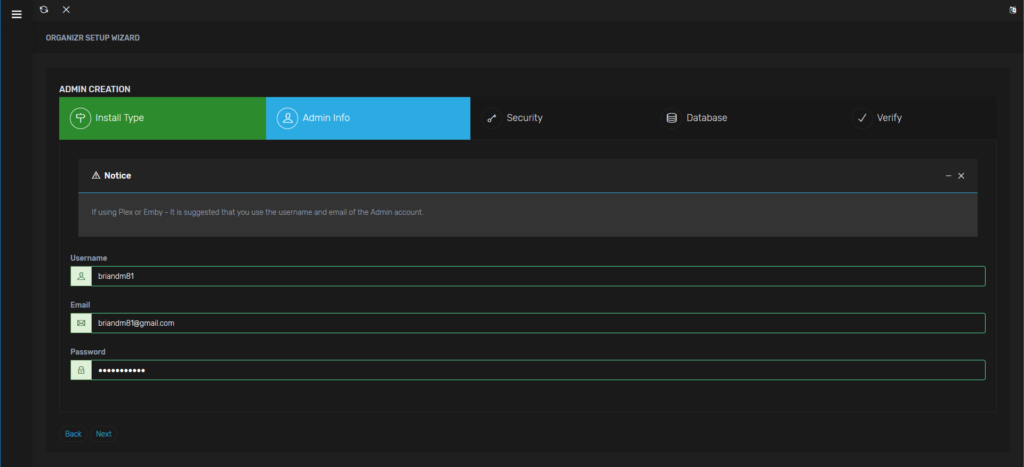

I entered my username, e-mail, and password:

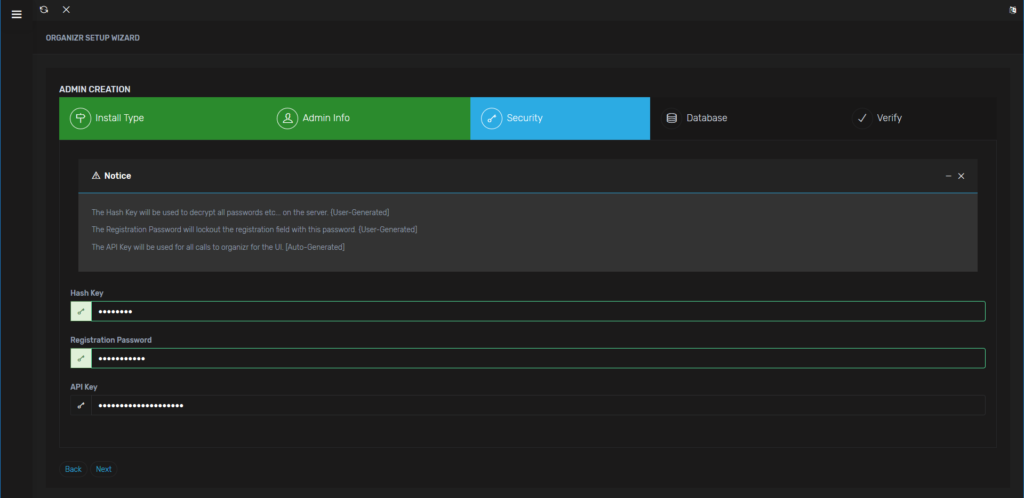

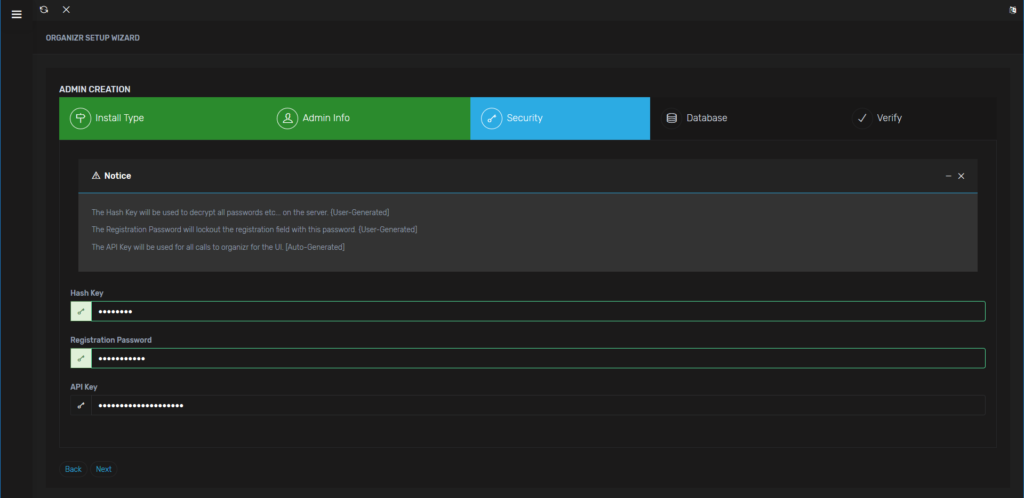

Next, come up with a hash key and a registration password:

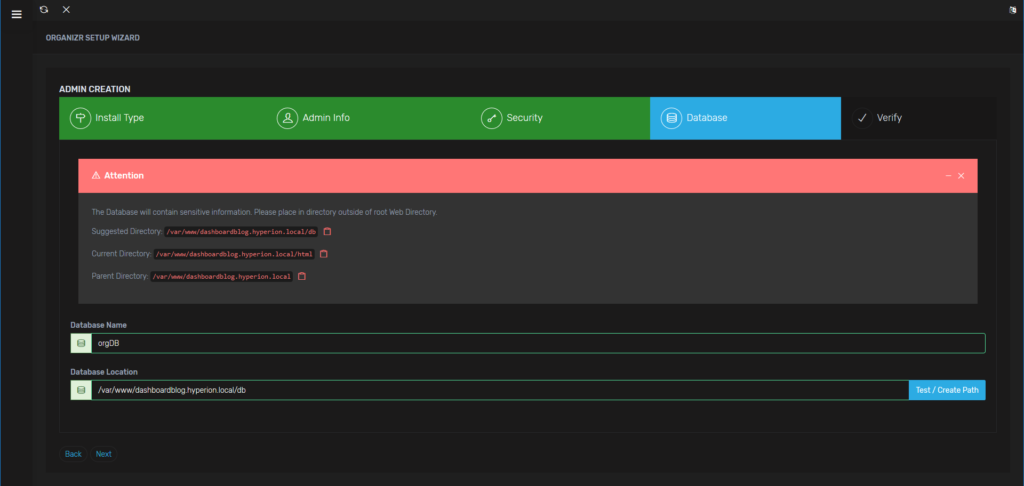

Name your database and select a location from the options or select your own:

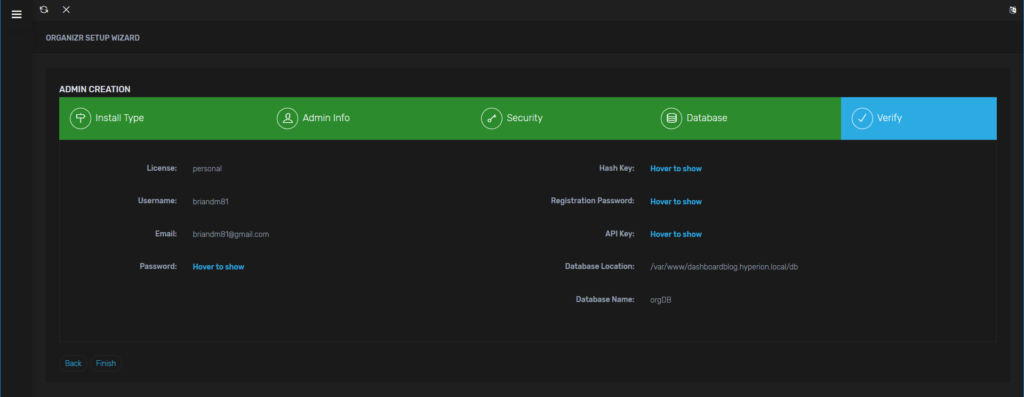

Finish off your installation:

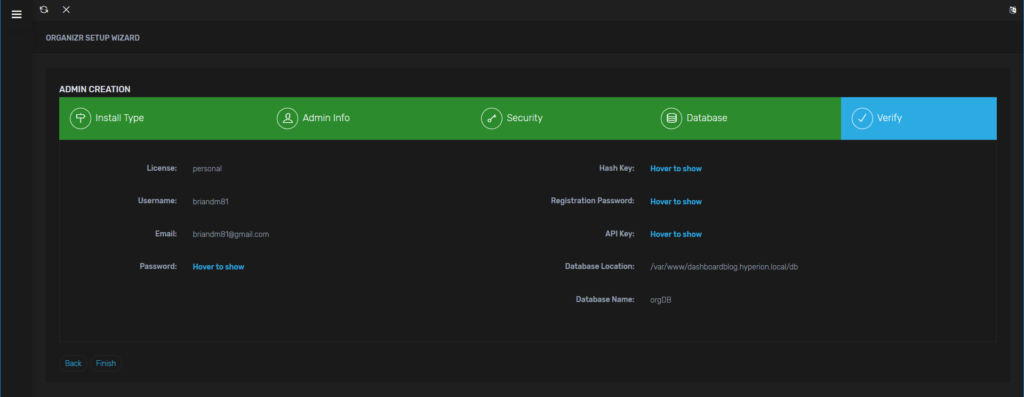

And there we have it:

Conclusion

That’s it! Organizr is now ready to use. This is of course a beta, but it is a little prettier and has a better administrative interface than V1. Next up, we can move on to laying the foundation for our statistics and reporting using InfluxDB.

Brian Marshall

July 2, 2018

I’ve blogged about EPM for a few years now, but I have recently decided to spend more time dedicated to homelab content. So…what on earth is a homelab and why on earth do I need one? I’ll try to answer this question and provide some resources for those of you interested in starting down the homelab path and wondering where to start. I’ll approach this question from two different perspectives: EPM people and non-EPM people. This should allow you to skip sections of content that just don’t apply to you.

Introduction to EPM

With my recent uptick in homelab content, a large portion of my readers are wondering…what is EPM? Well, for starters, EPM is my day job. EPM stands for Enterprise Performance Management. It is essentially an industry acronym used by Oracle and others to define a segment of their software products. Oracle has their Hyperion products, OneStream has their CPM (Corporate Performance Management) products, and a variety of other vendors like Anaplan and Host Analytics are in this space.

So what is this space? Essentially, medium to large companies have a set of financial activities that they perform each month, quarter, and year. Some of those activities are more accounting-specific, like consolidating hundreds of legal entities into a single set of reporting. Others are more finance-driven, like putting together a budget or forecast for the month or year. The list of buzzwords goes on, but basically EPM includes Financial Consolidation, Planning, Budgeting, Account Reconciliation, Profitability and Cost Management, Strategic Modeling (long-range planning), and financial and sometimes operational reporting.

Traditionally, EPM has been reserved for companies in or around the Fortune 1000: companies whose revenues are in the billions of dollars, or at the very least hundreds of millions of dollars. Why? Because the software has been very expensive and the implementations aren’t exactly cheap. These days, the landscape has changed a bit. Companies of all sizes can now start to afford many of the cloud-based products that require shorter and less expensive implementations.

The larger companies are of course still using it, but the installed base of Oracle’s Hyperion Planning has more than doubled since it became a cloud-based product named PBCS (Planning and Budgeting Cloud Service). This shouldn’t be a shock given the price difference. Hyperion Planning is $3,500 per user initially plus 20% per year forever. In contrast, the cloud-based product (PBCS), which boasts considerably more functionality, starts at only $120 per user per month. So, with the price of the cloud being so attractive, why would I need a homelab? We’ll get to that…

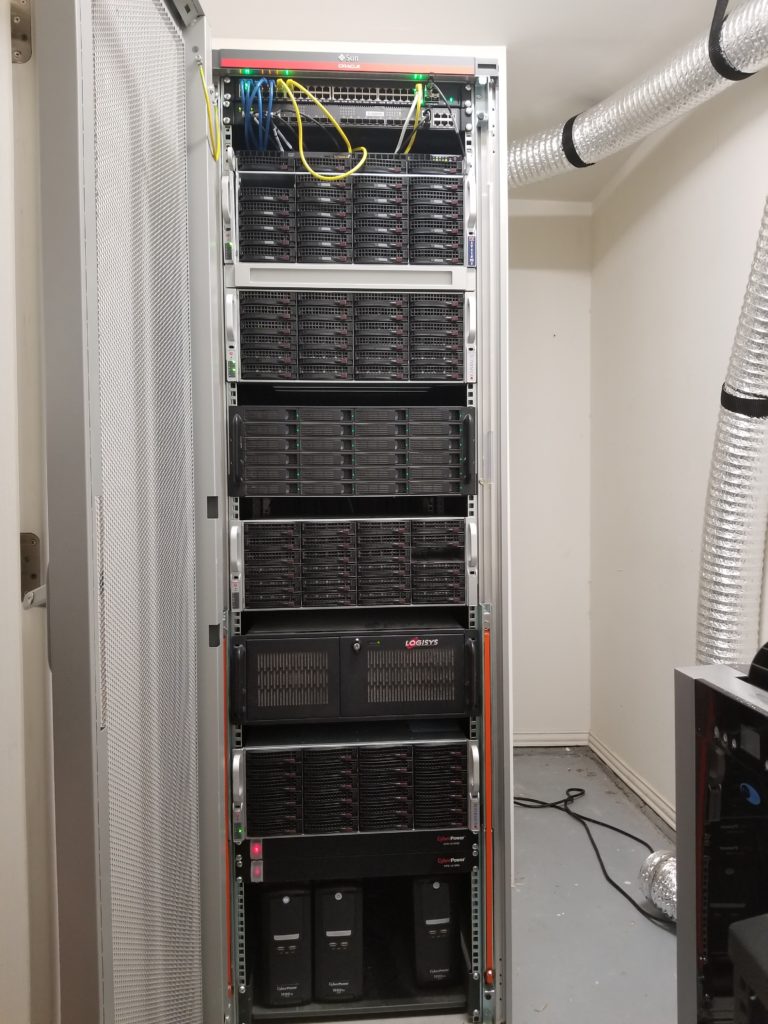

Introduction to a Homelab

So what exactly is a homelab? In the simplest terms, a homelab is a sandbox in which you can learn and play with new or unfamiliar technologies. It can be anything from a set of VMs on an old PC or laptop to something as complex as my lab (or even more complex than my lab in some cases). Some people use a lab to study for a networking or virtualization certification. Some use their homelab to try out a variety of new software they are interested in. Others are more interested in the hardware side of things, like networking and switches. Still others may just be toying around with cryptocurrency. At the end of the day, a homelab is whatever you want it to be.

Why do I need one (EPM)?

As the EPM industry continues to change with the major push to the cloud, I often hear that I don’t need an on-premise environment anymore. While the cloud has certainly changed the landscape, I believe that having an on-premise environment is still critical. Why? Because the cloud provides us so much and so little all at the same time. I get all of my infrastructure and patches…and zero access to things we look at all the time when implementing on-premise software, like the outline file. Here are a few use cases that I’ve run into in my implementations of various Oracle EPM Cloud products.

Dynamic Smart Lists in PBCS

We used dimension-based dynamic smart lists in a PBCS implementation. We discovered that when you migrate the application, the HSP_OBJECT IDs change. Because the alias table where these IDs live is hidden by PBCS, we had no idea that the migration didn’t account for this. Instead, our business rules based on the dynamic smart lists just started producing incorrect results. Once we took a look at the outline file, we were able to determine the issue and build a workaround.

HSP_NOLINK

This one may seem basic, but I’ve had it bite at least three very seasoned consultants. Basically, if you have a member shared between plan types, that member will be dynamic with a system-generated formula in the non-primary plan types. The way you turn this off is to add a UDA to the member (HSP_NOLINK). The problem is that you can’t see the formula, so if you just look at the member in PBCS, it is set to stored and there is no formula. But if you download and open the outline, you will see the formula on the member and its dynamic storage setting. Then you remember that you forgot to add the UDA and move on with your day. Without the outline, you could spend hours trying to fix a very simple mistake.

Because

Yes, because. Because many of us just want to play with the software and push it to its limits. The cloud doesn’t let us do that, as we don’t really have a place to play. And even when we do play, we can’t really see what’s going on. So you need a homelab as an EPM professional…because. Be sure to use this reason with your significant other should they question the need. Hopefully it works better for you than it did for me.

Why do I need one (Non-EPM)?

If you are a non-EPM person, you are likely just interested in learning new things. I won’t dwell on this, because most of you already want a homelab if you are reading this post anyway! So what does a non-EPM person generally do with a homelab? Here are a few common uses:

- Network Learning (CCNA, etc.)

- Virtualization Learning (VMware, etc.)

- General IT-related administration activities

- Learning new software

- Development of new software

- This list can go on and on

How do I start building a homelab?

Where you start will depend on your specific needs. When I started this 20 years ago, virtualization wasn’t even a thing, so I just had a few physical boxes, mostly older systems left over from upgrades of my gaming computer. Now…it may be as simple as a memory upgrade to your existing desktop so that you can use desktop-based virtualization software. For those of you looking to build or buy a separate box, I’ll have an updated series on this topic coming over the summer. In the meantime, you can check out my older series:

Great Resources

I’ve found that there are a lot of great resources in the form of communities for homelabs. Here are a couple of my favorites:

Conclusion

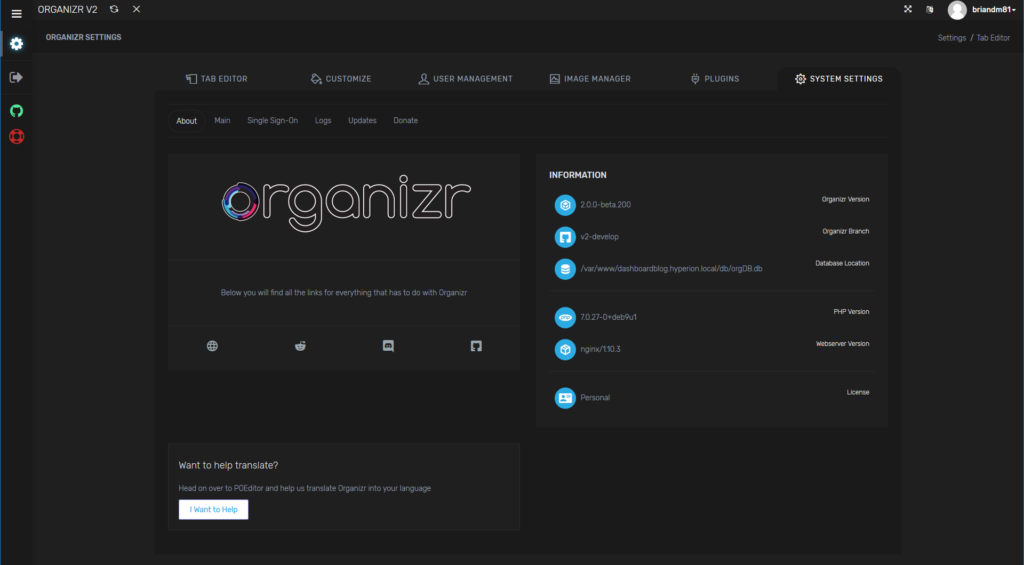

Hopefully this was a helpful introduction for those of you considering a homelab. Good luck on your quest and be very careful as homelabbing can be addictive. You could end up with something like this:

Brian Marshall

June 30, 2018

If you’ve read much of my blog, you know at this point that I like to create “series” of blog posts. Welcome to the latest addition. The topic of this extremely long series? Homelab Dashboards. So what is a homelab dashboard? If you don’t know the answer to this question, you will likely not enjoy the rest of this series…but just in case, I’ll give a brief description and then we’ll get into some of the technologies that I have used and will demonstrate. A homelab dashboard is quite simply a dashboard that provides a nice interface to your homelab and all of the things you want to know about your homelab. So…if you don’t have a homelab, either build one, or probably ignore this series!

Homelab Dashboards

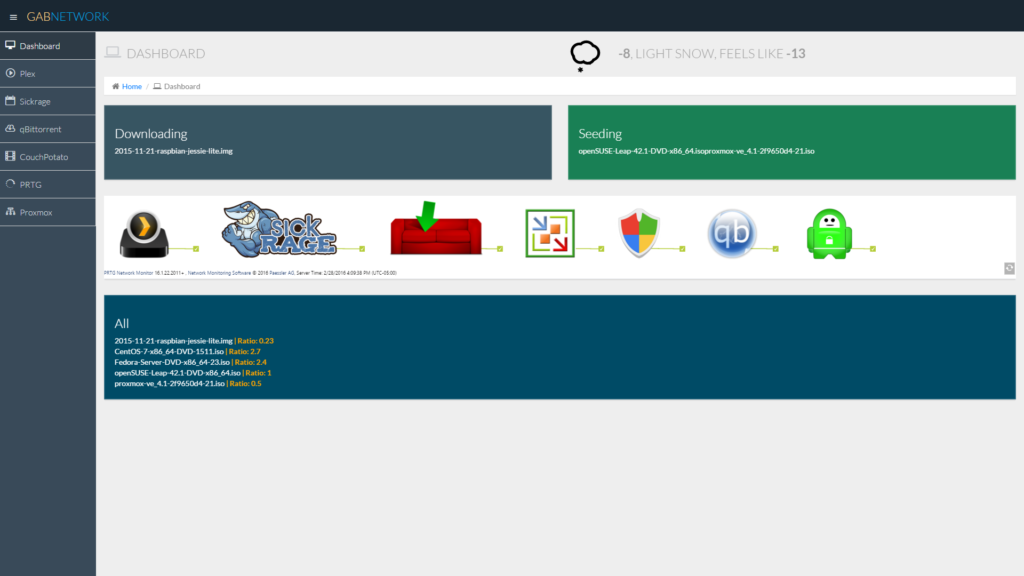

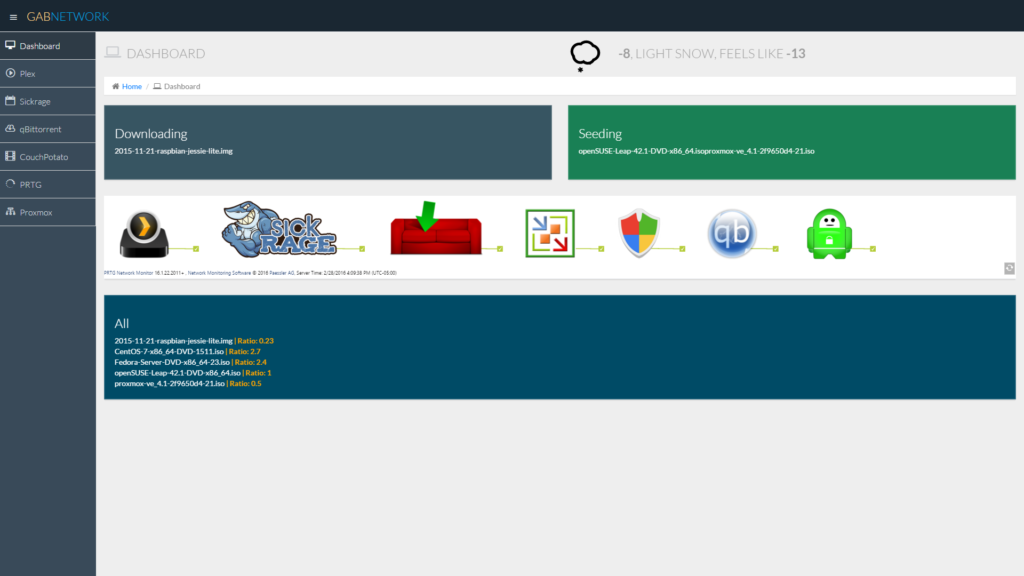

I’m going to cover a variety of technologies that make up a homelab dashboard in this series. You have all of the back-end plumbing that provides information for your dashboard, you have the dashboard itself, and generally some other interface to put it all together. Let’s start at the end and work our way back to the beginning. I like having a place to see all of my statistics and status information, but I also like having a single landing zone to get to all of my interfaces. For me, this might be pfSense, FreeNAS, my Hyperion environments, or any number of other things I go to many times a day. For this, I need more than just a pretty set of graphs. I started off going through a list of dashboards on /r/homelab to see what other people were using. You can see a list of a variety of homelab dashboards here. As I went through this list, the author of this post had a nice custom dashboard that was easy to modify and easy on the eyes. So I started there:

This dashboard was created by Gabisonfire and he has distributed it on GitHub here. He was very helpful in getting everything set up and hopefully we’ll see some of my modifications rolled into his code soon.

Organizr

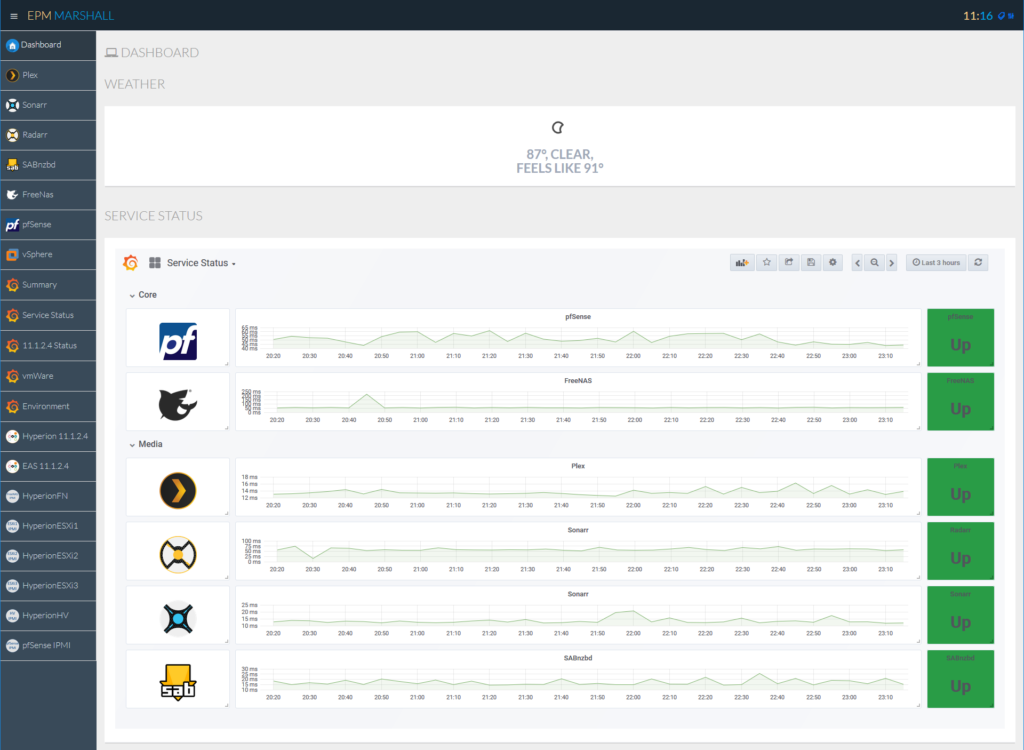

I really enjoyed working with this dashboard and finally posted my results of working with it. Imagine my surprise when someone mentioned a piece of software in that post named Organizr. This is basically what I was using, but far more advanced. So next, I turned my attention to setting up Organizr and giving that a whirl. My attempt looks something like this:

Organizr Homepage

Nifty Admin Interface

Custom vs. Pre-Built

When it comes to the decision of custom vs. pre-built dashboard software, it’s a complicated answer for me. Let’s take a look at a few pros and cons:

Organizr Pros

- Tons of pre-built integrations (Plex, SABnzbd, Sonarr, Radarr, etc.)

- User Management

- Really nice administrative interface

- Very Pretty

Organizr Cons

- Much harder to tinker with (i.e., play with the code)

- User Management (yes, this can be a con…it’s just one more thing for you to manage in your homelab)

Custom Pros

- Infinite flexibility to tinker

- I still prefer the look of the option I selected over Organizr

Custom Cons

- Far fewer pre-built integrations

- Less refined administrative interface

My Homelab Dashboard

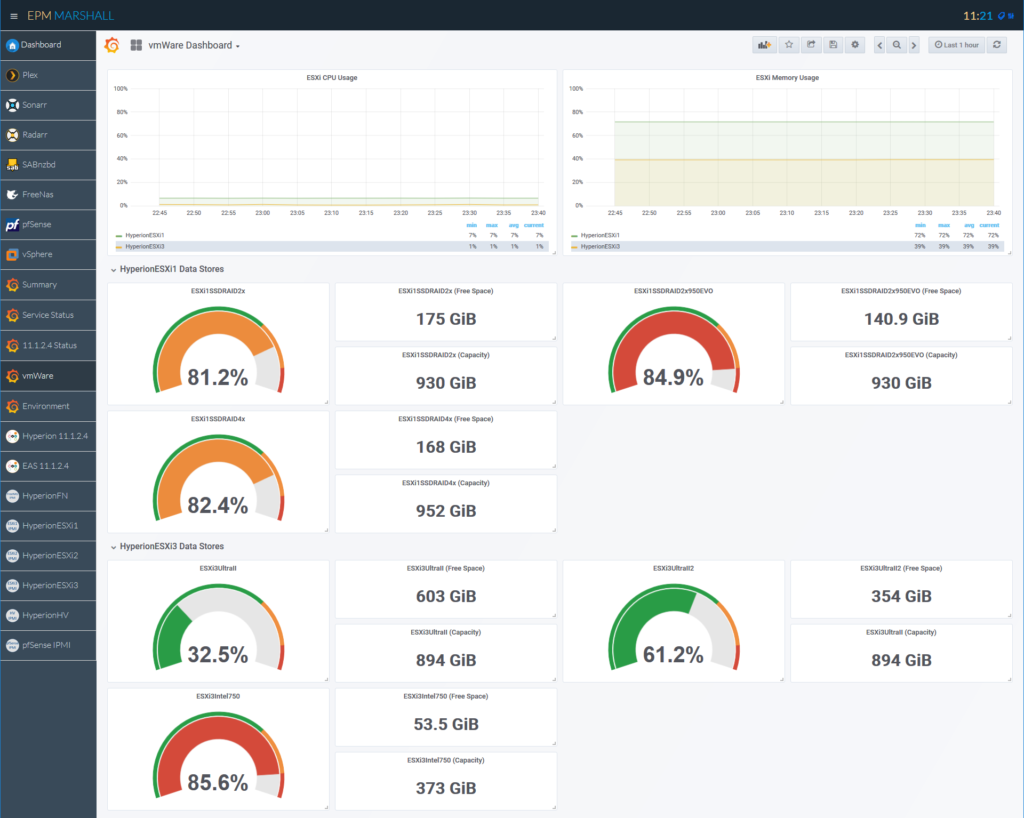

In the end, I have both up and running and will likely build a tutorial for both. At the moment, I still prefer the custom dashboard, and I’ve extended it quite a bit with logos on the interface and a brand new administrative engine. Take a look:

Custom Homepage

New Admin Interface

Grafana Fun

More Grafana Fun

The TIG Stack

Hopefully you are looking at my dashboard saying…“That’s really cool. What else does it take to do that?” If you aren’t saying that, keep it to yourself and let me live in ignorant bliss. So what else does it take? Enter the TIG stack: Telegraf, InfluxDB, and Grafana. These are three open-source projects that make all of the really cool visualizations possible.

Telegraf

Telegraf is an open-source product with a ton of plug-ins that gives us the ability to write statistics to a database on an interval. For instance, it might look at the CPU usage on a server and then write that to a database every 30 seconds. The Telegraf agent will track all types of things like CPU and memory usage, SNMP metrics, and anything else you can write a plug-in for. But what kind of database does it write the data to?
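To make the idea concrete, here is a toy sketch of what an agent like Telegraf does on each collection interval: read a metric and format it as an InfluxDB line-protocol point. This is not Telegraf itself. The helper `load_line` is hypothetical, and the `system` measurement and `load1`/`load5`/`load15` field names are chosen for illustration, loosely modeled on Telegraf’s system input.

```shell
# Toy illustration only (not Telegraf): format the system load averages
# as one InfluxDB line-protocol point: measurement,tags fields
load_line() {
  # $1 = host tag; $2 = contents of /proc/loadavg, e.g. "0.52 0.58 0.59 1/467 1234"
  local host="$1"
  set -- $2   # split the loadavg text on whitespace into positional fields
  printf 'system,host=%s load1=%s,load5=%s,load15=%s\n' "$host" "$1" "$2" "$3"
}

# Collect on a 30-second interval, the way an agent would (Linux only; Ctrl-C to stop):
# while true; do
#   load_line "$(hostname)" "$(cat /proc/loadavg)"
#   sleep 30
# done
```

For example, `load_line server01 "0.52 0.58 0.59 1/467 1234"` prints `system,host=server01 load1=0.52,load5=0.58,load15=0.59`. The real Telegraf agent handles batching, retries, and hundreds of input plugins on top of this basic pattern.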

InfluxDB

InfluxDB is from the same development team as Telegraf. It is also open-source and provides a database built specifically for time-series data. This means Telegraf, and anything else that can send data to a web service, can write to InfluxDB. Once you have InfluxDB configured and Telegraf sending data, you can move on to making pretty pictures with Grafana!
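Because InfluxDB (the 1.x releases) accepts writes over HTTP, even a plain `curl` call can act as a data source. Here is a minimal sketch, assuming InfluxDB listens on `localhost:8086` and that a database named `homelab` exists; both are placeholders, and `influx_write` is a hypothetical helper name.

```shell
# Minimal sketch: write one point to InfluxDB 1.x through its HTTP /write endpoint.
# Assumptions: InfluxDB on localhost:8086 and a database named "homelab";
# both are placeholders that can be overridden with INFLUX_URL / INFLUX_DB.
INFLUX_URL="${INFLUX_URL:-http://localhost:8086}"
INFLUX_DB="${INFLUX_DB:-homelab}"

influx_write() {
  # $1 = one line of line protocol: measurement,tags fields [timestamp]
  # If authentication is enabled, add: -u "influxuser:YourPassword"
  curl -sS -XPOST "${INFLUX_URL}/write?db=${INFLUX_DB}" --data-binary "$1"
}

# Example (needs a running InfluxDB; a successful write returns HTTP 204 No Content):
# influx_write 'cpu,host=server01 usage=12.5'
```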

Grafana

What good is a homelab dashboard if you can’t make pretty pictures? Exactly. And this is where Grafana comes in. Grafana is yet another open-source piece of software, this time geared towards the actual dashboarding part of a homelab dashboard. Basically, it can take anything from InfluxDB and make it pretty (and useful).
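Under the hood, a Grafana panel backed by InfluxDB is just running a query. Here is a rough sketch of the kind of InfluxQL such a panel might send, which you can also run by hand with the InfluxDB 1.x `influx` CLI. The database `homelab`, measurement `system`, field `load1`, and helper `load_query` are all hypothetical names for illustration. Inside Grafana you would normally use its `$timeFilter` and `$__interval` variables in place of the fixed window and bucket size.

```shell
# Sketch: build an InfluxQL query that averages load1 into time buckets,
# similar to what a Grafana graph panel requests. Names are illustrative.
load_query() {
  # $1 = how far back to look (e.g. 1h); $2 = bucket size (e.g. 5m)
  printf 'SELECT mean("load1") FROM "system" WHERE time > now() - %s GROUP BY time(%s)' "$1" "$2"
}

# Run it yourself with the InfluxDB 1.x CLI:
# influx -database homelab -execute "$(load_query 1h 5m)"
```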

PowerShell

I guess the P is silent? For all of the things that Telegraf can’t handle natively, I have a bunch of custom PowerShell scripts that give us statistics. This includes things like VMware and service status.

What’s Next?

As I already mentioned, I love blog series. We’ll have at least one blog post dedicated to every step in the process of building your own homelab dashboard. In an ideal world, this will be a soup-to-nuts solution for anyone looking for pretty graphs and a central location to manage their homelab.


Brian Marshall

June 25, 2018