If you attended my recent presentation at Kscope18, I covered this topic and provided a live demonstration of MDXDataCopy. MDXDataCopy provides an excellent method for creating functionality similar to that of Smart Push in PBCS. While my presentation has all of the code that you need to get started, not everyone likes getting things like this out of a PowerPoint and the PowerPoint doesn’t provide 100% of the context that delivering the presentation provides.

Smart Push

In case you have no idea what I’m talking about, Smart Push provides the ability to push data from one cube to another upon form save. This means that I can do a push from BSO to an ASO reporting cube AND map the data at the same time. You can find more information in the Oracle PBCS docs. This is one of the features we’ve been waiting for in on-premise for a long time. I’ve been fortunate enough to implement this functionality at a couple of clients that can’t go to the cloud yet. Let’s see how this is done.

MDXDataCopy

MDXDataCopy is one of the many, many functions included with Calculation Manager. These are essentially CDFs that are registered with Essbase. As the name implies, it simply uses MDX queries to pull data from the source cube and then maps it into the target cube. The cool part about this is that it works with ASO perfectly. But, as with many things Oracle, especially on-premise, the documentation isn’t very good. Before we can use MDXDataCopy, we first have some setup to do:

- Generate a CalcMgr encryption key

- Encrypt your username using that key

- Encrypt your password using that key

Please note that the encryption process we are going through is similar to what we do in MaxL, yet completely different and separate. Why would we want all of our encryption to be consistent anyway? Let’s get started with our encrypting.

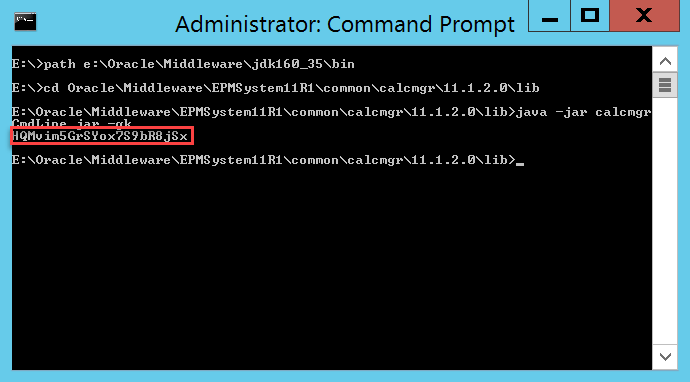

Generate Encryption Key

As I mentioned earlier, this is not the same process that we use to encrypt usernames and passwords with MaxL, so go ahead and set your encrypted MaxL processes and ideas to the side before we get started. Next, log into the server where Calculation Manager is installed. For most of us, this will be where Foundation Services happens to also be installed. First we’ll make sure that the Java bin folder is in the path, then we’ll change to our lib directory that contains calcmgrCmdLine.jar, and finally we’ll generate our key:

path e:\Oracle\Middleware\jdk160_35\bin

cd Oracle\Middleware\EPMSystem11R1\common\calcmgr\11.1.2.0\lib

java -jar calcmgrCmdLine.jar -gk

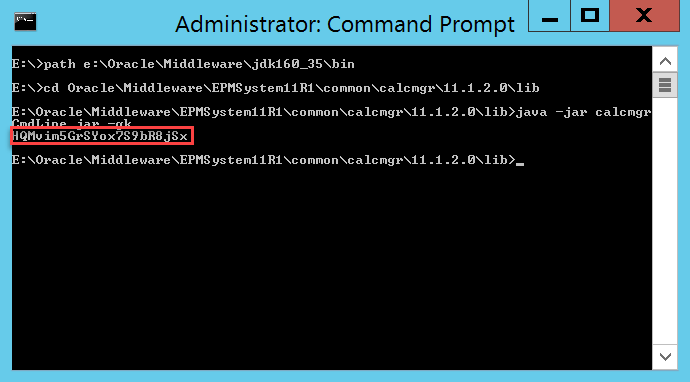

This should generate a key:

We’ll copy and paste that key so that we have a copy. We’ll also need it for our next two commands.

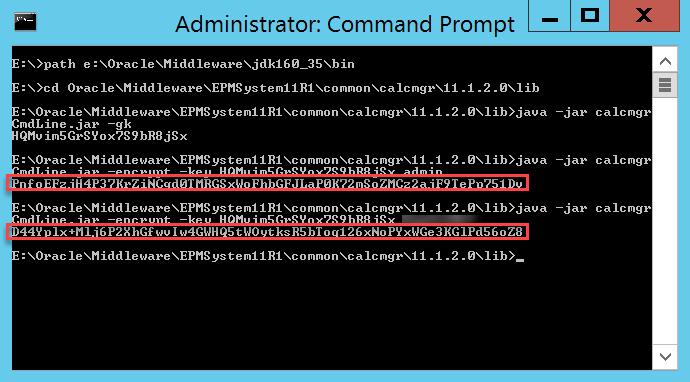

Encrypt Your Username and Password

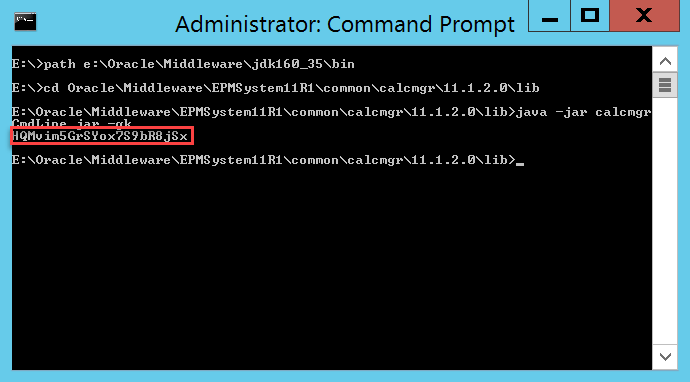

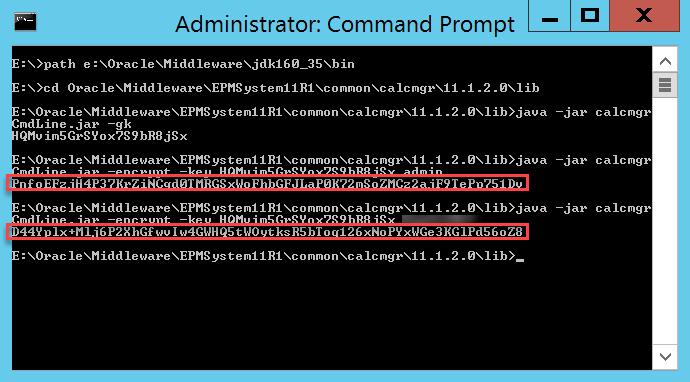

Now that we have our key, we should be ready to encrypt our username and then our password. Here’s the command to encrypt using the key we just generated (obviously your key will be different):

java -jar calcmgrCmdLine.jar -encrypt -key HQMvim5GrSYox7S9bR8jSx admin

java -jar calcmgrCmdLine.jar -encrypt -key HQMvim5GrSYox7S9bR8jSx GetYourOwnPassword

This will produce two encrypted values for us to again copy and paste somewhere so that we can reference them in our calculation script or business rule:

Now that we have everything we need from our Calculation Manager server, we can log out and continue on.

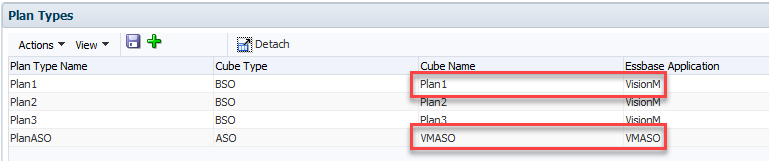

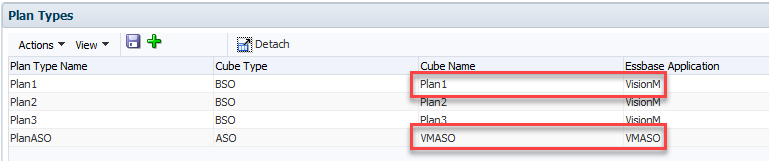

Vision

While not as popular as Sample Basic, the demo application that Hyperion Planning (and PBCS) comes with is great. The application is named Vision and it comes with three BSO Plan Types ready to go. What it doesn’t come with is an ASO Plan Type. I won’t go through the steps here, but I basically created a new ASO Plan Type and added enough members to make my demonstration work. Here are the important parts that we care about (the source and target cubes):

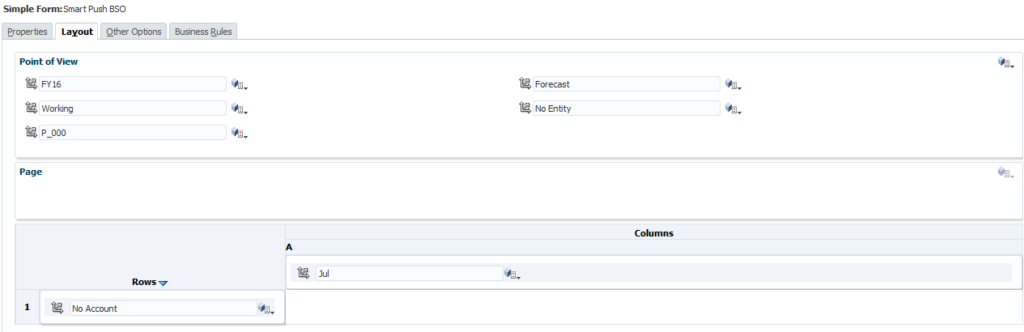

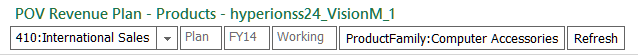

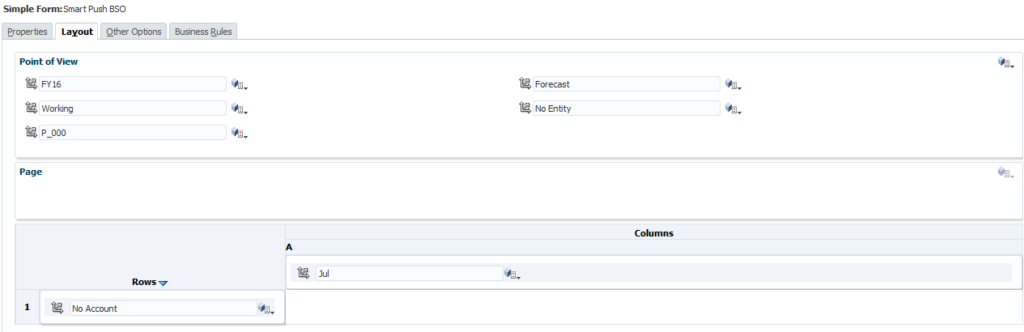

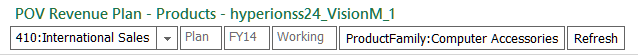

Now we need a form so that we have something to attach to. I created two forms, one for the source data entry and one to test and verify that the data successfully copied to the target cube. Our source BSO cube form looks like this:

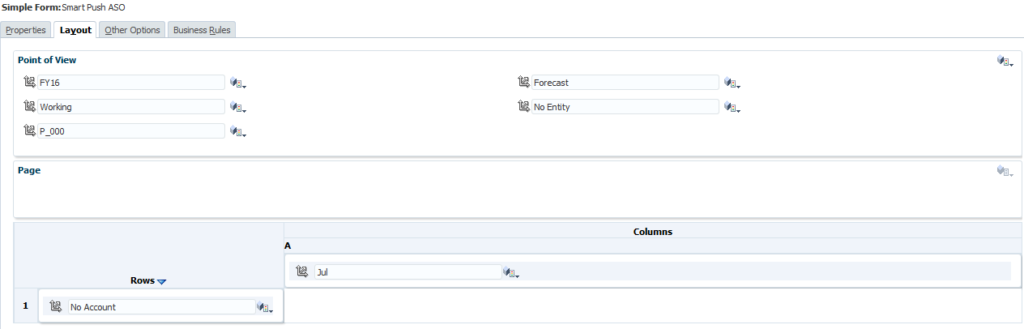

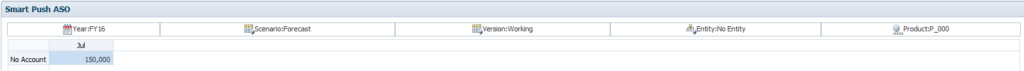

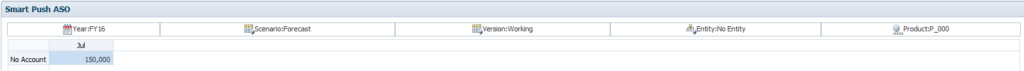

Could it get more basic? I think not. And then for good measure, we have a matching form for the ASO target cube:

Still basic…exactly the same as our BSO form. That’s it for changes to our Planning application for now.

Calculation Script

Now that we have our application ready, we can start by building a basic (I’m big on basic today) calculation script to get MDXDataCopy working. Before we get to building the script, let’s take a look at the parameters for our function:

- Key that we just generated

- Username that we just encrypted

- Password that we just encrypted

- Source Essbase Application

- Source Essbase Database

- Target Essbase Application

- Target Essbase Database

- MDX column definition

- MDX row definition

- Source mapping

- Target mapping

- POV for any dimensions in the target, but not the source

- Number of rows to commit

- Log file path

Somewhere buried in that many parameters you might be able to find the meaning of life. Let’s put this to practical use in our calculation script:

RUNJAVA com.hyperion.calcmgr.common.cdf.MDXDataCopy

"HQMvim5GrSYox7S9bR8jSx"

"PnfoEFzjH4P37KrZiNCgd0TMRGSxWoFhbGFJLaP0K72mSoZMCz2ajF9TePp751Dv"

"D44Yplx+Mlj6P2XhGfwvIw4GWHQ5tWOytksR5bToq126xNoPYxWGe3KGlPd56oZ8"

"VisionM"

"Plan1"

"VMASO"

"VMASO"

"{[Jul]}"

"CrossJoin({[No Account]},CrossJoin({[FY16]},CrossJoin({[Forecast]},CrossJoin({[Working]},CrossJoin({[No Entity]},{[No Product]})))))"

""

""

""

"-1"

"e:\\mdxdatacopy.log";

Let’s run down the values used for our parameters:

- HQMvim5GrSYox7S9bR8jSx (Key that we just generated)

- PnfoEFzjH4P37KrZiNCgd0TMRGSxWoFhbGFJLaP0K72mSoZMCz2ajF9TePp751Dv (Username that we just encrypted)

- D44Yplx+Mlj6P2XhGfwvIw4GWHQ5tWOytksR5bToq126xNoPYxWGe3KGlPd56oZ8 (Password that we just encrypted)

- VisionM (Source Essbase Application)

- Plan1 (Source Essbase Database)

- VMASO (Target Essbase Application)

- VMASO (Target Essbase Database)

- {[Jul]} (MDX column definition, in this case just the single member from our form)

- CrossJoin({[No Account]},CrossJoin({[FY16]},CrossJoin({[Forecast]},CrossJoin({[Working]},CrossJoin({[No Entity]},{[No Product]}))))) (MDX row definition, in this case it requires a series of nested crossjoin functions to ensure that all dimensions are represented in either the rows or the columns)

- Blank (Source mapping which is left blank as the two cubes are exactly the same)

- Also Blank (Target mapping which is left blank as the two cubes are exactly the same)

- Also Blank (POV for any dimensions in the target, but not the source which is left blank as the two cubes are exactly the same)

- -1 (Number of rows to commit, which in this case is essentially set to commit everything all at once)

- e:\\mdxdatacopy.log (Log file path where we will verify that the data copy actually executed)
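Typing that nested CrossJoin by hand gets error-prone once you have more than a few dimensions. Here is a minimal Python sketch that builds the row definition from a list of members. The function names are my own and not part of Calculation Manager, and it assumes one member per dimension with no special characters in the member names:

```python
from typing import Sequence


def member_set(member: str) -> str:
    """Wrap a single member name as an MDX set, e.g. {[Jul]}."""
    return "{[" + member + "]}"


def nested_crossjoin(members: Sequence[str]) -> str:
    """Nest CrossJoin calls so that every member appears exactly once.

    The last two members form the innermost CrossJoin; each earlier
    member wraps the expression that has been built so far.
    """
    if not members:
        raise ValueError("at least one member is required")
    expression = member_set(members[-1])
    for member in reversed(members[:-1]):
        expression = f"CrossJoin({member_set(member)},{expression})"
    return expression


# The same six row members used in the calculation script above.
rows = nested_crossjoin(
    ["No Account", "FY16", "Forecast", "Working", "No Entity", "No Product"]
)
print(rows)
```

The printed string can be pasted into the row definition parameter as-is.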

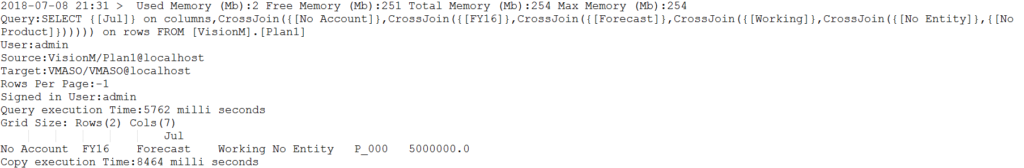

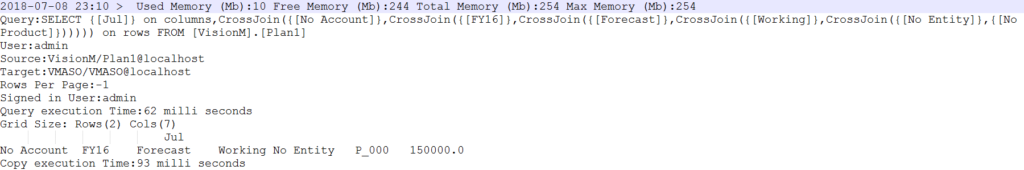

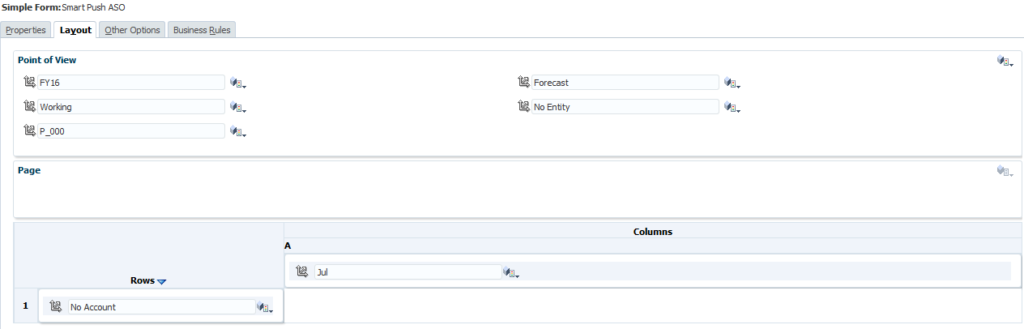

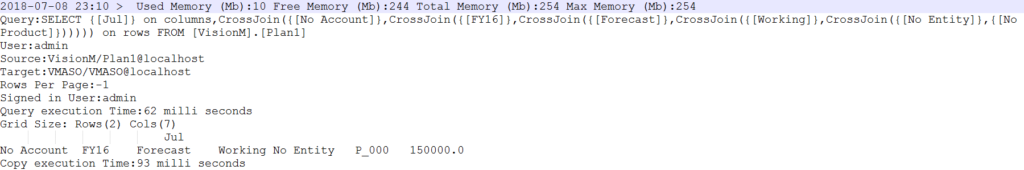

The log file is of particular importance as the script will execute with success regardless of the actual result of the script. This means that especially for testing purposes we need to check the file to verify that the copy actually occurred. We’ll have to log into our Essbase server and open the file that we specified. If everything went according to plan, it should look like this:

This gives us quite a bit of information:

- The query that was generated based on our row and column specifications

- The user that was used to execute the query

- The source and target applications and databases

- The rows to commit

- The query and copy execution times

- And the actual data that was copied

If you have an error, it will show up in this file as well. We can see that our copy was successful. For my demo at Kscope18, I just attached this calculation script to the form. This works and shows us the data movement using the two forms. Let’s go back to Vision and give it a go.

Back to Vision

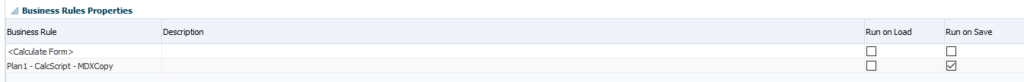

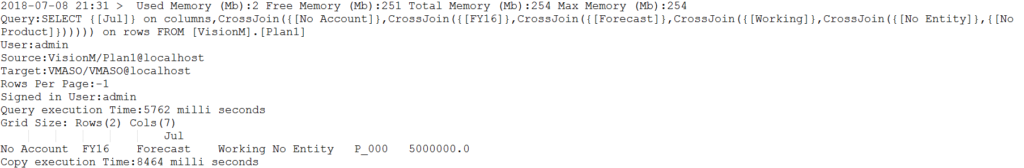

The last step to making this fully functional is to attach our newly created calculation script to our form. Notice that we’ve added the calculation script and set it to run on save:

Now we can test it out. Let’s change our data:

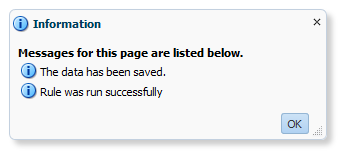

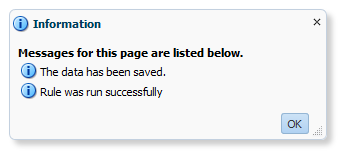

Once we save the data, we should see it execute the script:

Now we can open our ASO form and we should see the same data:

The numbers match! Let’s check the log file just to be safe:

The copy looks good here, as expected. Our numbers did match after all.

Conclusion

Obviously this is a proof of concept. To make this production ready, you would likely want to use a business rule so that you can get context from the form for your data copy. There are however some limitations compared to PBCS. For instance, I can get context for anything that is a variable or a form selection in the page, but I can’t get context from the grid itself. So I need to know what my rows and columns are and hard-code that. You could use some variables for some of this, but at the end of the day, you may just need a script or rule for each form that you wish to enable Smart Push on. Not exactly the most elegant solution, but not terrible either. After all, how often do your forms really change?
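If you do end up with one script per form, generating them beats copying and pasting fourteen positional parameters. The Python sketch below renders the RUNJAVA statement from a small per-form definition. The class, the form names, and the placeholder credentials are all hypothetical; only the parameter order and the class path come from the script shown earlier:

```python
from dataclasses import astuple, dataclass


@dataclass
class PushSpec:
    """One MDXDataCopy call; field order follows the parameter list above."""
    key: str
    user: str
    password: str
    source_app: str
    source_db: str
    target_app: str
    target_db: str
    columns: str
    rows: str
    source_mapping: str = ""
    target_mapping: str = ""
    target_pov: str = ""
    rows_per_commit: str = "-1"
    log_file: str = r"e:\\mdxdatacopy.log"  # doubled backslash, as in the script

    def render(self) -> str:
        """Return the calculation script text, one quoted parameter per line."""
        lines = ["RUNJAVA com.hyperion.calcmgr.common.cdf.MDXDataCopy"]
        lines.extend(f'"{value}"' for value in astuple(self))
        return "\n".join(lines) + ";"


ROWS = (
    "CrossJoin({[No Account]},CrossJoin({[FY16]},CrossJoin({[Forecast]},"
    "CrossJoin({[Working]},CrossJoin({[No Entity]},{[No Product]})))))"
)

# Hypothetical forms: each one only differs in its column definition here.
FORM_COLUMNS = {
    "Jul Input (hypothetical)": "{[Jul]}",
    "Aug Input (hypothetical)": "{[Aug]}",
}

scripts = {
    form: PushSpec(
        key="YOUR_KEY",                 # placeholder, not a real key
        user="ENCRYPTED_USERNAME",      # placeholder
        password="ENCRYPTED_PASSWORD",  # placeholder
        source_app="VisionM",
        source_db="Plan1",
        target_app="VMASO",
        target_db="VMASO",
        columns=columns,
        rows=ROWS,
    ).render()
    for form, columns in FORM_COLUMNS.items()
}

print(scripts["Jul Input (hypothetical)"])
```

Keeping the parameters as named fields means a misplaced value shows up as a wrong field name instead of a silently shifted position.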

Brian Marshall

July 8, 2018

As we all know, Oracle has put virtually all of their development efforts into the cloud. This is especially true for the EPM Suite of products (PBCS, FCCS, ARCS, etc.). As a result, PBCS keeps getting great new features that we may never see in on-premise Hyperion Planning. I was talking to Jake Turrell today and we were comparing notes on the new functionality that we have used in PBCS on projects recently. That conversation devolved into us making a rather long list of new features. Special thanks to Jake for helping me make this list, as I wouldn’t have thought of a good portion of the things on it without his help. So what new functionality has been added to PBCS that will likely never make it to on-premise Hyperion Planning?

Forms

Hyperion Planning has existed for over 15 years now, so you might think that the form design capabilities would be fully-baked by now. For the most part, this is a true statement. But, there have been some pretty big holes that PBCS has finally filled. Two new additions in particular make for a better form design experience for developers: Exclusions and Ranges.

Exclusions

In Planning, when we attempt to select members, that’s the only option…select members. In PBCS, they have added the ability to edit the selection (our old select members option) and the ability to add exclusions. Exclusions give us an easy way to take, for example, inclusive descendants of our entity dimension while excluding a specific list. This is particularly useful when we are referencing a substitution variable or a user variable. We don’t know the full extent of what could be returned, but we do know what we definitely don’t want.

Ranges

When you do monthly forecasting, nothing has been more annoying in form design than the inability to easily specify a range of members. In Planning, I can’t just ask the form to give me Jan through &CurrentMonth in one column and &CurrentMonth through Dec in another column. This means to really make my forms dynamic, I need more substitution variables than I’m comfortable with and a form that has a ton of columns with the combinations. In PBCS, I now have four new member selection functions that allow me to put together a range:

- Left Siblings

- Left Siblings (inc)

- Right Siblings

- Right Siblings (inc)

Finally! I can do a range of members with just two columns and a single substitution variable!
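To make the idea concrete, here is a small Python model of what those four selections return. This is only an illustration of the range logic, assuming the functions behave the way their names suggest; the function names and the hard-coded current month are my own stand-ins, not anything PBCS exposes:

```python
from typing import List

MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"]


def left_siblings(siblings: List[str], member: str, inclusive: bool = False) -> List[str]:
    """Members before `member` in outline order, optionally including it."""
    position = siblings.index(member)
    return siblings[: position + 1] if inclusive else siblings[:position]


def right_siblings(siblings: List[str], member: str, inclusive: bool = False) -> List[str]:
    """Members after `member` in outline order, optionally including it."""
    position = siblings.index(member)
    return siblings[position:] if inclusive else siblings[position + 1:]


current_month = "Jun"  # stand-in for the &CurrentMonth substitution variable

# Column 1: everything before the current month (Left Siblings).
first_column = left_siblings(MONTHS, current_month)
# Column 2: the current month through Dec (Right Siblings (inc)).
second_column = right_siblings(MONTHS, current_month, inclusive=True)

print(first_column)
print(second_column)
```

Two selections and one variable cover the whole year, and nothing needs to change in the form when the variable rolls forward.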

Formatting

We can now format our forms! You can change colors, font styles, add lines, along with other formatting options. These options will show up in Excel and in the Simplified Interface. This does not work in Workspace…but who cares, it’s officially dead in PBCS anyway as of the February release coming out shortly.

Smart Forms

Not to be confused with regular forms…we have Smart Forms. This is an exciting new feature that allows you to take an ad hoc form, add formulas, and save them to the actual form! While this is cool for a demo, I’m not necessarily a fan in practice. While it is much better than building formulas in an actual form, which is painful, it still presents a problem. Why are you doing form math? In general I try to put math back in the Essbase model rather than having formulas on multiple forms.

Periods

In Planning, if I want to add periods to just a single plan type, I’m totally out of luck. The boxes are all grey and there’s no way around it. In PBCS, I can now simply un-check the plan types from which I would like to exclude the member. This is a simple feature, but makes a massive difference in the flexibility in our designs.

Years

For literally years I’ve helped companies add and delete years from Planning applications. There are a few ways to do this, but none of them are supported or in the interface. In PBCS, if I want to delete a year, I simply select the year and click the delete button. Again, this is super-simple, but so very nice to have. Additionally, if I want to add years in the past, I can now do this in the interface! Simply add the number of years you wish to add, and when PBCS asks if you would like to add them to the end, click no. Now you have years in the past. This feature is a little more obfuscated, but still pretty simple.

Data Maps

On-Premise planning does have the idea of a reporting cube and it does give you the ability to create some level of mapping. But it definitely doesn’t do what PBCS does. PBCS has the ability to map and move data on the fly and then it takes it a step further: Smart Push. Smart Push is one of the most amazing features that they have added to PBCS. For many applications, it gives us the ability to have an ASO cube with live data from our BSO cube with no crazy partitions or really any work at all beyond the mapping. So as long as we input to our BSO cube and report from our ASO cube, I may never need to aggregate my BSO cube again.

It is fair to mention that while this functionality is not baked into Planning, if you really need it, you can build it from the ground up with some fancy scripting on the back end. Even still, it doesn’t hold a candle to the ease of use and stability of Smart Push.

Valid Intersections

I’ve been demoing Planning and Essbase for a very long time. When people ask what benefit Essbase might have over Planning, there are very few good answers. One of those answers however has always been that Essbase can support what we call matrix security. This is essentially the ability to allow a user to have write access to a cross dimensional set of intersections. For instance, for Entity A I can modify Account 1000 while for Entity B I can modify Account 2000. Planning simply doesn’t support that. I have to give a user Entity A, Entity B, Account 1000 and Account 2000. That user will be able to modify all combinations.

PBCS fixes this. With valid intersections, I can create a set of intersections as defined above and limit the user’s ability to write back to invalid intersections. From a security perspective, they still have access, but with valid intersections, they lose it. Many people wanted valid intersections to give us the ability to cascade member selections across dimensions, which would be cool, but this functionality is just as useful.
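Here is a tiny Python model of the difference, using the Entity and Account example from above. It is purely conceptual and assumes nothing about how PBCS stores or evaluates valid intersections; all of the names are made up for the illustration:

```python
from itertools import product

# Dimension-level security: the user is granted each member separately.
entity_access = {"Entity A", "Entity B"}
account_access = {"Account 1000", "Account 2000"}

# With dimension-level security alone, every combination is writable.
writable_by_security = set(product(entity_access, account_access))

# Valid intersections narrow that down to the combinations we actually want.
valid_intersections = {
    ("Entity A", "Account 1000"),
    ("Entity B", "Account 2000"),
}


def can_write(entity: str, account: str) -> bool:
    """A cell is writable only if security allows it AND it is a valid intersection."""
    cell = (entity, account)
    return cell in writable_by_security and cell in valid_intersections


print(len(writable_by_security))              # combinations allowed by security alone
print(can_write("Entity A", "Account 1000"))  # allowed by both
print(can_write("Entity A", "Account 2000"))  # allowed by security, not a valid intersection
```

Security alone opens up the full cross product, and the valid intersection list is what trims it back to the matrix we wanted.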

SmartLists

I know what you’re thinking, Planning has SmartLists. But PBCS has SmartLists that can be dynamically created directly from a dimension. This means that I can provide the user with a list of accounts. Big deal…who cares, right? I care if I add an account. With this new functionality, when an account is added, the SmartList is updated automagically. Ok…that is a big deal. Not content with this already amazing feature, Oracle took it a step further. You can also reference the value of a SmartList in a calculation. This means that I can use the selection in a SmartList to truly manipulate data. Basically a new alias is created that references the OBJECT_ID. That OBJECT_ID is also used as the value stored in Essbase for the SmartList selection. Combined, I can easily reference the member that the SmartList is linked to. Like I said…big deal. Huuuuuge even.

Attribute Dimensions

This is another item that has some support in Planning, but missed the point. I can technically add attribute dimensions to a Planning application and I can use them in a variety of ways. But the two ways I need to be able to use them are missing. They can’t be used in a form. They can’t be used in Smart View. I can technically use an Essbase connection directly and use them for analysis, but that only works on BSO and doesn’t work at all on ASO Plan Types.

PBCS fixes both of these issues. I can layer in attribute dimensions easily on forms. It also fixes the Smart View issues by allowing for attribute dimension selection in the Planning Ad Hoc connector. We’ve only been asking for this in Planning for a decade. The chances seem so very slim that we actually ever see it given the last ten years.

Navigation Flows

Technically speaking, the simplified interface is available in 11.1.2.4. But I don’t think it could possibly be any worse than it is. It’s essentially there for dashboards and everything partially works. The simplified interface in PBCS on the other hand is pretty great. It may require 100 extra clicks for a variety of administrative functions, but for end-users, I would consider it an upgrade.

One of the reasons I believe this is the addition of navigation flows. I can create my own customized tile interface for my application and assign it to a user. This means I can really create a user-specific interface tailored for a specific set of business processes. This helps me put together a pretty awesome demo and makes end-users feel like it is a more truly customized application.

But wait, there is a downside. I love navigation flows. And if your users are primarily in the web-based interface, they are amazing. If the majority of your users are in Excel however…they will totally be out of luck. Navigation flows haven’t made it over there yet. I’m not even sure if they can without a major interface overhaul.

Dashboards

While we are on the topic of the simplified interface, let’s discuss dashboards. They do exist, like the simplified interface, in 11.1.2.4. But, much like the entire simplified interface in 11.1.2.4, they aren’t great. PBCS has also added a variety of new visualization types:

- Combination Graphs (seriously, how is this not in on-premise)

- Funnel

- Radar

- Tile

While I believe PBCS dashboards are fantastic, they do have at least one major downside. Again, they don’t work in Smart View. But, it’s a dashboard, so I’ll give Oracle a free pass.

Browser Support and Mobile Support

For a very long time, Internet Explorer was it with Hyperion. Eventually, Oracle brought Firefox into the fold. Now, with PBCS, it really doesn’t matter what platform you work on. The simplified interface is fully compatible with Internet Explorer, Firefox, Chrome, and Safari. This is of particular importance given how easily I can access PBCS from my phone or tablet. The interface is great on mobile devices. This is an area where dashboards can really shine. To get mobile access in Planning, I have to bribe somebody in IT to open ports on the firewall. And frankly, I don’t think any of us have enough money to afford the bribe necessary for that to happen.

Localization

If you haven’t done a lot of international applications, you probably don’t care about this at all. But for companies with users all over the world, PBCS has made life much, much better. First is the ability for PBCS to automatically detect your language settings in your browser and to automatically translate everything that’s built in. Oracle has taken this a giant leap further and added something called Artifact Labels. Essentially I can add languages and labels to all of my objects now. Instead of a form being Revenue Input for all of my languages, I can now label that form in any language. This is pretty impressive compared to Planning.

Application Reporting

No, not financial reports, but reports about the application. Planning essentially provides nothing in the way of reporting. You can get a variety of information out of the repository, but that’s just painful. PBCS has added a wealth of reporting options. Here’s a quick list:

- User Login Report – When and how often are users in the system?

- Form Definition Report – Great for documentation, this produces a PDF of selected forms with the entire definition in a nice set of tables. Rows, column, POV, page, business rules, etc.

- Approval Status Report – How can I tell where everyone is on their approvals? This will produce a report providing just that in a variety of formats including XLSX and HTML.

- Access Control Report – See how everyone is provisioned. It will show either explicitly assigned rights or effective rights. Pretty convenient.

- Activity Reports – Check out what your users are up to.

- Access Logs – Get the full picture of everything that happened.

- Audit Report – Finally, I don’t have to query the HSP_AUDIT_RECORDS table. I also don’t have to go to the specific cell. I can run a quick export to Excel. Not perfect, but I’ll take it.

Groovy Business Rules

With EPBCS, I can now write business rules in Groovy. These rules can go far beyond the simple bounds of Essbase data. They can pull context from the application itself. I am sad that this feature has not yet and will likely not ever make it into regular PBCS. Here’s hoping.

LCM Maturity

I’ve been using LCM for a long, long time. I can’t point to specific things in LCM that are better, but I can describe LCM in PBCS as more “mature.” It just feels more stable and seems to work better. This could just be in my head (and Jake’s)…

Academy

I know, on-premise applications have a ton of documentation. But, there’s something to be said for easy access to what I’m looking for. There is a ton of content on the Academy and much of it is especially useful for new users. New Planning users are basically on their own.

No Infrastructure Needs

For those of you that do infrastructure, this is not a plus. But for the rest of us, not needing to install and configure the system is just easy. I don’t have to worry about something in IT getting messed up. I don’t have to worry about applying patches. Having said that, you do lose control of your infrastructure. But hey, it’s the cloud.

No VPN Necessary

I mentioned earlier that I can finally access my PBCS application with my mobile devices. The cloud makes this so much easier. Not only that, but if you need to give your consultant access to the system, it takes 5 minutes and doesn’t require hours of paperwork and begging of IT. I love not needing yet another VPN connection just to modify a form.

Free FDMEE!

Okay, so it isn’t FDMEE. But for most clients, it does more than enough. And again…it is free. So stop complaining that it only loads text files.

Conclusion

Having said all of that, and it was a lot, PBCS still isn’t for everyone. But as time passes and development continues for PBCS while it stands still for Planning, it is becoming more and more difficult to ask the question why PBCS? Instead we really have to ask why NOT PBCS?

Brian Marshall

January 29, 2018

Have you ever built a form in Hyperion Planning (or PBCS) that really needed to have the same dimension represented in both the user selectable page and the rows or columns? Normally, we want to give the user the ability to filter a form dynamically. As an example, perhaps I would like to select an upper-level parent of a product in the page, but I want to see all of the descendants of that selection in the rows of a form. As another example, I’d like to select an upper-level cost center in the page, but have it show me all of the descendants of that selection in the rows of the form. I recently encountered that very request from a client. As it happens, I recently had a request come in via a comment on this site as well.

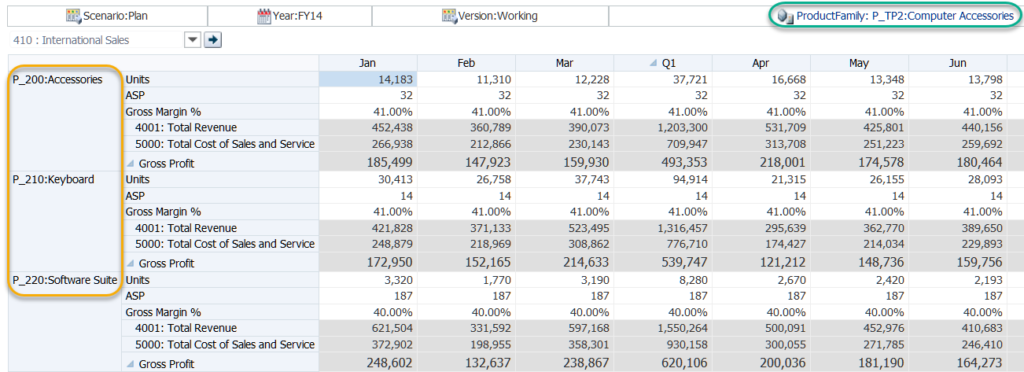

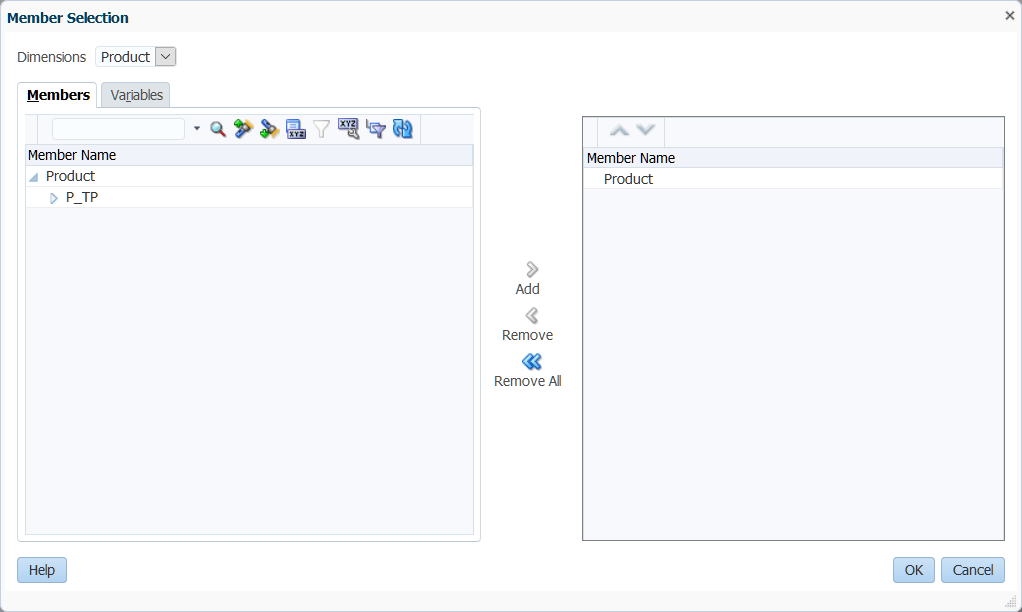

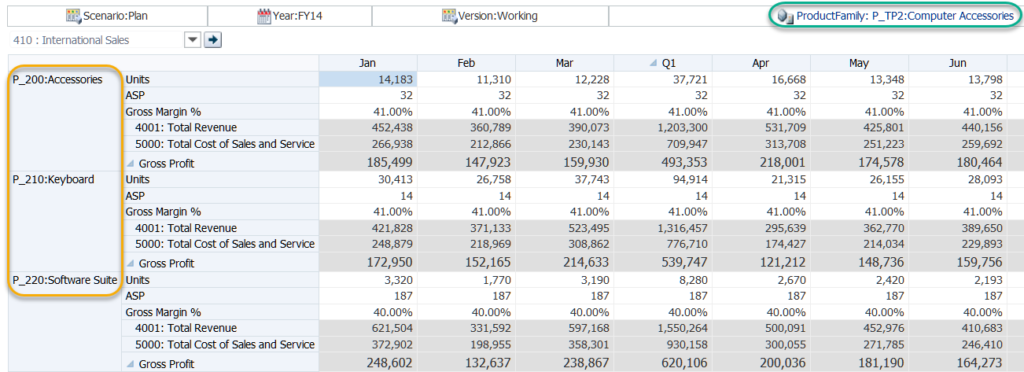

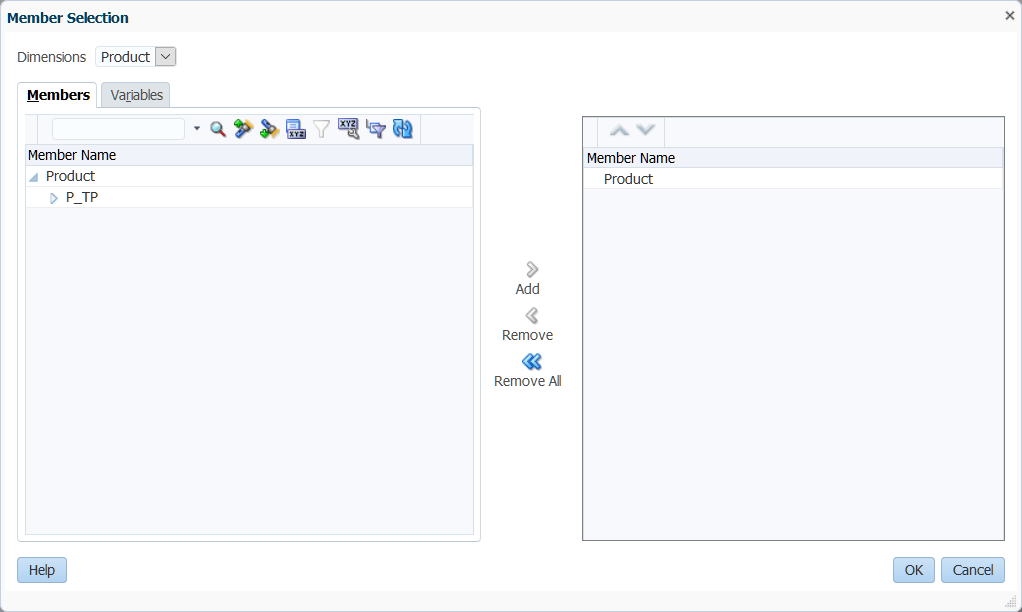

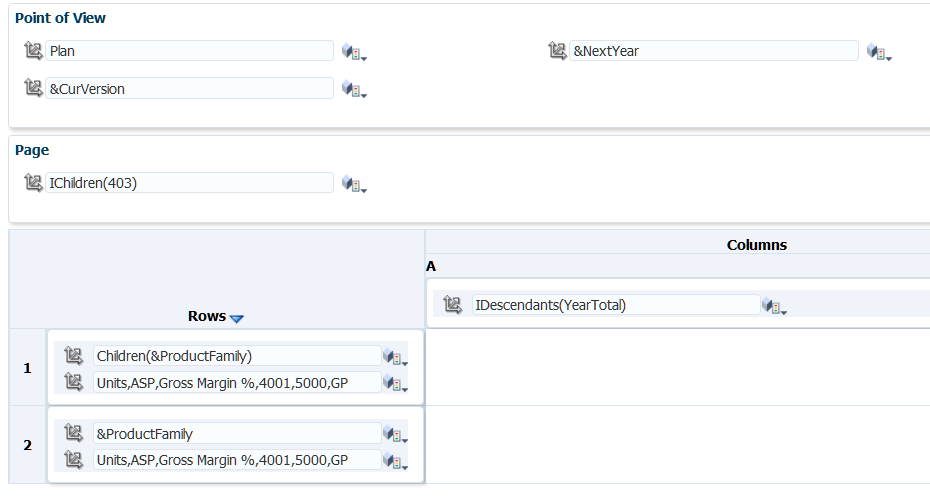

So how can we do this? I know how to build forms, and a dimension can only be part of the POV, the page, rows, or columns, right? If we look at the form designer, that’s totally how it seems. This is where the form designer is a bit deceiving. Let’s start with a cool screen shot:

If we look at the green box in the top right corner, we have the product dimension. If we look at the yellow box on the left…we have the product dimension. So what happens if I click on the green box?

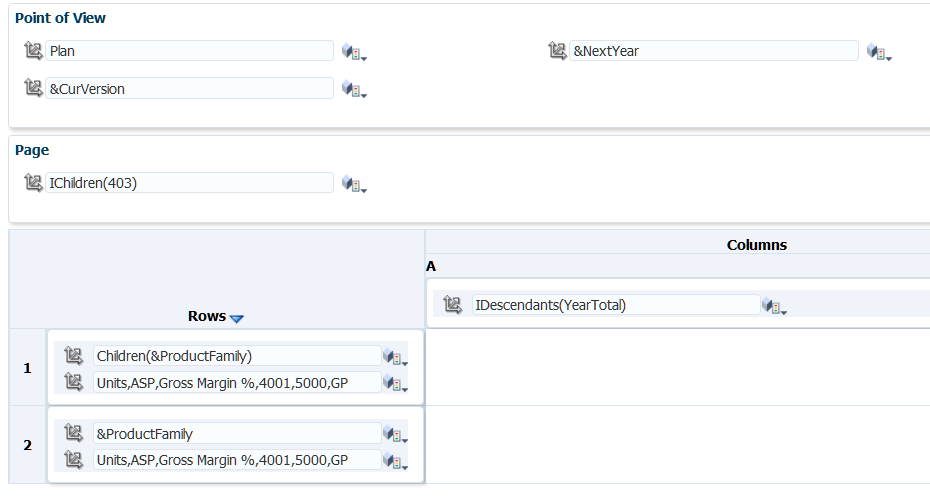

Look at that! It’s not quite as cool as a drop-down in the page, but it still lets us select a member from the product dimension. Now let’s take a look at the form designer:

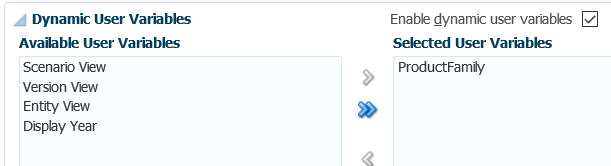

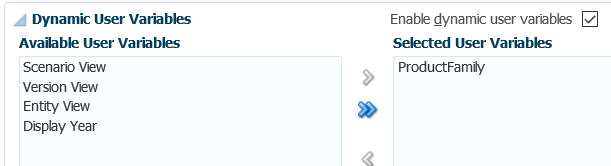

Looking closely, we’ll notice that the product dimension only exists in one place in the designer…the rows. But wait, we should make note of one other thing: the ProductFamily variable. This is not a substitution variable. This is instead a dynamic user variable. Let’s check out the Other Options tab:

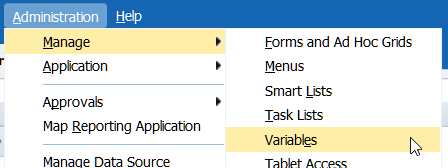

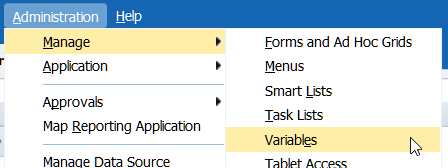

There’s the magic! We have a dynamic user variable added to our form. This automatically places the member in the POV, but enables a member selector. So there you have it, a super-simple solution to a complex user request. So how do I create a dynamic user variable? Click Administration, then click Manage, then click Variables:

From here, you can easily add your user variables. The only real downside to the method is that if the users don’t have the variable defined yet, it will let them know that they need to before allowing them to open the form. This looks great in the web, but what happens when I try it in Smart View?

It still works! Let’s be honest, you have to check these things in Smart View because we just never really know. That’s all for a quick post on a neat feature that I’ve found to be somewhat obscure.

Brian Marshall

June 12, 2017

Hybrid Essbase is the biggest advancement in Essbase technology since ASO was released. It truly takes Essbase to another level when it comes to getting the best out of both ASO and BSO technology. Converting your application from BSO to Hybrid can be a long process. You have to make sure that all of your calculations still work the way they should. You have to make sure that your users don’t break Hybrid mode. You have to update the storage settings for all of your sparse dimensions.

I can’t help you with the first items, they just take time and effort. What I can help you with is the time required to update your sparse dimensions. I spend a lot of time hacking around in the Planning repository. I suddenly found a new use for all of that time spent with the repository…getting a good list of all of the upper level members in a dimension. If we just export a dimension, we get a good list, but we have to do a lot of work to really figure out which members are parents and which are not. Luckily, the HSP_OBJECT table has a column that tells us just that: HAS_CHILDREN.

Microsoft SQL Server

The query to do this is very, very simple. The process for updating your dimensions using the query takes a little bit more explanation. We’ll start with SQL Server since that happens to be where I’m the most comfortable. I’m going to assume you are using SQL Server Management Studio…because why wouldn’t you? It’s awesome. Before we even get to the query, we first need to make a configuration change. Open Management Studio and click on Tools, then Options.

Expand Query Results, then expand SQL Server, and then click on Results to Grid:

Check the box titled Include column headers when copying or saving the results and click OK. Why did we start here? Because we have to restart Management Studio for the new setting to actually take effect. So do that next…

Now that we have Management Studio ready to go, we can get down to the query. Here it is in all of its simplicity:

SELECT

o.OBJECT_NAME AS Product

,po.OBJECT_NAME AS Parent

,'dynamic calc' AS [Data Storage (Plan1)]

FROM

HSP_OBJECT o

INNER JOIN

HSP_MEMBER m ON m.MEMBER_ID = o.OBJECT_ID

INNER JOIN

HSP_DIMENSION d ON m.DIM_ID = d.DIM_ID

INNER JOIN

HSP_OBJECT do ON do.OBJECT_ID = d.DIM_ID

INNER JOIN

HSP_OBJECT po ON po.OBJECT_ID = o.PARENT_ID

WHERE

do.OBJECT_NAME = 'Product'

AND o.HAS_CHILDREN = 1

We have a few joins and a very simple where clause. As always, I’m using my handy-dandy Vision demo application. A quick look at the results shows us that there are very few parents in the Product dimension:

Now we just need to get this into a format that we can easily import back into Planning. All we have to do is right-click anywhere in the results and click on Save Results As…. Enter a file name and click Save.

Now we should have a usable format for a simple Import to update our dimension settings. Let’s head to workspace and give it a shot. Fire up your Planning application and click on Administration, then Import and Export, and finally Import Metadata from File:

Select your dimension from the list and then browse to find your file. Once the file has uploaded, click the Validate button. This will at least tell us if we have a properly formatted CSV:

That looks like a good start. Let’s go ahead and complete the import and see what happens:

This looks…troubling. One rejected record. Let’s take a look at our logs to see why the record was rejected:

As we can see, nothing to worry about. The top-level member of the dimension is rejected because there is no valid parent. We can ignore this and go check to see if our changes took effect.

At first it looks like we may have failed. But wait! Again, nothing to worry about yet. We didn’t update the default data storage. We only updated Plan1. So let’s look at the data storage property for Plan1:

That’s more like it!

Oracle Database

But wait…I have an Oracle DB for my repository. Not to worry. Let’s check out how to do this with Oracle and SQL Developer. First, let’s take a look at the query:

SELECT

o.OBJECT_NAME AS Product

,po.OBJECT_NAME AS Parent

,'dynamic calc' AS "Data Storage (Plan1)"

FROM

HSP_OBJECT o

INNER JOIN

HSP_MEMBER m ON m.MEMBER_ID = o.OBJECT_ID

INNER JOIN

HSP_DIMENSION d ON m.DIM_ID = d.DIM_ID

INNER JOIN

HSP_OBJECT do ON do.OBJECT_ID = d.DIM_ID

INNER JOIN

HSP_OBJECT po ON po.OBJECT_ID = o.PARENT_ID

WHERE

do.OBJECT_NAME = 'Product'

AND o.HAS_CHILDREN = 1

This is very, very similar to the SQL Server query. The only real difference is the use of double quotes instead of brackets around our third column name. A small, yet important distinction. Let’s again look at the results:

The Oracle results look just like the SQL Server results…which is a good thing. Now we just have to get the results into a usable CSV format for import. This will take a few more steps, but it is still very easy. Right click on the result set and click Export:

Change the export format to csv, choose a location and file name, and then click Next.

Click Finish and we should have our CSV file ready to go. Let’s fire up our Planning application and click on Administration, then Import and Export, and finally Import Metadata from File:

Select your dimension from the list and then browse to find your file. Once the file has uploaded, click the Validate button. This will at least tell us if we have a properly formatted CSV:

Much like the SQL Server file, this looks like a good start. Let’s go ahead and complete the import and see what happens:

Again, much like SQL Server, we have the same single rejected record. Let’s make sure that the same error message is present:

As we can see, still nothing to worry about. The top-level member of the dimension is rejected because there is no valid parent. We can ignore this and go check to see if our changes took effect.

As with SQL Server, we did not update the default data storage property, only Plan1. So let’s look at the data storage property for Plan1:

And just like that…we have a sparse dimension ready for Hybrid Essbase. Be sure to refresh your database back to Essbase. You can simply enter a different dimension name in the query and follow the same process to update the remaining sparse dimensions.
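If you have several sparse dimensions to update, the export step could be scripted instead of clicked through. As a rough sketch in Python (not part of the original process), the function below writes rows in the same three-column layout that the query produces: the dimension name, Parent, and a Data Storage column for the plan type. The function name and the sample member names are hypothetical; the real rows would come from running the query above through whatever database driver you use.

```python
import csv
import io


def write_storage_csv(rows, dimension, plan_type, out):
    """Write (member, parent) pairs as a Planning metadata import file.

    Mirrors the query's columns: <dimension>, Parent, Data Storage (<plan type>).
    """
    writer = csv.writer(out)
    writer.writerow([dimension, "Parent", f"Data Storage ({plan_type})"])
    for member, parent in rows:
        # Every parent-level member is set to dynamic calc, as in the query.
        writer.writerow([member, parent, "dynamic calc"])


# Hypothetical sample rows; real rows come from the repository query.
sample = [("P_TP", "Product"), ("P_TP1", "P_TP")]
buffer = io.StringIO()
write_storage_csv(sample, "Product", "Plan1", buffer)
print(buffer.getvalue())
```

Writing to a file instead of the in-memory buffer only requires passing a file opened with `newline=""`, which is what the `csv` module expects.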

Celvin also has an excellent Essbase utility that will do this for you, but it makes use of the API and Java, and generally is a bit more complicated than this method if you have access to the repository. So what happens if you can’t use the API and you can’t access the repository? We have another option, but we’ll save that for another day. This blog post is already long enough!

Brian Marshall

August 2, 2016

One of the long-running tenets of working with the Planning repository is that you must restart Planning to see your changes. I’ve always heard that there were ways around this, but Oracle hasn’t ever been forthcoming with the specifics of how to make that happen. Finally, at Kscope16 during my presentation on the Planning Repository, someone in the audience by the name of Tjien Lie had the code from Oracle to make this happen. Before I get to that, let’s start with a primer on the HSP_ACTION table. I would provide one, but John Goodwin did such a great job, so I’ll just point you to his post here.

<<<wait for the reader to go to John Goodwin’s site and read the information and come back, assuming they don’t get distracted by his wealth of amazing content>>>

Ok…now that you understand what the HSP_ACTION table does, how do we use it differently than John uses it? By differently, I mean I don’t want to insert specific rows and update specific things. That seems like a lot of work. Instead, why not just have the HSP_ACTION table update the entire cache for us? Let’s give it a shot. First I’m going to go add a dimension to my Vision application:

Now let’s make sure the dimension shows up:

And now let’s delete it:

DELETE FROM HSP_MRU_MEMBERS WHERE DIM_ID IN (SELECT OBJECT_ID FROM HSP_OBJECT WHERE OBJECT_NAME = 'ToBeDeleted')

DELETE

FROM

HSP_UNIQUE_NAMES

WHERE

OBJECT_NAME IN (

SELECT

OBJECT_NAME

FROM

HSP_MEMBER m

INNER JOIN

HSP_OBJECT o ON o.OBJECT_ID = m.MEMBER_ID

WHERE

DIM_ID IN (SELECT OBJECT_ID FROM HSP_OBJECT WHERE OBJECT_NAME = 'ToBeDeleted'))

SELECT

OBJECT_ID INTO #DeleteChildren

FROM

HSP_MEMBER m

INNER JOIN

HSP_OBJECT o ON o.OBJECT_ID = m.MEMBER_ID

WHERE

DIM_ID IN (SELECT OBJECT_ID FROM HSP_OBJECT WHERE OBJECT_NAME = 'ToBeDeleted')

AND o.HAS_CHILDREN = 0

SELECT

OBJECT_ID INTO #DeleteParents

FROM

HSP_MEMBER m

INNER JOIN

HSP_OBJECT o ON o.OBJECT_ID = m.MEMBER_ID

WHERE

DIM_ID IN (SELECT OBJECT_ID FROM HSP_OBJECT WHERE OBJECT_NAME = 'ToBeDeleted')

AND o.HAS_CHILDREN = 1

DELETE

FROM

HSP_MEMBER

WHERE

MEMBER_ID IN (SELECT OBJECT_ID FROM #DeleteChildren)

DELETE

FROM

HSP_MEMBER

WHERE

MEMBER_ID IN (SELECT OBJECT_ID FROM #DeleteParents)

DELETE

d

FROM

HSP_DIMENSION d

INNER JOIN

HSP_OBJECT o ON d.DIM_ID = o.OBJECT_ID

WHERE

o.OBJECT_NAME = 'ToBeDeleted'

DELETE

FROM

HSP_OBJECT

WHERE

OBJECT_ID IN (SELECT OBJECT_ID FROM #DeleteChildren)

DELETE

FROM

HSP_OBJECT

WHERE

OBJECT_ID IN (SELECT OBJECT_ID FROM #DeleteParents)

I’ll have a post on that command at some point, but basically it deletes the dimension from the repository. Now let’s go look again at our dimension list and make sure that it still shows up:

Still there…as expected. Now let’s try this little query, courtesy of Tjien Lie:

INSERT INTO HSP_ACTION (FROM_ID, TO_ID, ACTION_ID, OBJECT_TYPE, MESSAGE, ACTION_TIME) VALUES (0, 0, 2, -999, 'CACHE RESET',GETDATE())

And let’s take a look at the HSP_ACTION table and make sure that we have the row inserted:

We can also check out this table to see if our cache has been updated yet. As long as the row is here, we know that the cache hasn’t yet been updated. After a little while, I checked the table again:
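That manual re-check can be automated. The sketch below, in Python, polls HSP_ACTION until it is empty or a timeout passes. It assumes `conn` is any DB-API 2.0 connection to the Planning repository; the function name and the default intervals are hypothetical choices, and it treats any remaining row as pending work, which matches the behavior described here but is not something confirmed by Oracle documentation.

```python
import time


def wait_for_cache_reset(conn, poll_seconds=5.0, timeout_seconds=300.0):
    """Return True once HSP_ACTION is empty, False if the timeout expires first."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        cur = conn.cursor()
        cur.execute("SELECT COUNT(*) FROM HSP_ACTION")
        pending = cur.fetchone()[0]
        cur.close()
        if pending == 0:
            # Planning has picked up every queued action, including the reset.
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_seconds)
```

A scheduled job could call this right after the INSERT and only continue with later steps when it returns True.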

Now that our table is empty, Planning will tell us that it did in fact refresh the cache:

That takes the guesswork out of it! So how about our dimension…is it gone?

And just like that, the dimension is gone. I can make all of the changes that I want and I no longer need to restart Planning. Special thanks to Tjien Lie from The Four Seasons for providing the code. Information exchange like this is why I love Kscope and can’t wait to see everyone in my home state of Texas next year! That’s it for now!

Brian Marshall

July 12, 2016

It’s that time of year again…Kscope! Unfortunately, Kscope always occurs during my busy season. As a result, much like last year, this will be a very quick in and out trip. I’ll be there Tuesday, June 28th only. Luckily, both of my presentations happen to be on that day, so it worked out nicely for me. I also have a few meetings with Oracle that I’m not allowed to talk about that occur on that day as well. Here are my presentations:

PBCS is Still Hyperion Planning

Jun 28, 2016, Session 9, 11:15 am – 12:15 pm

With Oracle’s release of PBCS, many service companies have started releasing their one-size-fits-all implementations. The unfortunate truth is that PBCS is still Hyperion Planning. This presentation discusses the best practices around implementing PBCS and how to avoid the pitfalls of implementations offered at insanely cheap (and underestimated) pricing. Attend this session if you don’t want to have your PBCS project “land and expand.”

The Planning Repository Revisited: Beyond the Basics

Jun 28, 2016, Session 10, 2:00 pm – 3:00 pm

If you’ve enjoyed my past presentations on the Planning repository, you should enjoy this presentation even more. We’ll take a step beyond the basics and provide a whole new set of examples that take a leap into real-world use. Whether it’s synchronizing metadata across applications or deleting dimensions, this presentation will dive deeper than ever before into the repository. But wait…there’s more. This presentation will have full samples in both Transact SQL for SQL Server users and PL/SQL for Oracle users. That’s two languages for the price of one (shipping and handling not included).

And of course, you can find me on the Kscope16 site here.

I hope everyone enjoys the content!

Brian Marshall

June 22, 2016

While recently debugging an issue with FDMEE, I needed to test drill-through in Hyperion Planning without using FDMEE. But wait…can you even do that? I had always planned on showing how to use Drillbridge with Hyperion Planning, but as I was talking with Jason, he mentioned we could even get it working in PBCS. So how does this work?

Let’s start easy with Hyperion Planning. If you happen to read Francisco’s blog, you may have already read this post about FDMEE drill-through. It actually tells us that FDMEE just uses the Essbase drill-through definitions. This happens to be the exact functionality that Drillbridge uses. As it happens, if we set up drill-through on an Essbase cube that supports Planning, it just works. See…easy. But let’s try it with the Vision cube:

I’ll spare all of the details of the Drillbridge setup, but we’ll cover a few specifics. First we’ll set up a test deployment specification:

So you can use all of your regular functions here, but I wanted to keep it super simple for testing purposes. Next, we need to set up a connection to the Essbase database:

Once we deploy the report, we can take a peek at what it actually produces in Essbase:

So what happens in Planning?

Here we can see that the cell is enabled for drill-through:

When we right-click we have to click drill-through to see our choices:

Once we click Drill Through we should see a list of all of the valid reports for that intersection. Just like in Essbase, we can see multiple reports if multiple reports are defined:

Finally, we can click on the link and we are redirected to our report in Drillbridge:

So there we have it…drill-through without the use of FDMEE. The coolest part is that this works everywhere in Planning: Planning Web Forms, Planning Ad Hoc Grids, Planning Web Forms in Smart View, Planning Ad Hoc in Smart View, and Financial Reports.

But what about PBCS? As it happens, PBCS works basically the same way as Planning. The difference is, we can’t directly deploy the drill-through definition through Drillbridge. So how do we do this? If we look at the drill-through region defined, all we really need to do is create one manually that will point back to Drillbridge. We’ll fire up PBCS and find a way…

Without EAS, Oracle has moved many of the features we would normally find there to Calculation Manager. Open Calculation Manager:

Click on the small Essbase Properties icon:

Find the application to which you wish to add drill-through and click on Drill Through Definitions:

If the database isn’t started, you will get a dialog like this:

Once the Drill Through Definitions dialog is displayed, click the plus sign:

We’ll start by entering a name and the XML contents. I copied and pasted my on-prem XML from EAS. Then click Add Region:

Next we add our region (copied and pasted from EAS, changing year to FY14 for PBCS Vision) and click Save:

Now let’s go see what happened:

Enabled for drill-through! Now let’s right-click and take it for a spin:

And let’s click Drill Through:

And just like on-prem we see our drill-through report name. Now let’s click on it and…oh no!

Okay, that might be a little dramatic. The one downside to this approach is that we are leaving PBCS to come back to an on-prem server. So it lets us know that this might not be secure. Let’s just click continue and see our data!

And there we have it…working drill-through to on-prem using something other than FDMEE. Our very own Hybrid approach. The one thing to note from above is that the circled area will not function properly. I’m re-using a report I created for my on-prem version of Vision, so it does show numbers, but in PBCS you will need to turn off anything that references the API. This also means that upper level drill-through won’t work…yet. The REST API does give us what we need to enable upper-level drill-through, so I expect this feature to be added in the future.

At the end of the day, we have basically three options for Planning drill-through:

FDMEE

Pros:

- 100% Oracle Product with Oracle Support

- Standard integration tool for the EPM stack

- Loads data, audits loads, and provides drill-through

Cons:

- Requires an additional license from Oracle

- Does not support drill-through above level 0

- Can’t bolt it onto an existing application without reloading data

- The drillable content must exist in FDMEE

- No ability to change the way the drill-through looks

Drillbridge

Pros:

- Bolts onto any existing application

- Insanely fast time to implement

- Allows for full customization of the drill-through report

- Data does not technically have to live in Essbase

- Drill at any level, not just level 0

Cons:

- Not an Oracle product; although it is supported by Applied OLAP, this can be a deal-breaker for some companies

Custom Drill-through

Pros:

- You get total control over how you enable drill-through

Cons:

- You get to do a mega-ton more work

For Planning, I think Drillbridge is a great alternative to FDMEE. This is especially true for companies that don’t actually own FDMEE. And for those of you that need upper-level drill-through, it really is the only choice short of hiring a developer to build you a custom solution.

PBCS is a little bit trickier. There are still three options available:

FDMEE

Pros:

- 100% Oracle Product with Oracle Support

- Standard integration tool for the EPM stack and you likely loaded data to PBCS using it

- Loads data, audits loads, and provides drill-through

- You can use on-premise now, or the built-in version

Cons:

- Does not support drill-through above level 0

- The drillable content must exist in FDMEE

- FDMEE on PBCS has a limited number of fields, and those fields have a character limit

- No ability to change the way the drill-through looks

Drillbridge

Pros:

- Bolts onto any existing application

- Insanely fast time to implement

- Allows for full customization of the drill-through report

- Data does not technically have to live in PBCS

Cons:

- Not an Oracle product; although it is supported by Applied OLAP, this can be a deal-breaker for some companies

- Does not yet support upper-level drill-through (more on this later)

Custom Drill-through

Pros:

- You get total control over how you enable drill-through

Cons:

- You get to do a mega-ton more work

For PBCS, right now, I would generally stick with FDMEE. Most of us are using it to load data into Planning anyway, so adding additional detail to the import format for drill-through isn’t much in the way of additional work. However…if you need upper-level drill-through, you are out of luck…for now. I fully expect that we will see a future release of Drillbridge that includes REST API integration. This means that at a minimum, it should allow for upper-level drill-through. When that happens…Drillbridge becomes a more powerful tool for drill-through for PBCS than even FDMEE.

Brian Marshall

May 3, 2016

If you are on 11.1.2.4 and you use ASO Plan Types, you may be aware of a bug that prevents drill-through from working properly. Essentially, I can define drill-through in BSO and everything works fine, but ASO just doesn’t work. You can see the intersection as being available for drill-through, but when you attempt to actually drill through…you get this:

Well that sucks. But wait, there is now hope! First, you need 11.1.2.4.004. Then, you need the recently released intermediate patch 23023767. Now if we look at the read-me, we see this:

That is not terribly promising. In fact, it says absolutely nothing about drill-through. It doesn’t even mention ASO at all. But hey, let’s follow the instructions and patch our system anyway. Flash forward a few minutes, or hours depending on your installation, and we should be ready to try it out again:

Now that looks better! Working drill-through from an ASO Plan Type! The cool part is that we can use FDMEE…or something else. Be on the lookout for another post on using Drillbridge with Planning AND PBCS.

Brian Marshall

April 26, 2016

Not to sound like a broken record, but…a few disclaimers:

- This is the fun stuff…that voids your warranty. Not that you have to tell Oracle that you poke around here, but if you do, they will blame anything and everything that’s wrong on you for being in there.

- The content here has been tested and validated against 11.1.2.3.500 and 11.1.2.4.

- The content here has been tested and validated against Microsoft SQL Server and Oracle 11g.

- The content here is based on the Vision sample application.

- The content here is totally unsupported by Oracle (in case you missed the first bullet).

So it’s been a while since we took a look at the Planning Repository. Today we’ll move past the HSP_MEMBER table (and associated tables) to look at the first of our built-in Planning dimensions: HSP_ACCOUNT. As the name indicates, the HSP_ACCOUNT table contains properties related to the account dimension in Planning. Let’s take a look at the table structure:

| Field Name | SQL Server Type | Oracle Type | Description |
| --- | --- | --- | --- |
| ACCOUNT_ID | int | NUMBER(38,0) | The OBJECT_ID of the member. |
| USE_445 | smallint | NUMBER(38,0) | If your application is set to use weekly distribution, this field will be populated when the Distribution checkbox is checked. 0=don't adjust values; 1=445 spread; 2=454 spread; 3=544 spread |
| TIME_BALANCE | smallint | NUMBER(38,0) | Determines the Essbase time balance attribute. 0=none; 1=first; 2=last; 3=average |
| SKIP_VALUE | smallint | NUMBER(38,0) | Determines the Essbase skip attribute. 0=none; 1=skip missing values; 2=skip zero values; 3=skip missing and zero values |
| ACCOUNT_TYPE | smallint | NUMBER(38,0) | Determines the account type. 1=expense; 2=revenue; 3=asset; 4=liability; 5=equity; 6=statistical; 7=saved assumption |
| VARIANCE_REP | smallint | NUMBER(38,0) | Determines the Essbase variance reporting attribute. 1=expense; 2=non-expense |
| CURRENCY_RATE | smallint | NUMBER(38,0) | If your application is set to use the built-in currency functionality, this determines which rate to use for conversion. 0=none; 1=average; 2=ending; 3=historical |
| PLANNING_METHOD | smallint | NUMBER(38,0) | No idea on this one. |
| USED_IN | smallint | NUMBER(38,0) | The plan type usage for the member. This is also a bitmask that tells us which plan types are used in a single integer. This field also appears in the HSP_MEMBER table and appears to stay in sync. |
| DATA_TYPE | smallint | NUMBER(38,0) | This field is also found in the HSP_MEMBER table. It is populated in HSP_MEMBER but is always NULL in this table. |
| SRC_PLAN_TYPE | int | NUMBER(38,0) | "Indicates the source of data value for account." - Direct from Oracle. |
| SUB_ACCOUNT_TYPE | int | NUMBER(38,0) | "Identifies the type of account and how its useds" - Direct from Oracle. |
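Since USED_IN is a bitmask, a single integer can be unpacked into plan type names with a bitwise AND. Here is a minimal sketch in Python, assuming the bits are assigned as 1=Plan1, 2=Plan2, 4=Plan3. That mapping is an assumption for illustration only, so confirm the values against your own repository before relying on it. The function name is hypothetical as well.

```python
# Assumed bit assignments for illustration; verify against your own repository.
PLAN_TYPE_BITS = {1: "Plan1", 2: "Plan2", 4: "Plan3"}


def decode_used_in(used_in, plan_type_bits=PLAN_TYPE_BITS):
    """Return the plan type names whose bit is set in a USED_IN value."""
    return [
        name
        for bit, name in sorted(plan_type_bits.items())
        if used_in & bit  # non-zero when this plan type's bit is set
    ]


print(decode_used_in(5))
```

Under the assumed mapping, a value of 5 has the 1 and 4 bits set, so the member would be valid for the first and third plan types.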

Overall, this table is pretty simple. We could probably stop there, but hey, how about a quick query:

SELECT

po.OBJECT_NAME AS Parent

,o.OBJECT_NAME AS Account

,CASE a.USE_445

WHEN 0 THEN 'None'

WHEN 1 THEN '445'

WHEN 2 THEN '454'

WHEN 3 THEN '544'

ELSE ''

END AS WeeklySpread

,CASE a.TIME_BALANCE

WHEN 0 THEN 'None'

WHEN 1 THEN 'First'

WHEN 2 THEN 'Last'

WHEN 3 THEN 'Average'

ELSE ''

END AS TimeBalance

,CASE a.SKIP_VALUE

WHEN 0 THEN 'None'

WHEN 1 THEN 'Skip Missing'

WHEN 2 THEN 'Skip Zeros'

WHEN 3 THEN 'Skip Missing and Zeros'

ELSE ''

END AS SkipValue

,CASE a.ACCOUNT_TYPE

WHEN 1 THEN 'Expense'

WHEN 2 THEN 'Revenue'

WHEN 3 THEN 'Asset'

WHEN 4 THEN 'Liability'

WHEN 5 THEN 'Equity'

WHEN 6 THEN 'Statistical'

WHEN 7 THEN 'Saved Assumption'

ELSE ''

END AS AccountType

,CASE a.VARIANCE_REP

WHEN 1 THEN 'Expense'

WHEN 2 THEN 'Non-expense'

ELSE ''

END AS VarianceReporting

,CASE a.CURRENCY_RATE

WHEN 0 THEN 'None'

WHEN 1 THEN 'Average'

WHEN 2 THEN 'Ending'

WHEN 3 THEN 'Historical'

ELSE ''

END AS CurrencyRate

FROM

HSP_ACCOUNT a

INNER JOIN

HSP_OBJECT o ON a.ACCOUNT_ID = o.OBJECT_ID

INNER JOIN

HSP_OBJECT po ON o.PARENT_ID = po.OBJECT_ID

ORDER BY

o.POSITION

This is basically a decode of all of the different fields. Here are the results:

SQL Server:

Oracle:

We’ll be keeping it simple for the next few posts, focusing on the built-in dimensions that we have left. That’s all for now!

Brian Marshall

April 17, 2016

I was reminded recently that I promised to continue with PowerShell in my last PowerShell blog post, but that I hadn’t done it yet. I may have gotten a little caught up in my home lab series. So, if you were waiting and waiting for this, my apologies.

Now that we have a little bit of PowerShell working knowledge and we have it working with MaxL, let’s get serious about some of our other batch commands. Today we’ll focus on the outline load utility for Planning. If you’ve used this utility in your batch commands before, you can probably skip the first part of this blog post. If not, let’s first talk about what the outline load utility is. It may be the worst named utility that I’ve ever used. The outline load utility doesn’t actually load an outline at all. And to make matters worse…it’s not even just a meta-data tool.

The outline load utility allows us to load meta-data, numeric data, text-based data, build smart lists, and generally manage the back-end of Hyperion Planning from a batch script (or PowerShell). Today we’ll focus on getting the utility working in PowerShell. So where is this mythical utility? You should be able to find it here:

<epm install drive>:\Oracle\Middleware\user_projects\epmsystem1\Planning\planning1

You will actually find quite a few utilities in this directory. There are utilities for imports, exports, and running business rules. This directory is why I will never wake up one day and say “I wish I had something to blog about.” I’ll literally never run out of content with all of these hidden gems out there. Here’s a sample:

Next we need to decide what we want to do with the utility and give it a shot. There are so many things that we can do with it, I’m going to stick with something really simple and use the 11.1.2.3.500 Sample Vision app for the demonstration. This way you should all be able to reproduce what we are doing.

So let’s first log into our Vision sample app and take an export of the Product dimension. Once inside our app, click on Administration then Import and Export and then Export Metadata to File.

Select our Product dimension from the list and click Run and we should have a great file to start playing with. When prompted, just save the file away and we’ll come back for it later.

Now let’s get back to our Planning server and start up PowerShell. Once we have our window open, let’s just change our directory and run the CMD file and see what happens:

You should get something totally useful like this:

Before we go any further, we need to go ahead and generate a password file for the load utility. This will allow us to execute our PowerShell script without needing to enter a password each time. To do this we just type in PasswordEncryption.cmd PasswordFile.pass. It should prompt you for a password. This will be the password to the username that we will eventually tell the load utility to use. Enter your password, press enter, and the file we need should be produced.

Now we are ready to load the file that we exported earlier from Workspace. I placed my exported file directly into the planning1 folder with the utility for my first execution just to make things simple. So first let’s look at the command, then we’ll pick it apart:

.\OutlineLoad.cmd -f:PasswordFile.pass /A:Vision /U:admin /I:admin_ExportedMetadata_Product.csv /D:Product /L:Product.log /X:Product.exc

| Parameter | Description | Value |
| --- | --- | --- |
| -f: | Specifies the password file that we just generated so that we don't need to enter a password. | PasswordFile.pass |
| /A | Specifies the application that we are connecting to. | Vision |
| /U | Specifies the username that we are connecting with. | admin |
| /I | Specifies the file that we will be importing. | admin_ExportedMetadata_Product.csv |
| /D | Specifies the dimension that we will be loading. | Product |
| /L | Specifies the file to log our results to. | Product.log |
| /X | Specifies the file to write exceptions to. | Product.exc |
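The parameter table above maps directly onto an argument list. For anyone scripting this outside of PowerShell, here is a rough Python sketch that assembles the same command from its parts. It uses only the switches shown in the table; the function name is hypothetical, and the block only builds the list rather than running the utility.

```python
from pathlib import PureWindowsPath


def build_outline_load_args(planning_path, password_file, app, user,
                            import_file, dimension, log_file, exc_file):
    """Return the OutlineLoad.cmd command line as a list of arguments."""
    utility = PureWindowsPath(planning_path) / "OutlineLoad.cmd"
    return [
        str(utility),
        f"-f:{password_file}",  # encrypted password file
        f"/A:{app}",            # application
        f"/U:{user}",           # username
        f"/I:{import_file}",    # file to import
        f"/D:{dimension}",      # dimension to load
        f"/L:{log_file}",       # log file
        f"/X:{exc_file}",       # exception file
    ]


args = build_outline_load_args(
    r"C:\Oracle\Middleware\user_projects\epmsystem1\Planning\planning1",
    "PasswordFile.pass", "Vision", "admin",
    "admin_ExportedMetadata_Product.csv", "Product",
    "Product.log", "Product.exc",
)
print(" ".join(args))
```

Keeping the arguments as a list rather than one long string avoids quoting problems if a folder name ever contains a space.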

So what happens?

Success! And how about our log and exception files?

Great…they are there. Now let’s make this something worthy of PowerShell. To do that, we’ll make everything into a variable. I’ve created a folder on my Planning server to store my files and referenced that folder in the following PowerShell script:

###############################################################################

#Created By: Brian Marshall

#Created Date: 12/16/2015

#Purpose: Sample PowerShell Script for HyperionEPM.com

###############################################################################

###############################################################################

#Variable Assignment

###############################################################################

$PlanningPath = "C:\Oracle\Middleware\user_projects\epmsystem1\Planning\planning1"

$PlanningUsername = "admin"

$PlanningPasswordFile = "PasswordFile.pass"

$PlanningApp = "Vision"

$PlanningDim = "Product"

$PlanningDimFile = "admin_ExportedMetadata_Product.csv"

$PlanningWorkingFolder = "C:\Data\HyperionEPM\PowerShell"

###############################################################################

#Outline Load Utility Execution

###############################################################################

& $PlanningPath\OutlineLoad.cmd -f:$PlanningPath\$PlanningPasswordFile /A:$PlanningApp /U:$PlanningUsername /I:$PlanningWorkingFolder\$PlanningDimFile /D:$PlanningDim /L:$PlanningWorkingFolder\$PlanningDim.log /X:$PlanningWorkingFolder\$PlanningDim.exc

Along with the file above, I’ve placed the Product file that we exported from Workspace. I’ve set the PlanningWorkingFolder variable to reflect the name of my folder and I should be ready to execute my new Product PowerShell script. But wait…I don’t want to schedule this process to run on the Planning server and another process to run on the Essbase server. I need them to run from the same place. We’ll cover that exact need in our next post…stay tuned!

Brian Marshall

February 29, 2016
