Welcome to the seventh part in an ongoing blog series about Essbase performance. Today we will focus on Essbase Physical vs. Virtual Performance, but first here’s a summary of the series so far:

I started sharing actual Essbase benchmark results in part six of this series, focusing on Hyper-Threading. Today I’ll shift gears away from physical Essbase testing and focus on virtualized Essbase.

Testing Configuration

All testing is performed on a dedicated benchmarking server. This server changes software configurations between Windows and ESXi, but the hardware configuration is static. Here is the physical configuration of the server:

I partitioned each of the storage devices into equal halves: one half for use in the Windows installation on the physical hardware and one half for use in the VMware ESXi installation. Here is the virtual configuration of the server:

| Component | Configuration |
| --- | --- |
| Processors | 32 vCPU (Intel Xeon E5-2670) |
| Memory | 96GB RAM (DDR3 1600) |
| Solid State Storage | (4) Samsung 850 EVO 250 GB on LSI SAS in RAID 0 (Data store) |
| Solid State Storage | (1) Samsung 850 EVO 250 GB on LSI SAS (Data store) |
| NVMe Storage | Intel P3605 1.6TB AIC (Data store) |
| Hard Drive Storage | (12) Fujitsu MBA3300RC 300GB 15000 RPM on LSI SAS in RAID 10 (Data store) |
| Network Adapter | Intel X520-DA2 Dual Port 10 Gbps Network Adapter |

Network Storage

Before we get too far into the benchmarks, I should probably also talk about iSCSI and network storage. iSCSI is one of the many options out there to provide network-based storage to servers, and it is one implementation used by many enterprise storage area networks (SANs). I implemented my own network attached storage device so that I could test out this type of storage. Network storage is even more common today because most virtual clusters require some sort of network storage to provide fault tolerance. Essentially, you have one storage array that is shared by multiple hosts. This simplifies back-ups and maintains high availability and performance.

There is however a problem. Essbase is heavily dependent on disk performance. Latency of any kind will harm performance because as latency increases, random I/O performance decreases. So why does network storage have a higher latency? Let’s take a look at our options.

Physical Server with Local Storage

In this configuration, the disk is attached to the physical server and the latency is contained inside of the system. Think of it like a train: the longer we travel on a track, the longer it takes to reach our destination and return home.

Virtual Server with Local Storage

With virtual storage, we have a guest server that sends its I/O requests to the host server, and the host server has the disk attached. Now our train is traveling on a little bit more track, so latency increases and performance decreases.

Physical Server with Network Storage

Our train track seems to be getting longer by the minute. With network storage on a physical server, we now have our physical server communicating with our file server that has a disk attached. This adds an additional stop.

Virtual Server with Network Storage

This doesn’t look promising at all. Our train now makes a pretty long round-trip to reach its destination. So performance might not be great on this option for Essbase. That’s unfortunate, as this is a very common configuration. Let’s see what happens.
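The train analogy can be put into rough numbers. With only one request in flight at a time (queue depth 1), the number of I/O operations per second is simply one divided by the round-trip latency, so every extra stop on the track lowers random I/O performance. Here is a minimal Python sketch of that idea. The per-hop latencies are made-up placeholders chosen only to show the shape of the effect; they are not measurements from this server.

```python
# Illustrative only: these per-hop latencies (in microseconds) are invented
# placeholders, not measurements from the benchmark server.
HOP_LATENCY_US = {
    "disk": 100.0,        # the storage device itself
    "hypervisor": 20.0,   # guest -> host I/O path
    "network": 200.0,     # server -> file server round trip
}

# The four options described above, expressed as the stops the train makes.
CONFIGURATIONS = {
    "Physical, local storage": ["disk"],
    "Virtual, local storage": ["hypervisor", "disk"],
    "Physical, network storage": ["network", "disk"],
    "Virtual, network storage": ["hypervisor", "network", "disk"],
}


def qd1_iops(hops):
    """IOPS with one outstanding request: 1 second / total round-trip latency."""
    total_us = sum(HOP_LATENCY_US[hop] for hop in hops)
    return 1_000_000 / total_us


for name, hops in CONFIGURATIONS.items():
    print(f"{name:28s} {qd1_iops(hops):8.0f} IOPS at queue depth 1")
```

Higher queue depths hide some of this latency, which is why the effect tends to show up most clearly in low queue depth random I/O.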

EssBench Application

The application used for all testing is EssBench. This application has the following characteristics:

- Dimensions

- Account (1025 members, 838 stored)

- Period (19 members, 14 stored)

- Years (6 members)

- Scenario (3 members)

- Version (4 members)

- Currency (3 members)

- Entity (8767 members, 8709 stored)

- Product (8639 members, 8639 stored)

- Data

- 8 Text files in native Essbase load format

- 1 Text file in comma separated format

- PowerShell Scripts

- Creates Log File

- Executes MaxL Commands

- MaxL Scripts

- Resets the cube

- Loads data (several rules)

- Aggs the cube

- Executes allocation

- Aggs the allocated data

- Executes currency conversion

- Restructures the database

- Executes the MaxL script three times

Testing Methodology

For these benchmarks to be meaningful, we need to be consistent in the way that they are executed. The tests in both the physical and virtual environment were kept exactly the same on an Essbase level. Today I will be focusing on native Essbase loads. The process takes eight (8) native Essbase files produced from a parallel export and loads them into Essbase using a parallel import. Because this is a test of loading data, CALCPARALLEL and RESTRUCTURETHREADS have no impact. Let’s take a look at the steps used to perform these tests:

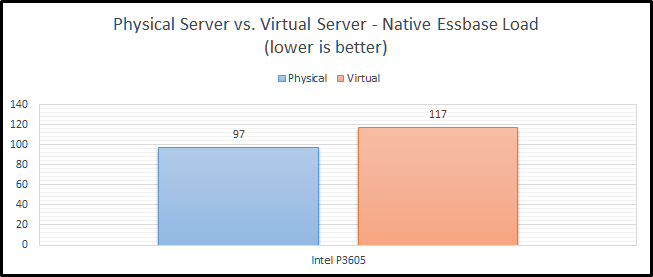

- Physical Test – Intel P3605

- A new instance of EssBench was configured using the application name EssBch06.

- The data storage was changed to the Intel P3605 drive.

- The application was restarted.

- The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

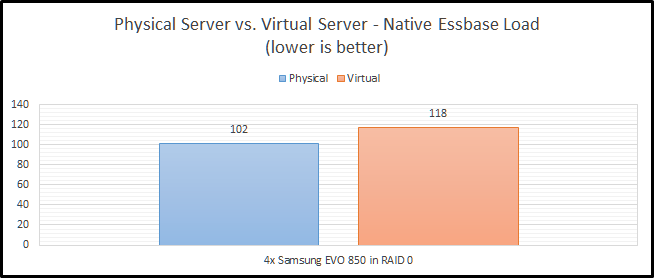

- Physical Test – SSD RAID

- A new instance of EssBench was configured using the application name EssBch08.

- The data storage was changed to the SSD RAID drive.

- The application was restarted.

- The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

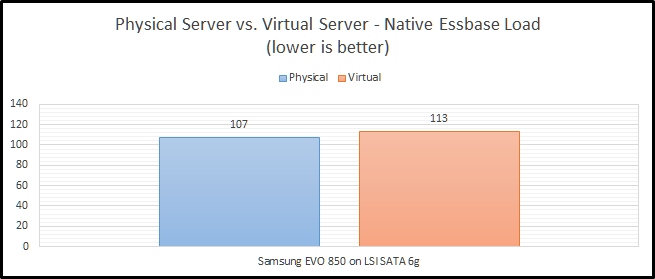

- Physical Test – Single SSD

- A new instance of EssBench was configured using the application name EssBch09.

- The data storage was changed to the single SSD drive.

- The application was restarted.

- The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

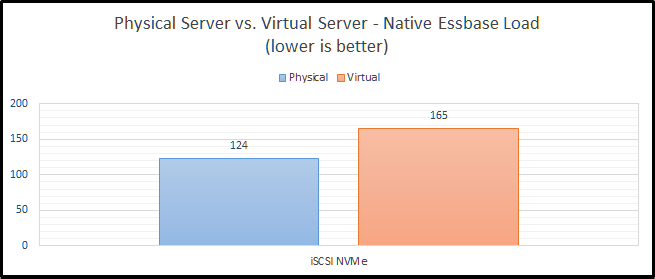

- Physical Test – iSCSI NVMe

- A new instance of EssBench was configured using the application name EssBch10.

- The data storage was changed to the iSCSI NVMe drive.

- The application was restarted.

- The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

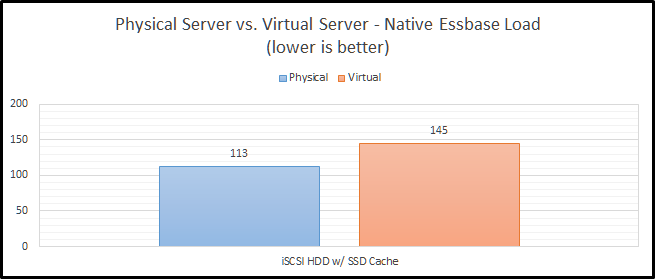

- Physical Test – iSCSI HDD w/ SSD Cache

- A new instance of EssBench was configured using the application name EssBch11.

- The data storage was changed to the iSCSI HDD drive.

- The application was restarted.

- The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

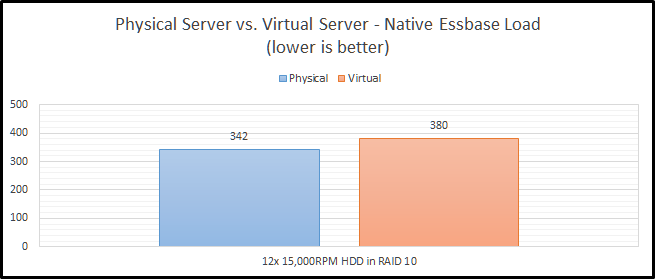

- Physical Test – HDD RAID

- A new instance of EssBench was configured using the application name EssBch07.

- The data storage was changed to the HDD RAID drive.

- The application was restarted.

- The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

- Virtual Test – Intel P3605

- A new instance of EssBench was configured using the application name EssBch15.

- The data storage was changed to the Intel P3605 drive.

- The application was restarted.

- The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

- Virtual Test – SSD RAID

- A new instance of EssBench was configured using the application name EssBch16.

- The data storage was changed to the SSD RAID drive.

- The application was restarted.

- The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

- Virtual Test – Single SSD

- A new instance of EssBench was configured using the application name EssBch17.

- The data storage was changed to the single SSD drive.

- The application was restarted.

- The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

- Virtual Test – iSCSI NVMe

- A new instance of EssBench was configured using the application name EssBch18.

- The data storage was changed to the iSCSI NVMe drive.

- The application was restarted.

- The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

- Virtual Test – iSCSI HDD w/ SSD Cache

- A new instance of EssBench was configured using the application name EssBch19.

- The data storage was changed to the iSCSI HDD drive.

- The application was restarted.

- The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

- Virtual Test – HDD RAID

- A new instance of EssBench was configured using the application name EssBch14.

- The data storage was changed to the HDD RAID drive.

- The application was restarted.

- The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.
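Every test above follows the same pattern: run the process three times and average the results. A minimal Python sketch of that timing loop is below. The `run` callable is a hypothetical stand-in for whatever launches the EssBench process; the actual EssBench automation uses PowerShell and MaxL, so treat this as an illustration of the averaging step only.

```python
import time
from statistics import mean


def time_runs(run, repeats=3):
    """Call run() `repeats` times and return the elapsed seconds of each call."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        run()
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations):
    """Bundle the individual run times with their average."""
    return {"runs": durations, "average": mean(durations)}


if __name__ == "__main__":
    # Placeholder workload; swap in a function that launches the real process.
    result = summarize(time_runs(lambda: sum(range(100_000))))
    print(f"average of {len(result['runs'])} runs: {result['average']:.4f}s")
```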

So…that was a lot of testing. Luckily, that set of steps will apply to the next several blog posts.

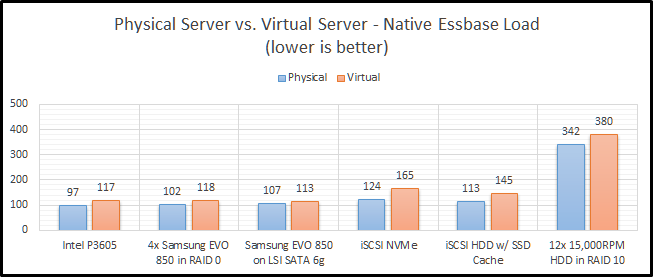

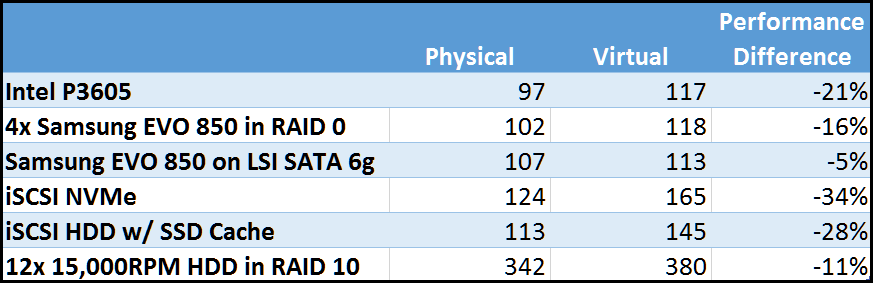

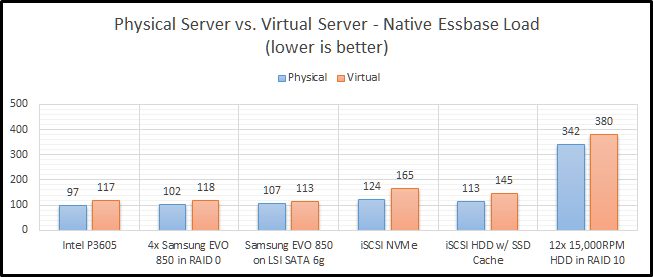

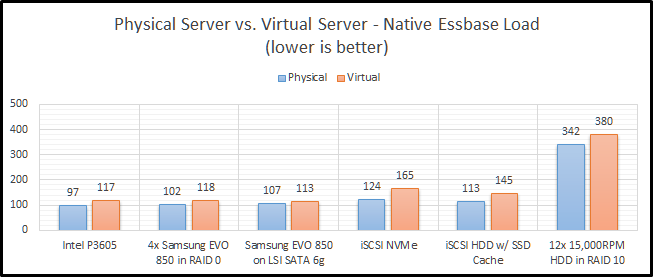

Physical vs. Virtual Test Results

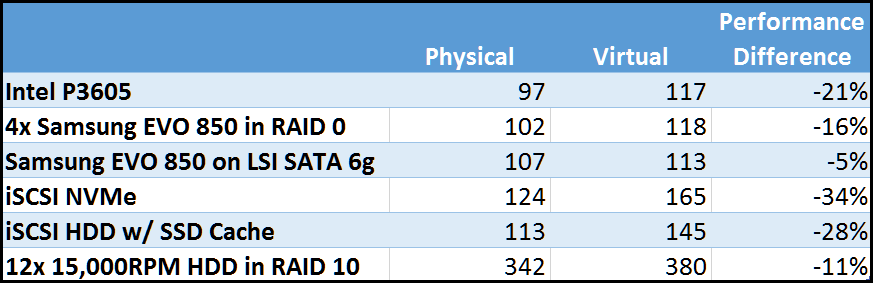

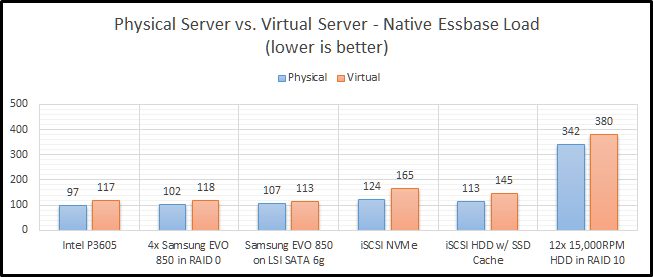

This is a very simple test. It really just needs a disk drive that can provide adequate sequential writes, and things should be pretty fast. So how did things end up? Let’s break it down by storage type.

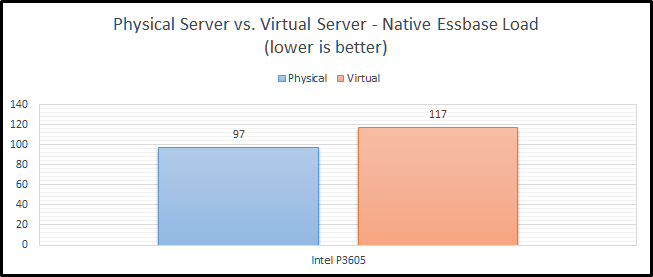

Intel P3605

The Intel P3605 is an enterprise-class NVMe PCIe SSD. Beyond all of the acronyms, it’s basically a super-fast SSD. When comparing these two configurations, we see that clearly the physical server is much faster (21%).
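A percentage like this can be computed two ways, and the two give different answers for the same pair of timings. The post does not say which definition was used, so the sketch below shows both. The timings in the example are invented purely to illustrate the arithmetic; they are not the benchmark results.

```python
def percent_slower(physical_seconds, virtual_seconds):
    """Extra time the virtual run took, relative to the physical run."""
    return 100.0 * (virtual_seconds - physical_seconds) / physical_seconds


def percent_faster(physical_seconds, virtual_seconds):
    """Time the physical run saved, relative to the virtual run."""
    return 100.0 * (virtual_seconds - physical_seconds) / virtual_seconds


# Hypothetical timings, in seconds, chosen only to show the difference.
physical, virtual = 100.0, 121.0
print(f"virtual is {percent_slower(physical, virtual):.1f}% slower")
print(f"physical is {percent_faster(physical, virtual):.1f}% faster")
```

When comparing your own results against the numbers here, it is worth picking one definition and sticking with it.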

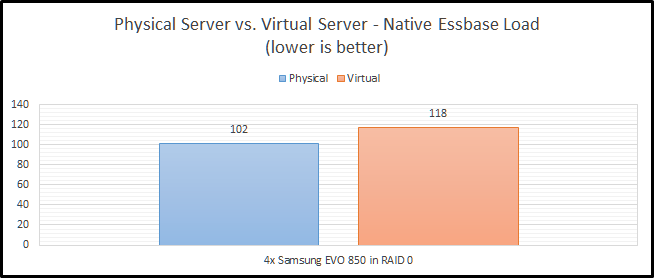

SSD RAID

I’ve chosen to implement SSD RAID using a physical LSI 9265-8i SAS controller with 1GB of RAM. The drives are configured in RAID 0 to get the most performance possible. RAID 1+0 is preferred, but then I would need eight drives to get the same performance level. The finance committee (my wife) would frown on another four drives. Our SSD RAID option is still faster on a physical server, as we would expect. The difference however is lower at 16%. It is also worth noting that it is basically the same speed as our NVMe option in the virtual configuration. I believe this is likely due to the drivers for Windows being better for the NVMe SSD than they are for ESXi.
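The "eight drives" figure follows from how the two RAID levels lay out data. RAID 0 stripes across every drive, while RAID 1+0 stripes across mirrored pairs, so only half of the drives add to write throughput. A small Python sketch of that arithmetic, as a simplification that ignores controller cache and read-side gains from mirrors:

```python
def raid0_write_stripes(drives):
    """RAID 0: every drive is its own stripe member."""
    return drives


def raid10_write_stripes(drives):
    """RAID 1+0: drives are mirrored in pairs, and the pairs are striped."""
    if drives < 2 or drives % 2:
        raise ValueError("RAID 1+0 needs an even number of drives (at least 2)")
    return drives // 2


print(raid0_write_stripes(4))    # the four-SSD RAID 0 array
print(raid10_write_stripes(8))   # eight drives needed to match it in RAID 1+0
print(raid10_write_stripes(12))  # the twelve-drive HDD RAID 10 array
```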

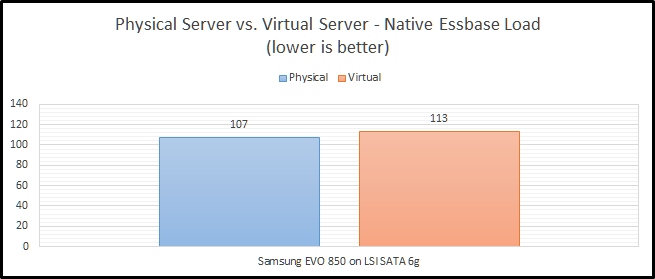

Single SSD

How did our single SSD fare? Pretty well actually with only a 5% decrease in performance going to a virtual environment. In our virtual environment, we actually see that it is faster than both our NVMe and SSD RAID options. But…they are pretty close. We’ll wait to see how everything performs in later blog posts before we draw any large conclusions from this test.

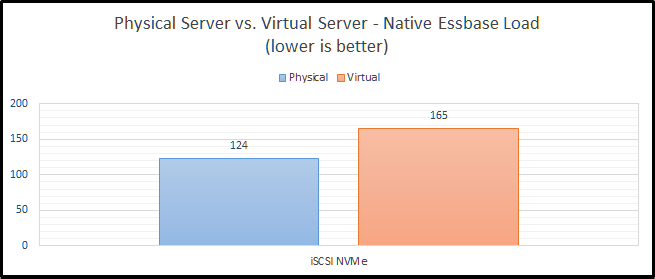

iSCSI NVMe

Now we can move on to a little bit more interesting set of benchmarks. Network storage never seems to work out at my clients. It especially seems to struggle as they go virtual. Here, we see that NVMe over iSCSI performs “okay” on a physical system, but not so great on a virtual platform. The difference is a staggering 34%.

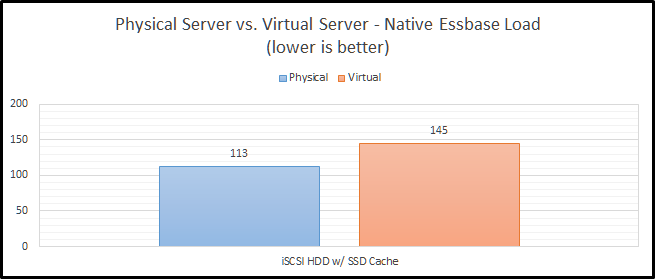

iSCSI HDD w/ SSD Cache

Staying on the topic of network storage, I also tested out a configuration with hard drives and an SSD cache. The problem with this test is that I’m the only user on the system and the cache is a combination of 200GB of SSD and a ton of RAM. Between those two things, I don’t think it ever leaves the cache. The difference between physical and virtual is still pretty bad at 28%. I still can’t explain why this configuration is faster than the NVMe configuration, but my current guess is that it is related to drivers and/or firmware in FreeBSD.

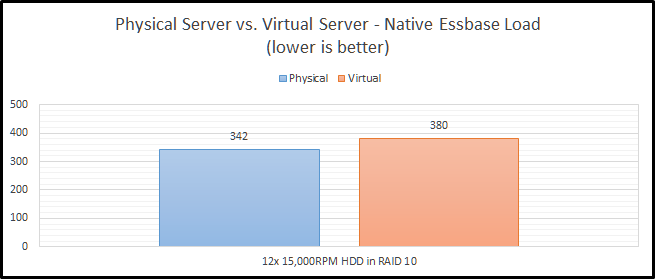

HDD RAID

Finally, we come to our slowest option…old-school hard drives. It isn’t just a little slower…it is a LOT slower. This actually uses the same RAID card that I am using for the SSD RAID configuration. These drives are configured in RAID 1+0. With the SSDs, I was trying to see how fast I can make it. With the HDD configuration, I was really trying to test out a real-world configuration. RAID 0 has no redundancy, so it is very uncommon with hard drives. We see here that the hard drive configuration is only 11% slower in a virtual configuration than in a physical configuration. We’ll call that the silver lining.

Summarized Essbase Physical vs. Virtual Performance Results

Let’s look at all of the results in a grid:

And as a graph:

Conclusion

In my last post, I talked about preconceived notions. In this post, I get to confirm one of those. Physical hardware is faster than virtual hardware. This shouldn’t be shocking to anyone. But, I like having a percentage and seeing the different configurations. For instance, network storage in this instance is an upgrade compared to regular hard drives. But if you are going from local SSD storage to network storage, you will end up slower on two fronts. First, you obviously lose speed going to a virtual environment. Next, you also lose speed going to the network.

Brian Marshall

July 12, 2017

Essbase Performance Series

Welcome to the sixth part in an ongoing blog series about Essbase performance. Here’s a summary of the series so far:

Now that EssBench is official, I’m ready to start sharing the benchmarks in Essbase and some explanation. Much of this was covered in my Kscope17 presentation, but if you download the PowerPoint, it lacks some context. My goal is to provide that context via my blog. We’ll start off with actual Essbase benchmarks around Hyper-Threading. For years I’ve always heard that Hyper-Threading was something you should always turn off. This normally led to an argument with IT about how you even go about turning it off and if they would support turning it off. But before we get too far, let’s talk about what Hyper-Threading is.

What is Hyper-Threading

Hyper-Threading is Intel’s implementation of a very old technology known as Simultaneous Multi-Threading (SMT). Essentially it allows each core of a processor to address two threads at once. The theory here is that you can make use of otherwise idle CPU time. The problem is that at the time of release in 2002 in the Pentium 4 line, the operating system implementation of the technology was not great. Intel claimed that it would increase performance, but it was very hit and miss depending on your application. At the time, it was considered a huge miss for Essbase. Eventually Intel dropped Hyper-Threading for the next generation of processors.

The Return of Hyper-Threading

With the release of the Xeon X5500 series processors, Intel re-introduced the technology. This is where it gets interesting. The technology this time around wasn’t nearly as bad. With the emergence of hypervisors and a massive shift in that direction, Hyper-Threading can actually provide a large benefit. In a system with 16 cores, now guests can address 32 threads. In fact, if you look at the Oracle documentation for their relational technology, they recommend leaving it on starting with the X5500 series of processors. With that knowledge, I decided to see how it would perform in Essbase.

Testing Configuration

All testing is performed on a dedicated benchmarking server. This server changes software configurations between Windows and ESXi, but the hardware configuration is static:

One important note on hardware configuration. All of the benchmarks that have been run are using the same NVMe storage. This is our fastest storage option available, so this should ensure that we don’t skew the results with hardware limitations.

EssBench Application

The application used for all testing is EssBench. This application has the following characteristics:

- Dimensions

- Account (1025 members, 838 stored)

- Period (19 members, 14 stored)

- Years (6 members)

- Scenario (3 members)

- Version (4 members)

- Currency (3 members)

- Entity (8767 members, 8709 stored)

- Product (8639 members, 8639 stored)

- Data

- 8 Text files in native Essbase load format

- 1 Text file in comma separated format

- PowerShell Scripts

- Creates Log File

- Executes MaxL Commands

- MaxL Scripts

- Resets the cube

- Loads data (several rules)

- Aggs the cube

- Executes allocation

- Aggs the allocated data

- Executes currency conversion

- Restructures the database

- Executes the MaxL script three times

Testing Methodology

For these benchmarks to be meaningful, we need to be consistent in the way that they are executed. This particular set of benchmarks requires a configuration change at the system level, so it will be a little less straightforward than the future methodology. Here are the steps performed:

- With Hyper-Threading enabled, CALCPARALLEL and RESTRUCTURETHREADS were both set to 32. A new instance of EssBench was configured using the application name EssBch01. The EssBench process was run for this instance and the results collected. The three sets of results were averaged and put into the chart and graph below.

- With Hyper-Threading enabled, CALCPARALLEL and RESTRUCTURETHREADS were both set to 16. Another new instance of EssBench was configured using the application name EssBch06. The EssBench process was run and the results collected. The three sets of results were averaged and put into the chart and graph below.

- With Hyper-Threading enabled, CALCPARALLEL and RESTRUCTURETHREADS were both set to 8. Another new instance of EssBench was configured using the application name EssBch05. The EssBench process was run and the results collected. The three sets of results were averaged and put into the chart and graph below.

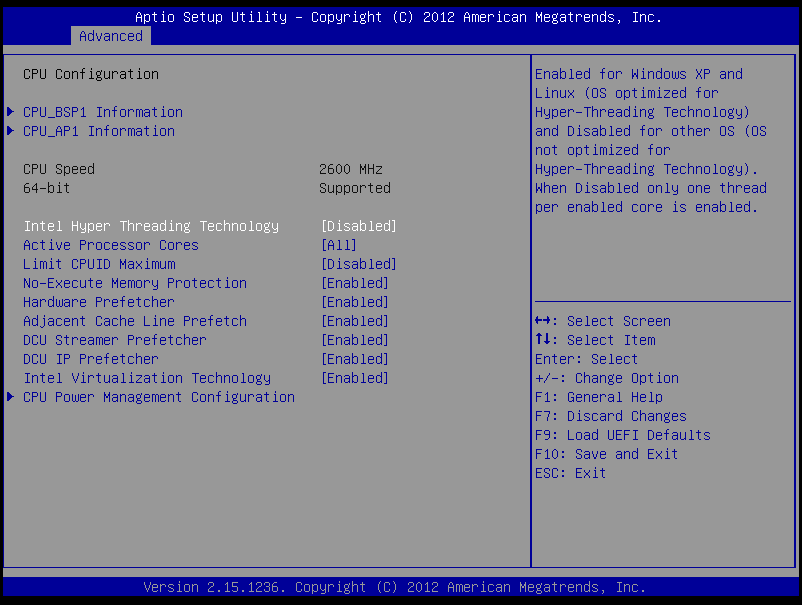

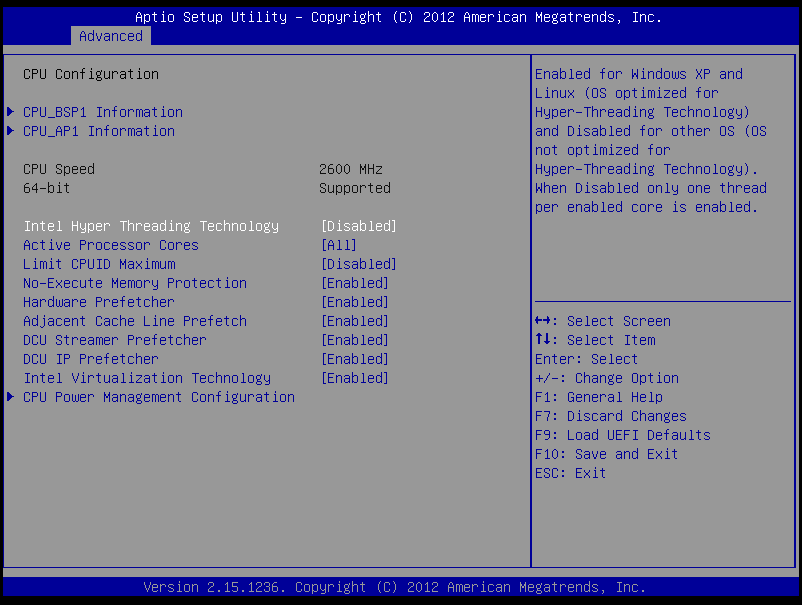

- Now we disable Hyper-Threading:

- With Hyper-Threading disabled, CALCPARALLEL and RESTRUCTURETHREADS were both set to 16. Another new instance of EssBench was configured using the application name EssBch02. The EssBench process was run and the results collected. The three sets of results were averaged and put into the chart and graph below.

- With Hyper-Threading disabled, CALCPARALLEL and RESTRUCTURETHREADS were both set to 8. Another new instance of EssBench was configured using the application name EssBch04. The EssBench process was run and the results collected. The three sets of results were averaged and put into the chart and graph below.

Essbase and Hyper-Threading

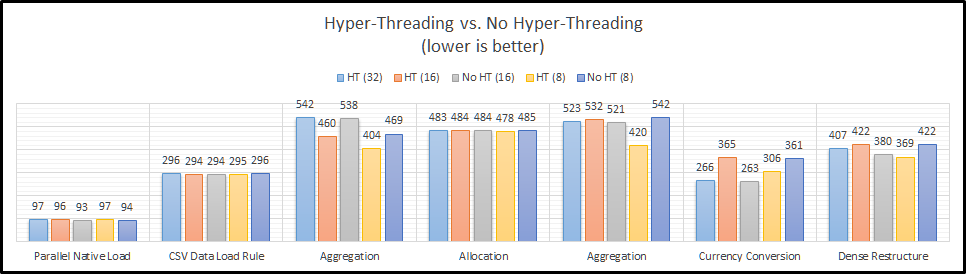

Let’s start by taking a look at each step of EssBench individually. I have some theories on each of these…but they could also just be the ramblings of a nerd recovering from Kscope17.

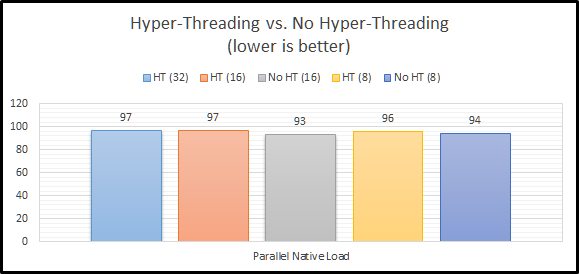

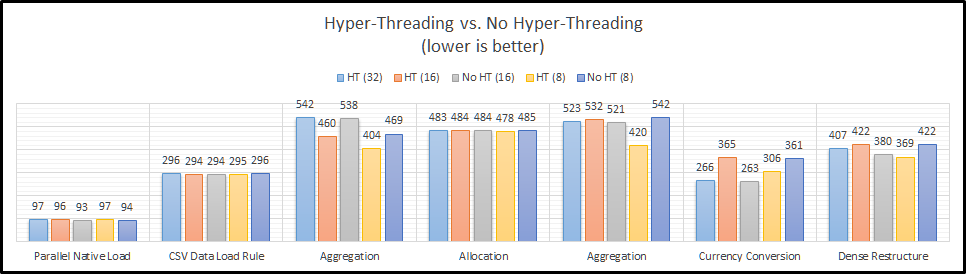

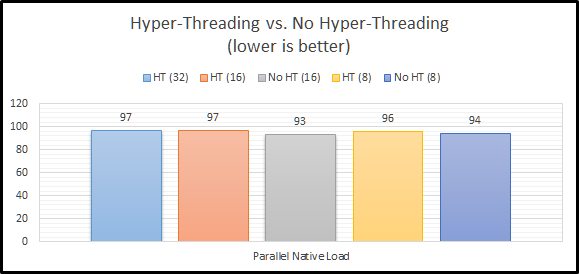

Parallel Native Load

The parallel native load uses eight (8) text files and loads them into the cube in parallel. This means that the difference between our CALCPARALLEL and RESTRUCTURETHREADS should have no bearing on the results. The most interesting part about this benchmark is that all of the results are within 5% of each other. The first thing I notice is that they are not massively different when comparing Hyper-Threading enabled and Hyper-Threading disabled. Let’s move on to a single-threaded load.

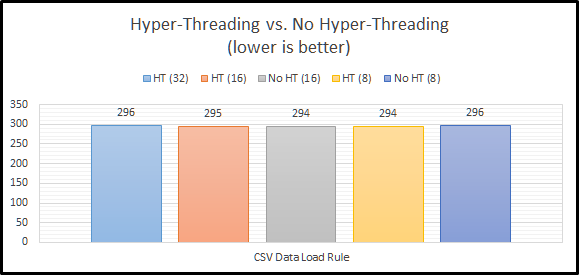

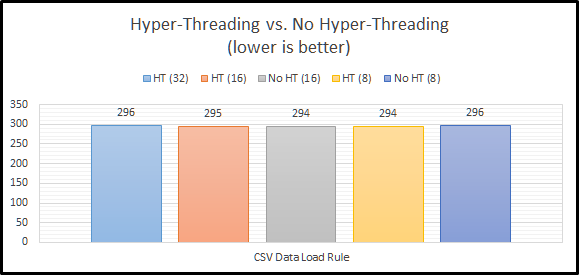

CSV Data Load Rule

The next step in the benchmark simply loads a CSV file using an Essbase load rule. This is a single-threaded operation and again our settings outside of Hyper-Threading should have no bearing. And again, the most interesting thing about these results…they are within 1% of each other. So far…Hyper-Threading doesn’t seem to make a difference either way. What about a command that can use all of our threads?

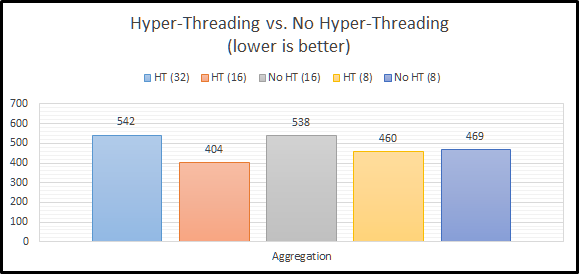

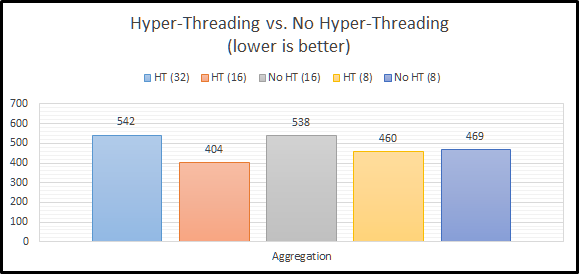

Aggregation

Now we have something to look at! We can clearly see that Hyper-Threading appears to massively improve performance for aggregations. This actually makes sense. With Hyper-Threading enabled, we have 32 logical cores for Essbase to use. With it disabled, we have 16. If we max out the settings for both, it would seem that we don’t have enough I/O performance to keep up. We can somewhat confirm this theory by looking at the results for CALCPARALLEL 8. When we attempt to only use half of our available CPU resources, there’s basically no difference in performance.

So is Hyper-Threading better, or worse for aggregation performance? The answer actually appears to be…indifferent. If we compare our two CALCPARALLEL 16 results, you might think that Hyper-Threading is what seems to be making things faster. But if we look at our setting of 8, we know that doesn’t make sense. Instead, I think what we are witnessing is the ability of Hyper-Threading to allow our system to really use cycles that otherwise go to waste. Our two settings that push our system to the max have the worst results. Essbase is basically taking all of the threads and keeping them to itself.

However, using 16 threads and having Hyper-Threading turned on seems to allow the operating system to put the additional 16 threads to good use for any overhead going on in the background. Basically, the un-used CPU time can be used if Hyper-Threading is turned on. Without that headroom, the system seems to get overcome by Essbase and things slow down. So far, it looks like our results tell us we should definitely use Hyper-Threading. They also indicate that Essbase doesn’t really seem to do well when it takes the entire server! If we consult the Essbase documentation, it actually suggests that CALCPARALLEL works best at 8.
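One way to keep the five runs straight is to write the test matrix down and flag the runs where the thread setting equals the number of logical cores, since those are the two that "push the system to the max". The sketch below uses the application names and settings from the methodology section; the `Run` class and the `saturates_server` rule are illustrative constructs, not part of EssBench.

```python
from dataclasses import dataclass

PHYSICAL_CORES = 16  # 16 cores, or 32 logical cores with Hyper-Threading on


@dataclass(frozen=True)
class Run:
    app: str
    hyper_threading: bool
    threads: int  # value used for both CALCPARALLEL and RESTRUCTURETHREADS

    @property
    def logical_cores(self):
        return PHYSICAL_CORES * (2 if self.hyper_threading else 1)

    @property
    def saturates_server(self):
        # True when Essbase is allowed to claim every logical core.
        return self.threads >= self.logical_cores


RUNS = [
    Run("EssBch01", hyper_threading=True, threads=32),
    Run("EssBch06", hyper_threading=True, threads=16),
    Run("EssBch05", hyper_threading=True, threads=8),
    Run("EssBch02", hyper_threading=False, threads=16),
    Run("EssBch04", hyper_threading=False, threads=8),
]

for run in RUNS:
    note = "uses every logical core" if run.saturates_server else "leaves headroom"
    print(f"{run.app}: HT={'on' if run.hyper_threading else 'off'}, "
          f"threads={run.threads}/{run.logical_cores} ({note})")
```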

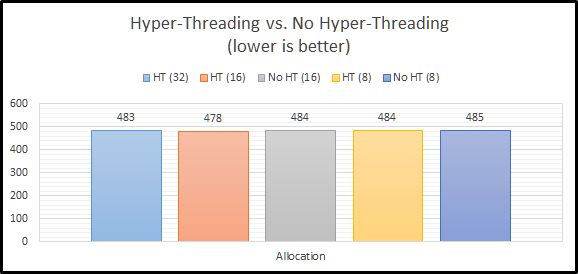

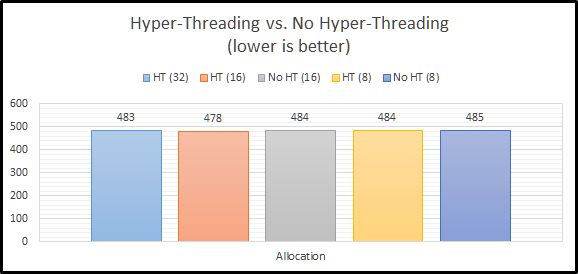

Allocation

The allocation script actually ignores our setting for CALCPARALLEL altogether. How? It uses FIXPARALLEL. On a non-Exalytics system, FIXPARALLEL has a maximum setting of 8. We again see an instance of Hyper-Threading having no impact on performance. I believe that there are two reasons for this. First, we have plenty of I/O performance to keep up with 8 threads. Second, there is no need to use the un-used CPU time. There are plenty of CPU resources to go around. The good news here is that performance doesn’t go down at all. This again seems to support leaving Hyper-Threading enabled.

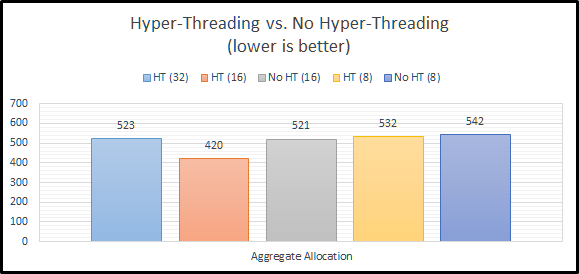

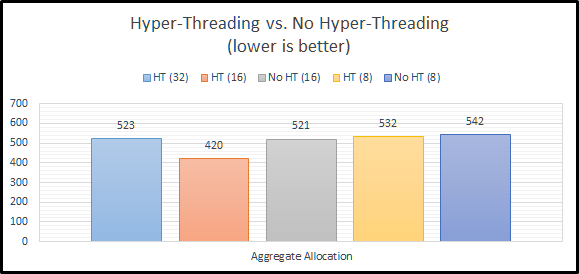

Aggregate Allocation

With our allocation calculation complete, the next step in EssBench is to aggregate that newly created data. These results should mirror the results from our initial aggregation. And…they do for the most part. The results with CALCPARALLEL 8 are slower here. The results for CALCPARALLEL 16 are also slower. This is likely due to the additional random read I/O required to find all newly stored allocated data. The important thing to note here…CALCPARALLEL seems to love Hyper-Threading…as long as you have enough I/O performance, and you don’t eat the entire server.

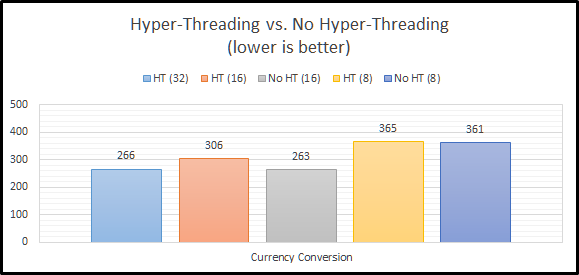

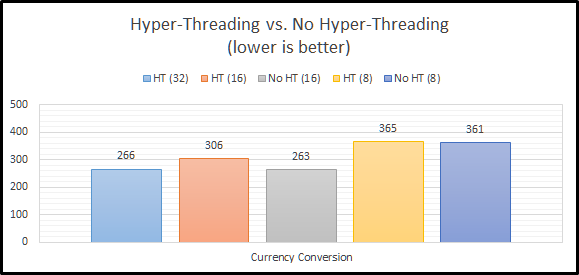

Currency Conversion

Our next step in the EssBench process…currency conversion. In this instance, it seems that the efficiency that Hyper-Threading gives us doesn’t actually help. Why? I’m guessing here, but I believe Essbase is getting more out of each core and I/O is not limiting performance here. If we look at CALCPARALLEL 8, the results are essentially the same. It seems that Essbase can actually use more of each processing core with the available I/O resources. So…does this mean that Hyper-Threading is actually slower here? On a per core basis…yes. But, we can achieve the same results with Hyper-Threading enabled, we just need to use more logical cores to do so.

Dense Restructure

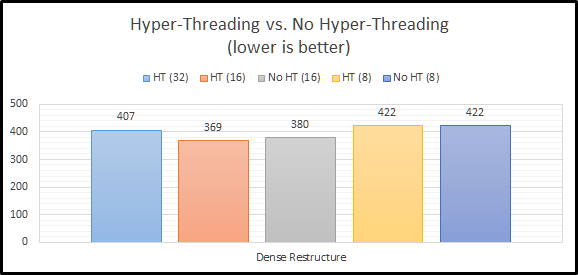

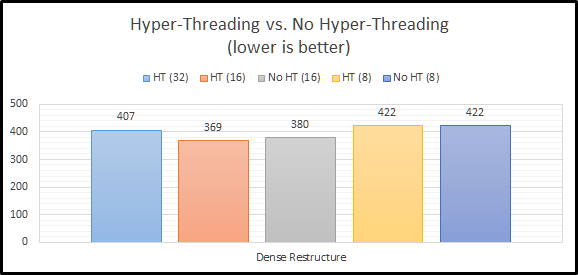

The last step in the EssBench benchmark is to force a dense restructure. This seems to bring us back to Essbase being somewhat Hyper-Threading indifferent. The Hyper-Threaded testing shows a very small gain, but nothing meaningful.

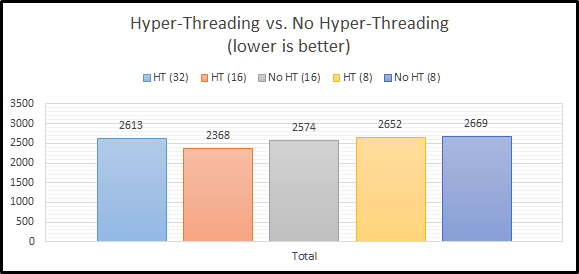

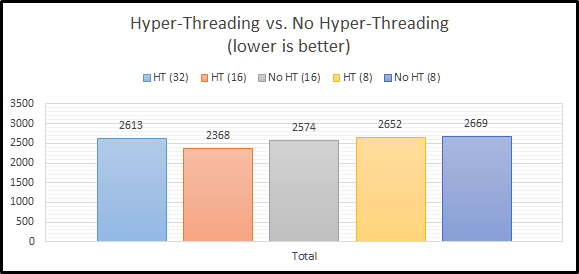

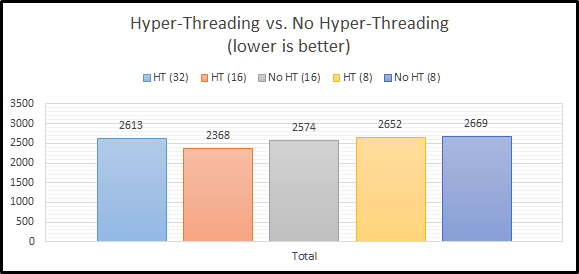

Total Benchmark Time

When we look at the final total results, we can see that Hyper-Threading overall performs better than or basically the same as no Hyper-Threading. This definitely goes against the long-standing recommendation to turn off Hyper-Threading.

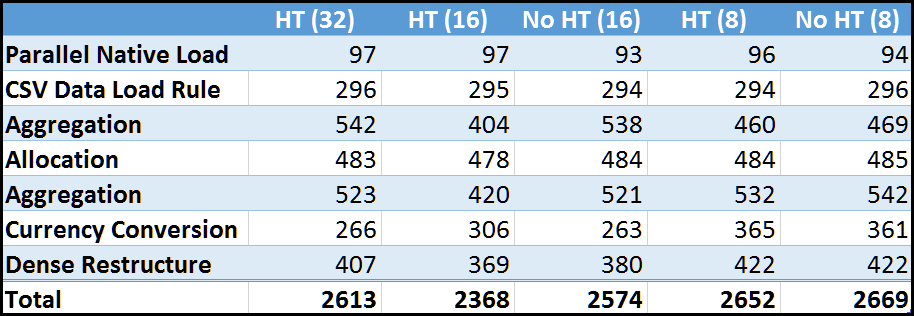

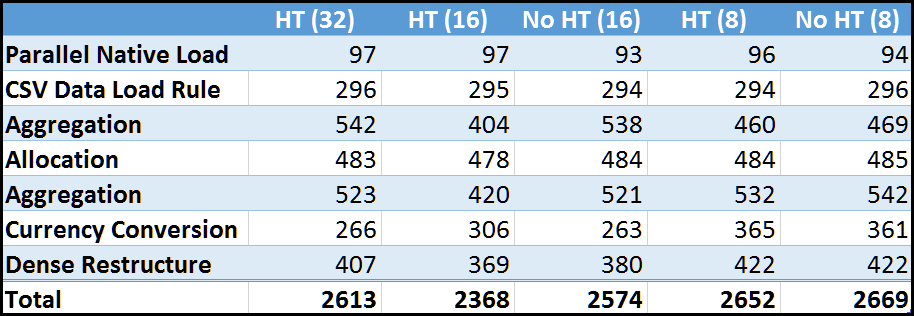

Summarized Results

Let’s look at all of the results in a grid:

And as a graph:

Conclusion

When I started down the path of EssBench and my Essbase Performance Series, I had a set of preconceived notions. Essbase admins should disable Hyper-Threading. Right? Testing on the other hand seems to completely disagree. The evidence is pretty clear that for this application, Hyper-Threading is at worst…a non-factor and at best…a benefit. This means that we no longer have to tell IT to disable Hyper-Threading. More importantly, this means that we don’t have to do something different and give IT a reason to question us.

The other important thing to take away from this…test your application. Try different settings on calculations to see what setting works the best for that calculation. Clearly we shouldn’t just rely on a single setting for CALCPARALLEL. If we wanted the absolute fastest performance, we would leave Hyper-Threading enabled and then use CALCPARALLEL set to 16 for everything but our currency conversion. For currency conversion we can save ourselves 40 seconds (over 10%) of our processing time by setting CALCPARALLEL to 32.

Brian Marshall

July 5, 2017

Background

What on earth is EssBench? First…some background. I have a number of clients who have transitioned to either new physical hardware, have been virtualized, or are being sent to the cloud. All of these options bring along challenges around performance. When you go to new physical hardware, you might end up attached to network storage. When you go to a virtualized environment, performance generally suffers across the board, and when you go to the cloud, any number of things can happen. So what do those clients do?

You really have three options to get IT to help you:

- Option 1: Test your application on your old system and compare it to your new system

- This presents a problem as the old system has a variety of differences, including the version of Essbase most likely

- Option 2: Test your application on an independent system and compare it to your new system

- This can be done, but it sounds expensive to either borrow another company’s instance or have your consultant do this

- Option 3: Test a standardized benchmark application and compare it to other tested configurations

- If only something like this existed, this would be the best option, right?

Introducing EssBench

Well it’s taken me a couple of years to finally prepare it, but EssBench is finally ready for initial release. The goal of EssBench is to provide a standardized Essbase application for benchmarking between environments. The initial release is a basic BSO cube with the following characteristics:

- Dimensions

- Account (1025 members, 838 stored)

- Period (19 members, 14 stored)

- Years (6 members)

- Scenario (3 members)

- Version (4 members)

- Currency (3 members)

- Entity (8767 members, 8709 stored)

- Product (8639 members, 8639 stored)

- Data

- 8 Text files in native Essbase load format

- 1 Text file in comma separated format

In addition to the cube and data, there is a process included for Windows users. I am working on putting together a better way to execute the testing, but this is a good start and lets us start collecting data for comparison purposes. Included in this release is a combination of a PowerShell script and a MaxL script with the following characteristics:

- PowerShell Scripts

- Creates Log File

- Executes MaxL Commands

- MaxL Scripts

- Resets the cube

- Loads data (several rules)

- Aggs the cube

- Executes allocation

- Aggs the allocated data

- Executes currency conversion

- Restructures the database

- Executes the MaxL script three times

Where Do I Get EssBench?

I’ve set up a landing zone and domain for EssBench. You can visit that here. There are three main components to download:

Installation Guide

Essbase Artifacts

Automation and Data Files

What Next?

I’m working now on two items. First, I want to start collecting information so that we can have an actual database of test results. This provides everyone the ability to compare without needing more than their own environment. Next, I’m working on putting together an application that will actually execute the test. It will also eventually collect the results and upload them automatically to a database. Finally, I would like it to also collect system configuration information (CPU, Memory, Storage, etc…). But that will be for another day. In the meantime, go benchmark your environment today!

Brian Marshall

June 30, 2017

As I continue down the path of my Essbase testing and benchmarking, I’m always looking for ways to make Essbase lay waste to hardware. As I was working on my new benchmarking application, I needed to load a lot of data into a BSO cube. I’m impatient and noticed that the data load was terribly inefficient at using the available hardware on my server. Essbase was using a single CPU thread to perform the load. So how can we make this load more intensive on the server and more importantly…faster? Essbase BSO Parallel Data Loads!

I know what you’re thinking, you can’t load data to a BSO in parallel. That only works in ASO, right? Wrong! Now, admittedly the ASO functionality for parallel loads is a lot more flexible, but starting in 11.1.2.2, BSO now has a basic way to perform parallel loads. Before we get to that, let’s take a look at the SQL load that was performed. The data set is roughly 10,000,000 rows. This is the basic MaxL code used:

import database EssBench.EssBench data

connect as hypservice identified by 'mypasswordnotyours'

using server rules_file 'dRev'

on error write to "e:\\data\\EssBench\\dRev.txt";

Now let’s take a look at our resource usage:

Clearly we aren’t making good use of all of those CPUs. And here’s the timing results:

At 333 seconds, that’s not bad. But can we do better? Let’s try this as a text file and see how it compares. I exported my data to a text file and changed up my MaxL to this:

import database EssBench.EssBench

data from data_file "e:\\data\\EssBench\\dRevCogsStats.ascii"

using server rules_file 'dtRev'

on error write to "e:\\data\\EssBench\\dtRev.err";

And let’s look at the resource usage:

That looks familiar. We are still wasting a lot of processing power. And how long did it take?

With a time of 331 seconds, we are looking at a virtual tie with the SQL-based rule. Now let’s see what happens when we break up the file into 16 parts (more on this another day). Here’s the MaxL:

import database EssBench.EssBench using max_threads 16

data from data_file "e:\\data\\EssBench\\dRevCogsStats*.txt"

using server rules_file 'dtRev'

on error write to "e:\\data\\EssBench\\dtRev.err";

We have 16 threads, let’s use them all! We have 16 files, so let’s see what happens:

That’s more like it! We still aren’t using all 16 threads fully, but at least we are using more than one! So how long did it take?

We are sitting at 219 seconds now. This is an improvement of roughly 34%. That’s a pretty nice improvement, but not nearly the improvement we would hope for given that we went from less than 10% CPU utilization to over 70% utilization. Why then did we not get a better improvement? That’s a question for another day.
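For anyone checking the math, the 34% comes from comparing the 219-second parallel load against the 331-second single-file text load. A short Python sketch of the arithmetic, using only the timings quoted above:

```python
def improvement_pct(before_seconds, after_seconds):
    """Percentage of the original run time that was saved."""
    return 100.0 * (before_seconds - after_seconds) / before_seconds


def speedup(before_seconds, after_seconds):
    """How many times faster the new run is."""
    return before_seconds / after_seconds


single_file, parallel = 331, 219  # seconds, from the timings above
print(f"time saved: {improvement_pct(single_file, parallel):.1f}%")
print(f"speedup:    {speedup(single_file, parallel):.2f}x")
```

That works out to a speedup of roughly one and a half times from sixteen threads, which is the gap the closing question is pointing at.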

In general, I found it interesting that the SQL-based load rule and the text-based load rule performed essentially the same. Obviously, the SQL-based load rule would be the faster of the two options given that we don’t have the overhead of first creating the text file. Next time, we’ll take a look at how to split a file using PowerShell.
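Until that PowerShell post, here is one possible way to do the split, sketched in Python rather than PowerShell. It deals lines out round-robin into numbered files whose names match the `dRevCogsStats*.txt` wildcard used in the MaxL above. It assumes every row is a complete, self-contained record with no header row, which may not hold for every export format, and the function name and numbering scheme are illustrative choices.

```python
from pathlib import Path
from typing import List


def split_round_robin(source: Path, parts: int, stem: str, out_dir: Path) -> List[Path]:
    """Deal the lines of `source` into `parts` files named <stem>01.txt, <stem>02.txt, ...

    Assumes each line is an independent record (no header, no values
    carried over from the previous row).
    """
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = [out_dir / f"{stem}{i:02d}.txt" for i in range(1, parts + 1)]
    # newline="" keeps the original line endings untouched.
    handles = [p.open("w", encoding="utf-8", newline="") for p in paths]
    try:
        with source.open("r", encoding="utf-8", newline="") as src:
            for line_number, line in enumerate(src):
                handles[line_number % parts].write(line)
    finally:
        for handle in handles:
            handle.close()
    return paths
```

A call such as `split_round_robin(Path("dRevCogsStats.ascii"), 16, "dRevCogsStats", Path("parts"))` would produce sixteen files of nearly equal size, which keeps the parallel load threads evenly fed.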

Brian Marshall

February 22, 2017

Introduction

Welcome to part five of the Essbase Performance series that will have a lot of parts. Today we’ll pick up where we left off on network storage baselines. Before we get there, here’s a re-cap of the series so far:

Essbase Performance

In case you’ve forgotten, here’s the list of configurations that will be tested:

- Eight (8) Hitachi 7K3000 2TB Hard Drives, four (4) sets of two (2) mirrors

- Eight (8) Hitachi 7K3000 2TB Hard Drives, four (4) sets of two (2) mirrors with an Intel S3700 200GB SLOG

- Eight (8) Hitachi 7K3000 2TB Hard Drives, four (4) sets of two (2) mirrors with sync=disabled (NFS) or sync=always (iSCSI)

- One (1) Intel P3605 1.6TB NVMe SSD

- One (1) Intel P3605 1.6TB NVMe SSD with sync=disabled (NFS) or sync=always (iSCSI)

And the four (4) datasets:

- One (1) dataset to test NFS on the Hard Drive configurations

- One (1) dataset to test iSCSI on the Hard Drive configurations

- One (1) dataset to test NFS on the NVMe configurations

- One (1) dataset to test iSCSI on the NVMe configurations

So what benchmarks were used?

- CrystalDiskMark 5.0.2

- Anvil’s Storage Utilities 1.1.0.337

Benchmarks

And the good stuff that you skipped to anyway…benchmarks!

As with the rest of the series, we’ll continue our flow. We started with CrystalDiskMark and now we’ll move on to Anvil. While Anvil will also provide MB/s metrics, we will focus on just the IO/s. Let’s get started.
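If you want to relate the two metrics, throughput is just operations per second multiplied by the block size. Here is a minimal Python sketch of that conversion; the function name is made up and the number plugged in is an arbitrary example, not one of the benchmark results.

```python
def iops_to_mib_per_s(iops: float, block_size_kib: float = 4.0) -> float:
    """Convert an IOPS figure to MiB/s for a given block size in KiB."""
    return iops * block_size_kib / 1024.0


# Arbitrary example: 10,000 operations per second at a 4K block size.
print(iops_to_mib_per_s(10_000))  # 39.0625
```

This is why a drive can post a huge IOPS number on 4K random tests and still show modest MB/s.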

Anvil Sequential Read

In our read tests everything is pretty well flat. The NFS Hard Drive configuration seems to be lower than everything else, but at a low queue depth, we’ll consider that an outlier for now.

Anvil 4K Random Read

The random performance at a low queue depth is also pretty flat. The iSCSI NVMe device does seem to separate itself here. We’ll see how it does at higher queue depths.

Anvil 4K Random QD4 Read

At a queue depth of four, things are basically flat across the board.

Anvil 4K Random QD16 Read

At higher queue depths, things still stay relatively flat on the read side. Let’s see what happens with writes.

Anvil Sequential Write

As with our CDM results, write performance is a totally different story. Here again we see the three factors that drive performance: synchronous writes, SLOG, and media type. NFS and iSCSI are the inverse of each other by default. NFS forces synchronous writes while iSCSI forces asynchronous writes.

Clearly asynchronous writes win out every time given the fire-and-forget nature. The SLOG does help in a big way. As in our CDM results, the S3700 SLOG still seems to perform better on iSCSI than even the NVMe SSD. Once we get to actual Essbase performance, we’ll see how this holds up.

Anvil 4K Random Write

Random performance follows the same trend as sequential performance in our write tests. At a low queue depth, SSDs get us half-way to asynchronous performance, which is exciting.

Anvil 4K Random QD4 Write

As queue depth increases, the performance differential seems to stay pretty consistent. Asynchronous performance is pulling away just a tad from the rest of the options.

Anvil 4K Random QD16 Write

In our final test, we see that at much higher queue depths, asynchronous really pulls away from everything. iSCSI seems to fare much better with the SLOG than the NVMe drive again.

So…can we get some Essbase benchmarks yet? That will be our next post!

Brian Marshall

October 3, 2016

Introduction

Welcome to part four of a series that will have a lot of parts. In our last two parts we took a look at our test results using the CrystalDiskMark and Anvil synthetic benchmarks. As we said in parts two and three, the idea here is to see first how everything measures up in synthetic benchmarks before we get into the actual benchmarking of Essbase Performance.

Before we get into our network options, here’s a re-cap of the series so far:

Network Storage Options

Today we’ll be changing gears away from local storage and moving into network storage options. As I started putting together this part of the series, I struggled with the sheer number of options available for configuration and testing. I’ve finally boiled it down to the options that make the most sense. At the end of the day, if you are on local physical hardware, you probably have local physical drives. If you are on a virtualized platform, you probably have limited control over your drive configuration.

So with that, I decided to limit my testing to the configuration of the physical data store on the ESXi platform. Now, this doesn’t mean that there aren’t options of course. For the purposes of this series, we will focus on the two most common network storage types: NFS and iSCSI.

NFS

NFS, or Network File System, is an ancient (1984!) means of connecting storage to a network device. This technology has been built into *nix for a very long time and is very stable and widely available. The challenge with NFS for Essbase performance relates to how ESXi handles writes.

ESXi basically treats every single write to an NFS store as a synchronous write. Synchronous writes require a response back from the device that they have completed properly. This is great for security of data, but terrible for write performance. Traditional hard drives are very bad at this. You can read more about this here, but basically this leaves us with a pair of options.
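To get a feel for why waiting on every write hurts so much, here is a small Python sketch. It is only a local-file analogy, not how ESXi, NFS, or ZFS implement anything: it writes the same blocks twice, once letting the operating system buffer them and once calling `os.fsync()` after every write so that each one has to be confirmed before the next begins. The function name, block size, and counts are all made up for illustration.

```python
import os
import tempfile
import time


def timed_writes(path: str, count: int, payload: bytes, sync_each: bool) -> float:
    """Write `count` payloads to `path` and return the elapsed seconds.

    With sync_each=True, every write waits until the operating system
    reports that the data has been pushed to the storage device.
    """
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(count):
            f.write(payload)
            if sync_each:
                f.flush()             # hand Python's buffer to the OS
                os.fsync(f.fileno())  # block until the OS confirms the write
    return time.perf_counter() - start


if __name__ == "__main__":
    block = b"\0" * 4096  # 4K blocks
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "sync_test.bin")
        buffered = timed_writes(target, 200, block, sync_each=False)
        synced = timed_writes(target, 200, block, sync_each=True)
    print(f"buffered: {buffered:.4f}s, fsync after every write: {synced:.4f}s")
```

The gap between the two numbers will vary a lot by drive, which is really the whole point of the tests that follow.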

Add a SLOG

So what on earth is a SLOG? In ZFS, there is the concept of the ZFS Intent Log. This is a temporary location where things go before they are sent to their final place on the storage volume. The ZIL exists on the storage volume, so if you have spinning disks, that’s where the ZIL will be.

The Separate ZFS Intent Log (SLOG for short) allows for a secondary location to be defined for the Intent Log. This means that we can use something fast, like an SSD, to perform this function that spinning disks are quite terrible at. You can read a much better description here.

Turn Sync Off

The second option is far less desirable. You can turn synchronous writes off on the volume altogether. This will make performance very, very fast. The huge downside is that no synchronous writes will ever happen on that volume. This is basically the opposite of how ESXi treats an NFS volume.

iSCSI

iSCSI, or Internet Small Computer System Interface, is a network-based implementation of the classic SCSI interface. You just trade in your physical interface for an IP-based interface. iSCSI is very common and does things a little differently than NFS. First, it doesn’t actually implement synchronous writes. This means that data is always written asynchronously. This is great for performance, but opens up some risk of data loss. ZFS makes life better by making sure the file system is safe, but there is always some risk. Again we have a pair of options.

Turn Sync On

You can force synchronization, but then you will be back to where NFS is from a performance perspective. For an NVMe device, this will perform well, but with spinning disks, we will need to move on to our next option.

Add a SLOG (after turning Sync On)

Once we turn on synchronous writes, we will need to speed up the volume. To do this, we will again add a SLOG. This will allow us to do an apples to apples comparison of NFS and iSCSI in the same configuration.

Essbase Performance

Because all of these things could exist in various environments, I decided to test all of them! Essbase performance can vary greatly based on the storage sub-system, so I decided to go with the following options:

- Eight (8) Hitachi 7K3000 2TB Hard Drives, four (4) sets of two (2) mirrors

- Eight (8) Hitachi 7K3000 2TB Hard Drives, four (4) sets of two (2) mirrors with an Intel S3700 200GB SLOG

- Eight (8) Hitachi 7K3000 2TB Hard Drives, four (4) sets of two (2) mirrors with sync=disabled (NFS) or sync=always (iSCSI)

- One (1) Intel P3605 1.6TB NVMe SSD

- One (1) Intel P3605 1.6TB NVMe SSD with sync=disabled (NFS) or sync=always (iSCSI)

I then created four (4) datasets:

- One (1) dataset to test NFS on the Hard Drive configurations

- One (1) dataset to test iSCSI on the Hard Drive configurations

- One (1) dataset to test NFS on the NVMe configurations

- One (1) dataset to test iSCSI on the NVMe configurations

So what benchmarks were used?

- CrystalDiskMark 5.0.2

- Anvil’s Storage Utilities 1.1.0.337

Benchmarks

And the good stuff that you skipped to anyway…benchmarks!

If you’ve been following the rest of this series, we’ll stick with the original flow. Basically, we will take a look at CrystalDiskMark results first, and then we’ll move over to Anvil in our next part.

CDM Sequential Q32T1 Read

In our read tests we see NFS outpacing iSCSI in every configuration. All of the different configurations don’t really make a difference for reads in these tests.

CDM 4K Random Q32T1 Read

The random performance shows the same basic trend as the sequential test. NFS continues to outpace iSCSI here as well.

CDM Sequential Read

The trend continues with NFS outpacing iSCSI in sequential read tests, even at lower queue depths.

CDM 4K Random Read

The trend gets bucked a bit here at a lower queue depth. iSCSI seems to take the lead here…but don’t let the graph fool you. It’s really immaterial.

CDM Sequential Q32T1 Write

Write performance is a totally different story. Here we see the three factors that drive performance: synchronous writes, SLOG, and media type. NFS and iSCSI are the inverse of each other by default. NFS forces synchronous writes while iSCSI forces asynchronous writes.

Clearly asynchronous writes win out every time given the fire-and-forget nature. The SLOG does help in a big way. I do find it interesting that our S3700 SLOG seems to perform better on iSCSI than even the NVMe SSD. Once we get to actual Essbase performance, we’ll see how this holds up.

CDM 4K Random Q32T1 Write

Random performance follows the same trend as sequential performance in our write tests. iSCSI clearly fares better for random performance so long as an SSD is involved.

CDM Sequential Write

At a lower queue depth, the results follow the trend established in the higher queue depth write tests. There is still an oddity in the SLOG outpacing the NVMe device.

CDM 4K Random Write

In our final test, we see that random performance is just terrible across the board at lower queue depths. Clearly much better with a SLOG or an NVMe device, but as expected, very slow.

That’s it for this post! In our next post, we’ll take a look at the Anvil benchmark results while focusing on I/Os per second. I promise we’ll make it to actual Essbase benchmarks soon!

Brian Marshall

September 23, 2016

Hybrid Essbase is the biggest advancement in Essbase technology since ASO was released. It truly takes Essbase to another level when it comes to getting the best out of both ASO and BSO technology. Converting your application from BSO to Hybrid can be a long process. You have to make sure that all of your calculations still work the way they should. You have to make sure that your users don’t break Hybrid mode. You have to update the storage settings for all of your sparse dimensions.

I can’t help you with the first items, they just take time and effort. What I can help you with is the time required to update your sparse dimensions. I spend a lot of time hacking around in the Planning repository. I suddenly found a new use for all of that time spent with the repository…getting a good list of all of the upper level members in a dimension. If we just export a dimension, we get a good list, but we have to do a lot of work to really figure out which members are parents and which are not. Luckily, the HSP_OBJECT table has a column that tells us just that: HAS_CHILDREN.

Microsoft SQL Server

The query to do this is very, very simple. The process for updating your dimensions using the query takes a little bit more explanation. We’ll start with SQL Server since that happens to be where I’m the most comfortable. I’m going to assume you are using SQL Server Management Studio…because why wouldn’t you? It’s awesome. Before we even get to the query, we first need to make a configuration change. Open Management Studio and click on Tools, then Options.

Expand Query Results, then expand SQL Server, and then click on Results to Grid:

Check the box titled Include column headers when copying or saving the results and click OK. Why did we start here? Because we have to restart Management Studio for the new setting to actually take effect. So do that next…

Now that we have Management Studio ready to go, we can get down to the query. Here it is in all of its simplicity:

SELECT

o.OBJECT_NAME AS Product

,po.OBJECT_NAME AS Parent

,'dynamic calc' AS [Data Storage (Plan1)]

FROM

HSP_OBJECT o

INNER JOIN

HSP_MEMBER m ON m.MEMBER_ID = o.OBJECT_ID

INNER JOIN

HSP_DIMENSION d ON m.DIM_ID = d.DIM_ID

INNER JOIN

HSP_OBJECT do ON do.OBJECT_ID = d.DIM_ID

INNER JOIN

HSP_OBJECT po ON po.OBJECT_ID = o.PARENT_ID

WHERE

do.OBJECT_NAME = 'Product'

AND o.HAS_CHILDREN = 1

We have a few joins and a very simple where clause. As always, I’m using my handy-dandy Vision demo application. A quick look at the results shows us that there are very few parents in the Product dimension:

Now we just need to get this into a format that we can easily import back into Planning. All we have to do is right-click anywhere in the results and click on Save Results As…. Enter a file name and click Save.

Now we should have a usable format for a simple Import to update our dimension settings. Let’s head to workspace and give it a shot. Fire up your Planning application and click on Administration, then Import and Export, and finally Import Metadata from File:

Select your dimension from the list and then browse to find your file. Once the file has uploaded, click the Validate button. This will at least tell us if we have a properly formatted CSV:

That looks like a good start. Let’s go ahead and complete the import and see what happens:

This looks…troubling. One rejected record. Let’s take a look at our logs to see why the record was rejected:

As we can see, nothing to worry about. The top-level member of the dimension is rejected because there is no valid parent. We can ignore this and go check to see if our changes took effect.

At first it looks like we may have failed. But wait! Again, nothing to worry about yet. We didn’t update the default data storage. We only updated Plan1. So let’s look at the data storage property for Plan1:

That’s more like it!

Oracle Database

But wait…I have an Oracle DB for my repository. Not to worry. Let’s check out how to do this with Oracle and SQL Developer. First, let’s take a look at the query:

SELECT

o.OBJECT_NAME AS Product

,po.OBJECT_NAME AS Parent

,'dynamic calc' AS "Data Storage (Plan1)"

FROM

HSP_OBJECT o

INNER JOIN

HSP_MEMBER m ON m.MEMBER_ID = o.OBJECT_ID

INNER JOIN

HSP_DIMENSION d ON m.DIM_ID = d.DIM_ID

INNER JOIN

HSP_OBJECT do ON do.OBJECT_ID = d.DIM_ID

INNER JOIN

HSP_OBJECT po ON po.OBJECT_ID = o.PARENT_ID

WHERE

do.OBJECT_NAME = 'Product'

AND o.HAS_CHILDREN = 1

This is very, very similar to the SQL Server query. The only real difference is the use of double quotes instead of brackets around our third column name. A small, yet important distinction. Let’s again look at the results:

The Oracle results look just like the SQL Server results…which is a good thing. Now we just have to get the results into a usable CSV format for import. This will take a few more steps, but it is still very easy. Right-click on the result set and click Export:

Change the export format to csv, choose a location and file name, and then click Next.

Click Finish and we should have our CSV file ready to go. Let’s fire up our Planning application and click on Administration, then Import and Export, and finally Import Metadata from File:

Select your dimension from the list and then browse to find your file. Once the file has uploaded, click the Validate button. This will at least tell us if we have a properly formatted CSV:

Much like the SQL Server file, this looks like a good start. Let’s go ahead and complete the import and see what happens:

Again, much like SQL Server, we have the same single rejected record. Let’s make sure that the same error message is present:

As we can see, still nothing to worry about. The top-level member of the dimension is rejected because there is no valid parent. We can ignore this and go check to see if our changes took effect.

As with SQL Server, we did not update the default data storage property, only Plan1. So let’s look at the data storage property for Plan1:

And just like that…we have a sparse dimension ready for Hybrid Essbase. Be sure to refresh your database back to Essbase. You can simply enter a different dimension name in the query and follow the same process to update the remaining sparse dimensions.
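If you have several sparse dimensions to get through, the copy-and-paste routine gets old. Here is a rough sketch in Python of how the same query could be parameterized by dimension name and written straight to CSV. To keep it runnable anywhere, it uses an in-memory SQLite database with a tiny, made-up stand-in for the three repository tables (only the columns the query touches), and the member names are invented. The function names and the `plan_type` parameter are my own, and against a real repository you would swap in your own SQL Server or Oracle connection, where the placeholder style may differ by driver.

```python
import csv
import io
import sqlite3

# Same joins as the query above, with the dimension name as a parameter.
QUERY = """
SELECT o.OBJECT_NAME, po.OBJECT_NAME
FROM HSP_OBJECT o
INNER JOIN HSP_MEMBER m ON m.MEMBER_ID = o.OBJECT_ID
INNER JOIN HSP_DIMENSION d ON m.DIM_ID = d.DIM_ID
INNER JOIN HSP_OBJECT dim_obj ON dim_obj.OBJECT_ID = d.DIM_ID
INNER JOIN HSP_OBJECT po ON po.OBJECT_ID = o.PARENT_ID
WHERE dim_obj.OBJECT_NAME = ? AND o.HAS_CHILDREN = 1
ORDER BY o.OBJECT_ID
"""


def parents_to_csv(conn, dimension, plan_type="Plan1"):
    """Return CSV text marking every parent in `dimension` as dynamic calc."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow([dimension, "Parent", f"Data Storage ({plan_type})"])
    for member, parent in conn.execute(QUERY, (dimension,)):
        writer.writerow([member, parent, "dynamic calc"])
    return out.getvalue()


def build_demo_repository():
    """Build a toy stand-in for the repository (NOT the real schema)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE HSP_OBJECT (OBJECT_ID INTEGER, OBJECT_NAME TEXT,
                                 PARENT_ID INTEGER, HAS_CHILDREN INTEGER);
        CREATE TABLE HSP_MEMBER (MEMBER_ID INTEGER, DIM_ID INTEGER);
        CREATE TABLE HSP_DIMENSION (DIM_ID INTEGER);
        INSERT INTO HSP_OBJECT VALUES
            (1, 'Dimensions', NULL, 1),
            (10, 'Product', 1, 1),
            (11, 'Hardware', 10, 1),
            (12, 'Laptops', 11, 0),
            (13, 'Desktops', 11, 0);
        INSERT INTO HSP_MEMBER VALUES (10, 10), (11, 10), (12, 10), (13, 10);
        INSERT INTO HSP_DIMENSION VALUES (10);
    """)
    return conn


if __name__ == "__main__":
    print(parents_to_csv(build_demo_repository(), "Product"))
```

Just like the manual export, the output still includes the top-level member row, so expect the same single rejected record on import.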

Celvin also has an excellent Essbase utility that will do this for you, but it makes use of the API and Java, and generally is a bit more complicated than this method if you have access to the repository. So what happens if you can’t use the API and you can’t access the repository? We have another option. We’ll save that for another day, this blog post is already long enough!

Brian Marshall

August 2, 2016

Welcome to part three of a series that will have a lot of parts. In part two, we took a look at our test results using the CrystalDiskMark synthetic benchmark. Today we’ll be looking at the test results using a synthetic benchmark tool named Anvil. As we said in part two, the idea here is to see first how everything measures up in synthetic benchmarks before we get into the actual benchmarking in Essbase.

Also as discussed in part two, we have three basic local storage options:

- Direct-attached physical storage on a physical server running our operating system and Essbase directly

- Direct-attached physical storage on a virtual server using VT-d technology to pass the storage through directly to the guest operating system as though it was physically connected

- Direct-attached physical storage on a virtual server using the storage as a data store for the virtual host

As we continue with today’s baseline, we still have the following direct-attached physical storage on the bench for testing:

- One (1) Samsung 850 EVO SSD (250GB)

- Attached to an LSI 9210-8i flashed to IT Mode with the P20 firmware

- Windows Driver P20

- Four (4) Samsung 850 EVO SSDs (250GB)

- Attached to an LSI 9265-8i

- Windows Driver 6.11-06.711.06.00

- Configured in RAID 0 with a 256kb strip size

- One (1) Intel 750 NVMe SSD (400GB)

- Attached to a PCIe 3.0 8x Slot

- Firmware 8EV10174

- Windows Driver 1.5.0.1002

- ESXi Driver 1.0e.1.1-1OEM.550.0.0.139187

- Twelve (12) Fujitsu MBA3300RC 15,000 RPM SAS HDD (300GB)

- Attached to an LSI 9265-8i

- Windows Driver 6.11-06.711.06.00

- Configured three ways:

- RAID 1 with a 256kb strip size

- RAID 10 with a 256kb strip size

- RAID 5 with a 256kb strip size

So what benchmarks were used?

- CrystalDiskMark 5.0.2 (see part two)

- Anvil’s Storage Utilities 1.1.0.337

And the good stuff that you skipped to anyway…benchmarks!

Now that we’ve looked at CrystalDiskMark, we’ll take a look at Anvil results. While Anvil results include reads and writes in megabytes per second, we’ll instead focus on Input/Output Operations per Second (IOPS). Here we see that the Intel 750 is keeping pace nicely with the RAID 0 SSD array. In this particular test, even our traditional drives don’t look terrible.

Next up we’ll look at the random IOPS performance. So much for our traditional drives. Here we really see the power of SSDs versus old-school technology. It is interesting that all three solutions hover pretty closely together. But this is likely a queue depth issue.

Let’s see how things look with a queue depth of four. Things are still pretty clustered here, but much higher across the board.

And now for a queue depth of 16. Now this looks better. The Intel 750 has, for the most part, easily outpaced the rest of the options. The RAID 0 SSD array looks pretty good here as well.

That’s it for the read tests. Next we move on to the write tests. We’ll again start with the sequential writes. Before we expand our queue depths, the RAID 0 SSD array is looking like the clear winner.

Our random write test seems to follow closely to our random read test. The Intel 750 is well in the lead with the other SSD options trailing behind. Also of interest, the Intel 750 seems to struggle when physically attached and as a data store in these tests. We’ll see if this continues.

When the queue depth increases to four, we see the Intel 750 continue to hold its lead. The RAID 0 SSD array is still trailing the regular single SSD. As with the previous random test, the Intel 750 continues to struggle, though the physical test has improved.

Finally, we’ll check out the queue depth at 16. It looks like our physical Intel 750 has finally caught up to the passthrough. This feels like an odd benchmark result, so we’ll see how this looks in real Essbase performance. We also finally see that the RAID 0 SSD array has pulled ahead of the single drive by a large margin.

Next up…we’ll start taking a look at the actual Essbase performance for all of these hardware choices. That post is a few weeks away with Kscope rapidly approaching.

Brian Marshall

June 14, 2016

Welcome to part two of a series that will have a lot of parts. In our introduction post, we covered what we plan to do in this series at a high level. In this post, we’ll get a look at some synthetic benchmarks for our various local storage options. The idea here is to see first how everything measures up in benchmarks before we get into the actual benchmarking in Essbase.

As we discussed in our introduction, we have three basic local storage options:

- Direct-attached physical storage on a physical server running our operating system and Essbase directly

- Direct-attached physical storage on a virtual server using VT-d technology to pass the storage through directly to the guest operating system as though it was physically connected

- Direct-attached physical storage on a virtual server using the storage as a data store for the virtual host

For the purposes of today’s baseline, we have the following direct-attached physical storage on the bench for testing:

- One (1) Samsung 850 EVO SSD (250GB)

- Attached to an LSI 9210-8i flashed to IT Mode with the P20 firmware

- Windows Driver P20

- Four (4) Samsung 850 EVO SSDs (250GB)

- Attached to an LSI 9265-8i

- Windows Driver 6.11-06.711.06.00

- Configured in RAID 0 with a 256kb strip size

- One (1) Intel 750 NVMe SSD (400GB)

- Attached to a PCIe 3.0 8x Slot

- Firmware 8EV10174

- Windows Driver 1.5.0.1002

- ESXi Driver 1.0e.1.1-1OEM.550.0.0.139187

- Twelve (12) Fujitsu MBA3300RC 15,000 RPM SAS HDD (300GB)

- Attached to an LSI 9265-8i

- Windows Driver 6.11-06.711.06.00

- Configured three ways:

- RAID 1 with a 256kb strip size

- RAID 10 with a 256kb strip size

- RAID 5 with a 256kb strip size

So what benchmarks were used?

- CrystalDiskMark 5.0.2

- Anvil’s Storage Utilities 1.1.0.337

And the good stuff that you skipped to anyway…benchmarks!

We’ll start by looking at CrystalDiskMark results. The first result is a sequential file transfer with a queue depth of 32 and a single thread. There are two interesting results here. First, our RAID 10 array in passthrough is very slow for some reason. Similarly, the Intel 750 is also slow in passthrough. I’ve not yet been able to determine why this is, but we’ll see how it performs in the real world before we get too concerned. Obviously the NVMe solution wins overall with our RAID 0 SSD finishing closely behind.

Next we’ll look at a normal sequential file transfer. We’ll see here that all of our options struggle with a lower queue depth. Some more than others. Clearly the traditional hard drives are struggling along with the Intel 750. The other SSD options however are much closer in performance. The SSD RAID 0 array is actually the fastest option with these settings.

Next up is a random file transfer with a queue depth of 32 and a single thread. As you can see, on the random side of things the traditional hard drives, even in RAID, struggle. Actually, struggling would probably be a huge improvement over what they actually do. The Intel 750 takes the lead for the physical server, but it actually gets overtaken by the RAID 0 SSD array for both of our virtualized tests.

Our final read option is a normal random transfer. Obviously everything struggles here. A big part of this is just not having enough queue depth to take advantage of the potential of the storage options.
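A back-of-the-napkin way to see why queue depth matters: if each request takes a fixed amount of time, the most operations you can complete per second is the number of requests in flight divided by that time. Here is a minimal Python sketch of that idea. The 100 microsecond latency is a made-up number, not something I measured, and real devices do not hold latency constant as the queue fills, so treat this as an upper bound rather than a prediction.

```python
def max_iops(queue_depth: int, latency_us: float) -> float:
    """Upper bound on IOPS with `queue_depth` requests in flight,
    assuming every request takes exactly `latency_us` microseconds."""
    return queue_depth * 1_000_000 / latency_us


# Hypothetical device with a flat 100 microsecond latency.
for qd in (1, 4, 16, 32):
    print(f"QD{qd}: up to {max_iops(qd, 100):,.0f} IOPS")
```

At a queue depth of one, that hypothetical device tops out at 10,000 IOPS no matter how fast it could go with more work queued up.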

Next we will take a look at the CrystalDiskMark write tests. As with the read, we’ll start with a sequential file transfer using a queue depth of 32 and a single thread. Here we see that the RAID 0 SSD array takes a commanding lead. The Intel 750 is still plenty fast, and then the single SSD rounds out the top three. Meanwhile, the traditional disks are still there…spinning.

Let’s look at a normal sequential file transfer. For writes, our traditional drives clearly prefer lower queue depths. This can be good or bad for Essbase, so we’ll see how things look when we get to the real-world benchmarks. In general, our top three mostly hold with the RAID 0 traditional array pulling into third in some instances.

On to the random writes. We’ll start off the random writes with a queue depth of 32 and a single thread. As with all random operations, the traditional disks get hammered. Meanwhile, the Intel 750 has caught back up to the RAID 0 SSD array, but is still back in second place.

And for our final CrystalDiskMark test, we’ll look at the normal random writes. Here the Intel 750 takes a commanding lead while the RAID 0 SSD array and the single SSD look about the same. Again, more queue depth helps a lot on these tests.

In the interest of making my blog posts a more reasonable length, that’s it for today. Part three of the series will be more baseline benchmarks with a different tool to measure IOPS (I/Os per second), another important statistic for Essbase. Then hopefully, by part four, you will get to see some real benchmarks…with Essbase!

Part five teaser:

Brian Marshall

June 11, 2016

Welcome to the first in a likely never-ending series about Essbase performance. To be specific, this series will be designed to help understand how the choices we make in Essbase hardware selection affect Essbase performance. We will attempt to answer questions like Hyper-Threading or not, SSDs or SAN, Physical or Virtual. Some of these things we can control, some of them we can’t. The other benefit of this series will be the ability to justify changes in your organization and environment. If you have performance issues and IT wants to know how to fix it, you will have hard facts to give them.

As I started down the path of preparing this series, I wondered why there was so little information on the internet in the way of Essbase benchmarks. I knew that part of this was that every application is different and has significantly different performance characteristics. But as I began to build out my supporting environment, I realized something else. This is a time-consuming and very expensive process. For instance, comparing Physical to Virtual requires hardware that is dedicated to the purpose of benchmarking. That isn’t something you find at many, if any, clients.

As luck would have it, I have been able to put together a lab that allows me the ability to do all of these things. I have a dedicated server for the purpose of Essbase benchmarking. This server will go back and forth between physical and virtual and various combinations of the two. Before we get into the specifics of the hardware that we’ll be using, let’s talk about what we hope to accomplish from a benchmarking perspective.

There are two main areas that we care about that relate to Essbase performance. First, we have the back-end performance of Essbase calculations. When I run an agg or a complex calculation, how long does it take? Second, we have the front-end performance of Essbase retrieves and calculations. This is a combination of how long end-user queries take to execute and how long user-executed calculations take to complete. So what will we be testing?

Storage Impact on Back-End Essbase Calculations

We’ll take a look at the impact our storage options have on Essbase calculation performance. Storage is our slowest bottleneck, so we’ll start here to find the fastest solution that we can use for the next set of benchmarks. We’ll compare each of our available storage types three ways: a physical Essbase server, a virtual Essbase server using VT-d and direct attached storage, and a virtual Essbase server using data stores. Here are the storage options we’ll have to work with:

- Samsung 850 EVO SSD (250GB) on an LSI 9210-8i

- Four (4) Samsung 850 EVO SSDs in RAID 0 on an LSI 9265-8i (250GB x 4)

- Intel 750 NVMe SSD (400GB)

- Twelve (12) Fujitsu MBA3300RC 15,000 RPM SAS HDD (300GB x 12) in RAID 1, 1+0, and RAID 5

CPU Impact on Back-End Essbase Calculations

Once we have determined our fastest storage option, we can turn our attention to our processors. The main thing that we can change as application owners is the Hyper-Threading setting. Modern Intel processors found in virtually all Essbase clients support it, but conventional wisdom tells us that this doesn’t work out very well for Essbase. I would like to know what the cost of this setting is and how we can best work around it. ESXi (by far the most common hypervisor) even gives us some flexibility with this setting.

Storage Impact on Front-End Essbase Query Performance

This one is a little more difficult. Back-End calculations are easy to benchmark. You make a change, you run the calculation, you check the time it took to execute. Easy. Front-End performance requires user interaction, and consistent user interaction at that. So how will we do this? I can neither afford LoadRunner, nor have the time to attempt to learn this complex tool. Again, as luck would have it, we have another option. Our good friends at Accelatis have graciously offered to allow us to use their software to perform consistent front-end benchmarks.

Accelatis has an impressive suite of performance testing products that will allow us to test specific user counts and get query response times so that we can really understand the impact of end-user performance. I’m very excited to be working with Accelatis.

CPU Impact on Front-End Essbase Query Performance

This is an area where we can start to see more about our processors. Beyond just Hyper-Threading, which we will still test, we can look at how Essbase is threading across processors and what impact we can have on that. Again, Accelatis will be key here as we start to understand how we really scale Essbase.

So what does the physical server look like that we are using to do all of this? Here are the specs:

You can see specs of the full lab supporting all of the testing here. And now, because I promised benchmarks, here are a few to start with:

Physical Server, Samsung 850 EVO x 4 in RAID 0 on an LSI 9265-8i

Physical Server, Intel 750 NVMe SSD

Well…that’s fast. In our next post in the series, we’ll look at benchmarking all of our baseline storage performance for Physical, Virtual with VT-d, and Virtual with Data stores. This will be our baseline for the next post after that about actual Essbase performance. In the meantime, I’ll also be working towards getting access to some fast network storage to test that against all of our direct and virtual options. For now, let’s try out a graph and see how it looks:

CrystalDiskMark 5.0.2 Read Comparison:

CrystalDiskMark 5.0.2 Write Comparison:

Brian Marshall

May 23, 2016
